The venue

Despite being held at the new three-story Moscone West building, this year's 55th DAC in San Francisco bore many similarities to last year's. The big two, Synopsys and Cadence, had similar booth decor and floorplan positioning: across from each other and right next to the first-floor entrance, although this time Synopsys had a partner-presentation screen as large as Cadence's. The same magician in a purple dress and the (Penn &) Teller look-alike comedian returned to two of the vendor booths; the end-of-day poster sessions again competed with mouth-watering hors d'oeuvres; and vendor giveaways lured DAC attendees in the hope that they would also carry away the takeaways from partners' 10-minute flash presentations. Likewise, many vendors returned, replaying their product offerings with incremental updates.

Unique and new things at this year's DAC

Although conversations with many attendees suggested a rather subdued mood on the exhibit floor, the sheer number of technical papers and closed-door sessions still reflected a surge of enthusiasm for bringing AI, ML and cloud into the EDA space. Key EDA players shared their ongoing tool benchmarking and rolled out the results of their collaborations (such as Synopsys Fusion integration with Ansys' RedHawk, Mentor Calibre-RTD with major P&R tools, and Aldec with its co-simulations, among others).

At this DAC, two seasoned Wall Street EDA/semiconductor analysts (Richard Valera from Needham and Jay Vleeschhouwer from Griffin Securities) shared their EDA/semiconductor outlooks at Sunday's DAC kick-off reception and at Tuesday's DAC Pavilion session, respectively. They agreed that EDA is growing, albeit at around mid-single digits, amid further consolidation of players. Jay also noted that more cloud players are developing their own silicon, not only software.

There was a Design Infrastructure Alley designated for cloud providers, along with a Design-on-Cloud pavilion, to showcase their EDA-on-cloud collaborations. Of the big three, Cadence offers the most comprehensive cloud-enabled solutions, including different engagement models, while Mentor embraced the cloud by offering emulation capabilities on AWS. Despite good traction in addressing cloud-related technical aspects such as security and scalability, I believe cloud adoption is still at the exploration stage. Beyond the ideal of providing capacity at peak demand, the business model is still evolving, as it is quite a challenge to unwind or align major customers' existing on-premise capacity with a cloud expansion. On the other hand, it is good news for smaller design houses: the cloud lowers their barrier of entry, and they may be more ready to embrace a metric-based usage model.

IP is getting more attention

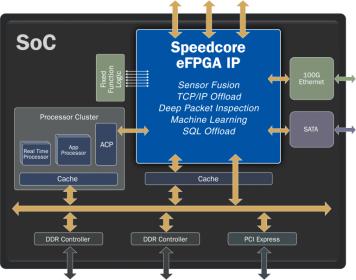

Jay's observation that IP growth is resuming resonated with a number of talks given during DAC. IP has its own ecosystem, encompassing a swath of enablers, from small-footprint IP providers such as Silicon Creations with its PLLs to verification IP from Synopsys.

In the core IP segment, RISC-V made a return appearance, with adopters showcased across a number of talks. Krste Asanovic, Chairman of the RISC-V Foundation and a SiFive co-founder, gave the Skytalk two years ago at DAC in Austin, and this year it was Dave Patterson's turn to make the pitch. "Why open source compilers and operating systems but not ISAs?" Dave asked. "…The thirst for open architecture that everybody could use and they look all over for the instruction sets and stumbled into ours and liked it and started using it without telling us about it…" Patterson said of his encounters with early RISC-V adopters a few years back. Realizing that thirst for an open architecture prompted him and a few others to make it happen by building an ecosystem through the RISC-V Foundation.

Synopsys' Aart de Geus stated at SNUG Silicon Valley this year that the action is happening at the interface, and in a computer system the most important interface is the Instruction Set Architecture (ISA), as it connects software to hardware. Forming the RISC-V Foundation was also meant to ensure the ISA stays open. According to Rick O'Connor, its executive director, "…so the technology is not controlled by a single company or entity." He mentioned that using the ISA requires neither membership nor a license; only when it is used in a non-open-source device or a commercial product is a RISC-V trademark license required.

While RISC-V is a good addition to the core IP choices (MIPS is now owned by Wave Computing) and Dave showed its anticipated volume ramp, it is still too early for it to make a significant impact on the existing IP-core ecosystem, which is currently dominated by x86- and ARM-based architectures. Which one will lead? That involves a bit of reading tea leaves, since it is impossible to predict which will dominate IoT and automotive applications.

But ARM has also recently realigned its organization to serve these two market segments. I asked ARM's John Ronco, VP & GM of the Embedded & Auto Line of Business, about the appeal of owning and customizing the RISC-V instruction set, for example to address security-related needs. He is not worried, as adopters only gain control of the ISA; they still need to design the CPU.

Furthermore, he said: "My view on that, vast majority of cases you don't want to customize the instruction sets. There are two reasons…firstly, actually if you do that you'll break the software ecosystem. One of the huge benefit of ARM is that you've got these vast network of tools/software companies that work on ARM platform…" Second, he believes adopters will be confined to incremental customizations that offer no performance benefit, since they must avoid deviating from the fundamental architecture.

ML and AI

At the conference, EDA providers shared further progress in embedding ML into their solutions. Synopsys reported incremental QoR gains and significant cycle-time savings from deploying ML along with three of its four Fusion interfaces (design, signoff and ECO), while Cadence announced ML augmentation of its characterization tool, enabling smart interpolation of points and identification of critical corners that significantly reduces overall timing library generation time. Likewise, Silvaco's ML augmentation of its behavioral Verilog-A SPICE model generation and characterization tools has reduced both the required input collateral and the overall runtime.

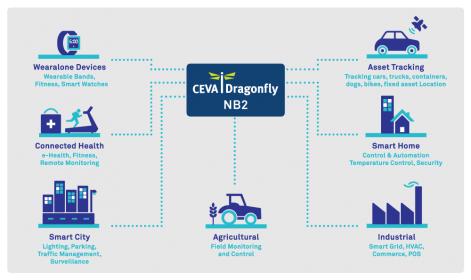

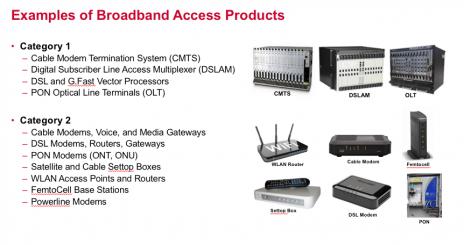

As our electronics industry ventures into IoT and automotive while sustaining efforts toward the upcoming mobile migration to 5G networks, many smaller solution providers at the exhibit showed either niche point tools or flexible design services. Smaller product form factors, focused functionality, IP availability and now cloud enablement may have enticed more participants into the fray. Let's hope it will be more vibrant in Vegas next year!