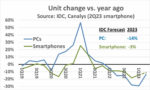

The current slump in the electronics market began in 2021. Smartphone shipments versus a year earlier turned negative in 3Q 2021. The 2020 smartphone declines were primarily due to COVID-19-related production cutbacks; the current decline is due to weak demand. According to IDC, smartphone shipments were 269 million units in 1Q 2023, the lowest level in almost ten years, since 262 million units were shipped in 3Q 2013. In its June forecast, IDC projected that smartphone unit shipments would drop 3% in 2023. However, we may have reached the bottom of the decline. IDC estimated that 1Q 2023 smartphone shipments were down 14.5% from a year earlier, following an 18.3% year-to-year decline in 4Q 2022. IDC's 2Q 2023 estimates are not yet available, but Canalys puts 2Q 2023 smartphone shipments down 11% from a year ago. This narrowing of the decline could signify the bottom of the downturn and the beginning of a recovery. Smartphones could show positive year-to-year growth by 4Q 2023.

PCs experienced a COVID-19-related boom in 2020 after years of flat to declining shipments. After peaking at 57% in 1Q 2021, PC shipment year-to-year change has steadily declined, hitting a low of minus 29% in 1Q 2023. IDC's estimate of 56.9 million PCs shipped in 1Q 2023 is the lowest since 54.1 million PCs were shipped in 1Q 2020. IDC's 2Q 2023 estimates indicate the beginning of a recovery, with PCs down 13% from a year ago versus down 29% in 1Q 2023. 2Q 2023 PC shipments were up 8.3% from 1Q 2023, the strongest quarter-to-quarter growth since 10% in 4Q 2020. IDC's June forecast called for a 14% decline in PC shipments in 2023. To meet that forecast, second-half 2023 shipments would need to be 12% higher than first-half shipments. This scenario seems reasonable, since it would require only about 5% to 6% quarter-to-quarter growth in each of 3Q and 4Q 2023. PCs could return to positive year-to-year change by 4Q 2023 or 1Q 2024.
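The quarter-to-quarter figure can be checked with a little arithmetic. The minimal sketch below uses only numbers quoted in the paragraph above (56.9 million units in 1Q 2023, 8.3% growth in 2Q, and the 12% second-half increase) and assumes, as a simplification, that 3Q and 4Q grow at the same quarter-to-quarter rate g. It solves 2Q × (1 + g) + 2Q × (1 + g)² = 1.12 × (1Q + 2Q) for g. The function name is illustrative, not part of any IDC methodology.

```python
import math

# Inputs quoted in the text (IDC estimates); units are millions of PCs.
Q1_2023 = 56.9      # 1Q 2023 shipments
QOQ_2Q = 0.083      # 2Q 2023 growth over 1Q 2023
H2_OVER_H1 = 0.12   # second-half growth needed to meet IDC's 14% full-year decline


def required_quarterly_growth(q1: float, qoq_q2: float, h2_over_h1: float) -> float:
    """Return the constant quarter-to-quarter growth rate g for 3Q and 4Q.

    Simplifying assumption: 3Q = 2Q * (1 + g) and 4Q = 2Q * (1 + g)**2,
    subject to 3Q + 4Q = (1 + h2_over_h1) * (1Q + 2Q).
    """
    q2 = q1 * (1 + qoq_q2)
    h2_target = (1 + h2_over_h1) * (q1 + q2)
    # With x = 1 + g: x**2 + x - h2_target / q2 = 0; take the positive root.
    c = h2_target / q2
    x = (-1 + math.sqrt(1 + 4 * c)) / 2
    return x - 1


g = required_quarterly_growth(Q1_2023, QOQ_2Q, H2_OVER_H1)
print(f"Required quarter-to-quarter growth in 3Q and 4Q: {g:.1%}")
```

With these inputs the sketch prints a required growth of about 5.1% per quarter, at the low end of the 5% to 6% range. Because the target is defined relative to the first half, the result depends only on the two growth rates, not on the 1Q level.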

China is the world's largest producer of electronics – including TVs, mobile phones and PCs. China's production data shows signs of improvement. For May 2023, three-month-average unit production versus a year ago shows color TVs up 8%, after negative change in the first quarter of 2023. Mobile phones were down 3.3%, an improvement from double-digit declines in January and February 2023. PCs were still weak with a 17% decline, but improved from declines of more than 20% earlier in 2023. Total China electronics production in local currency (yuan) was positive in May, with a 1.0% three-month-average change versus a year ago. The change had been negative in each of the first three months of 2023.
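Most production figures in this article are three-month-average changes versus a year ago. The minimal sketch below assumes the common definition of that metric: the average of the latest three months divided by the average of the same three months a year earlier, minus one. The function name and the toy series are hypothetical, not actual China data, and the series is assumed to hold consecutive monthly values with no gaps.

```python
from statistics import mean


def three_month_avg_change(series: list[float], month: int) -> float:
    """Change in the 3-month average ending at index `month` versus the
    3-month average ending 12 months earlier (consecutive monthly data)."""
    if month < 14 or month >= len(series):
        raise ValueError("need 15 consecutive months ending at `month`")
    current = mean(series[month - 2 : month + 1])    # latest three months
    year_ago = mean(series[month - 14 : month - 11])  # same three months a year earlier
    return current / year_ago - 1


# Hypothetical monthly production values, for illustration only.
toy = [90, 100, 110] + [100] * 9 + [95, 105, 115]
print(f"{three_month_avg_change(toy, 14):.1%}")  # 5.0%
```

Averaging three months can damp month-to-month swings, such as those from holidays that fall in different months from year to year, which makes turning points like the ones described here easier to see.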

Electronics production data for other significant Asian countries shows differing trends. Japan bounced back from a weak 2022, with three-month-average change versus a year ago of 8.4% in April 2023. In contrast, Taiwan experienced strong growth in 2022, but growth slowed to 5.1% in April 2023. Vietnam also showed strong growth in 2022 but turned negative in February 2023. Vietnam was down 10.8% in May 2023, but improved slightly to a 7.8% decline in June. South Korea electronics production has been volatile, with double-digit growth in late 2022 followed by a 12.4% decline in May 2023.

Electronics production growth in the 27 nations of the European Union (EU 27) and in the United States has decelerated over the last several months. U.S. three-month-average change versus a year ago peaked at 8.1% in November 2022 and has slowed each month since, reaching 2.8% in April 2023. EU 27 production growth peaked at 22% in October 2022 and has since slowed to 4.2% in April 2023. UK production growth is down from its peak of 17% in October 2022, but has held in the 8% to 11% range for the first five months of 2023.

A near-term turnaround in the electronics markets is far from certain. Global economies are expected to be generally weak in the second half of 2023. Trading Economics projects that U.S. GDP growth will slow from 2.0% in 1Q 2023 and 1.9% in 2Q to a 0.1% decline in 3Q, before bouncing back to a modest 0.6% growth in 4Q. The Euro area and the UK are expected to have relatively low GDP growth in the second half of 2023, ranging from 0.1% to 0.4%. China is forecast to have lower GDP growth in the second half of 2023 than in the first half. Japan's GDP growth in the second half of 2023 should be slightly lower than in the first half.

The good news is we have probably reached the low point in the electronics downturn. However, the recovery could be slow. A significant return to growth may not happen before 2024.

Semiconductor Intelligence is a consulting firm providing market analysis, market insights and company analysis for anyone involved in the semiconductor industry – manufacturers, designers, foundries, suppliers, users or investors. Please contact me if you would like further information.

Bill Jewell

Semiconductor Intelligence, LLC

billjewell@sc-iq.com
