Artificial intelligence (AI) is transforming every layer of computing, from hyperscale data centers training trillion-parameter models to battery-powered edge devices performing real-time inference. Hardware requirements are escalating on every front: compute density is increasing, power budgets are tightening, and new algorithms arrive faster than traditional chip roadmaps can adapt.

The first wave of AI hardware was built on proprietary instruction sets and closed ecosystems. Those approaches are now straining under the pace of change. Designers need a way to innovate without constraints, to add custom acceleration where workloads demand it, and to build systems that scale from edge to cloud under a single software umbrella.

This is where RISC-V—an open, modular instruction set architecture—has moved from a promising alternative to a market imperative. The sections below show why the market is pivoting toward RISC-V, how it empowers next-generation AI chip designers, how AI-system builders can take advantage of it, and how companies like Akeana are converting opportunity into real silicon.

Why the Market Wants RISC-V

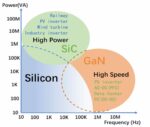

Global AI-processor revenues are expected to exceed $260 billion this year, but the diversity of AI workloads and the drive for energy efficiency expose the limits of fixed instruction sets such as x86 and Arm. RISC-V’s openness allows designers to integrate custom instructions—whether for matrix operations, tensor accelerators, or compute-in-memory features—without waiting for a single vendor to approve the roadmap.

RISC-V also offers a broader choice of IP suppliers, removing the dependence on one vendor or a small group of vendors that is common with Arm or x86. For example, if a heterogeneous compute system calls for a multi-threaded processor and the incumbent supplier does not offer one, there may be no alternative source.

Governments and enterprises are drawn to the freedom from restrictive licensing. Hyperscalers and semiconductor companies see the chance to fine-tune performance per watt for a rapidly widening range of AI tasks. Together these factors create strong demand for hardware that is open, customizable, and future-proof.

How RISC-V Enables Next-Gen AI Chip Designers

For chip architects and product teams, RISC-V offers a blank canvas for differentiation. Its extensible ISA supports wide-vector units, matrix engines, and other domain-specific accelerators, all integrated within a coherent hardware–software stack.

Because the ecosystem is open and fast-moving, software support can keep pace with silicon. Open-source compilers, optimized libraries, and the ratified vector extension are available today, and matrix extensions are being developed within the RISC-V community. Designers can focus on compute density, power efficiency, and time to market without sacrificing compatibility with industry-standard development tools.

How AI-System Builders Can Leverage RISC-V and Chip Designer Innovation

AI-system builders—whether they are assembling servers, edge devices, or specialized appliances—require platforms that merge heterogeneous compute resources without creating software silos. RISC-V’s unified ISA makes it possible to combine general-purpose CPUs with vector engines, general matrix-multiply (GEMM) accelerators, and customer-specific xPUs under a single software target.

This reduces integration complexity and helps keep software behavior consistent across edge and data-center deployments. System architects can scale performance, tune energy efficiency, and incorporate custom accelerators while maintaining one toolchain and development flow, shortening the path from concept to production.
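As a rough illustration of what a single software target can look like from the application side, the sketch below routes one GEMM call to whichever backend a platform reports as available. Everything in it is hypothetical: the backend names, the `register_backend` and `gemm` helpers, and the preference order are assumptions made for illustration, not Akeana or RISC-V APIs. The "vector" backend is simulated in plain Python and does not run on real vector hardware.

```python
from typing import Callable, Dict, List, Sequence

Matrix = List[List[float]]
GemmFn = Callable[[Matrix, Matrix], Matrix]

# Hypothetical registry mapping a backend name to a GEMM implementation.
_BACKENDS: Dict[str, GemmFn] = {}


def register_backend(name: str, fn: GemmFn) -> None:
    """Make a GEMM implementation selectable by name."""
    _BACKENDS[name] = fn


def _check_shapes(a: Matrix, b: Matrix) -> None:
    """Reject operands whose inner dimensions do not match."""
    if any(len(row) != len(b) for row in a):
        raise ValueError("inner dimensions do not match")


def gemm_scalar(a: Matrix, b: Matrix) -> Matrix:
    """Reference triple-loop GEMM, standing in for general-purpose CPU code."""
    _check_shapes(a, b)
    rows, inner, cols = len(a), len(b), len(b[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for k in range(inner):
            a_ik = a[i][k]
            for j in range(cols):
                out[i][j] += a_ik * b[k][j]
    return out


def gemm_row_at_a_time(a: Matrix, b: Matrix) -> Matrix:
    """Stand-in for a vector-unit kernel: computes each output row as a unit."""
    _check_shapes(a, b)
    b_columns = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in b_columns]
            for row in a]


def gemm(a: Matrix, b: Matrix, available: Sequence[str],
         preferred: Sequence[str] = ("matrix", "vector", "scalar")) -> Matrix:
    """Run GEMM on the most preferred backend that is registered and available."""
    for name in preferred:
        if name in available and name in _BACKENDS:
            return _BACKENDS[name](a, b)
    raise RuntimeError("no GEMM backend available")


register_backend("scalar", gemm_scalar)
register_backend("vector", gemm_row_at_a_time)

a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]

# The same call serves an edge part with only a scalar core
# and a server part that also exposes a vector unit.
edge_result = gemm(a, b, available=["scalar"])
server_result = gemm(a, b, available=["scalar", "vector"])
```

The calling code is identical in both cases; only the `available` list changes, which is the property the paragraph above attributes to a unified software target.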

A Unique Window of Opportunity

The push for open, customizable AI hardware coincides with a moment when the RISC-V supply chain has reached maturity. Proven IP blocks, hardened design flows, and robust software stacks allow both chip vendors and system builders to move quickly from design to deployment.

Those who act now can capture customers looking for sovereignty and specialization while delivering next-generation AI performance that closed architectures may struggle to match. The alignment of market pull and technology readiness creates a rare but powerful window of opportunity.

What Akeana Offers

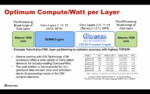

Akeana stands at the forefront of this movement with a full-stack portfolio of RISC-V–based IP and subsystems purpose-built for AI. In its recent webinar, “Leveraging RISC-V as a Unified Heterogeneous Hardware and Software Platform for Next-Gen AI Chips,” Akeana engineers described how SoC developers can meet aggressive compute-per-watt goals and heterogeneous system requirements using the company’s technology.

Akeana provides RVA23-compatible high-performance RISC-V processors, advanced vector and matrix accelerators, and powerful data-movement engines. Its flexible compute-subsystem interconnect links multi-core CPUs, partner accelerators, and custom hardware blocks into a seamless architecture. The company is uniquely able to deliver RVA23-compatible in-order and out-of-order cores with simultaneous multi-threading (SMT). It also delivers a mature software environment, including optimized compilers, AI performance libraries, and robust simulation tools, so developers can bring up operating systems and AI frameworks immediately.

For chip designers, these offerings shorten the path from concept to production while allowing fine-grained performance tuning. For AI-system builders, they provide ready-to-integrate compute subsystems that simplify scaling and reduce power consumption. Akeana’s technology enables customers to innovate aggressively while meeting stringent efficiency and time-to-market targets.

Summary

The AI industry is entering a new growth phase, and RISC-V is the architecture of choice for flexibility, efficiency, and long-term control. Chip designers gain freedom to create custom instructions and accelerators, while AI-system builders gain a unified platform for heterogeneous computing.

Akeana sits at the center of this transformation. By supplying RISC-V IP cores, powerful compute subsystems, and a complete software stack, the company helps both chip creators and system integrators capture the opportunity that today’s market demands. The combination of surging demand and a mature supply chain is compelling, and Akeana is ready to help partners seize it.

See Akeana at RISC-V Summit 2025

To experience Akeana’s innovations firsthand, visit the company at Booth #P2 at the RISC-V Summit in Santa Clara, October 22–23, 2025. There you can explore live demonstrations of RVA23 cores that achieve a SPEC2K6/GHz score above 20, in-order and out-of-order SMT cores, and a highly efficient data-movement engine for sparse data, and speak directly with the team about accelerating your own AI hardware and system designs.

Learn more at www.akeana.com
