IP-SoC 2011 is the 20th anniversary of the first conference completely dedicated to IP. The IP market is a small world, just as EDA is a small market if you look at the revenue generated… but both are essential building blocks for the semiconductor industry. It was not clear back in 1995 that IP would become essential: at that time, the IP concept was devalued by some products exhibiting poor quality and inefficient technical support, leading program managers to be very cautious about simply deciding to buy. Making was sometimes more efficient… In the meantime, the market has been cleaned up: the poor-quality suppliers have disappeared (bankrupt or sold for their assets) and the remaining IP vendors have learned the lesson. No renowned vendor marketing a protocol-based (digital) function would take the chance of launching a product that has not passed an extensive verification program, and the vendors of mixed-signal IP functions know that the “Day of Judgment” will come when the silicon prototypes are validated. This leaves very little room for low-quality products, even if you may still find some newcomers deliberately launching a poor-quality RTL function, naively thinking that lowering the development cost will allow them to sell at a low price and buy market share, or some respected analog IP vendor failing to deliver an “at spec” function, just because… analog is analog, and sometimes closer to black magic than to science!

If you don’t trust me, just look at products like application processors for wireless handsets or for set-top boxes: these chips are 80% made of reused functions, whether internal or coming from an IP vendor. This literally means that several dozen functions, digital or mixed-signal, are IP. Should only one of these fail, a $50+ million SoC development will miss its market window. That said, will the IP concept, as it stands today in 2011, be enough to support the “More than Moore” trend? In other words, if IP in the 2000s and 2010s is what the standard cell was in the 1980s and 1990s, what will be the IP of the 2020s? You will find people addressing this question at the IP-SoC conference! Just have a look at the program, which includes presentations such as:

……

“The past and the next 20 years? Scalable computing as a key evolution”

…..

“IP’s 20 year evolution – adaptation or extinction”

…..

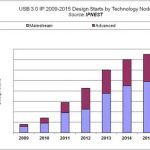

“Interface IP Market Birth, Evolution and Consolidation, from 1995 to 2015. And further?”

……

Obviously, you first have to look at the past to be able to forecast the future, but the latter is the most important reason to attend the conference. Just as we moved from transistor-based design to standard-cell-based design, and then from standard cells to IP, we will have to invent the next move.

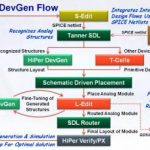

So, the interesting question will be where the IP industry stands on the spectrum that starts with a single IP function and ends with a complete system. Nobody would claim that we have reached the upper end of the spectrum and that you can source a complete system from an IP vendor. The death of EDA360 is a clear illustration of this. Maybe this is because the semiconductor industry is not ready to source a complete IP system (what would be the added value of the fabless companies if and when this happens?), and most certainly because the IP vendors are far from being able to deliver one (it would require a strong understanding of a specific application and market segment, the associated technical know-how and, even more difficult to meet, adequate funding to support up-front development while accepting the risk of missing the target…). This is why an intermediate step may be to offer IP subsystems. According to D&R, who organize IP-SoC, the IP market is already there: “Over the year IPs have become Subsystems or Platforms and thus as a natural applicative extension IP-SoC will definitively include a strong Embedded Systems track addressing a continuous technical spectrum from IP to SoC to Embedded System.” So IP-SoC 2011 will no longer be only IP-centric, but IP-subsystem-centric!

It will be interesting to hear the different definitions of what exactly an IP subsystem is. If I offer a PCI Express controller with an AMBA AXI application interface, may I call it a subsystem? I don’t think so either! But is it enough to add another IP function (as Snowbush does, for example, by offering PCI Express plus SATA) to call it a subsystem? Or should I consider the application first, and pick (or design) the different functions needed to support this specific application? Then, how do I market the CPU, the memories and probably other IP that belong to my competitors? The answer is far from trivial, and this will make the next IP-SoC conference worth attending! You probably should not expect to come back home with a 100% definite answer (if anybody knows the solution, he should start a company a.s.a.p.), but you will have the chance to share the experience of people who have explored different tracks, and to learn from them.

If you plan to attend, just register here, and send me a note (eric.esteve@ip-nest.com); it will be a pleasure to meet you there!

By Eric Esteve from IPnest