Over the last couple of decades, the electronics communications industry has been, and continues to be, a significant driver of growth in the FPGA market. A major reason is the many high-speed interfaces built into FPGAs to support a variety of communications standards and protocols. The underlying input-output… Read More

Tag: speedster7t

Integrated 2D NoC vs a Soft Implemented 2D NoC

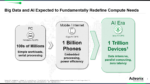

We are living in the age of big data, and the future is going to be even more data-centric. Today’s major market drivers all have one thing in common: efficient management of data. Whether it is 5G, hyperscale computing, artificial intelligence, autonomous vehicles, or IoT, there is data creation, processing, transmission, and … Read More

PCIe Gen5 Interface Demo Running on a Speedster7t FPGA

Today’s major market drivers all have one thing in common: the efficient management of data. Whether it is 5G, hyperscale computing, artificial intelligence, autonomous vehicles or IoT, there is data creation, processing, transmission and storage. All of these aspects of data management need to happen very fast.… Read More

Take the Achronix Speedster7t FPGA for a Test Drive in the Lab

Achronix is known for its high-performance FPGA solutions. In this post, I’ll explore the Speedster7t FPGA. This FPGA family is optimized for high-bandwidth workloads and eliminates performance bottlenecks with an innovative architecture. Built on TSMC’s 7nm FinFET process, the family delivers ASIC-level performance … Read More

An FPGA-Based Solution for a Graph Neural Network (GNN) Accelerator

Earlier this year, Achronix announced that it was shipping the industry’s highest performance Speedster7t FPGA devices. The press release included a lot of details about the architecture and features of the device and how that family of devices is well suited to satisfy the demands of the artificial intelligence … Read More

Data Orchestration Hardware Unlocks the Full Potential of AI

We all know that artificial intelligence (AI) and machine learning (ML) are fundamentally changing the world. From the smart devices that gather data to the hyperscale data centers that analyze it, the impact of AI/ML can be felt almost everywhere. It is also well known that hardware accelerators have opened the door to real-time… Read More

Achronix Next-Gen FPGAs Now Shipping

Earlier in April, Achronix made a product announcement with the headline “Achronix Now Shipping Industry’s Highest Performance Speedster7t FPGA Devices.” The press release drew attention to the fact that the 7nm Speedster®7t AC7t1500 FPGAs have started shipping to customers ahead of schedule. In the complex product world… Read More

Achronix Demystifies FPGA Technology Migration

System designers who are switching to a new FPGA platform have a lot to think about. Naturally, a change like this is usually made for good reasons, but there are always considerations regarding device configurations, interfaces and the tool chain to deal with. To help users who have decided to switch to its FPGA technology, Achronix… Read More

Webinar – FPGA Native Block Floating Point for Optimizing AI/ML Workloads

Block floating point (BFP) has been around for a while but is only now starting to be seen as a very useful technique for performing machine learning operations. It’s worth pointing out up front that bfloat is not the same thing. BFP combines the efficiency of fixed-point operations with the dynamic range of full floating… Read More

Network on Chip Brings Big Benefits to FPGAs

The conventional thinking about programmable solutions such as FPGAs is that you have to be willing to make a lot of trade-offs for their flexibility. This has certainly been the case in many instances. Even just getting data across the chip can eat up valuable routing resources and add a lot of overhead. These problems are exacerbated… Read More