When it comes to Arm, we think mostly of phones and the “things” in the IoT. We know they’re in a lot of other places too, such as communications infrastructure, but that’s a diffuse image: “yeah, they’re everywhere”. We like easy-to-understand targets: phones and IoT devices, we get those. More recently Arm started to talk about…

Tag: nvidia

Automating Timing Arc Prediction for AMS IP using ML

NVIDIA designs some of the most complex chips for GPU and AI applications today, with SoCs exceeding 21 billion transistors. They certainly know how to push EDA tools to their limits, and they have a strong motivation to automate more manual tasks to shorten their time to market. I missed their Designer/IP Track Poster…

WEBINAR: AI-Powered Automated Timing Arc Prediction for AMS IPs

A Directed Approach to Reduce Risk and Improve Quality

Safety and reliability are critical for most applications of integrated circuits (ICs) today, and they are paramount in markets such as ADAS, autonomous driving, healthcare and aeronautics. Safety and reliability transcend all levels of an integrated…

GloFo & TSMC Lawsuit: A Surrogate Trade War Pushing TSMC into China’s Open Arms?

Surrogate Wars

Is GloFo using the trade war as an excuse?

Does TSMC get lumped in with China on trade?

Does this alienate TSMC and push it into China’s embrace?

As in Vietnam and Korea before, there have been a number of “surrogate” wars between the US and China, as well as many other wars between the US and Russia using…

2019 GSA Silicon Summit and SiFive

Naveed Sherwani, President and CEO of SiFive, delivered the keynote at this year’s Silicon Summit, which is absolutely one of the premier events for C-level executives in Silicon Valley. Naveed is one of the top visionaries in the semiconductor industry, and he did not disappoint this time, nor has he at any other time in my experience.…

Double-digit semiconductor decline in 2019

The global semiconductor market is headed for a double-digit decline in 2019 after falling 15.6% in the first quarter of 2019 from the fourth quarter of 2018. According to WSTS (World Semiconductor Trade Statistics) data, this was the largest quarter-to-quarter decline since a 16.3% decline in the first quarter of 2009, ten years …

Tesla: The Day the Industry Stood Still

Tesla Motors held an investor event at its Palo Alto headquarters. CEO Elon Musk and a series of Tesla executives announced a new in-house developed microprocessor (already in production and being deployed in Tesla vehicles) and its plans and progress toward autonomous vehicle operation.

Tesla Autonomy Day Live Stream

To be …

Deep Learning, Reshaping the Industry or Holding to the Status Quo

AI, machine learning, deep learning and neural networks are all hot industry topics in 2019, but you probably want to know whether these concepts are actually changing how we design or verify an SoC. To answer that question, what better source than a panel of industry experts who recently gathered at DVCon with moderator…

Google Stadia is Here. What’s Your Move, Nvidia?

On March 19, 2019, Google introduced the world to its cloud-based, multi-platform gaming service, Stadia. Described by Google CEO Sundar Pichai at the Game Developers Conference as “a gaming platform for everyone”, Stadia is meant to make high-end games accessible to everyone. The video gaming industry, as we know it, will never …

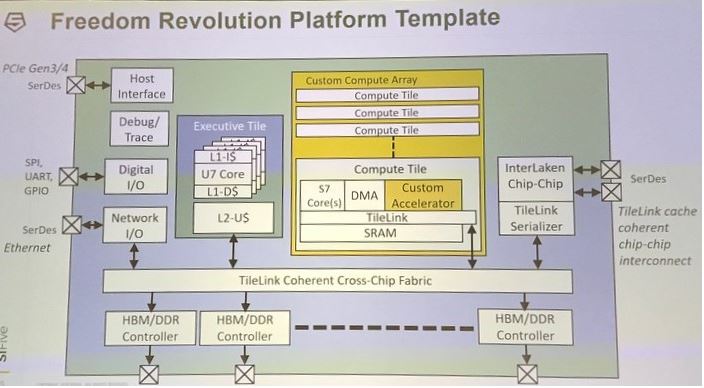

The Revolution Evolution Continues – SiFive RISC-V Technology Symposium – Part II

During the afternoon session of the Symposium, Jack Kang, SiFive’s VP of Sales, addressed RISC-V core IP for vertical markets ranging from consumer/smart home/wearables to storage/networking/5G to ML/edge. Embedding intelligence from the edge to the cloud is possible with U Cores 64-bit Application Processors, S Cores 64-bit Embedded…