'Tis the season for transformer-centric articles, apparently; this is my third within a month. Clearly this is a domain with both great opportunities and great challenges. The opportunities lie in extending large language model (LLM) potential to new edge products and revenue streams, with unbounded applications and volumes. The challenges lie in meeting performance, power, and cost goals. That no doubt explains the explosion in solutions we are seeing. One dimension of differentiation in this race is the underlying foundation model, especially GPT (OpenAI) versus Llama (Meta). This does not appear to reduce to a simple “which is better?” choice. Rather, each model has opportunities to show its strengths in different domains.

Llama versus other LLMs

GPT has enjoyed most of the press coverage so far, but Llama is demonstrating it can do better in some areas. First a caveat: as with everything in AI, the picture continues to change and fragment rapidly. GPT already comes in 3.5, 4, and 4.5 versions, Google has added Retro, LaMDA and PaLM2, Meta has multiple variants of Llama, and so on.

GPT openly aims to be king of the LLM hill in both capability and size. From a simple prompt it can return a complete essay, write software, or create images. Llama offers a more compact (and more accessible) model, which should immediately attract edge developers, especially now that the Baby Llama proof of concept has been demonstrated.

GPT-4 is estimated to run to over a trillion parameters and GPT-3.5 to around 150 billion, while Llama 2 has variants from 7 to 70 billion. Baby Llama is now available (as a prototype) in variants including 15 million, 42 million and 110 million parameters. That is a huge reduction, which makes this direction potentially very interesting for edge devices. Notably, Baby Llama was developed by Andrej Karpathy of OpenAI (not Meta) as a weekend project, to prove the network could be slimmed down to run on a single-core laptop.
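To put these parameter counts in perspective, here is a minimal Python sketch that converts them into raw weight storage. It assumes a uniform numeric precision per model (the fp32/fp16/int8 options are illustrative; the article does not say which precision any deployment uses). It counts weights only, ignoring activations, KV cache and runtime overhead. The helper name `weight_footprint_mb` is hypothetical.

```python
# Rough weight-storage footprint for the model sizes quoted above.
# Assumption: weights only (no activations, KV cache, or runtime overhead)
# and one uniform precision per model; the article states no precision.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

MODELS = {
    "Baby Llama 15M": 15e6,
    "Baby Llama 42M": 42e6,
    "Baby Llama 110M": 110e6,
    "Llama 2 7B": 7e9,
    "Llama 2 70B": 70e9,
}


def weight_footprint_mb(num_params: float, precision: str) -> float:
    """Return weight storage in megabytes (1 MB = 1e6 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e6


if __name__ == "__main__":
    for name, params in MODELS.items():
        sizes = ", ".join(
            f"{p}: {weight_footprint_mb(params, p):,.0f} MB" for p in BYTES_PER_PARAM
        )
        print(f"{name:>16} -> {sizes}")
```

Under these assumptions the 15 million parameter variant needs about 60 MB at fp32 (15 MB at int8), while Llama 2 70B needs roughly 140 GB at fp16. That gap is why the smallest variants are the ones that look plausible for edge memory budgets.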

As a proof of concept, Baby Llama has yet to be independently characterized or benchmarked. However, Karpathy has demonstrated rates of ~100 tokens/second when running on an M1 MacBook Air. Tokens/second is a key LLM metric, measuring throughput when generating a response to a prompt.
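The article does not describe how the ~100 tokens/second figure was measured. The following is only a generic sketch of how a tokens/second number can be obtained for autoregressive decoding, where each new token depends on all earlier ones. `toy_model` is a hypothetical stand-in for a real forward pass, and the function names are illustrative. Prompt-processing time is excluded, so only generation is timed.

```python
import time
from typing import Callable, List


def tokens_per_second(
    next_token: Callable[[List[int]], int],
    prompt: List[int],
    n_new: int,
    clock: Callable[[], float] = time.perf_counter,
) -> float:
    """Generate n_new tokens autoregressively and return the decode rate.

    next_token stands in for one forward pass of a model: it receives the
    full token sequence so far and returns the id of the next token.
    """
    if n_new <= 0:
        raise ValueError("n_new must be positive")
    tokens = list(prompt)
    start = clock()
    for _ in range(n_new):
        # Each token depends on all previous ones, so decoding is sequential.
        tokens.append(next_token(tokens))
    elapsed = clock() - start
    if elapsed <= 0:
        raise ValueError("clock did not advance; use a larger n_new")
    return n_new / elapsed


def toy_model(tokens: List[int]) -> int:
    """Hypothetical placeholder 'model': next id is (last id + 1) mod 32000."""
    return (tokens[-1] + 1) % 32000


if __name__ == "__main__":
    rate = tokens_per_second(toy_model, prompt=[1], n_new=10_000)
    print(f"{rate:,.0f} tokens/second (toy model, not a real LLM)")
```

The `clock` parameter is injectable so the arithmetic can be checked deterministically. With a fake clock that reads 0.0 s and then 2.0 s, generating 50 tokens reports 25 tokens/second.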

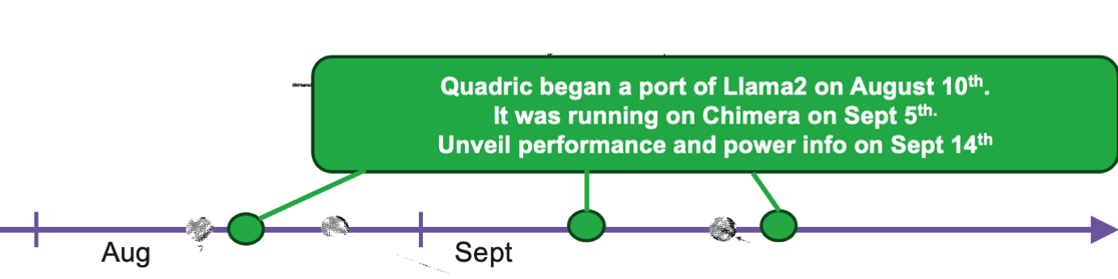

Quadric brings Baby Llama up on Chimera core in 6 weeks

Assuming that Baby Llama is a good proxy for an edge-based LLM, Quadric made the following interesting points. First, they were able to port the 15 million parameter network to their Chimera core in just 6 weeks. Second, the port required no hardware changes, only some (ONNX) operation tweaking in C code to optimize for accuracy and performance. Third, they were able to reach 225 tokens/second/watt using a 4MB L2 memory, 16 GB/second DDR, a 5nm process and a 1GHz clock. And fourth, the whole process consumed 13 engineer-weeks.

By way of comparison, they ran the identical model on an M1-based Pro laptop running ONNX Runtime, with 48MB RAM (L2 + system cache), 200 GB/second DDR and a 3.3 GHz clock. That delivered 11 tokens/second/watt. Quadric aims to extend the comparison to edge devices once they arrive.
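Tokens/second/watt normalizes throughput by power. Because 1 W is 1 J/s, it is equivalent to tokens per joule. The short Python sketch below computes the metric and the ratio between the two reported figures. The helper and constant names are hypothetical. Keep in mind that the Chimera number is a simulation-based estimate while the laptop number comes from running ONNX Runtime, so the ratio is indicative only.

```python
def tokens_per_second_per_watt(tokens_per_second: float, watts: float) -> float:
    """Energy-normalized throughput: tokens/second divided by average power (W).

    Equivalent to tokens per joule, since 1 W = 1 J/s.
    """
    if watts <= 0:
        raise ValueError("watts must be positive")
    return tokens_per_second / watts


# Figures as reported in the article; the Chimera value is a simulation estimate.
CHIMERA_TPS_PER_W = 225.0
M1_LAPTOP_TPS_PER_W = 11.0

if __name__ == "__main__":
    ratio = CHIMERA_TPS_PER_W / M1_LAPTOP_TPS_PER_W
    print(f"Chimera vs M1 laptop: {ratio:.1f}x tokens/second/watt")
```

On the reported numbers this prints a ratio of about 20.5x. As a usage example, a hypothetical device decoding 100 tokens/second at 4 W would score 25 tokens/second/watt.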

Takeaways

There are obvious caveats. Baby Llama is a proof of concept with, as far as I know, undefined use rights. I don’t know what (if anything) is compromised in reducing full Llama 2 to Baby Llama, though I’m guessing this might not be an issue for the right edge applications. Also, the performance numbers are simulation-based estimates, compared against laptop performance rather than against other implemented edge devices.

What you can do with a small LLM at the edge has already been demonstrated by recent Apple iOS/macOS releases, which now support word/phrase completion as you type. This is unsurprising; next word/phrase prediction is what LLMs do. A detailed review from Jack Cook suggests Apple's model might be a greatly reduced GPT-2 at about 34 million parameters. Separate recent work also suggests value for small LLMs in sensing (e.g. for predictive maintenance).
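To illustrate the predict-append-repeat loop behind type-ahead completion, here is a deliberately tiny Python stand-in. It uses a word-bigram frequency table, not a transformer, and is not Apple's method. All function names are hypothetical. The model simply picks the word that most often followed the last typed word in a toy corpus, appends it, and repeats.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(corpus: str) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the corpus."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    words = corpus.lower().split()
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def suggest_next(follows: Dict[str, Counter], typed: str) -> Optional[str]:
    """Return the most frequent follower of the last typed word, or None."""
    words = typed.lower().split()
    if not words or words[-1] not in follows:
        return None
    # Ties are broken by first occurrence in the corpus.
    return follows[words[-1]].most_common(1)[0][0]


def complete(follows: Dict[str, Counter], typed: str, max_words: int = 3) -> List[str]:
    """Greedily extend the phrase one predicted word at a time."""
    out: List[str] = []
    text = typed
    for _ in range(max_words):
        nxt = suggest_next(follows, text)
        if nxt is None:
            break
        out.append(nxt)
        text += " " + nxt  # feed the prediction back in as new context
    return out


if __name__ == "__main__":
    model = train_bigrams("see you later see you soon see you later")
    print(complete(model, "See", max_words=2))
```

A neural model conditions on far more context than one previous word, but the generation loop has the same shape.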

Quadric’s 6-week port with no need for hardware changes is a remarkable result. It matters as much for showing that the Chimera core adapts easily to new networks as for the performance claims in this specific example. Impressive! You can learn more about this demonstration HERE.
