If you work in advanced power management, you know about dynamic voltage and frequency scaling (DVFS). This is where you allow some part of a circuit, say a CPU, to run at different voltages and frequencies, trading performance against heat and, on a mobile device, battery life. Need to run fast? Crank up the voltage and frequency to run a task quickly, then drop both back down to save power and allow generated heat to dissipate.

DVFS is a well-known technique in PCs and servers, where boosting performance (for the whole processor) is an option and slowing down to cool off is the noticeable price you pay for that temporary advantage. The method is used at a more fine-grained level in the application processor at the heart of your smartphone, where multiple functions may each have their own DVFS domain, switching up and down as your usage varies. Balancing performance against power saving in this way can be especially important in any edge application demanding long battery life.

DVFS as commonly used is not an arbitrarily tunable option. System architects specify a fixed set of voltage/frequency possibilities, commonly two or three, then these options are hardwired into the chip design. In synchronous circuit design each clock option comes at a cost in complexity and size for the PLL, dividers or however else you generate accurate clocks.

But what if you’re using self-timed logic? Not necessarily for the whole SoC, but certainly for some critical components. I know of only one independent set of IP options today, from Eta Compute, so I’ll describe my understanding of how they implement tunable DVFS to get ultra-low power in intelligent IoT devices, down to a level where harvested power may be a usable complement to a backup battery. This is based on my discussion with Dave Baker, Chief Architect at the company.

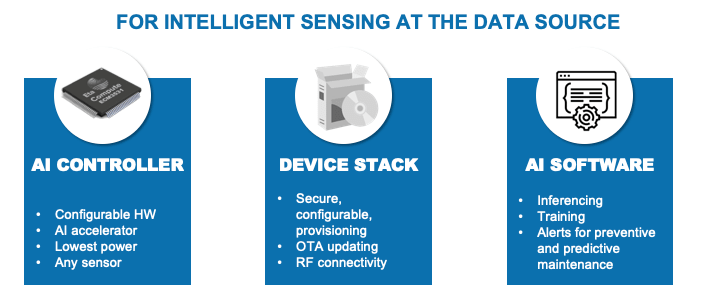

I introduced this company a while ago, when they announced a self-timed Cortex-M3 core, which would be a natural fit for this kind of IoT device. Since then, they have also struck a partnership with NXP to offer a CoolFlux DSP which hosts AI computation. As a reference design based on these cores they have developed their ECM3531 test chip with all the usual system functions, serial interfaces, a variety of on-board memory features and a 2-channel ADC interface (to connect to sensors). The system is supported by the Apache Mynewt OS, designed for the IoT, with built-in support for BLE, Bluetooth mesh and other wireless interfaces. Eval boards are already available.

OK, so far pretty standard except for the self-timed cores, but here comes the really clever part. Because this is self-timed logic, performance can be tuned simply by adjusting the voltage supply to the core. If the converter supplying that voltage is tunable, you can dial in a voltage and therefore a performance level. Eta Compute provide their own frequency-mode buck converter for this purpose, and you can tune the converter through firmware. The company’s RTOS scheduler monitors idle time per heartbeat: if idle time is dropping, it raises the voltage, whereas if idle time is growing, it can afford to lower the voltage. The setting can be tuned anywhere between a target for a given application stage and a floor below which interrupt latencies may become a problem.
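To make the scheduler idea concrete, here is a minimal Python sketch of an idle-time governor. It is my own illustration, not Eta Compute's firmware: the class name, the millivolt values, the fixed step size and the simple "compare with the previous heartbeat" rule are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class IdleTimeGovernor:
    """Hypothetical governor: nudges core voltage based on the idle-time trend."""
    floor_mv: int   # assumed floor; below it interrupt latency may become a problem
    target_mv: int  # assumed ceiling: the target setting for this application stage
    step_mv: int    # assumed adjustment applied per heartbeat
    voltage_mv: int = field(init=False)
    prev_idle: Optional[float] = field(default=None, init=False)

    def __post_init__(self) -> None:
        # Start at the target setting and let the governor walk downward.
        self.voltage_mv = self.target_mv

    def on_heartbeat(self, idle_fraction: float) -> int:
        """Called once per heartbeat with the fraction of time spent idle."""
        if self.prev_idle is not None:
            if idle_fraction < self.prev_idle:
                # Idle time is dropping: work is piling up, so raise the voltage.
                self.voltage_mv = min(self.target_mv, self.voltage_mv + self.step_mv)
            elif idle_fraction > self.prev_idle:
                # Idle time is growing: there is slack, so lower the voltage.
                self.voltage_mv = max(self.floor_mv, self.voltage_mv - self.step_mv)
        self.prev_idle = idle_fraction
        return self.voltage_mv


gov = IdleTimeGovernor(floor_mv=500, target_mv=900, step_mv=50)
trace = [gov.on_heartbeat(idle) for idle in (0.20, 0.40, 0.60, 0.30)]
print(trace)
```

In a real system the returned value would be written to the buck converter through firmware; here it is just collected in a list so the trend-following behavior is visible.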

Now compare this approach with what I call run-fast-then-stop and Dave calls race-to-idle. When you have work to do, you crank the frequency (and voltage) to the maximum option, do the work as fast as you can, then drop back to the lowest frequency/voltage option. There is timer uncertainty in switching, so power is wasted during those transitions, scaling with the size of the transition. And of course power (CV²f) during the on phase is high. Compare this with the Eta Compute approach. On-voltage scales up only as high as needed to meet the idle-time objective, typically much lower than the peak voltage in the first approach. And power wasted during switching is correspondingly lower because transition times are shorter. Even the idle voltage can be lower since this too is tunable, unlike the hardwired option in conventional DVFS.
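A quick back-of-the-envelope calculation shows why the lower on-voltage matters so much. Dynamic power is CV²f, and a task of N cycles takes N/f seconds, so the dynamic energy for the task is CV²N: the frequency cancels and the voltage enters squared. The sketch below checks this in Python. The capacitance, voltages, frequencies and cycle count are made-up illustrative numbers, not Eta Compute figures, and the model ignores leakage and transition losses.

```python
def dynamic_power(c_farads: float, volts: float, hertz: float) -> float:
    """Dynamic switching power, P = C * V^2 * f."""
    return c_farads * volts ** 2 * hertz


def task_energy(c_farads: float, volts: float, hertz: float, cycles: float) -> float:
    """Dynamic energy to run a fixed number of cycles: power times run time."""
    run_time_s = cycles / hertz
    return dynamic_power(c_farads, volts, hertz) * run_time_s


# Illustrative (assumed) operating points for the same one-million-cycle task.
C_EFF = 1e-9      # effective switched capacitance in farads, assumed
CYCLES = 1e6

race_to_idle = task_energy(C_EFF, volts=1.2, hertz=96e6, cycles=CYCLES)
tuned = task_energy(C_EFF, volts=0.6, hertz=24e6, cycles=CYCLES)

print(f"race-to-idle: {race_to_idle:.3e} J, tuned: {tuned:.3e} J")
print(f"ratio: {race_to_idle / tuned:.2f}")
```

Under these assumptions, halving the voltage cuts the dynamic energy for the same work to a quarter, regardless of how long the task takes at the lower frequency.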

Eta Compute have run CoreMark and ULPMark benchmarks against a number of comparable solutions and are showing easily an order of magnitude better energy efficiency (down to 5mW at 96MHz), along with IoT and sensor application operating efficiency at better than 4.5µA/MHz. So yeah, you really can run this stuff off harvested power. In fact, they have shown a solar-powered Bluetooth application running battery-less at 50µW in continuous operation.

I skipped a lot of detail in this description in the interest of a quick read. Dave told me for example that the interconnect is also self-timed, important because buffers in the interconnect consume a lot of power. Therefore intelligent scaling of voltage in the interconnect is equally important. If you want to dig more into the details, click HERE.
