When people talk about bottlenecks in digital signal processors (DSPs), they usually focus on compute throughput: how many MACs per second, how wide the vector unit is, how fast the clock runs. But ask any embedded AI engineer working on always-on voice, radar, or low-power vision, and they’ll tell you the truth: memory stalls are the silent killer. In today’s edge AI and signal processing workloads, DSPs are expected to handle inference, filtering, and data transformation under increasingly tight power and timing budgets. The compute cores have evolved, and edge computing aims to move the compute engine closer to the memory.

The toolchains have evolved. But memory? Still often too slow. And here’s the twist: it’s not because the memory is bad. It’s because the data doesn’t arrive on time.

Why DSPs Struggle with Latency

Unlike general-purpose CPUs, most DSPs used in embedded AI rely on non-cacheable memory regions: local buffers, scratchpads, or deterministic tightly coupled memory (TCM). That design choice makes sense: real-time systems can’t afford cache misses or non-deterministic latencies. But it also means every memory access must complete within the exact load latency the pipeline expects, or else the pipeline stalls. You can be in the middle of processing a spectrogram, a convolution window, or a beamforming sequence, and suddenly everything halts while the processor waits for data to arrive. Multiply-accumulate units sit idle. Latency compounds. Power is wasted.

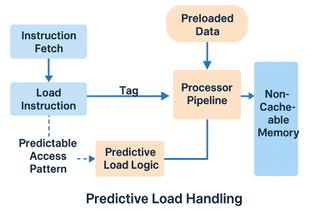

Enter Predictive Load Handling

Now imagine if the DSP could recognize the pattern. Suppose it could see that your loop is accessing memory in fixed strides (say, reading every 4th address) and preload that data ahead of time, so that when the actual load instruction is issued, the data is already there. This is commonly referred to as “deep prefetch.” No stall. No pipeline bubble. Just smooth execution.

That’s the traditional model of prefetching or stride-based streaming—and while it’s useful and widely used, it’s not what we’re describing here.
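For contrast with what follows, here is a minimal sketch of the stride detection that a traditional prefetcher relies on. It is illustrative only: the `StridePrefetcher` class, the `confirm` threshold, and the `run` helper are hypothetical names chosen for this example and do not describe any particular DSP's hardware.

```python
from typing import List, Optional


class StridePrefetcher:
    """Toy stride detector (hypothetical; not a vendor design)."""

    def __init__(self, confirm: int = 2) -> None:
        # Assumption: prefetch only after `confirm` identical strides in a row.
        self.confirm = confirm
        self.last_addr: Optional[int] = None
        self.stride: Optional[int] = None
        self.matches = 0

    def observe(self, addr: int) -> Optional[int]:
        """Record one load address; return an address to prefetch, or None."""
        if self.last_addr is not None:
            delta = addr - self.last_addr
            if delta == self.stride:
                self.matches += 1      # same stride seen again
            else:
                self.stride = delta    # new candidate stride
                self.matches = 1
        self.last_addr = addr
        # A zero stride (same address repeated) never triggers a prefetch.
        if self.stride and self.matches >= self.confirm:
            return addr + self.stride
        return None


def run(addresses: List[int]) -> List[Optional[int]]:
    """Feed a sequence of load addresses through a fresh detector."""
    prefetcher = StridePrefetcher()
    return [prefetcher.observe(a) for a in addresses]


if __name__ == "__main__":
    # Reading every 4th address: prefetching starts once the stride repeats.
    print(run([0, 4, 8, 12]))
```

Note that this predicts which address comes next. It says nothing about how long the access will take, which is the question the technique described next tries to answer.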

A new Predictive Load Handling innovation takes a fundamentally different approach. Instead of predicting what address will be accessed next, Predictive Load Handling focuses on how long a memory access is likely to take.

By tracking the latency of past loads, whether from SRAM, bypassed caches, or DRAM, it learns how long memory requests from each region typically take. Instead of issuing loads early, the CPU proceeds normally. The latency prediction is applied on the vector side to schedule execution at the predicted time, allowing the processor to adapt to memory timing without changing instruction flow. This isn’t speculative or risky. It’s conservative, reliable, and fits perfectly into deterministic DSP pipelines. It’s especially effective when the processor is working with large AI models or temporary buffers stored in DRAM, where latency is relatively consistent but still long. That distinction is critical. We’re not just doing a smarter prefetch. We’re enabling the processor to be latency-aware and timing-adaptive, with or without a traditional cache or stride pattern.
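To make the idea concrete, here is a small behavioral sketch of per-region latency prediction. Everything in it is an assumption made for illustration: the `LatencyPredictor` class, the choice to predict the maximum of the last few observed latencies (one simple way to stay conservative), the region names, and the cycle counts. The article does not specify the actual prediction mechanism, so treat this as a toy model rather than the real design.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Optional, Tuple


class LatencyPredictor:
    """Toy per-region load-latency predictor (hypothetical model)."""

    def __init__(self, default_cycles: int = 4, window: int = 4) -> None:
        # Assumption: regions with no history use a fixed default latency.
        self.default_cycles = default_cycles
        self.history: Dict[str, Deque[int]] = defaultdict(
            lambda: deque(maxlen=window)
        )

    def predict(self, region: str) -> int:
        """Conservative guess: the slowest of the recent loads from this region."""
        seen = self.history.get(region)
        return max(seen) if seen else self.default_cycles

    def update(self, region: str, observed_cycles: int) -> None:
        """Record how long a completed load actually took."""
        self.history[region].append(observed_cycles)


def total_stalls(
    loads: List[Tuple[str, int]],
    predictor: Optional[LatencyPredictor] = None,
    fixed_cycles: int = 4,
) -> int:
    """Count cycles the consumer waits because data arrived after its slot.

    The consuming vector operation is scheduled `expected` cycles after the
    load. If the data takes longer than that, the difference is a stall.
    """
    stalls = 0
    for region, actual in loads:
        expected = predictor.predict(region) if predictor else fixed_cycles
        stalls += max(0, actual - expected)
        if predictor:
            predictor.update(region, actual)
    return stalls


if __name__ == "__main__":
    # Made-up trace: five DRAM loads at 40 cycles, three TCM loads at 2 cycles.
    trace = [("dram", 40)] * 5 + [("tcm", 2)] * 3
    print("fixed schedule stalls:", total_stalls(trace))
    print("predicted schedule stalls:", total_stalls(trace, LatencyPredictor()))
```

In this toy trace the fixed schedule stalls on every DRAM load, while the predictor stalls only on the first one, before it has any history for that region. The model leaves out what a real pipeline does with the cycles it no longer spends stalled, such as running independent instructions in the meantime.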

When integrated into a generic DSP pipeline, Predictive Load Handling delivers immediate, measurable performance and power gains. The table below shows what that looks like in typical AI/DSP scenarios. These numbers reflect expectations in workloads like:

- Convolution over image tiles

- Sliding FFT windows

- AI model inference over quantized inputs

- Filtering or decoding over streaming sensor data

| Metric | Baseline DSP | With Predictive Load | Result |
| --- | --- | --- | --- |
| Memory Access Latency | 200 ns | 120 ns | 40% reduction |
| Data Stall Cycles | 800 cycles | 500 cycles | 38% reduction |
| Power per Memory Load | 0.35 mW | 0.25 mW | 29% reduction |
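The percentages in the Result column can be reproduced from the other two columns as relative reductions against the baseline. The short check below uses only the figures from the table. The `percent_reduction` helper is a name introduced here for illustration.

```python
def percent_reduction(baseline: float, improved: float) -> float:
    """Relative reduction of `improved` versus `baseline`, in percent."""
    return (baseline - improved) / baseline * 100.0


# Values copied from the table: (baseline, with Predictive Load).
rows = {
    "Memory Access Latency (ns)": (200, 120),
    "Data Stall Cycles": (800, 500),
    "Power per Memory Load (mW)": (0.35, 0.25),
}

if __name__ == "__main__":
    for name, (baseline, improved) in rows.items():
        print(f"{name}: {percent_reduction(baseline, improved):.1f}% reduction")
```

The stall and power rows work out to 37.5% and about 28.6%, which the table rounds to 38% and 29%.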

Minimal Overhead, Maximum Impact

One of the advantages of Predictive Load Handling is how non-intrusive it is. There’s no need for deep reordering logic, cache controllers, or heavyweight speculation. It can be dropped into the dispatch or load decode stages of many DSPs, either as dedicated logic or compiler-assisted prefetch tags. And because it operates deterministically, it’s compatible with functional safety requirements—including ISO 26262—making it ideal for automotive radar, medical diagnostics, and industrial control systems.

Rethinking the AI Data Pipeline

What Predictive Load Handling teaches us is that acceleration isn’t just about the math—it’s about data readiness. As processor speeds continue to outpace memory latency—a gap known as the memory wall—the most efficient architectures won’t just rely on faster cores. They’ll depend on smarter data pathways to deliver information precisely when needed, breaking the bottlenecks that leave powerful CPUs idle. As DSPs increasingly carry the weight of edge AI, we believe Predictive Load Handling will become a defining feature of next-generation signal processing cores.

Because sometimes, it’s not the clock speed—it’s the wait.
