ISO 26262 is serious stuff, the governing process behind automotive safety. But, as I have observed before, it doesn’t make for light reading. The standard is all about process and V-diagrams, mountains of documentation and accredited experts. I wouldn’t trade a word of it (or my safety) for a more satisfying read, but all that process stuff doesn’t really speak to my analytic soul. I’ve recently seen detailed tutorials / white-papers from several sources covering the analytics, which I’ll touch on in extracts in upcoming blogs but I’ll start with the Synopsys functional safety tutorial at DVCon, to set the analytics big picture (apologies to the real big picture folks – this is a blog, I have to keep it short).

To open, chip and IP suppliers have to satisfy Safety Element out of Contexttesting requirements under Assumptions of Use which basically comes down to demonstrating fault avoidance/control and independent verification for expected ASIL requirements under expected integration contexts. Which in turn means that random hardware failures/faults can be detected/mitigated with an appropriate level of coverage (assuming design/manufacturing faults are already handled).

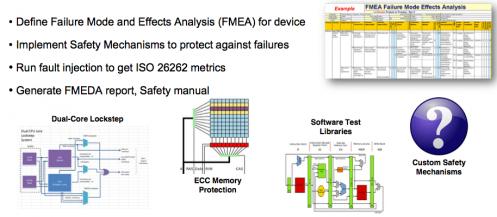

Functional safety analysis/optimization then starts with a failure mode and effects analysis (FMEA), a breakdown of the potential functional modes of failure in the IP/design. Also included in this analysis is an assessment of the consequence of the failure and the likely probability/severity of the failure (how important is this potential failure given the project use-modes?). For example, a failure mode for a FIFO would be that the FULL flag is not raised when the FIFO is full, and a consequence would be that data could be overwritten. A safety mechanism to mitigate the problem (assuming this is a critical concern for projected use-cases) might be a redundant read/write control. All of this obviously requires significant design/architecture expertise and might be captured in a spreadsheet or a spreadsheet-like tool automating some of this process.

The next step is called failure mode and effects diagnostic analysis (FMEDA) which really comes down to “how well did we do in meeting the safety goal?” This document winds up being a part of the ISO 26262 signoff so it’s a very important step where you assess safety metrics based on the FMEA analysis together with planned safety mechanisms where provided. Inputs to this step include acceptable FIT-rates or MTBF values for various types of failure and a model for distribution of possible failures across the design.

Here’s where we get to fault simulation along with all the usual pros and cons of simulation. First, performance is critical; a direct approach would require total run-times comparable to logic simulation time multiplied by the number of faults being simulated, which would be impossibly slow when you consider the number of nodes that may have to be faulted. Apparently, Synopsys’ Z01X fault simulator is able to concurrently simulate several thousand faults at a time (I’m guessing through clever overlapping of redundant analysis – only branch when needed), which should significantly improve performance.

There are two more challenges: how comprehensively you want to fault areas in the design and, as always, how good your test suite is. Synopsys suggests that at the outset of what they call your fault campaign, you start with a relatively low percentage of faults (around a given failure mode) to check that your safety mechanism meets expectations. Later you may want to crank up fault coverage depending on confidence (or otherwise) in the safety mechanisms you are using. They also make a point that formal verification can significantly improve fault-sim productivity by pre-eliminating faults that can’t be activated or can’t be observed (see also Finding your way through Formal Verification for a discussion on this topic).

An area I find especially interesting in this domain is coverage – how well is simulation covering the faults you have injected? The standard requires determining whether the effect of a fault can be detected at observation points(generally the outputs of a block) and whether diagnostic points in a safety mechanism are activated in the presence of the fault (e.g. a safety alarm pin is activated). A natural concern is that the stimulus you supply may not be sufficient for a fault to propagate. This is where coverage analysis, typically specific to fault simulation, becomes important (Synopsys provides this through Inspect Fault Viewer).

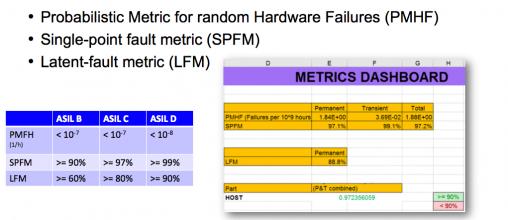

At the end of all this analysis, refinement and design improvement you get estimated MTBFs for all the different classes of fault which ultimately roll up into 26262 metrics for the design. These can then be aligned to the standard required for the various ASIL levels.

Now that’s analytics. You can learn more about the Synopsys safety solutions HERE.

Share this post via:

Comments

There are no comments yet.

You must register or log in to view/post comments.