Nvidia has found that video games are the perfect metaphor for autonomous driving. To understand why this is so relevant, you have to realize that self-driving cars see the world through a virtual world created in real time inside their processors, very much like a video game. It's a little bit like the Matrix, only it is real. Perhaps this realization is what led Nvidia into the market for advanced driver-assistance systems (ADAS) and autonomous driving. Regardless, it certainly also has a lot to do with the truly massive computing power Nvidia is putting into its latest products.

The occupancy grid, the virtual world that the ADAS system creates to model the real world around the vehicle, is very much like a video game. I learned more about how it works at a recent event hosted by Synopsys and SAE, where Shri Sundaram, a product manager at Nvidia, spoke about the company's offerings for ADAS. He framed his discussion by reviewing where our current era of computing fits into the big picture.
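For readers who have not met the idea before, the sketch below shows one common way to represent an occupancy grid: a 2D array of cells, each holding the log-odds that the cell is occupied, nudged up or down as sensor observations arrive. This is the textbook log-odds formulation, not Nvidia's implementation; the grid dimensions, the cell resolution, and the `L_HIT`/`L_MISS` increments are illustrative assumptions.

```python
import math
from typing import Optional, Tuple

# Hypothetical sensor-model increments in log-odds; real values are sensor-specific.
L_HIT = math.log(0.7 / 0.3)   # a return in this cell: evidence of occupancy
L_MISS = math.log(0.4 / 0.6)  # a beam passed through: evidence the cell is free
L_MIN, L_MAX = -5.0, 5.0      # clamp so a cell can still change state later


class OccupancyGrid:
    """Minimal 2D occupancy grid that stores log-odds of occupancy per cell."""

    def __init__(self, width: int, height: int, resolution_m: float):
        self.width = width
        self.height = height
        self.resolution_m = resolution_m  # edge length of one square cell, meters
        # Log-odds 0.0 means probability 0.5, i.e. "unknown".
        self.cells = [[0.0] * width for _ in range(height)]

    def _index(self, x_m: float, y_m: float) -> Optional[Tuple[int, int]]:
        """Map a point (meters, grid-corner origin) to (row, col), or None if off-grid."""
        row = math.floor(y_m / self.resolution_m)
        col = math.floor(x_m / self.resolution_m)
        if 0 <= row < self.height and 0 <= col < self.width:
            return row, col
        return None

    def update(self, x_m: float, y_m: float, occupied: bool) -> None:
        """Fold one observation into its cell by adding log-odds evidence."""
        idx = self._index(x_m, y_m)
        if idx is None:
            return  # outside the modeled area; ignore
        row, col = idx
        delta = L_HIT if occupied else L_MISS
        self.cells[row][col] = max(L_MIN, min(L_MAX, self.cells[row][col] + delta))

    def probability(self, x_m: float, y_m: float) -> float:
        """P(occupied) for the cell containing the point; 0.5 if off-grid."""
        idx = self._index(x_m, y_m)
        if idx is None:
            return 0.5
        row, col = idx
        return 1.0 - 1.0 / (1.0 + math.exp(self.cells[row][col]))
```

Storing log-odds is what makes this representation convenient for fusion: under an independence assumption, observations from different sensors combine by simple addition, and the clamp keeps a long-observed cell from becoming impossible to update when the scene changes.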

In 1995 there were probably one billion PC users; by 2005 there were two and a half billion mobile users. Today we are looking at a world with hundreds of billions of devices. This is the age of AI and intelligent devices, fueled by deep learning and GPUs, and it has set the stage for self-driving vehicles. They promise to be safer, will allow us to redesign our transportation infrastructure, and will offer a wider range of mobility services.

It will be great for people: driving is not always fun and can be difficult. It is this difficulty that makes automating it a challenge, and not just a technical one. There is consumer acceptance and the regulatory environment to deal with. Yet even focusing just on the technical side, there are many challenges.

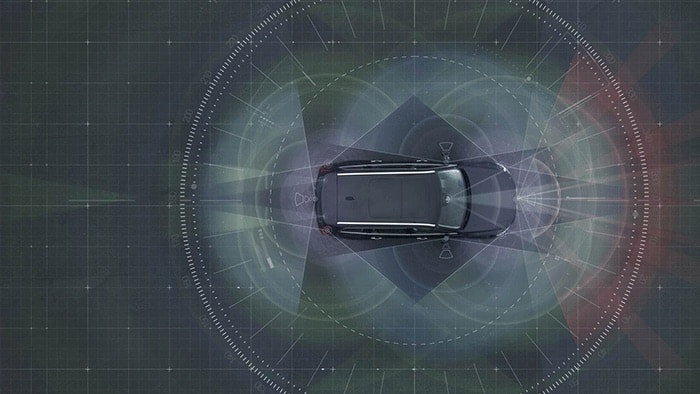

Shri lists the technical challenges as sensors and fusion, massive compute requirements, algorithm development, and assuring functional safety. He spoke first about sensors, which are used for navigation, detection and classification, and avoidance. These include GPS, an inertial measurement unit (IMU), multiple cameras, lidar, radar, sonar, and ultrasonic sensors, which together create huge bandwidth requirements. Each camera is over 2MP and runs at 30fps, and lidar can provide over 500K samples per second. All of this needs to be fused in real time to create the occupancy grid.
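The figures Shri quoted make the bandwidth problem easy to estimate. The back-of-the-envelope sketch below uses the 2MP, 30fps, and 500K samples-per-second numbers from the talk; the camera count (eight), the bytes per pixel (2, as for a 16-bit raw stream), and the bytes per lidar sample (16) are assumptions for illustration, not Drive PX 2 specifications.

```python
# Back-of-the-envelope raw sensor bandwidth. Only the 2 MP, 30 fps and
# 500K samples/s figures come from the talk; the rest are assumptions.
CAMERA_COUNT = 8                # assumed number of cameras
CAMERA_PIXELS = 2_000_000       # "over 2MP" per camera (used as a lower bound)
CAMERA_FPS = 30
BYTES_PER_PIXEL = 2             # assumed, e.g. a 16-bit raw stream
LIDAR_SAMPLES_PER_S = 500_000   # "over 500K samples per second"
BYTES_PER_LIDAR_SAMPLE = 16     # assumed: x, y, z, intensity as 32-bit floats


def camera_bytes_per_s(count: int, pixels: int, fps: int, bytes_per_pixel: int) -> int:
    """Raw, uncompressed camera data rate in bytes per second."""
    return count * pixels * fps * bytes_per_pixel


def lidar_bytes_per_s(samples_per_s: int, bytes_per_sample: int) -> int:
    """Raw lidar point data rate in bytes per second."""
    return samples_per_s * bytes_per_sample


if __name__ == "__main__":
    cam = camera_bytes_per_s(CAMERA_COUNT, CAMERA_PIXELS, CAMERA_FPS, BYTES_PER_PIXEL)
    lidar = lidar_bytes_per_s(LIDAR_SAMPLES_PER_S, BYTES_PER_LIDAR_SAMPLE)
    print(f"cameras: {cam / 1e6:.0f} MB/s, lidar: {lidar / 1e6:.0f} MB/s")
    # prints "cameras: 960 MB/s, lidar: 8 MB/s"
```

Under these assumptions the cameras alone produce about 960 MB/s (roughly 7.7 Gbit/s) of raw pixels, two orders of magnitude more than the lidar's 8 MB/s, which is why camera processing dominates the compute budget.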

Localization is the first task: using sensors and map data, the ADAS system needs to determine the location of the vehicle. At the same time, more information is needed to navigate successfully through the environment. The ADAS system combines the map data, location data, and perceived environment to plan actions and execute them. As Shri pointed out, there are many exceptions to handle: snow- or leaf-covered roads, pedestrians, people directing traffic, construction zones, emergency vehicles, and so on.
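Localization is usually framed as state estimation: predict where the vehicle is from motion sensors, then correct that prediction with absolute measurements. The sketch below is a textbook one-dimensional Kalman filter that fuses an IMU- or odometry-derived velocity (prediction) with a GPS fix (correction). It is a generic illustration, not a description of how Nvidia's software localizes; the noise variances are made-up assumptions, and a real system would track a full 2D or 3D pose and match it against map data.

```python
from dataclasses import dataclass


@dataclass
class PositionEstimate:
    mean: float      # estimated position along the road, meters
    variance: float  # uncertainty of that estimate, m^2


def predict(est: PositionEstimate, velocity_mps: float, dt_s: float,
            process_var: float) -> PositionEstimate:
    """Dead-reckoning step: advance by velocity * dt and grow the uncertainty."""
    return PositionEstimate(est.mean + velocity_mps * dt_s,
                            est.variance + process_var)


def correct(est: PositionEstimate, gps_m: float, gps_var: float) -> PositionEstimate:
    """Measurement step: blend in a GPS fix, weighted by relative confidence."""
    gain = est.variance / (est.variance + gps_var)  # Kalman gain, between 0 and 1
    return PositionEstimate(est.mean + gain * (gps_m - est.mean),
                            (1.0 - gain) * est.variance)


if __name__ == "__main__":
    est = PositionEstimate(mean=0.0, variance=1.0)
    est = predict(est, velocity_mps=10.0, dt_s=0.1, process_var=0.5)  # mean 1.0, var 1.5
    est = correct(est, gps_m=1.3, gps_var=1.5)                        # gain 0.5
    print(est)
```

When the prediction and the GPS fix are equally uncertain, as in this example, the gain is 0.5 and the corrected position lands halfway between them (1.15 m) with half the variance (0.75 m²); a noisier GPS would pull the estimate less.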

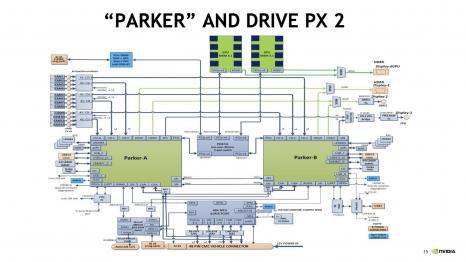

Nvidia has developed an SDK for self-driving cars called DriveWorks. It uses deep learning to help perform path planning for self-driving vehicles and runs on Nvidia's Drive PX 2 ADAS hardware. It uses multiple deep-learning networks to perform separate tasks such as road-surface identification, object detection, and lane detection, and then combines their results into a composite model.
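To make the idea of a composite model concrete, here is a toy sketch in which each task "head" is a stand-in function returning a per-cell score over a small grid, and the composite marks a cell drivable only if the road-surface head says it is road and the object-detection head says it is clear. The head names, the 0.5 threshold, and the AND-style fusion rule are all hypothetical; the actual DriveWorks fusion logic was not described in the talk.

```python
from typing import Callable, Dict, List

Grid = List[List[float]]  # per-cell scores in [0, 1]


def fuse_drivable(task_outputs: Dict[str, Grid], threshold: float = 0.5) -> List[List[bool]]:
    """Combine per-task score maps into one drivable / not-drivable map.

    Hypothetical rule: a cell is drivable if it is probably road AND
    probably free of objects. Extra heads (e.g. lanes) are ignored here.
    """
    road = task_outputs["road_surface"]
    objects = task_outputs["objects"]
    return [
        [r > threshold and o <= threshold for r, o in zip(road_row, obj_row)]
        for road_row, obj_row in zip(road, objects)
    ]


def run_perception(frame: object,
                   heads: Dict[str, Callable[[object], Grid]]) -> List[List[bool]]:
    """Run each independent task head on the same frame, then fuse the results."""
    outputs = {name: head(frame) for name, head in heads.items()}
    return fuse_drivable(outputs)


if __name__ == "__main__":
    # Stand-in "networks" returning fixed 2x2 score maps (hypothetical data).
    heads = {
        "road_surface": lambda frame: [[0.9, 0.9], [0.1, 0.8]],
        "objects": lambda frame: [[0.0, 0.7], [0.0, 0.2]],
    }
    print(run_perception(frame=None, heads=heads))  # [[True, False], [False, True]]
```

Keeping each task in its own head means a head can be retrained or replaced without touching the others; only the fusion step needs to know how their outputs relate.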

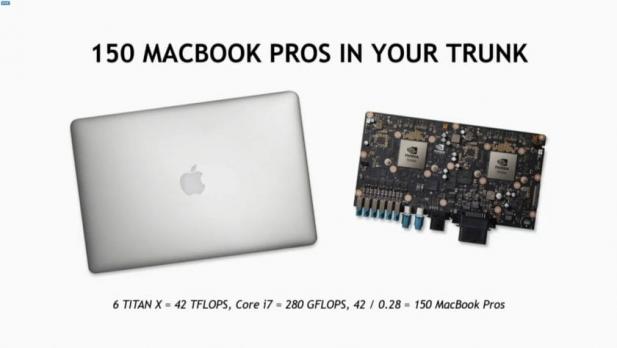

Drive PX 2 sports two Tegra "Parker" SoCs and two Pascal GPUs, and consumes about 80W in normal operation. Shri said that it has the compute power of about 150 MacBook Pros. The next generation will have even more processing power while requiring only about 20W.

We have all heard about the recent announcement of Nvidia's partnership with Tesla (the automaker, not Nvidia's namesake Tesla GPU line). Shri also spoke about several other self-driving car efforts in development that use Nvidia AI, including Baidu, nuTonomy, Volvo, TomTom, and WEpods.

It's clear that Nvidia is taking a lead role in the rapidly developing ADAS market, so it's no surprise that the company accepted the invitation to present at the SAE and Synopsys seminar. Nvidia uses a number of Synopsys tools in the development of systems like Drive PX 2. The range of Synopsys products that address ADAS is wide, starting at system design and modeling and extending all the way down to IP and physical implementation. For a better understanding of these offerings, take a closer look at the Synopsys Automotive web page.

Read More Articles by Tom Simon


Comments

0 Replies to “Nvidia Drives into New Market with Deep Learning and the Drive PX 2”
