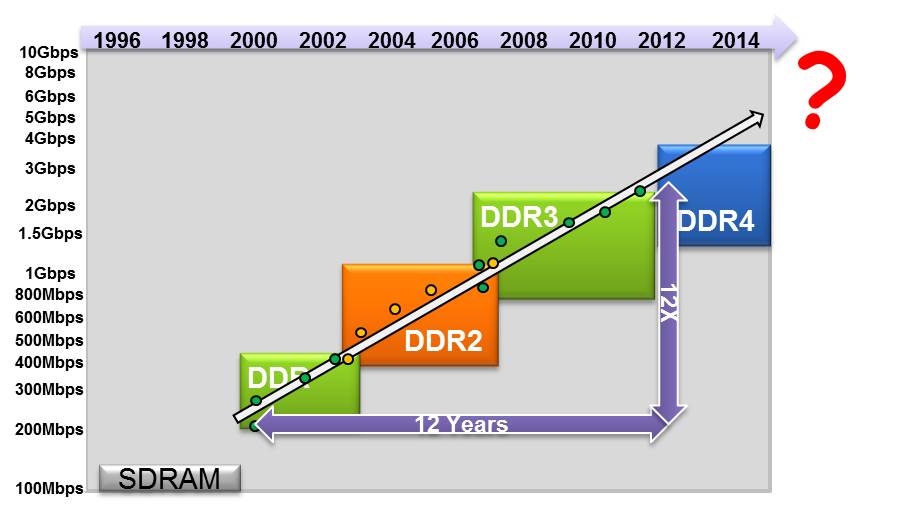

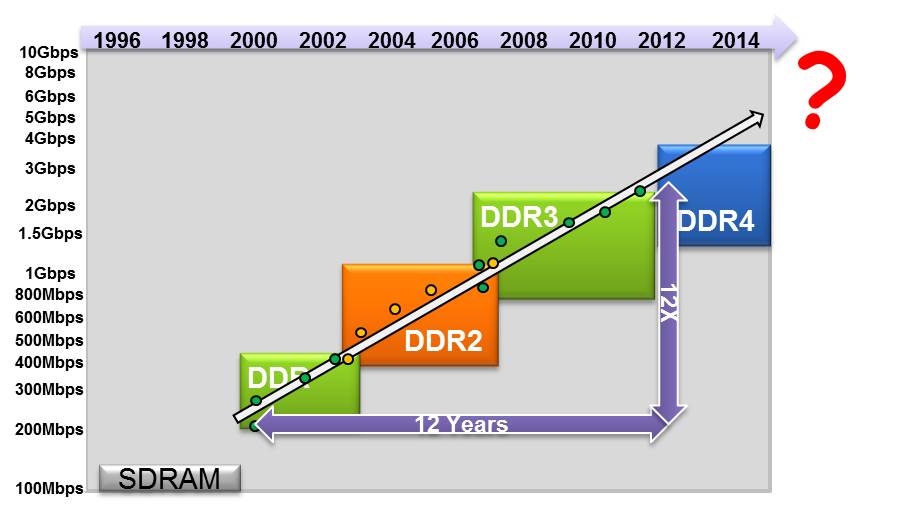

In fact, the question in this post's title is provocative, as JEDEC has only just released the DDR4 protocol standard… after ten years of discussion about the protocol features. Yes, the first discussions about DDR4 started ten years ago! Will DDR4 be used in the industry? The answer is certainly yes, and DDR4 will most probably be used for years. But memory controller experts, like Graham Allan, Sr Marketing Manager at Synopsys (and an active JEDEC member), consider that DDR4 will be the last protocol standard for interfacing SDRAM. We can expect the next protocol to finally move away from the parallel-bus architecture, in which clock, address and command signals are carried separately. The DDRn architecture is known to create routing issues at PCB level, to consume a lot of power and to require a complex implementation at SoC level, leading chip makers to increasingly outsource the DDRn memory controller. The latter is the positive point, as it allows SoC designers to focus on the real differentiators. But why not move to a high-speed serial protocol, based on a 10 Gbps (or faster) SerDes with embedded clock, like PCIe or Ethernet, to name a few?

Nevertheless, this is a big day for DDR4: for the first time, Intel has announced DDR4 support in its desktop CPU roadmap. As with many protocols (USB 3.0, PCI Express or SATA), Intel's decision to integrate a protocol into its processors or chipsets signals that protocol's wide adoption. If we look at the market segments where DDR4 is expected to be adopted first, surprisingly, the PC segment will not come first, as it did for DDR3, DDR2 and earlier generations. DDR4 adoption will first come from Enterprise, Server and Storage SoCs. The reason is crystal clear: the PC market is strongly cost driven, and DDR3 memory chip cost is at its lowest today, while DDR4 chip cost is still too high for the PC market. But the technical benefits of the new DDR4 protocol, such as (but not only) lower power consumption, justify using DDR4 in high-end segments like Enterprise, Networking, Server or Storage. If you ask whether DDR4 could be used in high-end laptops or media tablets (perhaps less cost sensitive than desktop PCs), the answer is no, as these applications will use LPDDR4 instead…

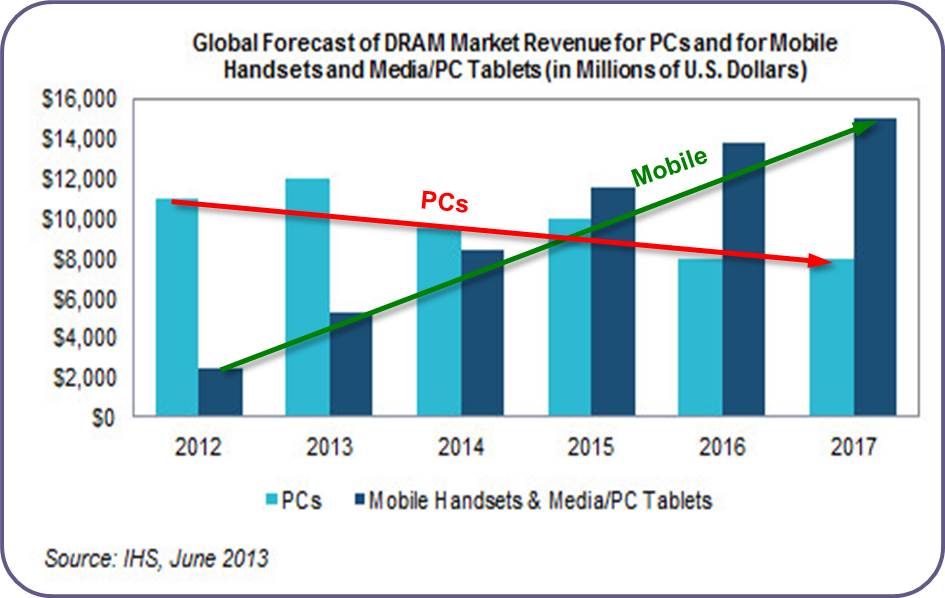

If you look at the above picture, you can see that DRAM market revenue for PCs is still higher than for mobile handsets in 2014… but not for long, as market dynamics clearly favor the DRAM segment for mobile handsets and media/PC tablets, which is not really a surprise. We may expect DDR4 to be used in the enterprise market for the next two years, then in the desktop PC segment once DDR4 memory device prices have come down to the level of DDR3 devices, or below.

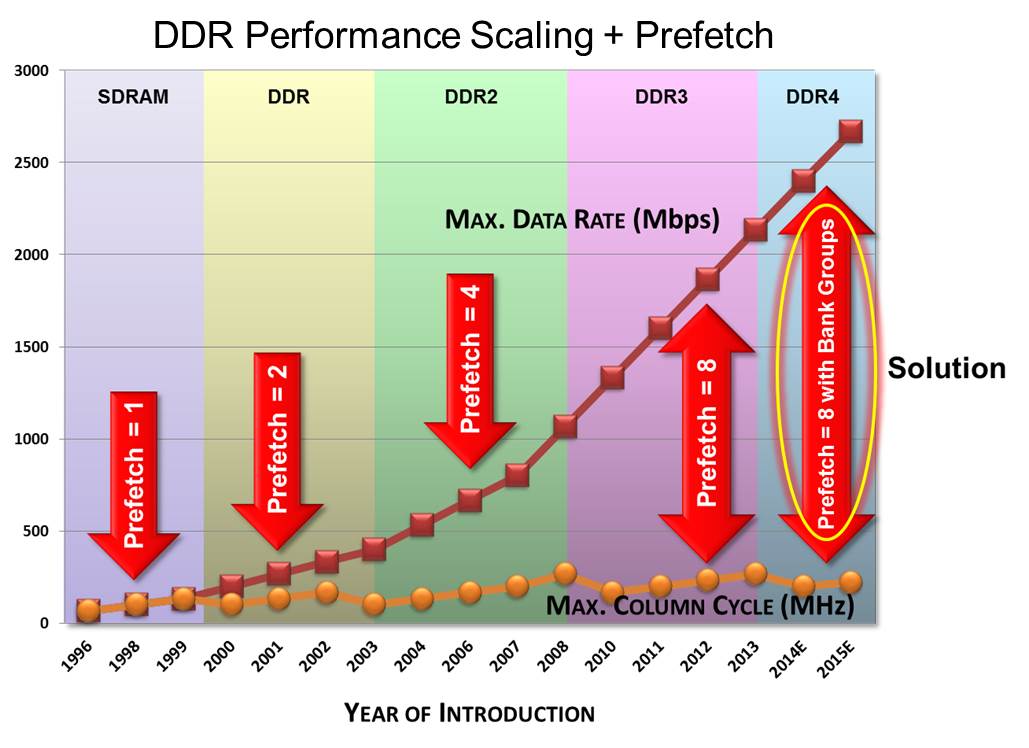

If we look at DDR performance scaling over time, we see that internal performance (max. column cycle) has stayed almost flat. The DDR performance improvement (max. data rate) therefore came from the “prefetch” mechanism, which went from a 1-word prefetch for SDRAM to an 8-word prefetch for DDR3. Since a 16-word prefetch was undesirable (it would have added too much die area to the DDR4 device), the DDR4 performance increase was obtained by multiplexing two bank groups while keeping the 8-word prefetch scheme.
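The scaling argument boils down to one multiplication: peak per-pin data rate = core column rate × prefetch depth × bank-group multiplexing. The Python sketch below assumes a hypothetical 200 MHz core rate held constant across generations (the article only says it stayed almost flat) and treats DDR4's bank-group multiplexing as a factor of two, as described above. It is an illustration of the arithmetic, not a timing model of any real device.

```python
# Hypothetical core (column) rate, held constant to mirror the "almost flat" trend.
CORE_MHZ = 200

# generation -> (prefetch depth in words, bank-group multiplexing factor)
GENERATIONS = {
    "SDR SDRAM": (1, 1),
    "DDR": (2, 1),
    "DDR2": (4, 1),
    "DDR3": (8, 1),
    "DDR4": (8, 2),  # same 8n prefetch, two bank groups multiplexed (assumed x2)
}


def data_rate_mts(core_mhz: int, prefetch: int, bank_group_factor: int = 1) -> int:
    """Peak per-pin data rate in MT/s = core rate x prefetch x bank-group factor."""
    return core_mhz * prefetch * bank_group_factor


for name, (prefetch, bg) in GENERATIONS.items():
    rate = data_rate_mts(CORE_MHZ, prefetch, bg)
    # e.g. DDR3 -> 1600 MT/s, DDR4 -> 3200 MT/s under these assumptions
    print(f"{name:10s} {prefetch}n prefetch x{bg} -> {rate} MT/s")
```

Under these assumptions DDR3 lands at 1600 MT/s and DDR4 at 3200 MT/s: bank-group multiplexing doubles the rate without the die-area cost of a 16n prefetch.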

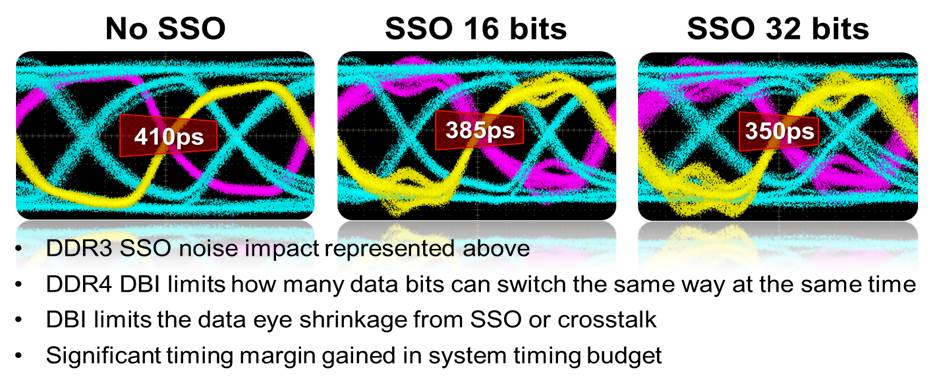

Another DDR4 feature reduces the noise caused by Simultaneous Switching Outputs (SSO): Data Bus Inversion, or DBI. Anyone who has been part of an ASIC design team knows that you must limit the number of outputs switching at the same time (SSO) to guarantee a sufficient noise margin. In the accompanying picture, you can see the SSO impact on the data eye, which shrinks from 410 ps (no SSO) to 350 ps (32-bit SSO). The data eye width directly determines the timing margin available to the designer implementing the SoC. The narrower the data eye, the more difficult the design will be to manage!
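To make the DBI idea concrete, here is a minimal Python sketch of per-byte inversion. It assumes the DDR4-style rule as we understand it from the JEDEC DDR4 standard: when more than four of the eight data bits are 0, the byte is sent inverted and the active-low DBI_n pin is driven low. The function names are hypothetical; real controllers and DRAMs apply this per byte lane in hardware.

```python
def dbi_encode(byte: int) -> tuple[int, int]:
    """Return (wire_byte, dbi_n) for one byte lane.

    Assumed DDR4-style rule: if more than four of the eight data bits are 0,
    send the inverted byte and drive the active-low DBI_n pin low (0).
    """
    byte &= 0xFF
    zeros = 8 - bin(byte).count("1")
    if zeros > 4:
        return (~byte) & 0xFF, 0
    return byte, 1


def dbi_decode(wire_byte: int, dbi_n: int) -> int:
    """Undo the inversion on the receiving side when DBI_n is low."""
    return (~wire_byte) & 0xFF if dbi_n == 0 else wire_byte & 0xFF


def low_lines(wire_byte: int, dbi_n: int) -> int:
    """Number of the nine lines (8 DQ + DBI_n) driven low for this byte."""
    return (8 - bin(wire_byte & 0xFF).count("1")) + (1 if dbi_n == 0 else 0)


# 0x01 has seven 0 bits, so it is sent inverted as 0xFE with DBI_n low.
print(dbi_encode(0x01))  # (254, 0)
```

With this rule, at most four of the nine lines are driven low for any byte, which bounds how many outputs go low together. Note that it limits the number of low lines per byte; it does not directly minimize transitions between consecutive bytes.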

Another DBI benefit is up to 30% power savings when the feature is used. Since server farms are one of the target applications, such savings are very welcome there, and good to have in any case.
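As a rough illustration of where DBI savings come from, the sketch below counts low-driven lines per byte, used here as a crude proxy for I/O termination current. This rests on the assumption, which we believe holds for DDR4's pseudo-open-drain I/O, that current flows mainly when a line is driven low. It is not a power model and is not meant to reproduce the 30% figure, which depends on the actual data patterns and I/O conditions.

```python
from math import comb


def low_lines_with_dbi(zeros: int) -> int:
    """Low-driven lines (8 DQ + DBI_n) for a byte with `zeros` zero bits,
    assuming the DDR4-style rule: invert when more than four bits are 0."""
    if zeros > 4:
        return (8 - zeros) + 1  # inverted data bits plus DBI_n driven low
    return zeros                # data sent as-is, DBI_n stays high


def expected_low_lines(use_dbi: bool) -> float:
    """Average low-driven lines per byte for uniformly random data."""
    total = 0
    for zeros in range(9):
        weight = comb(8, zeros)  # how many byte values have this many 0 bits
        total += weight * (low_lines_with_dbi(zeros) if use_dbi else zeros)
    return total / 256


without_dbi = expected_low_lines(False)  # 4.0
with_dbi = expected_low_lines(True)      # 837/256, about 3.27
print(f"without DBI: {without_dbi:.2f}, with DBI: {with_dbi:.2f}, "
      f"reduction: {100 * (1 - with_dbi / without_dbi):.1f}%")
```

For uniformly random data this proxy shows roughly an 18% reduction, while an all-zeros byte drops from eight low lines to one, so the saving depends strongly on the data being transferred.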

Another benefit of DDR4, especially important for enterprise systems, is improved Reliability, Availability and Serviceability (RAS), thanks to several features of the DDR4 protocol, such as ECC, training and calibration, temperature monitoring, DBI, C/A parity checking (with system retry), CRC, and DDR4 controller and PHY diagnostic registers, to name a few.
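Two of the RAS features listed above, C/A parity and CRC, are simple to sketch. The Python below assumes even parity for the command/address bus (the parity bit makes the total number of 1s even) and, for write CRC, the CRC-8 polynomial x^8 + x^2 + x + 1, which is what we understand DDR4 to use. Packing the C/A signals into one integer and treating the burst as plain bytes are hypothetical simplifications, not the bit mapping defined by JEDEC.

```python
def ca_parity(ca_word: int) -> int:
    """Even-parity bit for a command/address word (hypothetical bit packing):
    PAR is chosen so that the total count of 1s, including PAR, is even."""
    return bin(ca_word).count("1") & 1


def ca_ok(ca_word: int, par: int) -> bool:
    """Receiver-side check; on mismatch, a controller would retry the command."""
    return ca_parity(ca_word) == par


def crc8(data: bytes, poly: int = 0x07, init: int = 0x00) -> int:
    """Bitwise, MSB-first CRC-8 with polynomial x^8 + x^2 + x + 1 (0x07)."""
    crc = init
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ poly) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


burst = bytes([0x12, 0x34, 0x56, 0x78, 0x9A, 0xBC, 0xDE, 0xF0])  # example data
crc = crc8(burst)
corrupted = bytes([burst[0] ^ 0x01]) + burst[1:]  # single-bit error on the wire
print(crc8(corrupted) != crc)  # True: a CRC detects every single-bit error
```

Parity is cheap and catches any odd number of flipped C/A bits, while the CRC protects the data burst against a wider class of errors; both let the system detect a problem and retry instead of silently corrupting memory.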

We have to remember that it took JEDEC almost ten years to release the DDR4 protocol, one reason being that the “4” in DDRn will certainly be the last of its kind: the next SDRAM protocol is expected to be completely different (hopefully based on high-speed serial SerDes, with clock recovery to simplify both the SoC and the board-level implementation). It therefore appears that the JEDEC committee decided to implement as many as possible of the available techniques for improving noise margin, power consumption and RAS. No doubt the enterprise market will appreciate…

By Eric Esteve, IPNEST

Comments

0 Replies to “Bye-Bye DDRn Protocol?”
