In business, we have all heard the maxim, “Time is Money.” I learned this lesson early in my semiconductor career while doing DRAM design, when I discovered that packaging costs and time on the tester were actually higher than fabrication costs. System companies like IBM were early adopters of Design For Test (DFT): they used scan design with special test Flip-Flops, then ran Automatic Test Pattern Generation (ATPG) software to create test implementations with high fault coverage and a minimum amount of time on the tester.

It took a while for logic designers at Integrated Device Manufacturers (IDMs) to adopt DFT techniques, because they were hesitant to give up silicon area to improve fault coverage, preferring the smallest die size to maximize profits.

Challenges

Today there are many challenges to test implementation time and costs:

- Higher design complexity
  - More than 100,000,000 cell instances
  - Hundreds of cores
- Subtle defects
  - More than 50% of failures not found with standard tests
  - In-system testing required
- Fewer test pins
  - Designs/cores with fewer than 7 test pins

Consider a modern GPU design with 50 billion transistors and 100 million cell instances: just how do you create enough test patterns to meet fault coverage goals while spending the minimum time on a tester?

A Solution

Adding scan Flip-Flops is a great start and a proven DFT methodology, but what if you want to meet these newer challenges?

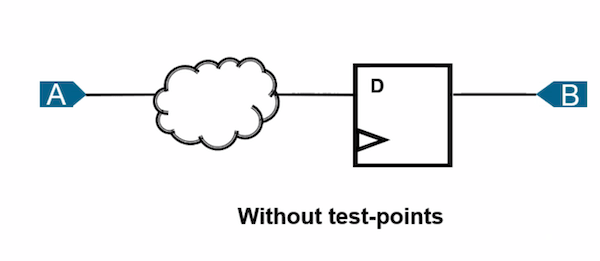

In the test world, it’s all about controllability and observability, and adding something called a Test Point makes it much, much easier to control and observe a low-coverage point in your logic. Consider the following cloud of logic feeding a D Flip-Flop (FF):

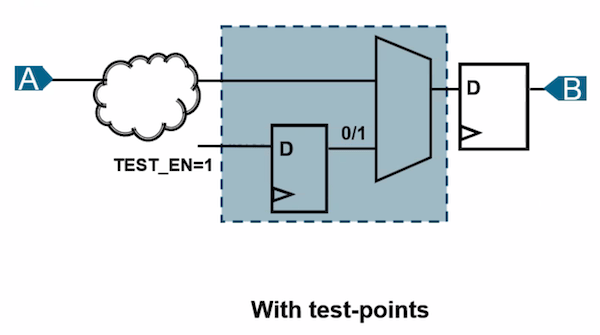

If the D input to the FF is difficult to control, or set, then we never observe a change in the output at point B. By adding a Test Point, we can now control the D input, thus improving the fault coverage:

Ideally, a test engineer wants to use Test Points that are compatible with existing scan compression, having minimal impact on Power/Performance/Area (PPA), and is easy to use.

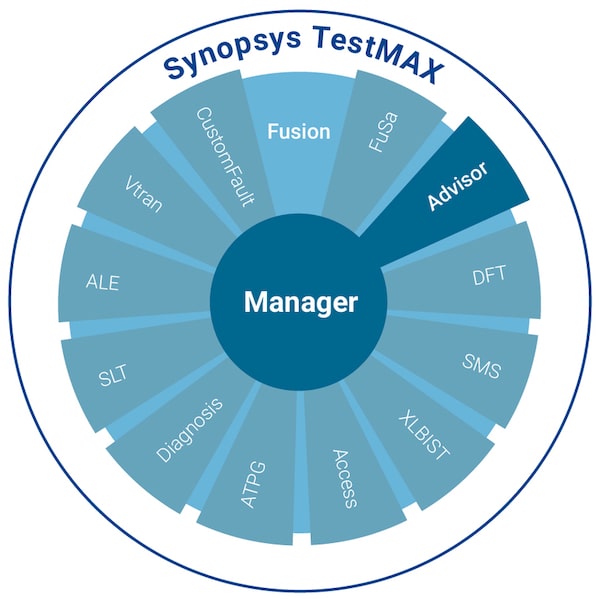
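To make “difficult to control” concrete, here is a minimal Python sketch using SCOAP combinational controllability (a classic textbook testability measure) alongside random-pattern signal probability. The article doesn’t say which metrics TestMAX Advisor uses internally, so treat this only as an illustration of the idea. It assumes the logic cloud is a hypothetical 16-input AND gate feeding the D pin, and models the Test Point as an OR gate whose extra input `tp` is driven from a scan cell (a so-called control-1 point).

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Ctrl:
    """SCOAP combinational controllability: cost to set a net to 0 (cc0) or 1 (cc1)."""
    cc0: int
    cc1: int


# A primary input or scan-cell output can be set directly, so both costs are 1.
SCAN_INPUT = Ctrl(cc0=1, cc1=1)


def and_gate(*ins: Ctrl) -> Ctrl:
    # Output 0 needs only the cheapest input at 0; output 1 needs every input at 1.
    return Ctrl(cc0=min(i.cc0 for i in ins) + 1, cc1=sum(i.cc1 for i in ins) + 1)


def or_gate(*ins: Ctrl) -> Ctrl:
    # Output 0 needs every input at 0; output 1 needs only the cheapest input at 1.
    return Ctrl(cc0=sum(i.cc0 for i in ins) + 1, cc1=min(i.cc1 for i in ins) + 1)


def p_and(*ps: float) -> float:
    """P(output = 1) for an AND gate, assuming independent inputs."""
    return math.prod(ps)


def p_or(*ps: float) -> float:
    """P(output = 1) for an OR gate, assuming independent inputs."""
    return 1.0 - math.prod(1.0 - p for p in ps)


N_INPUTS = 16  # hypothetical width of the logic cone feeding the FF's D pin

# Without a Test Point: D is a wide AND of the cone's inputs.
d_plain = and_gate(*[SCAN_INPUT] * N_INPUTS)
p_plain = p_and(*[0.5] * N_INPUTS)  # random patterns: each input is 1 half the time

# With a control-1 Test Point: D = OR(cone output, tp), tp driven from a scan cell.
d_tp = or_gate(d_plain, SCAN_INPUT)
p_tp = p_or(p_plain, 0.5)

print(f"no TP:   CC0={d_plain.cc0}  CC1={d_plain.cc1}  P(D=1)={p_plain:.2e}")
print(f"with TP: CC0={d_tp.cc0}  CC1={d_tp.cc1}  P(D=1)={p_tp:.3f}")
```

In this toy model the SCOAP cost of setting D to 1 drops from 17 to 2, and the chance that a random pattern sets D to 1 rises from about 1 in 65,536 to about 1 in 2. CC0 gets slightly worse because `tp` must also be driven to 0 to exercise the original cone, which is the kind of trade-off a test-point tool has to weigh.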

Synopsys TestMAX Advisor

I spoke with Robert Ruiz, Director of Product Marketing, Test Automation Products and Pawini Mahajan, Sr. Product Marketing Manager, Digital Design Group over a Zoom call to learn how the Synopsys TestMAX Advisor tool fits into an overall suite of test tools. Robert and I first met back in the 1990s when he worked at Sunrise Test Systems, and I was at Viewlogic, so he has a deep history in the test world. Pawini and I both worked at Intel, still the number one semiconductor company in the world by revenue.

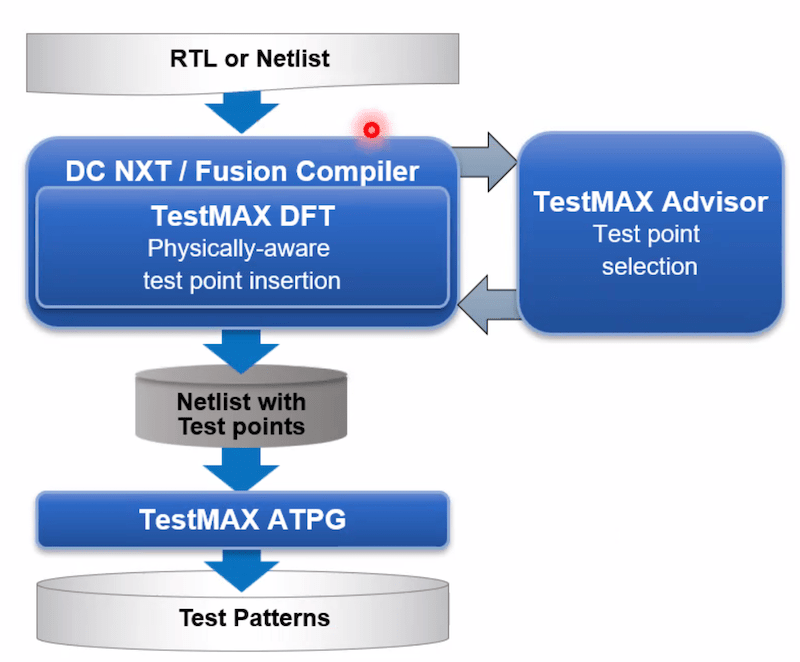

Here’s the tool sub-flow for TestMAX Advisor:

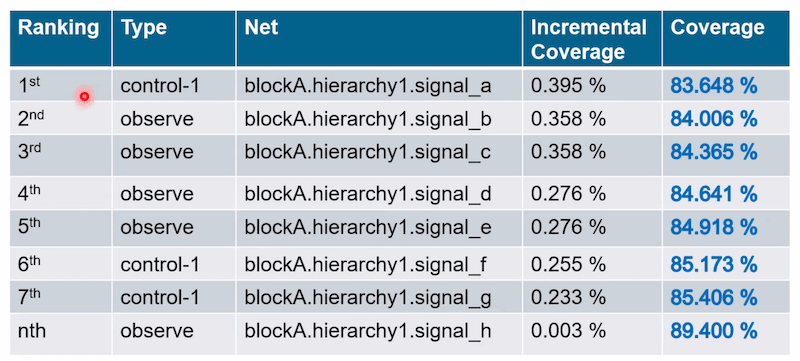

TestMAX Advisor analyzes and ranks all of the FFs in a design, then identifies which nets need added controllability or observability. A test engineer can then decide just how many Test Points to insert and see how much fault coverage will improve and how much shorter the test pattern set will be. The engineer can even set percentage coverage goals and let TestMAX Advisor add Test Points automatically to meet them.
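The “set a coverage goal, add Test Points until it is met” idea can be pictured as a coverage-versus-budget loop. The sketch below is a plain greedy set-cover heuristic, not TestMAX Advisor’s actual algorithm (which isn’t described here); the `Candidate` type, `select_test_points` function, net names, and fault IDs are all hypothetical. Each candidate lists the faults it is estimated to make detectable, and the loop keeps adding the candidate with the largest new gain until the goal or the Test Point budget is reached, recording coverage after each insertion like an incremental report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    net: str           # hypothetical net name
    kind: str          # "control" or "observe"
    covers: frozenset  # IDs of faults this Test Point is estimated to make detectable


def select_test_points(detected, total_faults, candidates, goal, max_points):
    """Greedy set cover: add the candidate with the most newly covered faults until
    the coverage goal or the Test Point budget is reached."""
    detected = set(detected)
    pool = list(candidates)
    report = []  # (net, kind, coverage after insertion), like an incremental table
    while len(detected) / total_faults < goal and len(report) < max_points and pool:
        best = max(pool, key=lambda c: len(c.covers - detected))
        gain = best.covers - detected
        if not gain:
            break  # no remaining candidate adds coverage
        detected |= gain
        pool.remove(best)
        report.append((best.net, best.kind, len(detected) / total_faults))
    return report


# Hypothetical example: 20 faults, 14 already detected by plain ATPG (70%).
candidates = [
    Candidate("u_alu/n101", "control", frozenset({14, 15, 16})),
    Candidate("u_lsu/n77", "observe", frozenset({16, 17})),
    Candidate("u_fpu/n12", "control", frozenset({18})),
    Candidate("u_dec/n5", "observe", frozenset({3, 4})),  # already covered: no gain
]
report = select_test_points(range(14), 20, candidates, goal=0.90, max_points=3)
for net, kind, cov in report:
    print(f"{net:<12} {kind:<8} {cov:6.1%}")
```

Greedy selection is a common choice for this kind of problem because picking an optimal subset of candidates is a set-cover problem, which is NP-hard. A production tool would also weigh timing, area, and (as described below) physical placement.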

A side note for users of other ATPG tools: yes, you can use TestMAX Advisor with your favorite ATPG tool.

You get to see the incremental fault coverage improvement by adding Test Points in a table format:

Test Implementation

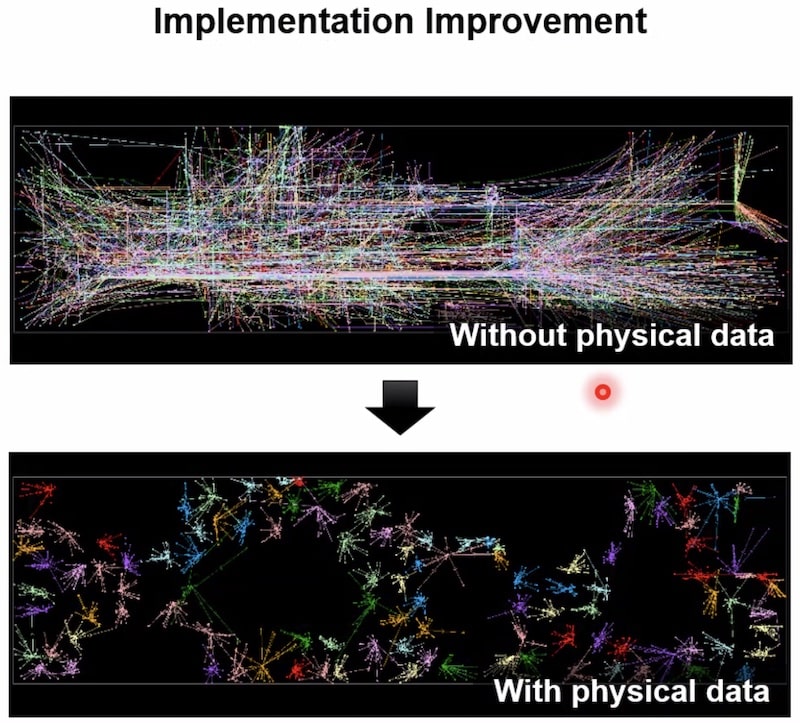

Adding Test Points increases routing congestion, so the developers made Test Point placement physically aware, which minimizes congestion and reduces the timing impact. Just look at the congestion map comparison below:

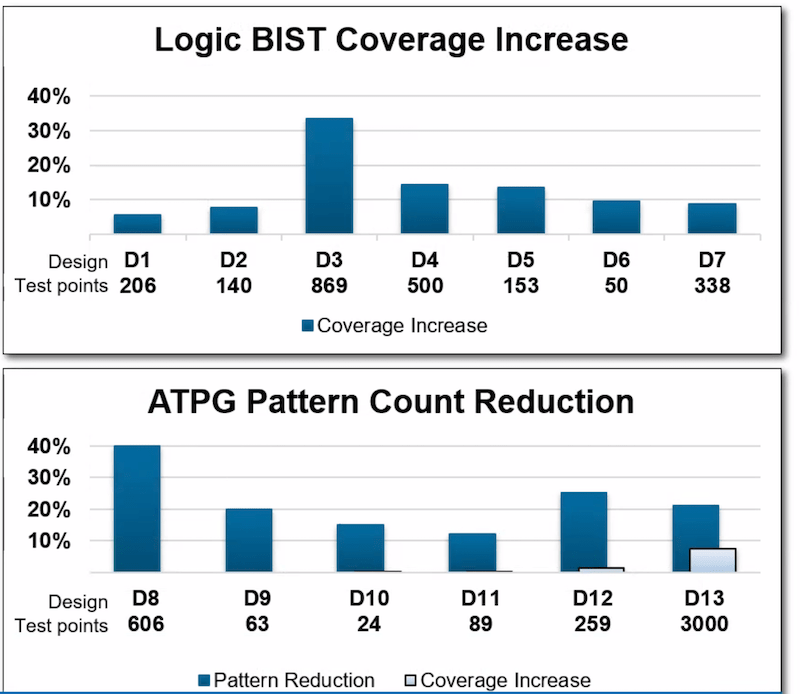

Synopsys provided seven examples showing fault coverage improvements of up to 30% and pattern count reductions of up to 40%.
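Why does a pattern count reduction matter so much for cost? A common first-order model of scan test time (an assumption here, ignoring capture cycles, tester overhead, and any change in chain length from inserted observe points) charges each pattern roughly one full shift of the longest scan chain, since unloading one pattern’s response overlaps with loading the next:

$$
T_{\text{test}} \approx \left(N_{\text{pat}} + 1\right)\frac{L_{\max}}{f_{\text{shift}}}
$$

where $N_{\text{pat}}$ is the pattern count, $L_{\max}$ is the longest (post-compression) scan chain length, and $f_{\text{shift}}$ is the shift clock frequency. With $L_{\max}$ and $f_{\text{shift}}$ unchanged, the best-case 40% pattern count reduction quoted above gives

$$
\frac{T'_{\text{test}}}{T_{\text{test}}} \approx \frac{0.6\,N_{\text{pat}} + 1}{N_{\text{pat}} + 1} \approx 0.6 \qquad (N_{\text{pat}} \gg 1),
$$

or roughly 40% less time on the tester for the scan portion of the test.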

Summary

DFT using scan and ATPG tools is the recommended way to achieve fault coverage goals, and when the shortest test times are important, you can consider adding Test Points to improve controllability and observability. EDA developers at Synopsys have built the features that make Test Point insertion an easy task, and the results so far look promising for reducing test implementation time and costs. TestMAX Advisor looks to be another worthy tool in your toolbox.

