Of late, it has become painfully obvious that the value of electronics is in the system. And since systems demand continuing improvement, increasing performance and decreasing cost (once partially guaranteed by semiconductor process advances) are now sought through algorithm advances: witness the Google TPU and custom fabrics for high-performance server designs. But designing algorithms in RTL would be a masochistic exercise. The right place to do this is in software, whether C/C++, MATLAB or similar platforms.

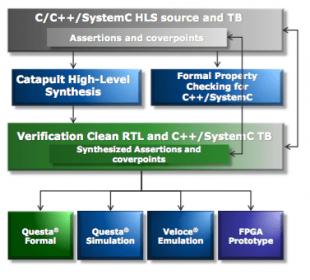

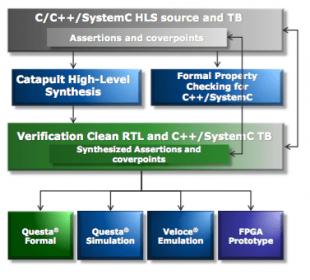

Another important change is the increasing use of software-based verification. Design verification now runs more of the software stack on emulators and prototyping platforms, in order to provide confidence and coverage across a range of use models. Together these factors mean that significant parts of design and overall SoC verification are increasingly centered on software rather than RTL. The Mentor Calypto group has recognized this shift and is building a solution to address both design and verification together at the high level (with appropriate re-verification at RTL).

The synthesis part of the story is, or should be, well known by now. Catapult may well be the most capable of the commercial HLS solutions because it can synthesize from both SystemC and C++. It also allows for untimed, loosely-timed or cycle-accurate models, so you can use timing-based constructs where needed but expand to full C++ for complex algorithm development. And naturally algorithm design, experimentation and synthesis at this level are more productive than at RTL.
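To make the untimed style concrete, here is a minimal sketch of the kind of plain C++ an algorithm designer might start from. It is an illustration only: `Fir4`, `kTaps` and the coefficient values are hypothetical names of my own, not anything from Catapult, and it uses standard integer types where an HLS flow would typically want bit-accurate ones.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Hypothetical 4-tap FIR filter, written as untimed C++.
// Nothing here says how many clock cycles a step takes; that is
// the kind of decision an HLS tool is expected to make later.
constexpr std::size_t kTaps = 4;

class Fir4 {
public:
    explicit Fir4(const std::array<std::int32_t, kTaps>& coeffs)
        : coeffs_(coeffs), delay_{} {}

    // Consume one input sample and return one filtered output sample.
    std::int32_t step(std::int32_t sample) {
        // Shift the delay line by one position (fixed loop bounds,
        // fixed-size storage, no dynamic allocation).
        for (std::size_t i = kTaps - 1; i > 0; --i) {
            delay_[i] = delay_[i - 1];
        }
        delay_[0] = sample;

        // Multiply-accumulate across all taps.
        std::int32_t acc = 0;
        for (std::size_t i = 0; i < kTaps; ++i) {
            acc += coeffs_[i] * delay_[i];
        }
        return acc;
    }

private:
    std::array<std::int32_t, kTaps> coeffs_;
    std::array<std::int32_t, kTaps> delay_;
};
```

Feeding a single impulse through a filter built with coefficients 1, 2, 3, 4 should return those coefficients one per call, which is a quick sanity check you can run in milliseconds on a laptop, long before any RTL exists.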

The verification part of the story is where this gets really interesting. If you know Calypto, you know they also have high-level property checking (non-temporal today). This is now embedded in the flow, meaning you can check for things like array-bounds errors, incomplete case statements and user-defined assertions while you are still working with the software model. And since dynamic verification on a software model running software-based tests runs thousands of times faster than any RTL-based testbench could hope to run, this is a much better place than RTL to do intensive testing across many realistic use-cases.
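For readers who don't live in C++, the three classes of problem mentioned above look roughly like this in a software model. This is a sketch under my own assumptions, with hypothetical names (`Op`, `read_lut`, `alu`, `kDepth`); it shows where such defects hide and how a user assertion states intent, not how the Catapult property checker itself works.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical opcode set for a tiny datapath.
enum class Op : std::uint8_t { Add, Sub, Pass };

constexpr std::size_t kDepth = 8;

// Array-bounds check: the user assertion documents the legal index
// range, so an out-of-range access is caught in the software model.
std::int32_t read_lut(const std::array<std::int32_t, kDepth>& lut,
                      std::size_t idx) {
    assert(idx < kDepth && "LUT index out of range");
    return lut[idx];
}

// Complete case statement: every enumerator is handled. Dropping one
// of these cases is the "incomplete case" defect, which would leave
// `result` at its default value for that opcode.
std::int32_t alu(Op op, std::int32_t a, std::int32_t b) {
    std::int32_t result = 0;
    switch (op) {
        case Op::Add:  result = a + b; break;
        case Op::Sub:  result = a - b; break;
        case Op::Pass: result = a;     break;
    }
    return result;
}
```

In an ordinary software build the `assert` fires only when a test happens to hit the bad index. The appeal of property checking is that it can look for such violations without depending on the test set.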

The Catapult verification flow also automatically runs verification on the generated RTL using the same high-level testbench and compares results between the two simulations, all tightly coupled to Mentor verification solutions. Further, C-based assertions and cover statements will be converted to (synthesizable) OVL or PSL equivalents in the generated RTL so they can be checked in RTL-based verification.
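The idea of reusing one testbench across two implementations and comparing results can be sketched in a few lines of plain C++. To be clear about the assumptions: in the real flow the second implementation is generated RTL running in a simulator, whereas here `scale_impl` is just a second C++ function standing in for it, and all names (`Model`, `scale_ref`, `scale_impl`, `count_mismatches`) are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <vector>

// Any implementation under comparison: one input word in, one word out.
using Model = std::function<std::uint32_t(std::uint32_t)>;

// Reference model: multiply by 10 (unsigned, wraps modulo 2^32).
std::uint32_t scale_ref(std::uint32_t x) { return x * 10u; }

// Stand-in for the generated implementation: the same function
// restructured as shift-and-add, as a synthesized datapath might be.
std::uint32_t scale_impl(std::uint32_t x) { return (x << 3) + (x << 1); }

// Drive both models with the same stimulus and count disagreements.
std::size_t count_mismatches(const Model& ref, const Model& dut,
                             const std::vector<std::uint32_t>& stimulus) {
    std::size_t mismatches = 0;
    for (std::uint32_t x : stimulus) {
        if (ref(x) != dut(x)) {
            ++mismatches;
        }
    }
    return mismatches;
}
```

Calling `count_mismatches(scale_ref, scale_impl, stimulus)` should report zero for any stimulus, since the two functions agree modulo 2^32. Swapping in a deliberately wrong implementation shows the comparison doing its job.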

This tight coupling gets you pretty close to RTL verification signoff without needing to lift a finger in RTL testbench generation and debug. Pretty close but not perfect, and Mentor freely admitted as much to me. Control logic added during synthesis isn’t exercised by the high-level testbench, so it isn’t tested in RTL verification either. Verification engineers have to add their own tests for these components, e.g. stall and reset tests and unreachability checks, a task which seems not too onerous today, at least based on customer experiences. I wouldn’t be surprised to see Mentor patch some of these automation holes in future releases.

All nice, but customer results are the real test. NVIDIA, on a 10M-gate video decoder for Tegra X1, were able to improve design productivity by 50% and cut verification cost by 80% (from an estimated 1000 CPUs for 3 months to 14 CPUs for 2 weeks). In a later spec revision, they were able to re-optimize the IP from 20nm/500MHz to 28nm/800MHz in 3 days!

Google built a similar video decoder. They were able to get from start of design to verified RTL twice as fast as they had projected without this flow. And IP modifications were 4X faster. If Google thinks this is a good idea, you might want to ponder the way you are doing it today, even if you believe you get better results. In the systems world (aka the majority of our volume market), schedule is generally more important than the highest-possible performance result.

All of which makes me wonder what role RTL will have to play in the future, at least at the system level. Does RTL become the new gate-level, good for emulation and ECOs, but otherwise destined to remain unseen? You can learn more about Catapult advances HERE.
