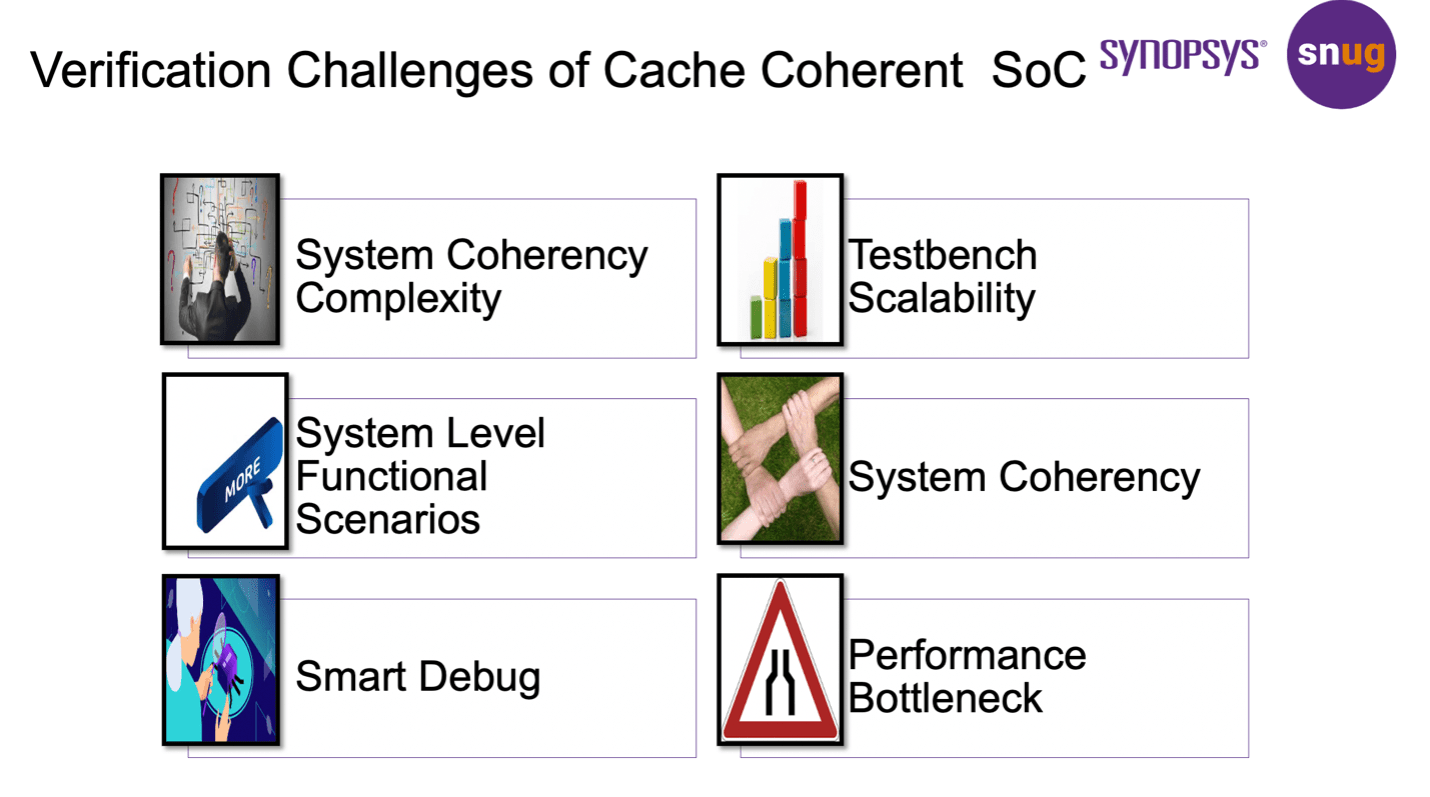

It would be nice if there were a pre-packaged set of assertions that could formally check all aspects of cache coherence in an SoC. Formal checks do in fact do a very nice job on the control aspects of a coherent network, but that covers only one part of the cache coherence verification task. Dataflow checks are just as important. That is where many things can go wrong, such as unintended reads of stale data, and where performance bottlenecks will inevitably appear.

The need for directed test

In other words, directed test verification is unavoidable, whether through simulation or emulation. So what? You have to create testbenches for lots of other verification objectives. This is just another testbench, right? But directed coherence testing can get very complicated very fast. First consider the most basic test: a read from a single processor in a single cluster, through the coherent interconnect. Does the cache in the interconnect behave correctly? It should return the value in the cache if found there, and otherwise fall through to the memory controller to collect that value from off-chip memory. Repeat for every coherent master on every coherent interconnect.
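The expected behavior in that basic test can be written down as a small reference model. The following is only a sketch in Python, not part of any Synopsys flow: the class name `ReadThroughCache`, the dictionary standing in for off-chip memory, and the addresses are all assumptions made for illustration.

```python
class ReadThroughCache:
    """Toy reference model (hypothetical): hit returns the cached value,
    miss falls through to memory and fills the cache."""

    def __init__(self, memory):
        self.memory = memory   # dict address -> value, stands in for off-chip memory
        self.lines = {}        # cached address -> value
        self.hits = 0
        self.misses = 0

    def read(self, addr):
        if addr in self.lines:
            # Cache hit: return the value held in the interconnect cache.
            self.hits += 1
            return self.lines[addr]
        # Cache miss: fetch from memory, then keep a copy in the cache.
        self.misses += 1
        value = self.memory[addr]
        self.lines[addr] = value
        return value


memory = {0x1000: 0xAB, 0x2000: 0xCD}
cache = ReadThroughCache(memory)
first = cache.read(0x1000)    # miss, fetched from memory
second = cache.read(0x1000)   # hit, served from the cache
```

A directed test would compare what the DUT returns against a model like this for each coherent master in turn.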

Now get a bit more sophisticated. Two masters, maybe two CPUs, are reading and writing at the same time, except they’re doing so on two separate coherent networks (which must be mutually coherent). CPU-A writes a value to a memory location in cache in its network, then CPU-B wants to read the equivalent location from cache in its network. Snooping should detect the change and ensure that CPU-B picks up the correct value. Does it? Repeat for every possible such sequence and every pairwise combination of who goes first and who goes second (or even both at the same time). Then permute in the slaves that also need to access memory.
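To make the CPU-A/CPU-B scenario concrete, here is a minimal Python sketch of two networks sharing one memory, with snooping modeled as a simple invalidate on write. Everything here is an assumption for illustration (the `CoherentNetwork` class, write-through behavior, the address `0x40`); real coherence protocols track far more state than this.

```python
class CoherentNetwork:
    """Hypothetical toy network: one cache, write-through, invalidate-on-snoop."""

    def __init__(self, name, memory):
        self.name = name
        self.memory = memory   # shared dict address -> value
        self.cache = {}
        self.peers = []        # other networks that snoop our writes

    def write(self, addr, value):
        self.cache[addr] = value
        self.memory[addr] = value          # write-through, for simplicity
        for peer in self.peers:
            peer.snoop_invalidate(addr)    # tell peers their copy is out of date

    def snoop_invalidate(self, addr):
        self.cache.pop(addr, None)

    def read(self, addr):
        if addr not in self.cache:
            self.cache[addr] = self.memory[addr]
        return self.cache[addr]


def run_write_then_read(snooping_enabled):
    """CPU-B caches a value, CPU-A overwrites it, CPU-B reads again."""
    memory = {0x40: 1}
    net_a = CoherentNetwork("A", memory)
    net_b = CoherentNetwork("B", memory)
    if snooping_enabled:
        net_a.peers.append(net_b)
        net_b.peers.append(net_a)
    net_b.read(0x40)               # CPU-B now holds the old value
    net_a.write(0x40, 2)           # CPU-A updates the location
    observed = net_b.read(0x40)    # what CPU-B actually sees
    expected = memory[0x40]        # what a coherent system must return
    return observed, expected


good = run_write_then_read(snooping_enabled=True)    # (2, 2)
stale = run_write_then_read(snooping_enabled=False)  # (1, 2): stale read
```

With snooping disabled the second read returns the old value, which is exactly the stale-data failure a directed test is trying to expose.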

Automating test generation

The number of combinations here could spin out of control very fast, especially when you may have more than 1000 interfaces on the network, which is not uncommon in datacenter SoCs. This needs thoughtful planning. Use VIPs for most blocks, keeping as RTL only the ones you want to include in a given test. That avoids confusing interactions between bugs in multiple blocks, and it runs faster. Avoid having to test all N²-plus combinations by working to a carefully considered plan to meet coverage.
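A quick back-of-the-envelope count shows why. The sketch below is an illustration under stated assumptions, not a figure from the presentation: it counts only pairs of interfaces, treats "A then B" and "B then A" as distinct, and adds one "both at once" case per pair. It ignores addresses, transaction types and longer sequences, all of which multiply the total further.

```python
import math


def pairwise_scenarios(n_interfaces):
    """Count who-goes-first orderings plus simultaneous cases for every pair."""
    ordered = math.perm(n_interfaces, 2)       # A then B, and B then A
    simultaneous = math.comb(n_interfaces, 2)  # A and B at the same time
    return ordered + simultaneous


total = pairwise_scenarios(1000)   # 999000 + 499500 = 1498500
```

Even under these generous simplifications, 1000 interfaces give roughly 1.5 million pairwise scenarios, so exhaustive enumeration is not a realistic plan.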

This also means you need a way to automatically generate a series of tests in which first only blocks A, B and C are RTL and everything else is VIP, then B, C and D are RTL and everything else is VIP, and so on. For each test you’d like to be able to draw on pre-defined coherency test sequences, either at the simple test level or at the system level, along with monitors to check across interconnect ports, all ready to apply.
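The "A, B, C as RTL, then B, C, D as RTL" pattern amounts to sliding a window across the block list. A minimal Python sketch of that idea follows; the function name `rolling_rtl_configs` and the block names are hypothetical, and this says nothing about how the Synopsys tooling actually represents or generates its configurations.

```python
def rolling_rtl_configs(blocks, window):
    """Yield one config per window position: blocks inside the window are
    RTL, everything else is replaced by VIP."""
    if not 1 <= window <= len(blocks):
        raise ValueError("window must be between 1 and the number of blocks")
    configs = []
    for start in range(len(blocks) - window + 1):
        rtl = set(blocks[start:start + window])
        configs.append({b: ("RTL" if b in rtl else "VIP") for b in blocks})
    return configs


configs = rolling_rtl_configs(["A", "B", "C", "D", "E"], window=3)
for cfg in configs:
    rtl_blocks = [name for name, kind in cfg.items() if kind == "RTL"]
    print("RTL:", rtl_blocks)
```

Each resulting configuration would then be paired with the pre-defined test sequences and monitors. A real plan would likely choose the RTL groupings by coverage goals rather than by simple adjacency.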

All of this is provided through the VC SoC AutoTestbench, which builds tests starting from a DUT IP-XACT model (or Verdi KDB) and VIP IP-XACT models. These can then feed into the SoC Interconnect Workbench, giving you an automated workflow to run all those zillions of tests.

Coverage can be monitored through a verification plan you can define in Verdi. Debug you handle through Verdi Protocol Analyzer, which offers a protocol view, a transaction view and some pretty sophisticated filtering to isolate what is happening in transactions and the resulting memory values. This is where you would pick up those instances (hopefully few) of reading a stale value.

Performance testing

The last big verification objective is performance. It’s nice that your system works well when unstressed, but what happens when you’re running at speed and there’s a lot of traffic churning through those coherent networks? Here Synopsys provides a capability called VC VIP Auto Performance, which will generate traffic following the Arm Adaptive Traffic Profiles (ATP) specification. (You need to create a test profile as input to this tool.) Subsequently you can analyze for latency and bandwidth problems in Verdi Performance Analyzer.
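As a rough picture of what latency and bandwidth analysis means at the transaction level, here is a small Python sketch. It is not the Verdi Performance Analyzer interface: the `Transaction` record, its field names and the sample numbers are all made up for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Transaction:
    """Hypothetical record of one completed transaction."""
    start_ns: float
    end_ns: float
    num_bytes: int


def summarize(transactions):
    """Return mean latency, worst latency and achieved bandwidth."""
    latencies = [t.end_ns - t.start_ns for t in transactions]
    window_ns = max(t.end_ns for t in transactions) - min(t.start_ns for t in transactions)
    total_bytes = sum(t.num_bytes for t in transactions)
    return {
        "mean_latency_ns": mean(latencies),
        "max_latency_ns": max(latencies),
        # bytes per nanosecond is numerically equal to GB/s
        "bandwidth_gb_per_s": total_bytes / window_ns,
    }


# Illustrative sample data only.
sample = [
    Transaction(start_ns=0, end_ns=100, num_bytes=64),
    Transaction(start_ns=50, end_ns=250, num_bytes=64),
    Transaction(start_ns=200, end_ns=300, num_bytes=64),
]
report = summarize(sample)
```

Comparing numbers like these between an unstressed run and a run under generated traffic is one simple way to see where a bottleneck starts to bite.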

Altogether this is a very comprehensive solution for directed cache coherency testing, especially at the system level. Satyapriya Acharya (Sr. AE Manager at Synopsys) has created a recorded presentation on this topic, with more detail than I have provided. He wraps up with a discussion of how quickly this testing can be mapped over to ZeBu emulation, delivering a 10,000x performance speedup.
