Mentor just released a white paper on this topic which, I confess, has taxed my ability to blog about it. It’s not that the white paper is not worthy – I’m sure it is. I’m less sure that I’m worthy to blog on such a detailed technical paper. But I’m always up for a challenge, so let’s see what I can make of this, extracting a quick and not very technical read from very technical source material.

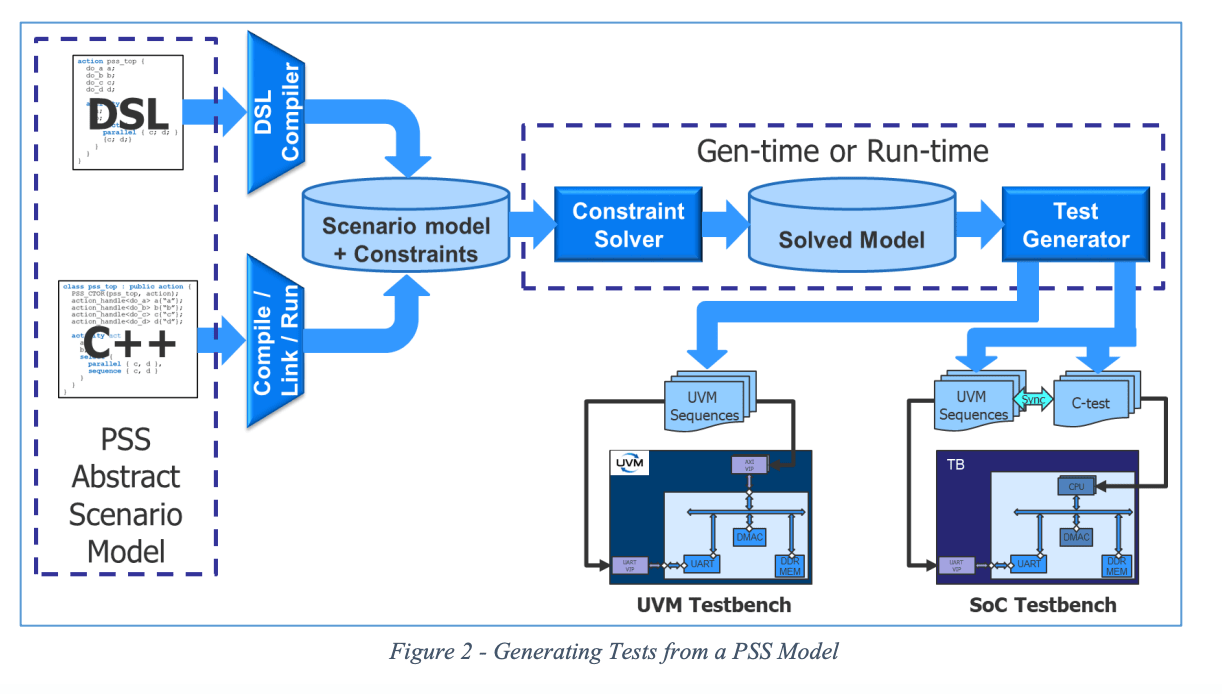

First, a quick recap on PSS (the Portable Stimulus Standard). This is a method to define a test (more properly a family of tests) at what we’d generally consider a system level, based on a declarative definition of what we want to accomplish in the test rather than how it will be accomplished. This method provides (in principle) portability of tests between verification levels (IP, subsystem, system) and verification platforms (simulation, emulation, virtual platform, etc.).

The devil is in the details of how you convert those high-level test representations into something that can run at one of those levels, on one of those platforms. This stage in PSS is called test realization and is implemented in exec blocks using procedure calls or test templates. As I understand it, this is where it gets ugly – in contrast to the beauty of those higher-level declarative descriptions.

The author (Matthew Ballance – product engineer and PSS technologist at Mentor) compares and contrasts the template and procedural interface (PI) approaches and comes down on the side of the PI for flexibility, ease of management and better up-front error-checking. He provides a number of examples, beyond my ability to comment on, for a memory-to-memory DMA transfer, showing the basics and how you might handle environment and event management considerations.
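To make the error-checking point concrete for myself, here is a minimal sketch in plain Python. It is not PSS and not taken from the paper. Python's `string.Template` stands in for a template-style realization, an ordinary function stands in for a procedural-interface call, and the names `DMA_TEMPLATE`, `realize_with_template` and `dma_mem2mem` are all hypothetical.

```python
from string import Template

# Hypothetical template-style realization: values are pasted into text.
DMA_TEMPLATE = Template("dma_mem2mem($src, $dst, $size);")


def realize_with_template(params: dict) -> str:
    # safe_substitute leaves unknown placeholders untouched, so a misspelled
    # key is not reported here. The mistake only shows up later, when the
    # generated text is compiled or run.
    return DMA_TEMPLATE.safe_substitute(params)


def dma_mem2mem(src: int, dst: int, size: int) -> str:
    """Hypothetical procedural-interface call with a fixed signature."""
    if size <= 0:
        raise ValueError("size must be positive")
    return f"copy {size} bytes from {src:#x} to {dst:#x}"


if __name__ == "__main__":
    # The typo "dts" slips through the template and leaves "$dst" in the text.
    print(realize_with_template({"src": "0x1000", "dts": "0x2000", "size": "64"}))

    # The same typo in a procedural call is rejected at the call itself.
    try:
        dma_mem2mem(src=0x1000, dts=0x2000, size=64)
    except TypeError as err:
        print("rejected:", err)
```

In this toy version the template quietly emits broken text, while the call with a declared signature fails immediately. I assume that is roughly the flavor of "up-front" checking the paper has in mind, though the real mechanisms in a PSS tool will differ.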

He then switches back to a topic where I again feel solid ground beneath my feet: reuse requirements for test realization. On reuse he emphasizes composability of realization interfaces, which means they must use a common API to manage concurrency and events. I would imagine that, in practice, you would also want a common API for environment data. He talks about supporting multiple instances of realization (since you can instantiate IPs more than once), meaning that each instance needs to maintain its own context data. He also makes a point about addressability which I confess sailed right over my head.
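Here is how I picture the multiple-instance and common-API points, again as a rough Python sketch rather than anything from the paper. `EventHub`, `DmaContext`, the `base_addr` field and the event naming scheme are all hypothetical names of my own. The only ideas borrowed from the paper are that each instance keeps its own context and that all instances go through one shared event API.

```python
from dataclasses import dataclass


class EventHub:
    """Hypothetical shared event API used by every realization instance."""

    def __init__(self) -> None:
        self._pending: set[str] = set()

    def notify(self, name: str) -> None:
        # Record that an event has been raised.
        self._pending.add(name)

    def consume(self, name: str) -> bool:
        # Return True and clear the event if it was raised, else False.
        if name in self._pending:
            self._pending.remove(name)
            return True
        return False


@dataclass
class DmaContext:
    """Per-instance state: one object for each DMA instantiated in the SoC."""

    name: str
    base_addr: int  # hypothetical example of per-instance data
    events: EventHub
    transfers: int = 0

    def mem2mem(self, src: int, dst: int, size: int) -> None:
        # A real realization would program the DMA registers here; this
        # sketch only updates instance state and raises a completion event
        # whose name is scoped by the instance.
        self.transfers += 1
        self.events.notify(f"{self.name}.done")


if __name__ == "__main__":
    hub = EventHub()
    dma0 = DmaContext("dma0", 0x4000_0000, hub)
    dma1 = DmaContext("dma1", 0x4001_0000, hub)
    dma0.mem2mem(0x1000, 0x2000, 64)
    print(dma0.transfers, dma1.transfers)
    print(hub.consume("dma0.done"), hub.consume("dma1.done"))
```

Because both instances share one `EventHub` but keep separate counters and event names, activity on `dma0` does not leak into `dma1`. That separation is what I take "each instance needs to maintain its own context data" to mean in practice.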

For me, these points raise a question. How is this all going to work in a design with multiple IPs from multiple sources? It seems like we really should have a standardized realization API, allowing all these factors to be managed in a uniform way no matter who provides the IPs you happen to use in your SoC. I’m not aware of such a standard, and without one I struggle to see how PSS reuse could avoid some redevelopment or modification of the realization level for each design. I’m looking forward to having PSS experts set me straight!

If they can’t, I see a real problem, because PSS has a lot to offer. In my Innovation in Verification series, we’ve already seen a couple of instances where PSS could significantly help with new kinds of coverage or improved security testing.

You can read the Mentor paper HERE.
