This is another installment covering TSMC’s very popular Open Innovation Platform event (OIP), held on August 25. The event features a diverse and high-impact series of presentations describing how the members of TSMC’s vast ecosystem collaborate with each other and with TSMC. This presentation is from Alchip, presented by James Huang,… Read More

Artificial Intelligence

Cerebras and Analog Bits at TSMC OIP – Collaboration on the Largest and Most Powerful AI Chip in the World

This is another installment covering TSMC’s very popular Open Innovation Platform event (OIP), held on August 25. The event features a diverse and high-impact series of presentations describing how the members of TSMC’s vast ecosystem collaborate with each other and with TSMC. The topic at hand was full of superlatives, which isn’t surprising… Read More

Lip-Bu Hyperscaler Cast Kicks off CadenceLIVE

Lip-Bu (Cadence CEO) sure knows how to draw a crowd. For the opening keynote at CadenceLIVE (Americas) this year, he reprised his data-centric revolution pitch, followed by a talk from a VP at AWS on bending the curve in chip development. That was followed in turn by a talk from a Facebook director of strategy and technology on aspects of… Read More

Cadence Increases Verification Efficiency up to 5X with Xcelium ML

SoC verification has always been an interesting topic for me. Having worked at companies like Zycad that offered hardware accelerators for logic and fault simulation, the concept of reducing the time needed to verify a complex SoC has occupied a lot of my thoughts. The bar we always tried to clear was actually simple to articulate… Read More

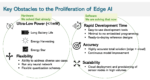

New Processor Helps Move Inference to the Edge

Many of the most compelling applications for Artificial Intelligence (AI) and Machine Learning (ML) are found on mobile devices, and the market size in that arena makes it clear that this is an attractive segment. Because of this, we can expect to see many consumer devices having low power requirements at the edge with… Read More

All-In-One Extreme Edge with Full Software Flow

What do you do next when you’ve already introduced an all-in-one extreme edge device, supporting AI and capable of running at ultra-low power, even harvested power? You add a software flow to support solution development and connectivity to the major clouds. For Eta Compute, that is their TENSAI flow.

The vision of a trillion IoT… Read More

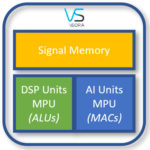

Combining AI and Advanced Signal Processing on the Same Device

A lot has been written and even more spoken about artificial intelligence (AI) and its uses. A case in point is the use of AI to make autonomous vehicles (AV) a reality. But, surprisingly, little is said about pre-processing the inputs feeding AI algorithms. Understanding how input signals are generated, pre-processed and used… Read More

CEO Interview: Ljubisa Bajic of Tenstorrent

Ljubisa Bajic is the CEO of Tenstorrent, a company he co-founded in 2016 to bring to market full-stack AI compute solutions with a compelling new approach to addressing the exponentially growing complexity of AI models.

What is Tenstorrent?

Tenstorrent is a next-generation computing company that is bringing to market the first… Read More

DAC Panel – Artificial Intelligence Comes to CAD: Where’s the Data?

Artificial Intelligence (AI) and Machine Learning (ML) are becoming more and more commonplace in our world. We have Siri, Alexa and Google Assistant that understand our voice commands. Vision systems that recognize objects are used for facial recognition, autonomous driving, medical, geographical and many other applications.… Read More

Designing AI Accelerators with Innovative FinFET and FD-SOI Solutions

I had the pleasure of spending time with Hiren Majmudar in preparation for the upcoming AI Accelerators webinar. As far as webinars go, this will be one of the better ones we have done. Hiren has gained deep experience in both semiconductors and EDA during his lengthy career at Intel and now with a pure-play foundry. He is intelligent, personable,… Read More

TSMC Process Simplification for Advanced Nodes