I believe I asked this question a year or two ago and answered it for the absolute bleeding edge of datacenter performance – Google TPU and the like. Those hyperscalars (Google, Amazon, Microsoft, Baidu, Alibaba, etc) who want to do on-the-fly recognition in pictures so they can tag friends in photos, do almost real-time machine translation, and many other applications. But who else cares? I’ve covered a couple of Mentor events on using Catapult HLS to build custom accelerators. Fascinating stuff and good insights to the methods and benefits, but I wanted to know more about what kind of applications are using this technology.

I talked to the Catapult group to get some answers: Mike Fingeroff (technologist for Catapult), Russ Klein (Product Marketing for Catapult) and Anoop Saha (Senior manager, strategy and Biz Dev for machine learning and 5G).

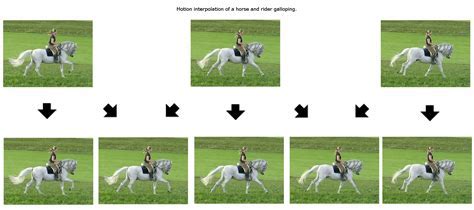

Video Interpolation

Anoop talked about one very cool application – video frame interpolation. You take a video at some relatively low number of frames per second, say 20 fps, but maybe you want to play it back on a 60fps display. Maybe you also want to replay in slow-motion. In either case you have gaps between frames which must be filled in somehow if you don’t want a jumpy replay. The simple answer is to average between frames. But that’s pretty low quality – it looks flickery and unnatural. A much better approach today is AI-based. Train a system with (many) before and after frames to learn how to much more smoothly and more naturally interpolate. The results can be quite stunning.

5G

Anoop added that generally, any case where you have to respond to serious upstream bandwidth and be able to make near real-time decisions to influence downstream behavior, you’re going to need custom solutions to meet that kind of performance. For example, Qualcomm talks about how AI in the 5G network will help with user localization, efficient scheduling and RRU utilization, self-organizing networks and more intelligent security, much of which demands fast response to high volume loads.

Video doorbell

Russ talked about his Ring doorbell. He doesn’t want the doorbell to go off at 3am because it detected a cat nearby. He wants accurate detection at a good inference rate, but it has to be very low power because the doorbell may be running on a battery. I could imagine a similar point being made for an intelligent security system. The movie trope of detectives fast forwarding through hours of CCTV video may soon be over. A remote camera shouldn’t upload video unless it sees something significant, because uploads burn power at the camera and because who wants to scroll through hours of nothing interesting happening?

The advantage of HLS for custom AI accelerators

Fair points, but why not run this stuff on a standard AI accelerator? The Catapult team told me that their customers still see enough opportunity in the rapidly evolving range of possible AI architectures to justify differentiation in power, performance and cost through custom solutions. AI accelerators haven’t yet boiled down to a few standard solutions that will satisfy all needs. Perhaps they never will. A custom solution is even more attractive when you can prototype a system in an FPGA, refine it and prove it out, before switching to an ASIC implementation when the volume opportunity becomes clear.

Russ wrapped up by adding that algorithms are the starting point point for all these evolving AI solutions, which make them natural fit with HLS. Put that together with HLS ability to incrementally refine implementation architecture to squeeze out the best PPA (as Russ showed in an earlier webinar I blogged). Further add HLS ability to support system verification in C against very large data sets (video, 5G streams, etc). Put that all together and Russ sees the combination continuing to reinforce interest in the Catapult solution. Difficult to argue with that.

You can learn more about Catapult HERE.

Share this post via:

Comments

One Reply to “Why Go Custom in AI Accelerators, Revisited”

You must register or log in to view/post comments.