Yield: no topic is more important to the semiconductor ecosystem. After spending a significant part of my career on Design for Manufacturability (DFM) and Design for Yield (DFY), I’m seriously offended when semiconductor professionals make false and misleading statements that negatively affect the industry that supports us.

Continue reading “TSMC 28nm Yield Explained!”

The 2012 International Conference on ENGINEERING OF RECONFIGURABLE SYSTEMS AND ALGORITHMS – ERSA’12

July 16-19, 2012, Monte Carlo Resort, Las Vegas, Nevada, USA

ERSA-News: ERSA-NEWS ERSA’12 Website: http://ersaconf.org/ersa12

FPGAs connect users over TV whitespace

New embedded computing standards always take a while to get traction, and a burning question for innovators is what to do in the period between concept and acceptance. Sometimes, new ideas come when commercial silicon changes direction.

CEO Forecast Panel

This year’s CEO forecast panel was held at Silicon Valley Bank. Bankers live better than verification engineers, as if you didn’t know, based on the quality of the wine they were serving compared to DVCon.

This year the panelists were Ed Cheng from Gradient, Lip-Bu, Aart and Wally (and if you don’t know who they are you haven’t been paying attention) and Simon Segars of ARM (not their CEO, of course). Ed Sperling moderated. Somebody had managed to dig up the fact that at the start of his career he’d been a crime reporter, so that made sure that we only got truthful answers all evening!

The format was completely different from prior years. Instead of each CEO getting up and doing a presentation, various questions were discussed. The CEOs had been polled beforehand on their answers and then EDAC members (I’m not sure how many or who, it was a bit unclear) had also been polled. So one of the interesting things was to focus on areas where the CEOs differed from the man-in-the-street. And by ‘man in the street’ I mean design engineers. You’ll get some pretty odd looks even in Silicon Valley asking a random person in the street when stacking die will go mainstream.

Q1: when will stacking die become mainstream? CEOs were more conservative than their customers, with CEOs going for 2015-2016 and customers for 2013. Although as Ed Cheng pointed out, what does mainstream mean? There are stacked die in most cameras, in most DRAM assemblies and so on already. Everyone agreed that memory on processor, and 2.5D interposer would arrive long before true 3D (meaning punching TSVs through active area all over the die).

Q2: what is the most difficult challenge? CEOs went with integration, but their customers picked software, time-to-market, power and cost. Typing this now I’m not sure if the CEOs meant integration of their tools or integration of IP etc on chips. Certainly in the big EDA companies, integrating tools to work smoothly together now that they have to look forward and back up and down the tool chain is a major challenge.

Q3: what is the most prevalent concern for EDA customers? Everyone picked power, so at least the CEOs understand something about their customers. The CEOs also picked performance but their customers were more concerned with area/cost. Power is an issue right from the architectural level where people are looking for 5-10X reduction (hmm, that’s going to be tough) all the way down to the process where FinFETs are coming, soon if you are Intel, and in a couple of years if you are not.

Q4: what technology challenge will spur the next growth in EDA? Survey says… IP reuse and integration for the CEOs, and hardware/software co-design for the customers. Wally pointed out, as he has several times before, the surprising fact that all the growth in EDA, all of it, comes from new technologies. Something new comes along like place and route, grows fast and then plateaus for a decade while something new builds growth on that base.

Q5: what will EDA look like in 5 years? CEOs picked the same number of major companies but defined differently. Customers predict more startups and more consolidation. Wally noted that the top 3 EDA companies have made up 75% of EDA revenue for 40 years and that will continue although which 3 companies may change (I guess Wally can take pride in being the only member of the DMV, Daisy, Mentor, Valid that survived). Lip-Bu said that some VCs are starting to come back to EDA. I have to say that any companies I’ve been involved with have not seen any signs of this (my feeling is that EDA companies don’t need enough money to be interesting to most funds, and the exits are too low).

Q6: where will the majority of leading edge designs be done in 5 years? EDA CEOs all picked North America, but about 30% of customers picked China. Everyone agreed that on a 10 year timescale, things are a lot less certain. Aart said that a country (think China) goes through 3 stages: competing on cost, competing on competence and finally competing on creativity. China is moving from the cost to the competence era at the moment, I guess.

Q7: who will drive the industry in 5 years? Everyone said system/software companies, but the CEOs also had some love for fabless companies and the customers for foundries and IDMs. To some extent the answer is ‘all of the above’ of course but you can’t ignore system companies, Apple in particular, due to scale. Apple increased its revenue last year by roughly Intel’s revenue. It is an unequal battle.

Q8: will SoCs rely on more cores in a single processor or more processors in 5 years? Pretty much everyone reckoned both, more cores and more processors. The big challenge here is the dreaded dark silicon. We can put the cores on there but can we power them all up?

There were a few questions from the audience. Peggy Aycinena asked, since this was a forecast panel, what the forecast for 2012 was. All the CEOs had given guidance in recent conference calls: Synopsys and Mentor predicted 8.4% and Cadence 8.8%.

Farm Management

Every so often I come across a new company in EDA or one of its neighboring domains, new to me anyway, and new to SemiWiki. One such company is RunTime Design Automation (RTDA). They provide a suite of tools for managing server farms (or internal clouds which seems to be the trendy buzzword du jour). Running a few EDA scripts on a few servers is something that is not too hard to do, but when you are looking at running tens of thousands of jobs on thousands of servers, you have a whole new set of problems. Servers crash or hang. Tools run out of licenses. Jobs depend on each other in a complicated tree. These are the problems that RTDA addresses.

When I was at Ambit we had a Q/A farm of 40 Sun workstations and 20 HP workstations, an unusually large investment at the time. Now this is a trivially small farm in an era when data-centers may house 100,000 servers and literally millions of jobs a day need to be scheduled.

RTDA have four products:

- LicenseMonitor

- NetworkComputer

- FlowTracer

- WorkloadAnalyzer

The simplest product is LicenseMonitor. It pulls license usage data from the license servers used by EDA (and other) tools, such as FLEXlm. This gives a summary of what license usage really is so that a company does not end up either having too few licenses and thus wasting time when running jobs, or having too many licenses and wasting money. It also interfaces with NetworkComputer to ensure jobs are not scheduled until licenses are available.

NetworkComputer is a high-performance job scheduler that spreads a workload over a large server farm. It is similar to the well-known LSF but more powerful and better integrated with EDA license management. It has a GUI that allows a user to keep track of thousands of jobs, which are color coded as they become runnable, start to run, crash, complete and so forth. It can handle millions of jobs on thousands of processors. It can push utilization of, for example, simulation licenses very close to 100%.

FlowTracer is a much more sophisticated tool, like the Unix make command, that manages complex design flows, handling all the dependencies, errors and so on. It handles the entire design flow, giving visibility into what is happening and automatically updating dependencies intelligently.

Finally, WorkloadAnalyzer is a server farm simulator that allows efficient compute farm planning, answering questions such as what would happen if additional servers or additional licenses were purchased. It can be used daily for planning or annually to provide data for license renegotiation. It can take information from NetworkComputer (or its competitors) and analyze how that workload would run under different circumstances and thus make it easier to optimize the investment.
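To make the combination of job dependencies and limited licenses concrete, here is a minimal Python sketch of a license-aware scheduler. It is not RTDA's implementation or API: the function name `schedule`, the job names and the `sim` license feature are all made up for illustration, and real schedulers work with job durations and priorities rather than simple "waves".

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def schedule(jobs, deps, licenses):
    """Group jobs into waves that respect dependencies and license seats.

    jobs:     dict of job name -> license feature it needs (None if no license)
    deps:     dict of job name -> set of jobs that must finish first
    licenses: dict of license feature -> number of seats available
    All names are hypothetical; this only illustrates the idea.
    """
    sorter = TopologicalSorter({job: deps.get(job, set()) for job in jobs})
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waiting = []      # jobs whose dependencies are met but have not run yet
    waves = []
    while sorter.is_active() or waiting:
        waiting.extend(sorter.get_ready())
        free = dict(licenses)  # every seat is free at the start of a wave
        wave, held = [], []
        for job in sorted(waiting):
            feature = jobs[job]
            if feature is None or free.get(feature, 0) > 0:
                if feature is not None:
                    free[feature] -= 1  # check out one seat
                wave.append(job)
            else:
                held.append(job)  # runnable, but no seat left in this wave
        if not wave:
            raise RuntimeError(f"no license seats at all for: {held}")
        waves.append(wave)
        sorter.done(*wave)  # unblocks jobs that depend on this wave
        waiting = held
    return waves


# Three simulations depend on one compile step but share two "sim" seats.
jobs = {"compile": None, "sim_a": "sim", "sim_b": "sim", "sim_c": "sim", "report": None}
deps = {"sim_a": {"compile"}, "sim_b": {"compile"}, "sim_c": {"compile"},
        "report": {"sim_a", "sim_b", "sim_c"}}
for wave in schedule(jobs, deps, {"sim": 2}):
    print(wave)
```

With two seats, the third simulation is held back for one wave even though its dependency is already satisfied, which is the situation a license-aware scheduler exists to manage.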

Download the free e-book, The Art of Flows here

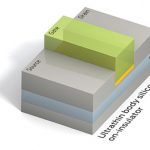

3D Transistor for the Common Man!

The 1999 IEDM paper “Sub 50-nm FinFET: PMOS” started the 3D transistor ball rolling, then in May of 2011 Intel announced a production version of a 3D transistor (TriGate) technology at 22nm. Intel is the leader in semiconductor process technologies so you can be sure that others will follow. Intel has a nice “History of the Transistor” backgrounder in case you are interested. Probably the most comprehensive article on the subject was just published by IEEE Spectrum: “Transistor Wars: Rival architectures face off in a bid to keep Moore’s Law alive”. This is a must read for all of us semiconductor transistor laymen.

My first pick of talks at this month’s Common Platform Technology Forum will be given by Dr. Greg Yeric, ARM Consultant Design Engineer, R&D. Greg has 20 years of semiconductor experience beginning with Motorola/Freescale, HPL, which brought him to Synopsys through acquisition, and for the last 4+ years he has been at ARM. Greg’s talk “IP Design and the FinFET Transition” will cover foundry access to finFETs. Here is a reprint of an interview with Greg from the Tech Design Forum Newsletter in case you have not seen it:

TDF: What is the big deal about finFETs and what do they mean for IP?

FinFETs hold the promise of being fundamentally better switches than bulk planar transistors. So, they’ll allow favorable power and performance scaling beyond 20nm. However, they are a new kind of transistor with new issues and limitations. They are different enough that one runs the risk of producing sub-optimum IP without good understanding and planning. But properly executed, they’ll mean that 14nm delivers better power and performance.

How big a change are finFETs?

On one hand, they have the same metal-oxide-semiconductor structure, simply folded up, accordion-style, to provide a higher current density. In that sense, designers will see them behave in familiar ways. The key change will be a sizeable bump in the roadmap for some scaling parameters. There’ll be enough notable differences that the transition should offer an opportunity to assess the scaling of our designs.

What specifically should designers be aware of?

Most everyone has heard about quantization – that the finFET drive strength is varied by the number of discrete fins in parallel. For that reason and others, low power designers will face a different granularity in choices than they’ve been used to. Another potentially more interesting side-effect of quantization is a new fin-metal gear ratio. Designers must plan for the fact that finFETs offer a change in the scaling compared to recent nodes. Delay and power can be improved in aggregate, but their components, represented by CV/I and CV^2f, will scale in different ways. I wouldn’t recommend a lazy extrapolation of past trends.
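For readers less used to the shorthand in that answer, the two first-order figures of merit can be written out as below. These are the standard textbook approximations, not something taken from Greg's talk; the symbols are assumed to mean switched capacitance $C$, supply voltage $V$, drive current $I$ and switching frequency $f$.

```latex
t_{\text{delay}} \propto \frac{C\,V}{I},
\qquad
P_{\text{dynamic}} \propto C\,V^{2} f
```

Delay depends on drive current while dynamic power does not, and voltage enters linearly in one expression and quadratically in the other, so a change in $C$, $V$ or $I$ moves the two quantities by different amounts.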

What other differences might there be?

The variability signature will probably change. finFETs improve some aspects of variability but because they have new process components, I’d expect to see other new variation issues. This shift might foretell a change in the balance between local and global variation that will affect memory and logic differently. Also, scaling to 14nm in and of itself won’t be easy, and all of the finFET issues will have to be folded – no pun intended – into this broader context. I’ll discuss a broader process scaling perspective at the forum.

My big question is parasitic extraction of 3D Transistors, how is that going to work?

2012 semiconductor market could decline by 1% or more

The world semiconductor market grew a slight 0.4% in 2011, according to WSTS. In early 2011, expectations were for growth in the 6% to 10% range. Various natural and man-made disasters led to weaker than expected growth. The March 2011 earthquake and tsunami in Japan disrupted semiconductor and electronics production. Floods in Thailand in 3Q and 4Q 2011 severely impacted hard disk drive manufacturing. The European financial crisis resulted in weak demand in 4Q 2011.

Recent forecasts for the 2012 semiconductor market are primarily in the range of 2% to 3%. Always optimistic Future Horizons predicted 8% growth. IC Insights projected 7% growth for the IC market. We at Semiconductor Intelligence believe the 2012 semiconductor market will see a slight decline of about 1%. The decline is expected due to the overall economic outlook in 2012 and the quarterly pattern of the semiconductor market.

The International Monetary Fund’s (IMF) January 2012 forecast was for World GDP growth of 3.3% in 2012, half a point slower than estimated 3.8% growth in 2011. The U.S. is expected to maintain moderate 1.8% growth in 2012, the same as in 2011. The Euro Area is projected to see a 0.5% drop in GDP in 2012 due to the financial crisis. Japan’s GDP should rebound from a 0.9% decline in 2011 to 1.7% growth in 2012 as it recovers and rebuilds from the 2011 disasters. China remains a major growth driver, with expected 8.2% growth in 2012, one point lower than in 2011. Hong Kong, South Korea, Singapore and Taiwan collectively should see 3.3% growth in 2012, slowing from 4.2% in 2011.

The semiconductor market declined 7.7% in 4Q 2011 from 3Q 2011, due largely to the floods in Thailand and weakness in Europe. The largest semiconductor companies providing guidance generally expect significant revenue declines in 1Q 2012 from 4Q 2011. Intel, Texas Instruments, ST Microelectronics and AMD all gave similar revenue guidance: worst case declines of 10% to 12%, best case declines of 4% to 5%, and midpoint declines of 7% to 8%. Renesas and Broadcom also expect declines in 1Q 2012. Samsung did not give specific guidance, but expects a weak DRAM market in 1Q 2012. A couple of companies expect increases. Toshiba expects a recovery to 15.5% revenue growth for its semiconductor business after a 19% decline in 4Q 2011. The midpoint of Qualcomm’s guidance is for 2.5% growth.

We at Semiconductor Intelligence are forecasting a 5% decline in the semiconductor market in 1Q 2012. With a 5% 1Q 2012 decline following the 7.7% decline in 4Q 2011, it will be difficult for the industry to achieve positive growth for the year 2012. The average quarter-to-quarter growth rate in 2Q through 4Q 2012 would need to be 6.5% just to achieve 0% for the year. Our forecast of a 1% decline in 2012 is based on average growth of about 5% for the last three quarters. Further deterioration in the world economic situation from current expectations could result in a more significant decline in the semiconductor market in 2012 of 5% or more.
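The quarterly arithmetic behind this argument can be sketched in a few lines of Python. The function names and the 2011 quarterly index below are hypothetical: only the 7.7% drop in 4Q 2011 and the assumed 5% drop in 1Q 2012 come from the text, and the first three quarters are a flat placeholder, so the result will not match the 6.5% figure, which rests on the actual quarterly data.

```python
def annual_change(prev_year, q1_change, later_change):
    """Full-year change versus the prior year.

    prev_year:    four quarterly revenues for the prior year
    q1_change:    sequential change in Q1 (e.g. -0.05 for a 5% decline)
    later_change: sequential change applied in each of Q2, Q3 and Q4
    """
    quarter = prev_year[-1] * (1 + q1_change)
    total = quarter
    for _ in range(3):
        quarter *= 1 + later_change
        total += quarter
    return total / sum(prev_year) - 1


def breakeven_growth(prev_year, q1_change, lo=-0.5, hi=1.0, iterations=60):
    """Average Q2-Q4 sequential growth that gives a flat year, by bisection."""
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if annual_change(prev_year, q1_change, mid) < 0:
            lo = mid  # year still shrinks, so more growth is needed
        else:
            hi = mid
    return (lo + hi) / 2


# Placeholder index: flat 1Q-3Q 2011 (assumed), then the 7.7% decline in 4Q 2011.
quarters_2011 = [100.0, 100.0, 100.0, 92.3]
needed = breakeven_growth(quarters_2011, q1_change=-0.05)
print(f"break-even average growth for 2Q-4Q 2012: {needed:.1%}")
print(f"year at 5% average growth: {annual_change(quarters_2011, -0.05, 0.05):.1%}")
```

The point of the exercise is that the year starts from a base two consecutive declines below the 2011 average, so even healthy sequential growth in the remaining three quarters struggles to bring the annual total back to flat.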

Synopsys MIPI M-PHY in 28nm introduction with Arteris

The MIPI set of specifications (supported by dedicated controllers) is completed by a PHY function, either the D-PHY or the M-PHY. The D-PHY was the first to be released, and most of the MIPI functions in the smartphones we use today probably still rely on a D-PHY, but the latest MIPI specifications have been developed around the M-PHY. This does not mean that the D-PHY will disappear any time soon, but clearly M-PHY is the future…

In the early days of MIPI, Synopsys was not as comfortable with MIPI as an IP product, because the M-PHY could not be treated as a traditional “one size fits all” IP product. The specification defines M-PHY Type I and Type II and, within each type, a further set of options: High Speed Gear 1, 2 and 3 for Type II, and for Type I the same gears plus Pulse Width Modulation (PWM) gears 0 to 7. Defining one product with so many options to support ran against Synopsys’ product marketing approach, which is to develop one product and address as wide a market as possible using a large sales force… this is in fact the definition of an IP business!

The discussion I had last week with Navraj Nandra, Marketing Director for Mixed-Signal IP (including the PHY Interfaces product line), shows that Synopsys has found a way to solve this business issue with an elegant engineering solution: the MIPI M-PHY developed by Synopsys in 28nm is a modular product. If a customer needs to implement the M-PHY Type II supporting Gear 1 to 3, they will integrate this function only. This is the best way to optimize the associated footprint and power consumption, and it is easier than the other potential route, which was to engage an IP or design service company to develop specifically the part of the M-PHY specification that needs to be supported.

Because at Semiwiki we try to do some evangelization, let’s look at the function-specific specifications associated with the M-PHY (which is agnostic by nature). I strongly encourage you to have a look at the above picture!

Let’s start with the “pure” MIPI specifications, in the sense that these have been developed by the MIPI Alliance alone:

- DigRF v4, the specification for interfacing with an RF chip (supporting LTE), can be connected directly to the M-PHY.

- Low Latency Interface (LLI) can also be connected directly to the M-PHY. We will come back to LLI later in this article, as release 1.0 was announced this week at MWC.

- CSI-3, the Camera Interface, has to be connected to the M-PHY through UniPro, an “agnostic” controller.

- DSI-2, the Display Interface, also connects to the M-PHY through UniPro.

- Universal Flash Storage (UFS), a specification jointly developed by JEDEC and MIPI to support external Flash devices (cards), also connects through UniPro.

- Finally, another specification has been jointly developed with USB-IF: SuperSpeed Inter-Chip (SSIC), which connects two USB 3.0 compatible devices directly on a board (no USB cable).

To summarize, M-PHY and UniPro are agnostic technical specifications (one mixed-signal, the other digital), and CSI-3, DSI-2, DigRF v4, LLI, SSIC and UFS are function-specific specifications, defined by the MIPI Alliance alone or, for the last two, by the MIPI Alliance together with USB-IF and JEDEC respectively.
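The layering described in the list can be captured in a small lookup table. This is only a sketch of what the text above says, in Python; the names `ATTACHMENT` and `stack_for` are made up for illustration, and SSIC is left unset because the list does not state how it attaches to the M-PHY.

```python
# How each function-specific specification reaches the M-PHY, per the list above.
# "direct" = attaches straight to the M-PHY; "UniPro" = goes through UniPro.
# None = the article does not say, so no assumption is made here.
ATTACHMENT = {
    "DigRF v4": "direct",
    "LLI": "direct",
    "CSI-3": "UniPro",
    "DSI-2": "UniPro",
    "UFS": "UniPro",
    "SSIC": None,
}


def stack_for(spec):
    """Return the layers from the function-specific spec down to the PHY."""
    link = ATTACHMENT[spec]
    if link is None:
        raise ValueError(f"attachment of {spec} is not described in the text")
    layers = [spec]
    if link == "UniPro":
        layers.append("UniPro")  # agnostic digital controller
    layers.append("M-PHY")       # agnostic mixed-signal PHY
    return layers


for name in ("LLI", "CSI-3"):
    print(" -> ".join(stack_for(name)))
```

Seen this way, the modular M-PHY sits at the bottom of every stack, which is why one PHY product can serve several quite different functions.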

MIPI has the potential, from a technical standpoint, thanks to the benefits it brings in terms of a high-speed serial AND low-power interface, and in terms of interoperability, to be used in the PC, Media Tablet and Consumer Electronics segments, on top of the Wireless handset segment. The MIPI Alliance is willing to support such an expansion, but some evangelization will be necessary for the MIPI specifications to go mainstream in the Consumer Electronics or PC segments!

The LLI specification was officially released by the MIPI Alliance at the Mobile World Congress in Barcelona this week. As the name indicates, the round-trip latency of the LLI inter-chip connection is low enough for a mobile phone modem to share an application processor’s memory while maintaining enough read throughput and low latency for cache refills. Sharing the same DRAM device means the wireless handset integrator can save printed circuit board (PCB) real estate and create a thinner smartphone, or add devices and features to the smartphone, such as an NFC chip. It also means that the OEM will save, on every manufactured smartphone, the cost of one DRAM ($1 to $2). If you manufacture tens of millions of smartphones, like some of the leaders, you can see how quickly you will get a return on the initial investment made by the chip makers to acquire the IP!

The MIPI Alliance is strongly supportive of LLI, as we can see from this quote: “As active MIPI contributors, Synopsys and Arteris are aiding in the adoption of the MIPI M-PHY and MIPI Low Latency Interface,” said Joel Huloux, chairman of the board of MIPI Alliance. “The early integration and availability of the Arteris and Synopsys solution helps speed time to market for MIPI LLI adopters.” LLI support from Arteris and Synopsys illustrates how important it is to build a strong partnership when selling a complementary solution, as the joint solution consists of Arteris’ Flex LLI™ MIPI LLI digital controller IP and Synopsys’ DesignWare® MIPI M-PHY IP. A team of Arteris and Synopsys engineers, formed to facilitate verification and testing of the joint solution, validated its functionality and interoperability.

And for those who love insights, you should know that LLI was initially developed by an Application Processor chip maker, who understood that the function, to be successful on the market, had to be marketed and sold by an IP vendor. Arteris was selected because they were already marketing C2C, or “Chip To Chip Link”, IP, which offers exactly the same functionality (sharing a DRAM between Modem and Application Processor), by means of a parallel interface in this case. We will come back to this IP soon.

Eric Esteve – See “MIPI IP Survey & Forecast” from IPNEST

Huawei and Intel Redrawing the New Mobile Playing Field

This Time Is Different is a book that was released just months before the financial crisis in 2008, describing hundreds of historical cases over a span of 800 years where smart people ended up making disastrous decisions that led to government defaults, banking panics, etc. In the semiconductor industry, we also tend to think this time is different due to the rapid advancements made possible by Moore’s Law. A year ago the ARM camp (nVidia, TI, Marvell, BRCM) was riding high at the prospects of winning the mobile market and casting Intel aside. Now, I would argue, Apple’s stunning growth has forced a completely new game on the industry. In this game the phone carriers are in desperate need of an alternative, and Intel and Huawei, with their announcements this week, have shown the way to “save the carriers.”

You want to talk about big money? AT&T’s revenue was $127B in 2011 and Verizon’s was $111B. Repeat these numbers with the carriers the world over and it becomes obvious that there exists a big league (call it the $100B club) that few suppliers can play in. Apple frightens the carriers because they have to shell out all the Cap Ex to handle the mobile tsunami imposed by Apple’s iPhones and iPads. Starting with the 4G LTE enabled iPad 3 in March and followed by a similarly enabled iPhone 5 in the fall, the tsunami data waves will increase in a magnitude that will be more than a step function. What shall they charge customers for the data onrush, no mathematician can foretell. However, whatever it ends up being, one thing is sure: Apple will have a hand in negotiating the business model.

Five years ago Nokia could never have imagined getting away with selling a phone to carriers for an average of $660. With the iPad 3 with LTE, expect it to go higher. Apple’s roughly 60% gross margin is significantly more than what they receive for the Mac PCs and is, in a sense, causing the carriers to see a future bloodbath. The carriers need an alternative house brand that provides margin relief and a counterweight to Apple, who will likely ramp up to at least 50% of their customers. So while they cannot live without Apple, they can’t survive on Apple alone.

Which brings us to this week and the announcement that Huawei is building its own quad core processor for smartphones and that Intel has made agreements with the UK carrier Orange, the Indian carrier Lava and Chinese equipment manufacturer ZTE to build and sell Medfield based smartphones this year. It should be noted that Huawei and ZTE are the #1 and #2 Chinese Telecom and Networking equipment vendors and furthermore they are both in the top 5 in mobile handsets.

The announcement of Huawei building their own processor is another sign that independent silicon suppliers like nVidia, TI, Marvell and Broadcom will see less fertile grounds to plow in the mobile space. In the last earnings call, nVidia CEO Jen Hsun Huang admitted that sales of Tegra 3 would come in much lower than expected for 2012 because Samsung had decided to use their own in house processors. Vertical integration is the trend and it is absolutely necessary.

Looking much further down the road though, Huawei and Intel appear to be on similar tracks with a slight twist. Huawei is going to go completely in-house vertical with the smartphones and telecom equipment, with some assist from Qualcomm on the 4G LTE in phones and base stations. They will offer a package deal to carriers: buy the telecom and networking equipment and get the phones at a discount. The net is an increase in cash flow to the carrier over buying phones from HTC or Samsung and equipment from Huawei, ZTE, Cisco or Ericsson.

ZTE looks like it will rely on Intel for silicon not only for cell phones but I am guessing also for networking and future telecom gear. Huawei is financially more powerful than ZTE and has in fact set up a design center in Silicon Valley staffed with ex-Cisco engineers to build high end ASICs for Routers and Switches. Their footprint is growing.

With what they believe is a 4 year lead in process technology, Intel is now going to execute a strategy of offering what will be lower cost, lower power silicon in the smartphone and tablet space by the time they convert Atom to 22nm in early 2013. On the other side of the house, it appears that they will expand their data center offerings to the point they can deliver the silicon a ZTE needs to be competitive with Huawei. All of this will likely have an “Intel Inside” marketing pull through the carriers.

As mentioned in a previous blog, the era of independent Fabless chip companies who rely on the leading edge is coming to an end. The winners will own Fabs (i.e. Intel or Samsung), have IP, or be vertically integrated like Apple or Huawei, who bury the processor in the total BOM of the phone. Both though will be tempted to jump to Intel to gain access to the latest process technology and gain an edge on Samsung.

The carriers see a need to immediately address the bottom line before Cap Ex overwhelms them. For Huawei, ZTE and Intel the doors of opportunity have just opened up. More importantly, for Intel this time is no different than how they executed in the 1990s PC market.

Now, with regards to Google….

FULL DISCLOSURE: I am long AAPL, INTC, ALTR, QCOM

HSPICE Users Talking about Their Circuit Simulation Experience, Part 2

Continued from Part 1
