In 1981, Pac-Man was sweeping the nation, the first space shuttle launched, and a small group of engineers in Oregon started not only a new company (Mentor Graphics) but an entirely new industry: electronic design automation (EDA).

Mentor founders Tom Bruggere, Gerry Langeler, and Dave Moffenbeier left Tektronix with a great idea and solid VC funding. To choose a company name, the three gathered at Bruggere’s home, the former “world headquarters” of the fledgling enterprise. Moffenbeier’s suggestions, ‘Enormous Enterprises’ and ‘Follies Bruggere,’ while witty, did not seem calculated to inspire confidence in potential investors or customers. Langeler, however, had always wanted to own a company called ‘Mentor.’ ‘Graphics’ was added later, when it turned out that the word ‘Mentor’ on its own was already trademarked by another company. Mentor, now based in Wilsonville, Oregon, became one of the first commercial EDA companies, along with Daisy Systems[SUP]1[/SUP] and Valid Logic Systems[SUP]2[/SUP].

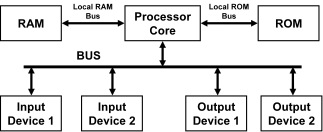

The Mentor Graphics team decided what kind of product to build by surveying engineers across the country about their most significant needs. The survey pointed them toward computer-aided engineering (CAE) and the idea of running their software on a commercial workstation. Unlike the other EDA startups, which built proprietary computers to run their software, the Mentor founders chose Apollo workstations as the hardware platform for their first products. Writing their software from scratch to meet their customers’ specific requirements, rather than building their own hardware, proved to be a key advantage over their competitors in the early years. There was one wrinkle: when they settled on the Apollo, it was still only a specification. However, the Mentor founders knew the Apollo founders and trusted them to produce a computer that combined the time-sharing capabilities of a mainframe with the processing power of a dedicated minicomputer.

The Apollo computers were delivered in the fall of 1981, and the Mentor engineers began developing their software. The goal was to demonstrate their first interactive simulation product, IDEA 1000, at the Design Automation Conference (DAC) in Las Vegas the following summer. Rather than risk getting lost in the crowd at a booth, they rented a hotel suite and invited participants to private demonstrations. The invitations were slipped under hotel room doors at Caesars Palace, but because the team didn’t know which rooms DAC attendees were staying in, the invitations went indiscriminately to vacationers and conference-goers alike. The demos were very well received (by conference-goers, anyway), and by the end of DAC, between one-third and one-half of the 1,200 attendees had visited the Mentor hotel suite. (There is no record of whether any vacationers showed up.) The IDEA 1000 was a hit.

By 1983, Mentor had its second product, MSPICE, for interactive analog simulation, and had begun opening offices across the US, Europe, and Asia. In 1984, Mentor reported its first profit and went public. Longtime Mentor employee Marti Brown said it was an exciting time to work for Mentor. The executives worked well together, complementing each other’s strengths, and Bruggere in particular was dedicated to creating a very worker-friendly environment, including building a day care center on the main campus. Throughout the 1980s, Mentor grew aggressively (you can see all the companies Mentor acquired on the EDA Mergers and Acquisitions Wiki). As an aside, Tektronix, where the Mentor founders had previously worked, also entered the market by acquiring a company called CAE Systems. The technology was uncompetitive, though, and Tektronix eventually sold the assets of CAE Systems to Mentor Graphics in 1988.[SUP]3[/SUP]

Times got tough for Mentor between 1990 and 1993, as it faced new competition and a changing EDA landscape. While Mentor had always sold complete packages of software and workstations, competitors were beginning to offer standalone software that could run on multiple hardware platforms. In addition, Mentor fell far behind schedule on the so-called 8.0 release, a rewrite of its entire design automation software suite. This combination of factors led some of its earliest and most loyal customers to look elsewhere and forced Mentor to declare its first loss as a public company. Learning from the experience, Mentor began to redesign its products and redefine itself as a software company, expanding its offerings into a wide range of design and verification technology. As it made the transition, the company began to recapture market share and attract new customers.

In 1992, founder and president Gerry Langeler left Mentor and joined the VC world.

In 1993, Dave Moffenbeier left to become a serial entrepreneur. That same year, Wally Rhines came on as president and CEO, replacing Tom Bruggere as CEO; Bruggere also retired as chairman in early 1994.[SUP]4[/SUP] Under Rhines’s leadership, Mentor has continued to grow and thrive, introducing new products and entering new markets (such as thermal analysis and wire harness design), cementing its position as the IC verification reference tool set for major foundries, and reporting consistent profits. In recent years, Mentor has been the target of an acquisition attempt (by Cadence, 2008) and of an activist investment (by famed corporate raider Carl Icahn, 2010). Despite those events, Mentor continued to grow its revenue and profitability to record levels by introducing products for entirely new markets. Throughout its 31 years, Mentor has been a solid anchor of the EDA industry that it helped to create.

Very Interesting References and Asides

[LIST=1]