I recently read a news article where the author referred to Moore’s Law as a ‘Law of Science discovered by an Intel engineer’. Readers of SemiWiki would call that Dilbertesque. Gordon Moore was Director of R&D at Fairchild Semiconductor in 1965 when he published his now-famous paper on integrated electronic density trends. The paper doesn’t refer to a law, but rather the lack of any fundamental restrictions to doubling component density on an annual basis (at least through 1975). In 1975 he revised the trend to a doubling of density every two years; the often-quoted 18-month figure is usually attributed to Intel’s David House and refers to performance rather than density. [The attribution “Moore’s Law” was coined by Carver Mead in 1970]. As co-founder and later President of Intel Corporation, Gordon Moore led what is now a 40+ year continuation of that trend.

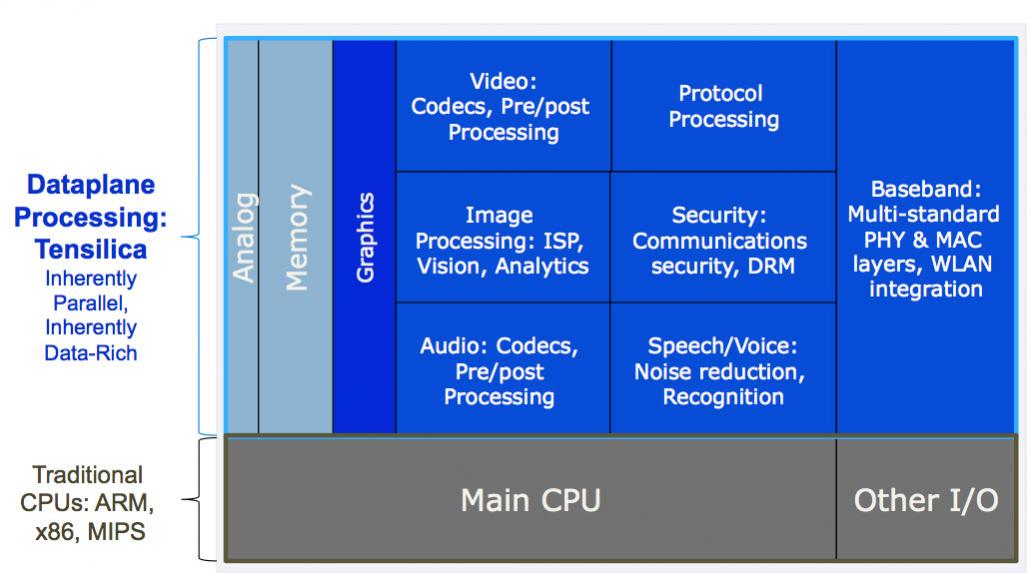

The Protocol Processing Dataplane

At the Linley processor conference this week, Chris Rowen, the CTO of Tensilica, presented on the protocol processing dataplane. That sounds superficially like he is talking about networking but in fact true protocol processing is just part of adding powerful compute features to the dataplane. Other applications are video, audio, security, voice-recognition and so on. All of these applications are inherently parallel and data-rich, and are either impossible to process on a general purpose control processor such as an ARM (not enough performance) or extremely power-hungry when run on one.

Depending on the application, different kinds of parallelism are required, from single-instruction multiple-data (SIMD) vector processing to homogeneous threads (all doing the same thing) or heterogeneous threads.

The Tensilica Xtensa dataplane processor units (DPUs) are highly customizable and thus suitable for all these applications. The processors generated range from 11.5K gates up to huge beasts with large numbers of execution units. In addition, they can have a huge range of I/O architectures with FIFOs, lookup tables, or very wide direct connections. After all, a high-performance DPU isn’t much use if you can’t get the data in and out to the rest of the design with high enough bandwidth.

Probably the most demanding application, requiring very high I/O performance and high performance in the compute fabric, is network data forwarding (such as in a high-performance router). The most generic way to do this would be to use a cache-coherent memory system and just put the packets in off-chip DRAM. But Chris has a rule-of-thumb that, since energy is proportional to distance, if a direct wire connect is 1 unit of energy, local memory is 4, on-chip NoC is 16 and going off-chip is 256.

There is thus an enormous gain in energy efficiency from building the best possible fabric on-chip to keep everything fed, rather than building something completely general purpose. This can be seen from the above diagram, which compares a cache-coherent cluster, one where DMA is used to offload the processors, and one with direct connect.
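To get a feel for what Chris’s rule of thumb implies, here is a minimal Python sketch. The four relative costs come from the talk; the two example paths (a packet bounced through off-chip DRAM versus one handed over on a direct connection via local memory) and all the names are my own assumptions for illustration, not Tensilica figures.

```python
# Relative energy per transfer, from the rule of thumb quoted in the talk.
RELATIVE_ENERGY = {
    "direct_wire": 1,
    "local_memory": 4,
    "on_chip_noc": 16,
    "off_chip": 256,
}


def path_energy(hops):
    """Sum the relative energy units over a sequence of hop kinds."""
    return sum(RELATIVE_ENERGY[hop] for hop in hops)


# Hypothetical paths, chosen only to illustrate the arithmetic.
general_purpose = ["off_chip", "off_chip"]   # write packet to DRAM, read it back
direct_connect = ["direct_wire", "local_memory"]

ratio = path_energy(general_purpose) / path_energy(direct_connect)
print(f"general purpose: {path_energy(general_purpose)} units")
print(f"direct connect:  {path_energy(direct_connect)} units")
print(f"ratio: {ratio:.1f}x")
```

With these made-up paths the general purpose route costs 512 units against 5 for the direct one, roughly a hundredfold difference, which is the kind of gap the rule of thumb is pointing at.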

The savings are huge using a DPU versus a standard microprocessor. The pink bars show the efficiency of the Tensilica Xtensa DPU, the blue are ARM and the green is Intel Atom. Higher numbers are good (this is efficiency, Xtensa has been scaled to 1).

Take another demanding example: LTE-Advanced level 7. The block diagram is complex and requires a huge amount of data, 6.5Gb/s, to be moved around between the blocks. Again, comparing the general purpose solution to building direct connections on-chip shows the enormous difference in efficiency.

ARM in Networking/Communications

I was at the Linley Processor Conference yesterday. There are two of these each year, one focused on mobile and this one, focused on networking and communications (so routers, base-stations and the like). You probably know that ARM is pretty dominant in mobile handsets (and Intel is trying to get a toe-hold although I’m skeptical they will succeed). A story that I haven’t heard before is their potential in the networking and communications space.

So if you look at the architecture share pie chart you can see that PowerPC has the strongest position with about 50% market share, heavily used by Cisco, Ericsson and Alcatel-Lucent. Next is x86 which is not heavily used in routers but in various network appliances that are more like a PC in a box with network connectivity. MIPS is, apparently (and surprisingly), increasing its share and leads in multicore, in use at Cavium, Broadcom/Netlogic, Nokia-Siemens, Huawei (and some Cisco). ARM has a toe-hold and is only used at Marvell today.

So that doesn’t look like much of a story. ARM is a nobody, the other guys together have 95% market share.

But ARM has developed a 64-bit architecture and that has opened new markets at the higher end. There is potential for ARM-based servers replacing general purpose PC-servers in specialized datacenters. If you are Netflix and all you do is pump video then a general purpose PC may not be the most power/cost effective way to do that. Or uploading billions of photos to Facebook. But the jury is still out as to whether that might happen.

But there are some ARM plans. AppliedMicro (PowerPC-based) is now developing a system based on ARM called X-Gene, which is the world’s first 64-bit ARM server on a chip. Freescale (PowerPC) is developing a hybrid ARM/PPC system called Layerscape. LSI (PowerPC) is developing an ARM version of their Axxia product line going beyond 4 cores. And Cavium, which builds high-count multicore systems called Octeon, is developing an ARM-based complement called Thunder.

But these dual architecture strategies are expensive, both to build and verify the CPU cores and to maintain the software on two architectures. So Linley’s prediction is that they won’t endure and there is a good chance that the migration will take place to ARM although it may take more than 5 years. ARM is seen as a safe choice. MIPS has been up for sale for most of the year and whether they can continue to make the engineering investment to remain competitive for the long term has to be in doubt. PowerPC, which originally was a joint development between Apple, Freescale (then still part of Motorola) and IBM, seems also to be gradually losing support. Apple has gone, of course. IBM is still committed to the architecture but really only for their own high-end servers.

x86, of course, is also a safe choice but Intel’s roadmap is driven by the PC and so it tends to require a higher chip-count to build a high-data throughput platform for communications. The requirements for routers and base-stations are very different from general purpose PCs since they have very different latency and data-plane requirements.

During the day, ARM announced two new products: CoreLink CCN-504 (CCN is cache-coherent network), which is an ARM-specific network-on-chip (NoC), and the CoreLink DMC-520 dynamic memory controller. These two products work together to provide on-chip and off-chip connectivity with a real-world throughput of 1Tbps and peak performance of 1.5Tbps using a 128-bit internal bus. There is support for the existing Cortex-A15 (32-bit) and future 64-bit ARMv8 architecture cores. As always, ARM is focused on power-efficiency and not just raw performance at any cost. These will be available early next year with the first production designs expected in 2014. LSI discussed some work based on CCN-504 where they have been partnering with ARM on interconnect technology requirements, although they didn’t announce any specific products.

So ARM today has pretty much zero market share in communications (outside of mobile, where they are dominant). But they have new technology for the space from 64-bit processor cores to on-chip NoC technology that can link up to 16 cores, and high performance access to off-chip memory. And several of the big players in the networking/communication spaces have ARM-based products that they will bring to market in the near future. The landscape could look very different in two or three years.

Challenges in Managing Power Consumption of Mobile SoC Chipsets: And What Lies Ahead When Your Hand-Held Is Your Compute Device!

Qualcomm VP of Engineering, Charlie Matar, will be keynoting the Apache/ANSYS seminar in Santa Clara next Thursday. Charlie is a great guy and a great speaker so you won’t want to miss this and it’s FREE! I spoke to Charlie and this is what he will be speaking on:

Today’s complex SOC design is driven by the constant demand for high performance capabilities, rich feature sets, concurrency modes and low power requirements. This creates many challenges in delivering on what users want from their devices and these challenges impact the whole SOC eco-system from Design, analysis, sign-off, reliability and time to market. These trends will continue to challenge SOC designers in the future especially now that compute and mobile devices are merging.

My talk will focus on the key challenges that designers are facing, especially in key areas like performance, power, thermal and reliability, and discuss some of the future trends in the industry that require a more efficient model between foundries, SOC designers and EDA companies.

Charles Matar is a Vice President of Engineering at Qualcomm. He joined Qualcomm in 2003 and formed a new CPU team that delivered ARM-based CPU Cores for Qualcomm’s Mobile SOCs. After that, he was the chip lead that delivered the first 65nm Mobile SOC in 2007 and then he went on to manage the Physical Design Team, Low power Implementation team, Serdes and the foundation IP Design. His responsibility then included delivering all of the SOC tape outs for San Diego’s QCT division and enabling new technology nodes for next generation SOCs like backend infrastructure, IP, methodology and working closely with foundries, design and EDA companies. Presently, he is managing the Graphics Hardware team in Qualcomm responsible for delivering all of Qualcomm’s Adreno GPU cores.

Prior to joining Qualcomm, Charles held multiple positions as a CPU designer and manager. His technical interest is in SOC and Processor Design, Low Power Design and Process Technology.

Charles Matar holds a BSEE from the University of Texas at Austin and an MSEE from Southern Methodist University.

Charlie will be followed by technical tracks/breakout sessions featuring application specific presentations focused on 20-nm low-power designs and high-speed I/O verification. They will include presentations by leading companies such as Samsung-SSI and Texas Instruments sharing their experiences designing for low-power applications.

The 20nm Low-Power IC Design track will discuss tools and methodologies that address power and reliability challenges for advanced low-power designs. For those designing high-speed I/O interfaces such as DDR3/4, a special technical presentation entitled “Chip-Package-System (CPS) Methodology for Giga-hertz Performance Mobile Electronics” should be of key interest.

I will see you there!

Hynix View on New, Emerging Memories

The recent (August) Flash Memory Summit in Santa Clara had a session devoted to ReRAM, which also featured prominently in the keynote address by Sung Wook Park of SK Hynix. The talk included a summary of NAND’s well-known scaling issues along with approaches to 3D NAND. It turns out that SK Hynix is working on three different technologies: PCRAM (phase change RAM) with IBM, STT-RAM (next gen MRAM) with Toshiba and the better known ReRAM program with HP. The HP collaboration has been ongoing since 2010; the other two collaborations date, publicly at least, from earlier this year. While SK Hynix has a vision for the three apparently competing technologies, we were a bit surprised and wondered if the additional collaborations were a reaction to internal concerns about the progress of their longer-standing collaboration with HP. Christie Marrian has more over at ReRam-Forum.com.

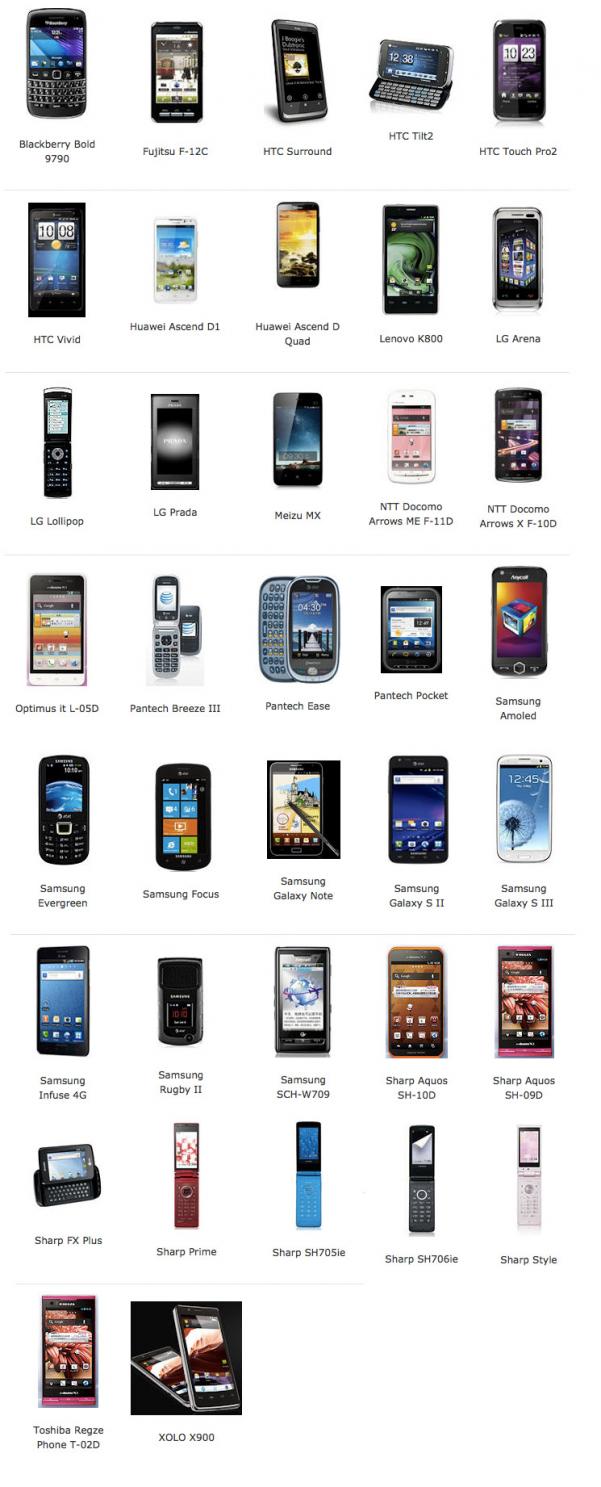

Tensilica Ships 2 Billionth Core

It was in June of last year that Tensilica announced that they (or rather their licensees) had shipped one billion cores. Now they have just announced that they have shipped two billion cores. They are shipping at a run-rate of 800 million cores per year, which is 50% higher than June last year. If business continues to grow they will be at a run-rate of over a billion cores per year sometime next year. They’ll have to put up one of those signs that McDonalds used to have outside about how many billion hamburgers they had sold, before the numbers got so big that it became impossible to keep up.
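As a back-of-the-envelope check on that “sometime next year”, the sketch below assumes the run-rate keeps compounding at the same 50% a year (my assumption; Tensilica gave no forecast) and asks when it crosses a billion. The function names are hypothetical.

```python
import math


def implied_prior_run_rate(current, growth_factor):
    """Run-rate one year earlier, given the year-over-year growth factor."""
    return current / growth_factor


def years_until(target, current, growth_factor):
    """Years until the run-rate reaches target, assuming smooth compounding."""
    return math.log(target / current) / math.log(growth_factor)


current = 800e6   # cores per year, from the announcement
growth = 1.5      # 50% higher than a year earlier

prior = implied_prior_run_rate(current, growth)
years = years_until(1e9, current, growth)

print(f"run-rate a year ago: {prior / 1e6:.0f}M cores/year")
print(f"years to 1B cores/year: {years:.2f}")
```

Under that assumption the run-rate was a little over 530 million a year ago and crosses a billion in a bit more than half a year, which is consistent with the claim in the text.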

Since Tensilica is still a private company they don’t announce their financials, but they did also announce that their product license revenue is bigger than any other DSP licensing company by about 25% (presumably CEVA is #2) and that they are number 2 in product license revenue for all CPU IP licensing companies, behind ARM at #1 of course.

The accelerating growth in the number of cores is driven by designs ramping to volume in smartphones, digital TV, tablets, personal and notebook computers and storage and networking applications. But the real driver is power: dataplane processor units (DPUs) provide better performance/power/area than classic DSPs and scale from function-specific micro-cores up to large general purpose DSPs. Click on the picture to see a subset of the mobile phones that contain Tensilica DPUs.

New design wins are important for future revenue when royalties start to be paid too. Typically a core will be licensed at the start of a project, and it can be a couple of years before the design is complete and the systems start to ship in high volume.

And for anyone visiting Tensilica’s offices, they will move buildings the weekend of 20th October (ten days’ time). They are only moving to the two-storey building across the road so if you go to the old offices you are almost in the right place!

The Middle is A Bad Place to Be if You’re a CPU Board

In a discussion with someone in my PR network recently, I found myself thinking out loud that if the merchant SoC market is getting squeezed hard, that validates something I’ve been thinking – the merchant CPU board market is dying from the middle out.

ICCAD: 30 years

ICCAD is November 5th to 8th in the Hilton San Jose (downtown).

It is very off topic, but if you are British then November 5th is the rough equivalent of July 4th, when there are fireworks displays all over the country. Britain is one of very few countries that transitioned from some sort of autocracy to a democracy without having a revolution. There is thus no “national day” to celebrate with fireworks. Instead, Britain has to celebrate a failed revolution when Guy Fawkes in 1605 attempted to blow up the Houses of Parliament while the king was also there. Except he got caught. In addition to fireworks, bonfires are also lit in Britain and an effigy of Guy Fawkes is burned.

Anyway, back to ICCAD. In all the hoopla that DAC next year is the 50th anniversary I only recently realized that ICCAD is celebrating its 30th anniversary this year. It started in 1982 (coincidentally also the year I immigrated to the US so a sort of 30th anniversary for me too, I guess).

There is a complete technical program, of course. But there are three keynotes that are especially noteworthy:

- On Monday at 9am, John Gustafson of AMD will talk about The Limits of Parallelism for Simulation. As we can put dozens of cores and thousands of GPU processing elements on a workstation, how can we scale up problems to take advantage of all this? A simple but powerful speedup model that includes all overhead costs shows how we can predict the limits of parallelism, and in many cases it predicts that it is possible to apply billions of processors to simulation problems that have traditionally been viewed as “embarrassingly serial.”

- At lunchtime on Tuesday, CEDA has invited Alberto Sangiovanni-Vincentelli to talk about ICCAD at Thirty Years: where have we been, where are we going. Of course Alberto has been a key contributor to EDA research throughout that whole 30 year period at UC Berkeley, along with Richard Newton until his untimely death in 2007. He also found time to found both Cadence (where he is still on the board) and Synopsys. He also received the EDAC/CEDA Kaufman award in 2001.

- On Wednesday at 9am, Sebastian Thrun of Udacity will talk about Designing for an Online Learning Community. A year ago Sebastian ran a course at Stanford on machine learning that hundreds of thousands of people all over the world followed online, which made him very aware of the power of online education. He has since left Stanford and founded Udacity to provide scalable online education. Plus, he is a Google fellow and in his second life he is a key contributor to Google’s driverless car project.

Details of ICCAD, including more about the above keynotes, are here.

How Big is Mobile? Twice as many people use mobile phones than use a toothbrush

How big is mobile? Well, sometime early next year (or maybe even in the Christmas surge) there will be more mobile phones than people. Technically that is subscribers, so some of those “phones” are actually spare SIM-cards in international travelers’ pockets. But even so that is an incredible statistic. Also, next year, we will pass the point where over half of all mobile phones sold will be smartphones.

Tomi Ahonen has just published his annual book on the industry and as part of the publicity for it he has some amazing statistics:

- There are five times more mobile users than Facebook users.

- Six times more people use mobile phones than have a car.

- There are five times more mobile phone numbers in use than fixed landline phone numbers globally.

- There are four times more mobile users than the total number of personal computers of any kind: desktops, laptops, netbooks and tablets all added together!

- There are three times more mobile phone accounts than total television sets on the planet.

- There are three times more mobile users than internet users (and the internet user number includes mobile users).

- Twice as many people on the planet use mobile phones as use a toothbrush.

- Mobile phones have 1.5 times more users than FM radios.

- Mobile phones are used by hundreds of millions of illiterate people, so more people have use of mobile than have use for pen and paper.

That is a massive industry indeed.

In 2012, mobile handsets are a $250B industry (average selling price is $143 unsubsidized). There are 5.5 billion mobile phones in use of which about 1.2 billion are smartphones. No other industry comes close to that kind of unit volume, not televisions (1.9B) or computers (1.4B).
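Those figures imply a couple of numbers the text does not state, which a few lines of Python make explicit. This assumes the $250B is handset revenue at the quoted $143 average selling price; the variable names are mine.

```python
# Figures quoted in the text for 2012.
industry_revenue = 250e9      # dollars per year
average_selling_price = 143.0 # dollars, unsubsidized
phones_in_use = 5.5e9
smartphones_in_use = 1.2e9

# Implied annual unit shipments, assuming revenue = units * ASP.
units_per_year = industry_revenue / average_selling_price

# Smartphone share of the installed base (not of sales).
smartphone_share = smartphones_in_use / phones_in_use

print(f"implied handsets sold per year: {units_per_year / 1e9:.2f}B")
print(f"smartphone share of phones in use: {smartphone_share:.1%}")
```

That works out to roughly 1.75 billion handsets a year, with smartphones making up a little over a fifth of the phones in use.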

There is a big transition going on. The PC is not going away, of course, but the future growth is going to be in mobile (including tablets like iPad). Sometime next year there will be more Android devices out there than Microsoft Windows devices (of all types: PC, phones and tablets). It wasn’t that many years ago that everyone was worried that Microsoft was a powerful monopoly that was unstoppable. Now it is not exactly in trouble so much as irrelevant.

Intel makes most of their money in the PC market. They are trying to get into the mobile market and have a few design wins (Motorola/Google for example has a couple of Intel-based phones) but I see three challenges for them. Firstly, they are starting late to the game and mobile is an ARM/TSMC ecosystem. But secondly, their company is a premium supplier used to very high margins. Mobile chips are not going to give them that. Anyone who has read The Innovator’s Dilemma (and everyone reading this should) knows how poorly high-end companies do at disrupting themselves from below. Digital Equipment and PCs, integrated steel manufacturers and mini-mills, Kodak and camera-phones.

The third challenge is that there just isn’t that much of the market available to them. Even TI, a market leader once, is exiting and focusing OMAP on other markets. Apple makes their own application processor and uses Qualcomm for the wireless modems (and other suppliers for wireless, Bluetooth etc). Samsung makes many of their own chips, in particular the application processor. That may not be the whole industry by unit shipment but it is almost all of the money. For instance, Samsung just announced that smartphones are driving them to record results. HTC announced a drop of 80% in profits. Apple are famous for making over half of all the profit in the entire mobile industry on a relatively small market share. Intel is to Apple/Samsung in mobile chips as AMD is to Intel in PCs: trying to eke out a living in secondary niches while the leader takes all the profit.

Soft IP Quality Standards

As SoC design has shifted from writing RTL towards IP assembly, the issue of IP quality has become increasingly important. In 2011 TSMC and Atrenta launched the soft IP qualification program. Since then, 13 partners have joined the program.

IP quality is multi-faceted but at the most basic level, an IP block needs to do two things: it needs to meet its specification (for example, adhering to the protocol standard for a network interface) and it needs to be easy to integrate into the design. Ideally, the IP itself does not need to be changed at all; having to change it would be an indication of a lack of IP quality and immediately increases the verification cost.

October 16th is the TSMC Open Innovation Platform Ecosystem Forum at the San Jose convention center. Anuj Kumar of Atrenta will discuss the TSMC IP Kit, which is a joint development between TSMC and Atrenta using the SpyGlass platform for IP handoff analysis and validation. The presentation will be at 11am. In particular he will discuss the new version of the IP Kit, TSMC IP Kit 2.0, currently under joint development between Atrenta and TSMC. This version of the kit adds physical analysis of the IP (such as routing congestion) as well as advanced formal metrics that explore the ease of verification of the IP.

Anuj will review the tests that are part of the Kit, show example quality metrics and DataSheet reports, and discuss the kind of design issues that have been uncovered and fixed as a result of the program. He will present the timeline for implementation of IP Kit 2.0 and the results of testing IP Kit 2.0 with IP partners.

Information about the TSMC OIP Ecosystem Forum is here. Information about IP Kit is here. As well as Anuj presenting, Atrenta will also be exhibiting at booth #405.