Collaboration in EDA is nothing new; however, you may not be aware of how the Dini Group and Tektronix have worked together on an FPGA prototyping platform to address issues such as debugging with full visibility across an entire multi-FPGA design. At SemiWiki we’ve blogged a couple of times so far about the new debug approach from Tektronix, called Certus 2.0.

Dear Santa, please bring technology that brings us together

Dear Santa,

It has been many years since I have written you. I was taught never to ask anyone for anything for myself, that it is a presumptuous and selfish thing to do, so this is not for me. I know you are busy filling the wish lists of children everywhere, but I wanted to take a moment to ask for your help for everyone.


CEVA also bid to acquire MIPS… ARM still staying quiet?

We saw in a previous post that Imagination Technologies has proposed to buy MIPS, or more precisely “MIPS operating business and certain patent properties, as well as license rights to all of the remaining patent properties”. Translated into plain language: a couple of weeks ago, Imagination offered $60M for the MIPS processor IP core portfolio, plus the patents considered essential to protect that portfolio. ARM did not participate in this bid, but the company is leading “Bridge Crossing LLC, a consortium of major technology companies affiliated with Allied Security Trust, which has entered into an agreement with MIPS to obtain rights to its patent portfolio. The MIPS patent portfolio includes 580 patents and patent applications covering microprocessor design, system-on-chip design and other related technology fields. The consortium will pay $350 million in cash to acquire rights to the portfolio, of which ARM will contribute $167.5 million.”

That was the status until news in the Wall Street Journal this week announced that MIPS has received an unsolicited offer from CEVA, which is putting $75M on the table to acquire the MIPS operating business, bidding against Imagination Technologies for exactly the same asset. The Bridge Crossing LLC deal for the patent portfolio is therefore unaffected: CEVA bid only for the MIPS operating business. Although it’s always interesting to watch serious people playing “Monopoly” (the game) in real time, some interesting strategies are tentatively being built here.

Let’s concentrate on this part of the story. Unlike MIPS, CEVA and Imagination Technologies have been successful in the wireless phone market: CEVA sells DSP IP-based solutions for baseband processing (see for example this post), and Imagination provides GPU IP cores to application processor chip makers (at least to those not using an internal solution, like Qualcomm). CEVA can be considered the counterpart of ARM, one dominant in DSP IP cores while the other is (ultra) dominant in processor IP cores. Imagination Technologies, by contrast, is challenged by a couple of GPU IP vendors even though it holds slightly less than 50% market share, and one of those challengers is… ARM, with the Mali GPU.

The smartphone market segment (see this forecast below) is certainly very attractive…

So the motivations of CEVA and Imagination Technologies appear to differ, even if the target may be the same: the smartphone segment. If ARM becomes more and more successful with Mali, the company will ultimately push Imagination Technologies out of the smartphone segment, which is certainly the most lucrative in terms of (royalty) revenue. Imagination’s bid for MIPS illustrates one of the oldest strategic paradigms: “attack is the best defense” (attributed to Carl von Clausewitz). If Imagination can offer both CPU and GPU IP, this strategy could be effective in winning some new customers away from ARM (I don’t think they could displace ARM CPU IP at large accounts where the existing investment is huge), and it would complicate ARM’s sales process for its core business, the CPU, leaving less time to sell the Mali GPU.

If CEVA’s bid for MIPS is successful, it can be considered a purely offensive move (CEVA is not being attacked by ARM in its core market), the goal being either to expand revenue at accounts where CEVA is already present with its DSP IP, or to design in both DSP and CPU IP at emerging chip makers, with the same difficulty mentioned above: it will be really challenging to replace ARM CPU IP at long-term ARM customers…

Let’s imagine that CEVA finally wins the bid (I would guess that we are only at the beginning of the “Monopoly” game, let’s say the first quarter); then we may expect an interesting reaction from ARM, such as buying one of CEVA’s (very few) competitors, for example… (but I did not mention Tensilica).

Eric Esteve – IPNEST

Tanner EDA Tops 1,200 Active Customers!

It is always nice to see when an EDA company grows organically, versus inorganically by acquiring friends and foes. It is also nice to see when an EDA company invests in the fabless semiconductor ecosystem because, as we know, we are all in this together.

Tanner EDA celebrated its 25th anniversary this year by adding 149 new customers, which brings the grand total of active customers to more than 1,200, an amazing number if you think about it. You can read more about Tanner EDA HERE or visit their website HERE.

“This year marked our concerted effort to advance from interoperability to tighter integration so that we can continue to deliver greater capability to our analog and mixed-signal customers,” said Greg Lebsack, president of Tanner EDA.

Tanner’s product line grew this year, with version 15.23 of HiPer Silicon adding new features and functionality to tools such as T-Spice, S-Edit and HiPer DevGen, Tanner EDA’s layout acceleration tool. Additionally, new ecosystem partners were announced:

Tanner and Berkeley Design Automation announced the Tanner Analog FastSPICE solution, an add-on to Tanner EDA’s front-end and full-flow A/MS design suites. Analog FastSPICE brings Berkeley Design Automation’s Analog FastSPICE platform to Tanner EDA customers, delivering foundry-certified nanometer SPICE accuracy at speeds 5x-10x faster than any other simulator on a single core, plus an additional 2x-4x speedup with multithreading.

“The availability of Analog FastSPICE as an add-on to Tanner EDA’s analog and mixed-signal design tools brings great benefit to Tanner EDA’s customers,” said Ravi Subramanian, president and CEO of Berkeley Design Automation. “Analog/RF designers can realize the benefits of Tanner EDA’s full-flow analog design suite that is now bolstered by Analog FastSPICE, allowing verification of very complex analog/RF circuits with nanometer SPICE-accurate results.”

The HiPer Simulation A/MS solution, which offers T-Spice analog design capture and simulation together with Aldec’s Riviera-PRO™ mixed language digital simulator, allows both analog and digital designers to seamlessly resolve A/MS verification problems from one cohesive integrated platform.

“The synergies we have with Tanner EDA span both technology and business,” said Dr. Stanley Hyduke, president and CEO for Aldec. “Both companies have delivered top-notch products and relentless customer service for the last 20 plus years and this collaborative A/MS solution will prove again why both Aldec and Tanner EDA continue to see growth and innovation in our respective areas of expertise.”

Tanner EDA also announced a partnership with Australian Semiconductor Technology Corporation (ASTC).

“Tanner EDA tools, flows and partnership enable the ASTC A/MS Design Services operation to offer new, lower cost, design solutions to a new range of global customers and ASIC segments. Previously cost-prohibitive A/MS ASIC product ideas have now become feasible and profitable propositions both for our customers and for ASTC A/MS Design Services,” says Jay Yantchev, CEO of ASTC.

All in all, a great year for Tanner EDA!

Tanner EDA provides a complete line of software solutions that drive innovation for the design, layout and verification of analog and mixed-signal (A/MS) integrated circuits (ICs) and MEMS. Customers are creating breakthrough applications in areas such as power management, displays and imaging, automotive, consumer electronics, life sciences, and RF devices. A low learning curve, high interoperability, and a powerful user interface improve design team productivity and enable a low total cost of ownership (TCO). Capability and performance are matched by low support requirements and high support capability as well as an ecosystem of partners that bring advanced capabilities to AMS designs.

Introduction to FinFET Technology Part III

The preceding two SemiWiki articles in this thread provided an overview of the FinFET structure and fabrication. The next three articles will discuss some of the unique modeling requirements and design constraints that FinFETs introduce, compared to planar FET technology.

Due to the complexity of FinFET modeling (and Dan’s guideline to “keep it short, or they won’t read it” 🙂), this discussion will be divided into three SemiWiki articles. This post will cover modeling the device itself. A subsequent post will discuss modeling the device parasitics. The final post in this thread will discuss some of the CAD flow impacts associated with the introduction of FinFETs.

Spice models

Recently, the Compact Model Council approved the standardization of the BSIM-CMG FinFET model, based upon the outstanding work from the Spice team at UC-Berkeley. I would encourage SemiWiki readers interested in FinFETs to read the documentation on the BSIM-CMG model:

http://www-device.eecs.berkeley.edu/bsim/?page=BSIMCMG

(Links: “Latest Release”, then “Technical Manual”)

In particular, note that parallel fins are represented in the BSIM-CMG model using an instance parameter “NFIN = n”; that will have implications in modeling device parasitics and in CAD flows.
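Because each fin has a fixed width, device width becomes a whole-number choice of fin count rather than a continuous parameter. The sketch below makes that quantization concrete using the common first-order tri-gate approximation W_eff = n × (2·H_fin + T_fin). The fin height and thickness values are hypothetical placeholders, not foundry data, and the helper names are illustrative only; they are not BSIM-CMG parameters or functions.

```python
import math

# Hypothetical fin geometry in nm; real values come from the foundry PDK.
FIN_HEIGHT_NM = 30.0
FIN_THICKNESS_NM = 8.0


def width_per_fin(h_fin_nm: float = FIN_HEIGHT_NM,
                  t_fin_nm: float = FIN_THICKNESS_NM) -> float:
    """First-order gated perimeter of one tri-gate fin: two sidewalls plus the top."""
    return 2.0 * h_fin_nm + t_fin_nm


def effective_width_nm(nfin: int) -> float:
    """Effective channel width of a device built from `nfin` parallel fins."""
    if nfin < 1:
        raise ValueError("a FinFET needs at least one fin")
    return nfin * width_per_fin()


def fins_for_target_width(target_w_nm: float) -> int:
    """Smallest fin count whose effective width meets or exceeds the target."""
    return max(1, math.ceil(target_w_nm / width_per_fin()))


if __name__ == "__main__":
    for target in (50.0, 150.0, 400.0):
        n = fins_for_target_width(target)
        print(f"target {target:5.1f} nm -> {n} fin(s), W_eff = {effective_width_nm(n):5.1f} nm")
```

A designer who asked for 150 nm of width on a planar process now gets either 2 or 3 fins; that rounding step is one reason the fin count shows up in parasitic models and CAD flows.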

Device behavior

This brief discussion of device behavior will be divided into three sections: (1) the subthreshold “off” leakage current (Ioff); (2) the active current; and, (3) the factors that will introduce variation into the device behavior.

Subthreshold leakage current (Ioff)

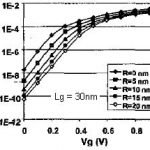

One of the key issues in scaling planar FET technology has been the process engineering required to minimize subthreshold current. With power dissipation (and standby battery life) such a critical factor in product design, the focus on minimizing Ioff is very high.

The subthreshold leakage current is strongly dependent upon the threshold voltage (Vt), gate length (Lgate), temperature, and process fabrication. (Recall that the transistor width of an individual fin is fixed.) There are a number of methods for measuring “Vt” – the most common definition is the input Vgs voltage when a specific device current Ids is present (small Vds voltage difference). Below this Vgs threshold, the device current falls exponentially with input voltage. The process dependence of this exponential relation for Ioff is typically represented as the subthreshold slope parameter, S, which is in units of mV/decade (at a given temperature). A small S value is preferable – a smaller change in gate input voltage will reduce the leakage current by a factor of 10.
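The exponential relation above can be written as Ids = I_t · 10^((Vgs − Vt)/S), where I_t is the constant current used to define Vt. The sketch below, which assumes that idealized form, evaluates Ioff at Vgs = 0, extracts S from two points on the log-linear region, and computes the ideal (kT/q)·ln(10) floor on S. The 0.3 V threshold and the 100 nA definition current are hypothetical example values.

```python
import math

K_B = 1.380649e-23      # Boltzmann constant, J/K
Q = 1.602176634e-19     # elementary charge, C


def subthreshold_current_a(vgs_v: float, vt_v: float, s_mv_per_dec: float,
                           i_at_vt_a: float) -> float:
    """Idealized subthreshold current: one decade lower for every S mV below Vt.

    `i_at_vt_a` is the constant current used to define Vt (small Vds assumed).
    """
    return i_at_vt_a * 10.0 ** ((vgs_v - vt_v) * 1000.0 / s_mv_per_dec)


def ioff_a(vt_v: float, s_mv_per_dec: float, i_at_vt_a: float) -> float:
    """Off-state leakage: the subthreshold current evaluated at Vgs = 0 V."""
    return subthreshold_current_a(0.0, vt_v, s_mv_per_dec, i_at_vt_a)


def extract_s_mv_per_dec(v1: float, i1: float, v2: float, i2: float) -> float:
    """Subthreshold slope from two measured points in the log-linear region."""
    return (v2 - v1) * 1000.0 / math.log10(i2 / i1)


def thermal_limit_s_mv_per_dec(temp_k: float = 300.0) -> float:
    """Ideal floor on S (body factor of 1) at temperature T: (kT/q) * ln(10)."""
    return K_B * temp_k / Q * math.log(10.0) * 1000.0


if __name__ == "__main__":
    # Hypothetical device: Vt = 0.3 V, defined at 100 nA.
    for s in (90.0, 70.0):
        print(f"S = {s:.0f} mV/dec -> Ioff = {ioff_a(0.3, s, 100e-9):.2e} A")
    print(f"thermal limit at 300 K: {thermal_limit_s_mv_per_dec():.1f} mV/dec")
```

With the same Vt, shaving S from 90 to 70 mV/decade lowers Ioff by roughly an order of magnitude, which is why the steeper subthreshold slope of the FinFET matters so much for standby power.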

The strong electrostatic control of the gate terminal over the FinFET channel results in a smaller S value than comparable planar FETs, for fins of an appropriate thickness and impurity concentration. The gate-to-channel electric field is concentrated at the fin corners. As a result, as the gate-to-source input voltage increases toward the device threshold, there will be a higher concentration of subthreshold leakage current at the corners of the fin, aka the “corner effect”, as depicted in Figure 1.

Figure 1. Subthreshold leakage current increases for fins with a smaller radius of curvature at the corners. From Doyle, et al, “Tri-Gate Fully Depleted CMOS Transistors”, VLSI Technology Symposium, 2003, p. 133-4.

Recent SemiWiki articles have highlighted that the initial FinFET devices in production have a more tapered and rounded profile. In addition to being easier to fabricate, this profile reduces the (subthreshold) current crowding at the fin corners.

Active current

The BSIM-CMG model has numerous parameters to accurately model the active FinFET device current. There’s not enough space to go into detail on the characteristics of this model, yet two things are worth highlighting: one included in, and one noticeably absent from, this (first-generation) model.

The fin thickness is a key manufacturing parameter. (A previous SemiWiki article in the series talked about a “sidewall image transfer” process step, which defines the fin thickness; this will be the same for all devices.)

If the fin is too thick, the electrostatic influence of the gate on the sides and top of the fin will be weaker, and the fin body will behave more like a (planar device) bulk substrate, losing the benefits of the FinFET topology.

If the FinFET is very thin, a special phenomenon results – the “density of available electron (or hole) states” is reduced. Briefly, free electrons/holes conduct current in the transistor channel, by having sufficient energy to reside at the conduction/valence energy band edges of the semiconductor material. The electron/hole energy and band levels in the semiconducting silicon are strong functions of the applied voltages and temperature, which are the basis for the FET model. Normally, there is no shortage of available “free states” for energetic electrons/holes at the band edges. However, for very thin fins, there is a quantum phenomenon which will reduce the density of available states at the band edge. As a result, electrons/holes would need more energy to occupy available states higher than the band edge, and be free to conduct device current. The BSIM-CMG model includes corrections for the “quantum density of states” for thin fins. Figure 2 illustrates the reduced free electron concentration and Figure 3 depicts the corresponding device current reduction due to the quantum density of states.

Figure 2. Free electron concentration reduction in fin cross-section, for the quantum density of states (t_fin = 6nm, approx. 25 atomic layers). From Entner, et al, “A Comparison of Quantum Correction Models for Three-Dimensional Simulation of FinFET Structures”, 27th Int’l. Seminar on Electronics Technology, 2004, p. 114-7.

Figure 3. Device current correction, accounting for the quantum density of free electron states. Same reference as Figure 2.
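To get a feel for why thin fins matter, a textbook infinite-square-well estimate gives the lowest allowed energy above the band edge as E₁ = (ħπ)² / (2·m*·t²), which grows as 1/t². The sketch below compares E₁ with the thermal energy kT. This is a back-of-the-envelope illustration, not the BSIM-CMG quantum correction: the effective mass ratio of 0.19 (silicon’s transverse electron mass) is an assumption, the true confinement mass depends on fin orientation, and an infinite barrier overestimates the energy.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
M0 = 9.1093837015e-31    # free-electron mass, kg
Q = 1.602176634e-19      # elementary charge, C (also J per eV)
K_B = 1.380649e-23       # Boltzmann constant, J/K


def confinement_energy_ev(t_fin_nm: float, m_eff_ratio: float = 0.19,
                          level: int = 1) -> float:
    """Energy of subband `level` above the band edge for an infinite well of width t_fin.

    E_n = (hbar * pi * n)^2 / (2 * m_eff * t^2); an infinite barrier overestimates E_n.
    """
    t_m = t_fin_nm * 1e-9
    m_eff = m_eff_ratio * M0
    return (HBAR * math.pi * level) ** 2 / (2.0 * m_eff * t_m ** 2) / Q


def thermal_energy_ev(temp_k: float = 300.0) -> float:
    """kT expressed in eV, for comparison with the confinement energy."""
    return K_B * temp_k / Q


if __name__ == "__main__":
    kt = thermal_energy_ev()
    for t in (20.0, 10.0, 6.0):
        e1 = confinement_energy_ev(t)
        print(f"t_fin = {t:4.1f} nm: E1 = {1000 * e1:5.1f} meV ({e1 / kt:.2f} kT)")
```

For a 20 nm body the lowest state sits well within kT of the band edge, but near the 6 nm thickness of Figure 2 it rises to roughly two kT, consistent with the reduced carrier concentration shown in the figures.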

Conversely, there is one BSIM-CMG feature that is not yet included. Designers working with recent planar FET technologies and BSIM-4 models are very cognizant of “layout dependent effects” (LDE), and their influence upon the device current. New terms such as the “well proximity effect” (due to well implant impurity dosage scattering) and several “spacing effects” (due to the localized influence of additional “stress” materials and shallow-trench isolation on the channel carrier mobility) have been added to models.

CAD flows for planar technologies offer (early) cell layout automation features to estimate the LDE measures. This automation is applied when designers are still conducting initial feasibility simulations, well before detailed layouts are available for parasitic extraction.

FinFET technology is still in its relative infancy. There is little (published) work on LDEs with FinFETs, and as a result, there are no LDE parameters in the BSIM-CMG model. Foundries will need to work very closely with design teams and Spice simulation vendors to define and enable LDE correction factors.

Sources of variation

There’s not enough space in this brief post to delve into sources of FinFET variation.

There are numerous fabrication steps that will affect device behavior. As mentioned above, the fin geometry largely defines device behavior, and each geometric parameter has its own (local and global) variation tolerance, e.g., fin thickness, height, sidewall profile, sidewall roughness, and gate roughness.

There are alternatives for offering multiple device Vt thresholds, each with its own sources of variation, i.e., different metal gate compositions that provide workfunction potential differences and/or impurity implants into the FinFET body.

Suffice it to say that designers will need to understand the derivation of “process corner models” from their foundry. Circuit designs that need a more sophisticated statistical approach – e.g., “weak bit” SRAM or flip-flop stability analysis – will need to work with their foundry on the appropriate parameter distributions and sampling techniques.
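A short sketch shows why “weak bit” analysis needs a more sophisticated approach than brute-force sampling. It assumes a deliberately simplified, hypothetical failure criterion: a cell fails when its local Vt shift, drawn from a Gaussian, exceeds a fixed margin. The sigma and margin values are illustrative, not foundry distributions. Comparing the analytic tail probability with a plain Monte Carlo estimate shows how quickly the required sample count explodes at high sigma.

```python
import math
import random


def tail_probability(margin_mv: float, sigma_mv: float) -> float:
    """One-sided Gaussian probability that a local Vt shift exceeds the margin."""
    k = margin_mv / sigma_mv
    return 0.5 * math.erfc(k / math.sqrt(2.0))


def monte_carlo_fail_rate(margin_mv: float, sigma_mv: float,
                          n_samples: int, seed: int = 1) -> float:
    """Brute-force Monte Carlo estimate of the same probability."""
    rng = random.Random(seed)
    fails = sum(1 for _ in range(n_samples) if rng.gauss(0.0, sigma_mv) > margin_mv)
    return fails / n_samples


def samples_needed(p_fail: float, target_failures: int = 100) -> int:
    """Rough sample count needed to observe `target_failures` events at rate p_fail."""
    return math.ceil(target_failures / p_fail)


if __name__ == "__main__":
    SIGMA_MV = 30.0  # hypothetical local Vt sigma
    for k in (3, 4.5, 6):
        p = tail_probability(k * SIGMA_MV, SIGMA_MV)
        print(f"{k}-sigma weak bit: p = {p:.2e}, ~{samples_needed(p):.1e} samples for 100 fails")
    print("3-sigma MC check:", monte_carlo_fail_rate(3 * SIGMA_MV, SIGMA_MV, 200_000))
```

Plain sampling works at 3 sigma, but a 6-sigma bit needs on the order of 10^11 samples to see 100 failures, which is why the choice of parameter distributions and sampling techniques has to be worked out with the foundry.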

A subsequent article will focus on issues related to modeling FinFET parasitics.

As always, thanks to SemiWiki readers for their comments and feedback!

Also read:

Introduction to FinFet Technology Part I

Introduction to FinFet Technology Part II

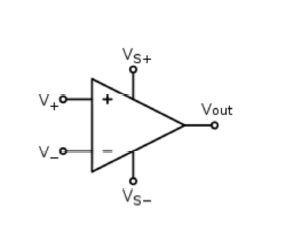

How accurate are AMS Behavioral Models?

You often hear the mantra that Analog Mixed-Signal (AMS) behavioral models are inaccurate. It is repeated so often that it starts to morph into “AMS models are inherently inaccurate”. This is not true.

Source: Wikipedia


Internet of Things, My Bluetooth Headset

A catchy phrase used by bloggers and journalists these days is “Internet of Things“, or IoT if you prefer acronyms. All of this is made possible by EDA tools in the hands of SoC designers who create useful products like my Jawbone ICON Bluetooth headset. Tonight I discovered that I could customize my Bluetooth headset by installing some software on my MacBook Pro and also on my Android-powered Samsung Infuse smartphone.

Apache on Signal Integrity

Matt Elmore has a two-part blog (Part 1, Part 2) about the growing complexity of signal integrity analysis, both on the chip itself and in making sure that signals (and power) get in and out of the chip from the board cleanly, especially to memory. That requires simultaneous analysis of the chip-package-system, or CPS.

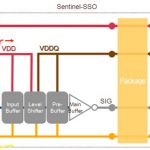

A particular problem is analyzing simultaneous switching outputs (SSO) when using DDR to access off-chip memory. The big challenge in CPS signal integrity simulation is the sheer complexity. It is simply not possible just to take all the relevant parts of the design and throw them into a SPICE simulator. The power grid alone may have millions of nodes. One potential solution is to divide the simulation up into smaller pieces, such as analyzing each byte separately, but that means that interaction between adjacent bytes is assumed to be irrelevant, which might turn out to be a very expensive assumption if wrong. The reality is that SSO issues are a global problem (many outputs switching together affect the power supply voltage across all I/Os and indeed the core of the chip).
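A first-order L·di/dt estimate illustrates why byte-by-byte partitioning can be an expensive assumption. The sketch assumes a deliberately crude, hypothetical supply model: each byte lane has its own local inductance, plus a shared inductance that carries the current of every switching driver in the bank. All inductance and di/dt values are made-up example numbers, and this is not how Sentinel-SSO or any specific tool models the I/O ring.

```python
def byte_supply_noise_v(bits_per_byte: int, total_switching_bits: int,
                        l_local_h: float, l_shared_h: float,
                        di_dt_a_per_s: float) -> float:
    """First-order L*di/dt supply noise seen by one byte lane.

    local:  the byte's own drivers through its own (unshared) supply inductance.
    shared: every switching driver in the bank through a common supply/return path.
    """
    local = l_local_h * bits_per_byte * di_dt_a_per_s
    shared = l_shared_h * total_switching_bits * di_dt_a_per_s
    return local + shared


if __name__ == "__main__":
    DI_DT = 10e-3 / 100e-12             # hypothetical: 10 mA per driver ramping in 100 ps
    L_LOCAL, L_SHARED = 50e-12, 10e-12  # hypothetical inductances, henries
    per_byte = byte_supply_noise_v(8, 8, L_LOCAL, L_SHARED, DI_DT)
    full_bank = byte_supply_noise_v(8, 128, L_LOCAL, L_SHARED, DI_DT)
    print(f"byte-by-byte analysis: {per_byte * 1e3:.0f} mV")
    print(f"full 128-bit bank:     {full_bank * 1e3:.0f} mV")
```

Even with a shared inductance five times smaller than the local one, the full-bank estimate is several times the per-byte one, because the shared term scales with every bit in the bank rather than with one byte.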

A better approach is to accurately capture all the relevant characteristics in a considerably reduced model, with an enormously shorter runtime. This has been possible for the power delivery network (PDN) for some time, but advances in channel model reduction now retain the required accuracy while modeling the whole system from end to end: die, package, PCB and memory. Previous-generation simulation technology is simply inadequate for simulating full I/O banks (128+ bits) in a reasonable time, so accurate modeling is the key to successful high-speed (17Gb/s) interface designs.

I/O buffer performance is highly susceptible to on-die power noise. I/O buffers firing simultaneously draw current from the power supply in sharp increments, resulting in voltage drop and a shared fluctuation of the effective supply level (Vdd-Vss). To take this into account, the power/ground routing of the I/O ring must also be modeled. Leveraging a chip power model with power/ground extraction and reduction technology helps create a compact model of the resistive, capacitive, and inductive coupling of the I/O ring power grid.

SPICE is still the de facto simulation engine for DDR signal and power integrity simulation. Using a tool such as Apache’s Sentinel-SSO helps bundle these modeling technologies together in a single interface designed for signal integrity. The netlisting and connection of these models is automated by the tool and fed into the SPICE simulator of choice. After simulation, the timing, jitter, and noise waveforms and metrics are displayed for review. Previously, full-channel SSO simulations of the chip, package, and PCB were unobtainable; now designers have advanced modeling technology to sign off their systems with reasonable turnaround times.

EDS Fair: Dateline Yokohama

Electronic Design and Solutions Fair (EDSF) was held in Yokohama, Japan, from Wednesday to Friday last week. It was held at the Pacifico Hotel, where I have stayed several times, not far from the Yokohama branch of the Hard Rock Cafe and what used to be, at least, the biggest Ferris wheel in the world.

Atrenta was one of the many companies at EDSF2012. For the second year, the EDS Fair combined what used to be a pure EDA show with the larger embedded show. Since Japan tends to be at the forefront of systems thinking, with Europe next and the US bringing up the rear, this combination is good: it brings in people from across the whole spectrum of design, not just a succession of CAD managers from the usual suspects. This is especially important for Atrenta, since it is focused on design at a higher level, with IP and software IP combined to form systems.

Atrenta generated a good supply of high-quality leads, each qualified by either an AE or a salesperson (so this doesn’t include students, press, etc.). As at SemiWiki, students, press, PR and everyone else are very welcome, but in the end the real design and embedded engineers are the most important audience.

One thing that worked very well was using iPads to help customers learn about Atrenta’s products. Atrenta loaded product presentation PDFs onto 8 iPads. In addition to salespeople and AEs using them to explain Atrenta’s products, the iPads gave customers a way to get a deeper understanding of the products than they would typically get from perusing a panel. This proved very effective, as it allowed the team to engage with more customers than demos alone would have.

In terms of product interest, the highest was in BugScope. We believe this to be a combination of existing customers wanting to know about our newest product and designers looking for solutions to their verification challenges. Following closely behind were SpyGlass CDC, SpyGlass for FPGA (we had a presentation highlighting SpyGlass plus CDC combined with the Xilinx Vivado support), and SpyGlass Power.

Meanwhile, back on this side of the Pacific, Ed Sperling held one of his round tables on the challenges of 3D and new process nodes. Venki Venkatesh of Atrenta was one of the participants. I’ve participated myself in a couple of Ed’s round tables. Basically he records the whole thing and then transcribes it himself (it is so technical you can’t just give it to a secretary-type), cleans up the ums and ers and publishes it pretty much verbatim. You can find this one here. One of the themes, as I’ve been pointing out, is that people are going to stay on 28nm as long as possible; there is no rush to 20nm since the costs are a challenge (never mind the technical challenges).

There is also a short video version with the same people around the table here.

How much SRAM proportion could be integrated in SoC at 20 nm and below?

Once upon a time, ASIC designers integrated memories into their designs (using a memory compiler that was part of the design tools provided by the ASIC vendor); then they had to make the memory observable and controllable… and start developing the test program for the function, not a very exciting task (“AAAA” and “5555” and other vectors), check the test coverage, and try to be creative to reach the expected 99.99% magic number. I agree that this was a long time ago, but looking back at that time, you can appreciate how powerful the DesignWare Self-Test and Repair (STAR) Memory System from Synopsys, initially developed by Virage Logic, really is. Today we are talking about version 5 of the tool. Moreover, since the 2000s, most ASIC vendors have sourced their SRAM compilers externally (from Virage Logic at that time…), so ASIC designers benefit from faster, denser memories with integrated Built-In Self-Test (BIST).
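Instead of hand-written vectors like “AAAA” and “5555”, memory BIST engines commonly run March algorithms: a fixed sequence of read/write operations swept up or down the address space. The sketch below runs the textbook March C- sequence against a toy bit-oriented memory with injected stuck-at faults. It illustrates the general technique only; it is not the algorithm set implemented in STAR Memory System, and the `FaultyMemory` class is a hypothetical model that covers stuck-at faults only (no coupling, transition, or resistive faults).

```python
from typing import Dict, List, Optional, Sequence, Tuple

# One March element: address order plus the read/write operations applied at each address.
MarchElement = Tuple[str, Sequence[Tuple[str, int]]]

# Textbook March C- (10N operations): ⇕(w0) ⇑(r0,w1) ⇑(r1,w0) ⇓(r0,w1) ⇓(r1,w0) ⇕(r0).
# "any" order elements are simply run in ascending address order here.
MARCH_C_MINUS: List[MarchElement] = [
    ("any", [("w", 0)]),
    ("up", [("r", 0), ("w", 1)]),
    ("up", [("r", 1), ("w", 0)]),
    ("down", [("r", 0), ("w", 1)]),
    ("down", [("r", 1), ("w", 0)]),
    ("any", [("r", 0)]),
]


class FaultyMemory:
    """Bit-oriented memory model; `stuck_at` maps an address to the value it is stuck at."""

    def __init__(self, size: int, stuck_at: Optional[Dict[int, int]] = None):
        self.size = size
        self.cells = [0] * size
        self.stuck_at = dict(stuck_at or {})

    def write(self, addr: int, value: int) -> None:
        self.cells[addr] = self.stuck_at.get(addr, value)

    def read(self, addr: int) -> int:
        return self.stuck_at.get(addr, self.cells[addr])


def run_march(mem: FaultyMemory, elements: List[MarchElement] = MARCH_C_MINUS) -> List[int]:
    """Apply each March element over the address space; return addresses that miscompared."""
    failing = set()
    for order, ops in elements:
        addrs = range(mem.size - 1, -1, -1) if order == "down" else range(mem.size)
        for addr in addrs:
            for op, value in ops:
                if op == "w":
                    mem.write(addr, value)
                elif mem.read(addr) != value:
                    failing.add(addr)
    return sorted(failing)


if __name__ == "__main__":
    print(run_march(FaultyMemory(16, stuck_at={3: 0, 10: 1})))
```

The failing addresses reported here are exactly the raw material that diagnosis and redundancy analysis consume.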

According to Semico, the number of processors integrated into a single SoC is growing (left caption; I am not sure that 16 processors per SoC is the standard in 2012 for every SoC, but it is certainly the case for application processors for smartphones or set-top boxes), and, consequently, processor cache size is growing (middle caption), leading memory to dominate chip area, as we can see on the right of the picture. This has an immediate consequence for SoC yield: it would decrease dramatically if the designer did not use a repair capability, such as the one offered by the STAR product (for Self-Test and Repair). Another point about STAR version 5: unlike the previous version sold by Virage Logic, the tool can be used with memories generated by any vendor’s compiler. Last but not least, STAR Memory System 5 is targeted at designs implemented in 20-nm and below technologies.
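The yield argument can be sketched with a simple Poisson model. The assumptions here are hypothetical and loudly simplified: logic must be defect-free, memory is given a higher fail density than logic to stand in for variation-induced bit failures, and every memory fail can be fixed by exactly one spare regardless of where it lands. The densities and die size are illustrative numbers, not Semico or Synopsys data.

```python
import math


def poisson_cdf(k: int, lam: float) -> float:
    """P(N <= k) for N ~ Poisson(lam)."""
    term = math.exp(-lam)
    total = term
    for i in range(1, k + 1):
        term *= lam / i
        total += term
    return total


def soc_yield(die_area_cm2: float, memory_fraction: float,
              d_logic_per_cm2: float, d_memory_per_cm2: float,
              spares: int = 0) -> float:
    """Poisson die yield: logic must be defect-free, memory tolerates up to `spares` fails.

    Assumes every memory fail can be fixed by exactly one spare, regardless of location.
    """
    lam_logic = d_logic_per_cm2 * die_area_cm2 * (1.0 - memory_fraction)
    lam_mem = d_memory_per_cm2 * die_area_cm2 * memory_fraction
    return math.exp(-lam_logic) * poisson_cdf(spares, lam_mem)


if __name__ == "__main__":
    # Hypothetical 1 cm^2 die; memory fail density set higher than logic to stand in for
    # variation-induced bit failures at 20 nm.
    for frac in (0.3, 0.5, 0.7):
        no_repair = soc_yield(1.0, frac, 0.1, 1.0)
        repaired = soc_yield(1.0, frac, 0.1, 1.0, spares=4)
        print(f"memory {frac:.0%}: yield {no_repair:.1%} without repair, {repaired:.1%} with 4 spares")
```

Under these assumptions, unrepaired yield falls steadily as the memory share of the die grows, while even a handful of spares pulls it back close to the logic-limited yield.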

If you want to challenge the assertion that SoC yield would be severely impacted by integrating a higher proportion of SRAM in ICs at 20nm and below, just have a look at the above pictures:

- Double patterning introduces variation from overlay shift,

- Voltage Scaling induces local variation with voltage, and

- Random Dopant Fluctuation generates global and local Vth variation

These process variations become significant at 20nm, causing bit failures. Implementing SRAM in a system on chip is no longer a “drag and drop” action: the designer also has to take into account solid (automated) test generation and introduce repair capability, as the risk of failure is statistically… a certainty.

STAR Memory System 5 can be implemented for SRAM, as already mentioned, and also for ROM, Register File or Content Addressable Memory (CAM). The designer implements a specific wrapper interfacing with the various memories, creating a real memory sub-system comprising a master and several slaves. The master, the SMS Server, is connected to the outside world by a Test Access Port (TAP), and to several slaves (SMS Processors) through an IEEE 1500 standard bus. Each SMS Processor is connected to various memories (single- or dual-port SRAM or Register Files) through the wrappers. The test bench generation and the insertion described above are completely automated by STAR Memory System 5, which also performs diagnosis and redundancy analysis.

After the test program runs on processed wafers, failure diagnosis and fault classification allow redundancy to be implemented, thanks to the eFuse box (top right of the SoC block diagram), dramatically increasing the final SoC yield, as shown in the image below.
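Redundancy analysis turns a failing-bit map (the XY coordinates of failing cells) into a choice of spare rows and columns to program into the fuses. The sketch below uses a textbook approach: the must-repair rule (a row with more fails than the remaining spare columns can only be fixed by a spare row, and vice versa), followed by a greedy “cover the most fails” step. It is an illustration under those assumptions, not STAR Memory System’s redundancy algorithm; being greedy, it can miss solutions that an exhaustive search would find.

```python
from collections import Counter
from typing import Optional, Set, Tuple

Fail = Tuple[int, int]  # (row, column) of one failing bit cell


def allocate_spares(fails: Set[Fail], spare_rows: int,
                    spare_cols: int) -> Optional[Tuple[Set[int], Set[int]]]:
    """Pick spare rows/columns that cover every fail, or return None if unrepairable.

    Must-repair rule first, then a greedy "cover the most fails" heuristic.
    """
    remaining = set(fails)
    rows_used: Set[int] = set()
    cols_used: Set[int] = set()
    while remaining:
        rows_left = spare_rows - len(rows_used)
        cols_left = spare_cols - len(cols_used)
        row_counts = Counter(r for r, _ in remaining)
        col_counts = Counter(c for _, c in remaining)
        # A row with more fails than spare columns left can only be fixed by a spare row
        # (and symmetrically for columns).
        must_row = next((r for r, n in row_counts.items() if n > cols_left), None)
        must_col = next((c for c, n in col_counts.items() if n > rows_left), None)
        if must_row is not None:
            if rows_left == 0:
                return None
            kind, index = "row", must_row
        elif must_col is not None:
            if cols_left == 0:
                return None
            kind, index = "col", must_col
        else:
            best_row, n_row = row_counts.most_common(1)[0]
            best_col, n_col = col_counts.most_common(1)[0]
            if rows_left > 0 and (n_row >= n_col or cols_left == 0):
                kind, index = "row", best_row
            else:
                kind, index = "col", best_col
        if kind == "row":
            rows_used.add(index)
            remaining = {f for f in remaining if f[0] != index}
        else:
            cols_used.add(index)
            remaining = {f for f in remaining if f[1] != index}
    return rows_used, cols_used


if __name__ == "__main__":
    bitmap = {(2, 0), (2, 1), (2, 5), (7, 3)}
    print(allocate_spares(bitmap, spare_rows=1, spare_cols=1))
```

In the example, row 2 has three fails but only one spare column exists, so it must take the spare row; the isolated fail at (7, 3) then forces the spare column.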

To summarize, we have listed some unique features of the DesignWare STAR Memory System 5:

- Performance and area optimized architecture to efficiently test & repair thousands of memories with smaller area and less routing congestion

- Automated IP creation, hierarchical SoC insertion, integration, verification and tester-ready pattern generation

- Hardened STAR Memory System IP with Synopsys’ DesignWare memories enabling faster design closure, higher performance, higher ATPG coverage, smaller area and reduced power.

- Advanced failure analysis with logical/physical failed bitmaps, XY coordinates of failing bit cells and fault classification

Notably, this new version offers:

- New optimized memory test and repair algorithms to efficiently address memory defects, including process variation faults and resistive faults, at 20-nm and below

- New hierarchical architecture reducing test & repair area by 30% compared with the previous generation

- Hierarchical implementation and validation accelerating design cycles by allowing incremental generation, integration and verification of test and repair IP at various levels of the design hierarchy

- Support for test interfaces of high-performance processor cores, maximizing design productivity and SoC performance, see below:

You may want to read the official Press Release from Synopsys about STAR Memory System 5