This tutorial describes why analog IP is becoming increasingly important to any power management strategy and presents the major analog building blocks used to manage power and temperature in a SoC on leading-edge technology nodes.

The tremendous demand for high-performance computing devices has driven aggressive technology scaling, allowing millions of transistors to be placed on a single chip. This scaling, predicted by Moore’s Law in 1965, has led to exponential growth in dynamic power dissipation as more transistors switch at ever higher speeds. In deep-submicron technology nodes, a significant portion of the total power consumption is due to transistor leakage currents. In recent years, we have seen explosive growth in battery-operated mobile devices such as notebooks, cellular phones and tablets. In these mobile applications, energy is the most critical resource, and transistor leakage currents have a direct impact on battery life.
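The two power components named above are commonly approximated with the standard first-order CMOS model below. The symbols are introduced here for illustration and do not come from the original abstract: $\alpha$ is the switching activity factor, $C$ the switched capacitance, $V_{DD}$ the supply voltage, $f$ the clock frequency and $I_{\text{leak}}$ the total leakage current.

$$
P_{\text{total}} = \underbrace{\alpha\, C\, V_{DD}^{2}\, f}_{P_{\text{dyn}}} + \underbrace{V_{DD}\, I_{\text{leak}}}_{P_{\text{leak}}}
$$

The dynamic term grows with transistor count (through $C$) and clock rate (through $f$), while the leakage term is drawn even when circuits are idle, which is why it weighs so heavily on battery life.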

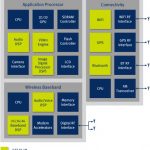

There are various design techniques for reducing power dissipation in a digital circuit, such as clock gating and power gating. A more recent technique is dynamic voltage and frequency scaling (DVFS), which lowers the supply voltage and clock frequency in parts of the system that do not require peak performance. To deploy DVFS effectively, we need voltage regulators that can respond quickly to changes in the system workload. In state-of-the-art processors, the workload and transient currents can change 100X faster than external voltage regulators can respond. Hence, there is increasing motivation in system on a chip (SoC) design to implement voltage regulators on the die.
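As a rough illustration of the DVFS idea, the sketch below picks the lowest voltage/frequency operating point that still meets a required clock rate and estimates the resulting dynamic power relative to peak. The operating-point table, the names `OperatingPoint`, `select_opp` and `relative_dynamic_power`, and the first-order model (dynamic power proportional to V²·f with activity and capacitance held fixed) are assumptions for illustration, not values or code from any ARM product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    """A hypothetical voltage/frequency pair (volts, MHz)."""
    voltage_v: float
    freq_mhz: float


# Hypothetical operating-point table, ordered from lowest to highest performance.
OPP_TABLE = (
    OperatingPoint(0.70, 400.0),
    OperatingPoint(0.80, 800.0),
    OperatingPoint(0.90, 1200.0),
    OperatingPoint(1.00, 1600.0),
)


def select_opp(required_mhz: float, table=OPP_TABLE) -> OperatingPoint:
    """Return the lowest operating point whose frequency meets the demand.

    If the demand exceeds every entry, fall back to the highest point."""
    for opp in table:
        if opp.freq_mhz >= required_mhz:
            return opp
    return table[-1]


def relative_dynamic_power(opp: OperatingPoint, ref: OperatingPoint) -> float:
    """Ratio of dynamic power at `opp` to that at `ref`, assuming
    P_dyn ~ C * V^2 * f with activity and capacitance unchanged."""
    return (opp.voltage_v / ref.voltage_v) ** 2 * (opp.freq_mhz / ref.freq_mhz)


if __name__ == "__main__":
    peak = OPP_TABLE[-1]
    for demand in (300.0, 1000.0, 2000.0):
        opp = select_opp(demand)
        ratio = relative_dynamic_power(opp, peak)
        print(f"demand {demand:.0f} MHz -> {opp.voltage_v:.2f} V, "
              f"{opp.freq_mhz:.0f} MHz, {ratio:.1%} of peak dynamic power")
```

Because dynamic power falls with the square of the voltage as well as linearly with frequency, running at the lowest adequate operating point saves much more than lowering the frequency alone; capturing that saving as the workload shifts is what calls for fast-responding regulators.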

Power dissipation is not the only problem the designer must contend with in the SoC. System workloads also create hot-spots in different regions of the die, so the temperature must be monitored to ensure that the heat generated by circuits stays below an acceptable thermal limit. Higher temperatures also increase leakage currents, reduce circuit performance and can raise package and cooling costs. Hence, temperature sensors are also an integral part of the overall energy management strategy in a SoC design.
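A minimal sketch of how on-die temperature sensors might feed back into power management: the hottest sensor reading steps a DVFS operating-point index down when it reaches a throttle threshold and back up once the die has cooled. The thresholds, the hysteresis band and the function `next_opp_index` are hypothetical; real thermal limits and control policies are product-specific.

```python
# Hypothetical thresholds in degrees Celsius; real limits are product-specific.
THROTTLE_C = 95.0  # step performance down when the hottest sensor reaches this
RELEASE_C = 85.0   # step back up only after cooling below this (hysteresis band)


def next_opp_index(sensor_temps_c, current_index, max_index):
    """Return the next index into a DVFS table (0 = lowest performance).

    The hottest sensor governs, because a single hot-spot can exceed the
    thermal limit even when the rest of the die is comfortably cool."""
    hottest = max(sensor_temps_c)
    if hottest >= THROTTLE_C and current_index > 0:
        return current_index - 1
    if hottest < RELEASE_C and current_index < max_index:
        return current_index + 1
    return current_index


if __name__ == "__main__":
    # Hypothetical readings from three sensors placed across the die.
    readings = [
        [70.0, 72.5, 88.0],
        [78.0, 81.0, 96.5],
        [76.0, 79.0, 90.0],
        [70.0, 71.0, 82.0],
    ]
    index = 3  # start at peak performance
    for temps in readings:
        index = next_opp_index(temps, index, max_index=3)
        print(f"hottest {max(temps):.1f} C -> operating point {index}")
```

The gap between the two thresholds keeps the controller from oscillating when a hot-spot hovers near the limit.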

The design of analog circuits on deep-submicron process nodes is very sensitive to layout and demands careful attention to detail. Analog circuits also suffer from reduced voltage headroom and require careful isolation from noisy switching digital circuits. In addition, integrating analog blocks requires the foundry to support transistors running at higher voltages, using thick-oxide devices with longer channel lengths to help reduce device variability. This usually complicates the validation effort, since analog and digital circuits have to be tested together, which increases the design cycle time.

In summary, to effectively manage both power dissipation and temperature on the die, modern SoCs need to integrate an increasing number of analog functions. Digital IP is readily available from most foundries, but analog IP usually is not. This means analog IP needs to be part of the overall SoC design strategy from the beginning and should not be left as an afterthought.

ARM TechCon

Bal Sandhu | Engineer, ARM Inc.

Session Code: ATC-116

Location: Grand Ballroom F

Date: Tuesday, October 29

Time: 1:30pm-2:20pm

Track: Maximizing Chip Energy Efficiency

Area of Interest: Chip Design, SoC Implementation
