I met with Tom Quan of TSMC and Michael Beuler-Garcia of Mentor last week. Weirdly, Mentor’s newish buildings are the old Avant! buildings where I worked for a few weeks after selling Compass Design Automation to them. Odd sort of déjà vu. Historically, TSMC has operated with EDA companies in a fairly structured way: TSMC decided what capabilities were needed for their next process node, specified them, and then the EDA companies developed the technology. It wasn’t quite putting out an RFP: in general TSMC wasn’t paying for the development, and the EDA companies would recover their costs and more from the mutual customers using the next node.

The problem with this approach is that it doesn’t really allow for innovation that originates within the EDA companies. Mentor and TSMC have spent the last couple of years working very cooperatively on a flow for checking ESD (electrostatic discharge), latchup and EOS (electrical overstress). All of these can permanently damage the chip. ESD can be a major problem during manufacture, manufacturing test, assembly and even in the field. EOS causes oxide breakdown (some one-time programmable memories use this deliberately to program the bit cells, but when it kills other kinds of transistors it is a big problem). Like most things, it is getting worse from node to node, especially at 20nm and 16nm. The gate oxide is getting thinner and so it is simply easier to damage. FinFETs are even more fragile.

Historically TSMC has had layout design rules for these types of issues. But they required marker layers to be added to the cells to indicate which checks should be done in which areas. This causes two problems. Adding the marker layers is tedious and not really very productive work. Worse, if the marker layers are wrong then checks can be silently omitted, often without any DRC violation to give a hint that there is a problem. Another issue is that the design rules from 20nm on are sometimes voltage-dependent, again a problem that was addressed historically with marker layers. Even then, not all rules could be checked. In fact, previously 35% of rules could not be checked and 65% required marker layers to check.

This is increasingly a problem. It is not life-threatening if the application processor in your smartphone fails (although it is more than annoying). But medical, automotive and aerospace have fast-growing electronic content and much higher reliability requirements. If your ABS or your pacemaker fails it is a lot more than annoying.

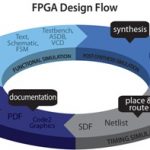

So Mentor and TSMC decided that they wanted a checking flow that didn’t require marker layers and covered all the rules. It would need to pull in not just layout data but also netlist and other electrical data (voltage-dependent design rules require knowing the voltages). The flow is intended for checking IP as part of the TSMC9000 IP quality program.

This is built on top of Mentor’s PERC (programmable electrical rule checker). They focused on three areas where these problems occur:

- I/Os (ESD is mostly a problem in I/Os)

- IP with multiple power domains

- analog
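To make the idea of checking from the netlist rather than from marker layers concrete, here is a toy sketch in Python. It is not Calibre PERC rule syntax and not a TSMC rule: the `Device` record, the `"esd_diode"` device kind and the `unprotected_pads` function are all hypothetical names invented for illustration. The only point is that the region to be checked (the I/O pads) is found from connectivity, so nobody has to draw a marker layer by hand.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Device:
    name: str
    kind: str     # hypothetical device class, e.g. "esd_diode" or "nmos"
    nets: tuple   # nets that the device terminals connect to

def unprotected_pads(devices, pad_nets, rails):
    """Return pad nets that have no ESD device tying them to a supply rail."""
    protected = set()
    for d in devices:
        if d.kind != "esd_diode":
            continue
        pads = set(d.nets) & set(pad_nets)
        # The device only counts as protection if it also reaches a rail.
        if pads and set(d.nets) & set(rails):
            protected |= pads
    return sorted(set(pad_nets) - protected)

# Made-up netlist: PAD_A has diodes to both rails, PAD_B has none.
devices = [
    Device("D1", "esd_diode", ("PAD_A", "VDD")),
    Device("D2", "esd_diode", ("VSS", "PAD_A")),
    Device("M1", "nmos", ("PAD_B", "n1", "VSS")),
]
print(unprotected_pads(devices, ["PAD_A", "PAD_B"], ["VDD", "VSS"]))
```

A real ESD check is far more involved (discharge paths, device sizing, resistance along the path), but the structure is the same: the tool works out from the circuit which nets matter instead of trusting a hand-drawn annotation.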

Voltage-dependent DRC checking is another area of cooperation. Many chips today have multiple voltages. In automotive and aerospace these may include high voltages and, as a general rule, widely separated voltages require widely separated layout on the chip to avoid problems. Again, the big gains in both efficiency and reliability come from avoiding marker layers.
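As a rough illustration of what "voltage-dependent" means here, the sketch below looks up a minimum spacing from the voltage difference between two neighbouring nets and flags pairs that are too close. Everything in it is an assumption made for the example: the `SPACING_RULES` table, its voltage and micron values, and the function names are invented, and none of it reflects actual TSMC design rules or Calibre syntax.

```python
# Hypothetical rule table: (max voltage difference in V, min spacing in um).
# The numbers are invented for illustration only.
SPACING_RULES = [(1.8, 0.10), (3.3, 0.20), (5.0, 0.40)]

def required_spacing(v1, v2, rules=SPACING_RULES):
    """Return the minimum spacing for two nets at voltages v1 and v2."""
    dv = abs(v1 - v2)
    for max_dv, spacing in rules:
        if dv <= max_dv:
            return spacing
    raise ValueError(f"no rule covers a voltage difference of {dv} V")

def spacing_violations(net_voltages, neighbours):
    """neighbours is a list of (net_a, net_b, actual_spacing_um) tuples."""
    violations = []
    for a, b, actual in neighbours:
        need = required_spacing(net_voltages[a], net_voltages[b])
        if actual < need:
            violations.append((a, b, actual, need))
    return violations

# Made-up design data: net voltages come from the netlist, not from markers.
voltages = {"VDD_CORE": 0.9, "VDD_IO": 3.3, "VSS": 0.0}
neighbours = [
    ("VDD_CORE", "VSS", 0.10),
    ("VDD_IO", "VSS", 0.15),
    ("VDD_IO", "VDD_CORE", 0.25),
]
print(spacing_violations(voltages, neighbours))
```

The key input is the per-net voltage. With marker layers a designer has to annotate each region with its voltage class by hand; in a flow like the one described, that information is derived from the netlist and electrical data.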

The current status is that Calibre PERC is available for full-chip checking at 28nm and 20nm, with 16nm in development. As part of the TSMC9000 IP program it is available for IP verification for 20SoC, 16FF and 28nm. Use of Calibre PERC is currently just a recommendation, but it will become a requirement at 20nm, 16nm and below.