Toyota Motor North America CEO James Lentz got a letter from the U.S. Federal Communications Commission (FCC) last week recognizing Toyota’s announced plan to deploy Dedicated Short Range Communications (DSRC) technology on Toyota and Lexus vehicles sold in the U.S. beginning with MY21. The extraordinary letter notes that Toyota’s decision comes 20 years after the FCC allocated spectrum for DSRC technology, but cautions that Toyota ought to weigh such significant capital investments against the emergence of superior competing solutions, most notably cellular-based C-V2X.

China Semiconductor Equipment China Sales at Risk

We have been on a roller coaster ride of on-again, off-again trade talks between China and the US. It is unclear where we stand from day to day, but of late it appears that we are not seeing much progress, and some progress we thought we had made may not have actually happened.

Webinar: IP Quality is a VERY Serious Problem

We just completed a run-through of the upcoming IP & Library QA webinar that I am moderating with Fractal, and let me tell you, it is a must-see for management at semiconductor design and semiconductor IP companies as well as the foundries. Seriously, if you are an IP company you had better be up on the latest QA checks if you want to do business with the leading-edge foundries and semiconductor companies.

The secret weapon here is presenter Felipe Schneider, Director of Field Operations at Fractal. Felipe will take us through the agenda followed by a demonstration of Crossfire, ending with questions and answers. Felipe is the frontline interface to Fractal customers in North America which includes many of the top semiconductor companies and IP providers so he knows IP QA. Felipe also knows what QA checks are being done at the different process nodes down to 7nm and what new checks are coming at 5nm (crowdsourcing). This alone is worth an hour of your day.

IP & Library QA with Crossfire: Wed, Jun 6, 2018 9:00 AM – 10:00 AM PDT

There is no industry where the need for early bug detection is more paramount than in SoC design. Consequences like design re-spins, missed tape outs and hence missed market opportunities make the cost of late bug detection prohibitive. Where earlier generations of SoC designs could be crafted by a team of limited size that could oversee the entire design process, design in the latest process nodes requires a different strategy.

Designer productivity is lagging behind Moore’s law, which drives the increase in transistor density. Thus design teams are becoming larger and are composed of multiple groups spread over the globe. Outsourcing of design tasks by integrating third-party IP is mandatory to get the job done, but it reduces oversight of the SoC design process and leaves companies at the mercy of the quality strategy implemented by their suppliers. At the same time, modelling of new physical effects using current-driver, variation and electro-migration models, paired with an increased number of PVT corners, generates an explosion of data to be analyzed prior to sign-off.

It is clear that QA needs to be a responsibility shared by all partners in the SoC design flow, from library and IP providers to foundry and SoC integrators. Each of these partners needs an integrated QA solution in their part of the design flow. QA should never be an afterthought to be checked off right before IP delivery. This webinar covers how the Fractal Technologies Crossfire solution addresses these QA challenges from both backend and frontend perspectives, and why its standardized and scalable QA methodology is superior to homebrew validation solutions.

About Crossfire

Mismatches or modelling errors in libraries or IP can seriously delay an IC design project. Because of the still-increasing number of different views required to support a state-of-the-art deep submicron design flow, as well as the complexity of the views themselves, library and IP integrity checking has become a mandatory step before the actual design can start.

Crossfire helps CAD teams and IC designers perform integrity validation for libraries and IP. Crossfire makes sure that the information represented in the various views is consistent across these views, improving the quality of your design formats.

About Fractal Technologies

Fractal Technologies is a privately held company with offices in San Jose, California and Eindhoven, the Netherlands. The company was founded by a small group of highly recognized EDA professionals.

Welcome DDR5 and Thanks to Cadence IP and Test Chip

Will we see DDR5 memory (device) and memory controller (IP) in the near future? According to Cadence, which has released the first test chip in the industry integrating DDR5 memory controller IP, fabricated in TSMC’s 7nm process and achieving a data rate of 4400 megatransfers per second (MT/sec), the answer is clearly YES!

Let’s come back to DDR5, in fact a preliminary version of the DDR5 standard being developed in JEDEC, and the memory controller achieving 4400 megatransfers per second. This means that the DDR5 PHY IP is running at 4.4 Gb/s per pin, quite close to the 5 Gb/s achieved by the PCIe 2.0 PHY 10 years ago, in 2008. At that time, 5 Gb/s was the state of the art for a SerDes, even if engineering teams were already working to develop faster SerDes (6 Gb/s for SATA 3 and 10 Gb/s for Ethernet). Today, the DDR5 PHY will be integrated in multiple SoCs, at first in those targeting the enterprise market: servers, storage and data center applications.

These applications are known to always require more data bandwidth and larger memories. But we know that, in the data center, power consumption has become the #1 cost source, leading to incredibly high electricity bills and more complex cooling systems. If you increase the data width for the memory controller while increasing the speed at the same time (as is the case with DDR5) but with no power optimization, you may end up with an unmanageable system!

This is not the case with this new DDR5 protocol, as the energy per bit (pJ/b) has decreased. But the need for much higher bandwidth translates into larger data bus width (128-bit wide) and the net result is to keep the power consumption the same as it was for the previous protocol (DDR4). In summary: larger data bus x faster PHY is compensated by lower energy/bit to keep the power constant. The net result is higher bandwidth!
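The trade-off described above can be checked with back-of-the-envelope arithmetic: interface power is roughly bus width times per-pin rate times energy per bit. The sketch below uses made-up numbers for a hypothetical "previous" and "new" interface, chosen only so that the power comes out equal; they are not measured DDR4 or DDR5 figures, and the function names are illustrative.

```python
def peak_bandwidth_gbytes_per_s(bus_width_bits: int, rate_gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: pins x per-pin rate (Gb/s) / 8 bits per byte."""
    return bus_width_bits * rate_gbps_per_pin / 8


def interface_power_watts(bus_width_bits: int, rate_gbps_per_pin: float,
                          energy_pj_per_bit: float) -> float:
    """Interface power in watts: bits moved per second x energy per bit."""
    bits_per_second = bus_width_bits * rate_gbps_per_pin * 1e9
    return bits_per_second * energy_pj_per_bit * 1e-12


# Hypothetical configurations for illustration only; NOT real DDR4/DDR5 data.
previous = dict(bus_width_bits=64, rate_gbps_per_pin=2.2, energy_pj_per_bit=20.0)
new = dict(bus_width_bits=128, rate_gbps_per_pin=4.4, energy_pj_per_bit=5.0)

for name, cfg in (("previous", previous), ("new", new)):
    bw = peak_bandwidth_gbytes_per_s(cfg["bus_width_bits"], cfg["rate_gbps_per_pin"])
    power = interface_power_watts(**cfg)
    print(f"{name}: {bw:.1f} GB/s at {power:.3f} W")
```

With these assumed values, doubling both the bus width and the per-pin rate quadruples the bandwidth, and a fourfold drop in energy per bit keeps the power unchanged.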

You have probably heard about other emerging memory interface protocols, like High Bandwidth Memory 2 (HBM2) or Graphics DDR5 (GDDR5), and may wonder why the industry would need another protocol like DDR5.

The answer is complexity, cost of ownership and wide adoption. It’s clear that all the DDRn protocols, as well as the LPDDRn protocols, have been dominant and have seen the largest adoption since their introduction. Why will DDR5 have the same future as a memory standard?

If you look at HBM2, this is a very smart protocol: the data bus is incredibly wide while the clock rate stays fairly low (a 1024-bit-wide bus gives 256 GB/s of bandwidth). The catch is that you need to implement 2.5D silicon technology by means of an interposer. This is a much more complex technology leading to much higher cost, due to the extra packaging cost of building 2.5D, and also because the lower production volume for the devices should in theory lead to a higher ASP.
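To see how low the HBM2 per-pin rate is, the figures quoted in this article can be plugged into a short calculation. This is a minimal sketch; the helper name is illustrative, and it assumes one bit per transfer on each data pin.

```python
def per_pin_rate_gbps(bandwidth_gbytes_per_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate in Gb/s needed to reach a given bandwidth in GB/s."""
    return bandwidth_gbytes_per_s * 8 / bus_width_bits


# HBM2 figures quoted in the article: 1024-bit bus, 256 GB/s.
hbm2_rate = per_pin_rate_gbps(256, 1024)

# DDR5 test chip figure quoted in the article: 4400 MT/s,
# which is 4.4 Gb/s per pin at one bit per transfer per pin.
ddr5_rate = 4400 / 1000

print(f"HBM2: {hbm2_rate:.1f} Gb/s per pin, DDR5 test chip: {ddr5_rate:.1f} Gb/s per pin")
```

The wide bus lets each HBM2 pin run at less than half the rate of the DDR5 test chip, which is why the cost moves from signaling speed to the interposer and packaging.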

GDDR5X (standardized in 2016 by JEDEC) targets a transfer rate of 10 to 14 Gbit/s per pin, which is clearly a higher speed than DDR5, but it requires re-engineering the PCB compared with the other protocols. That sounds more complex and certainly more expensive. Last point: if HBM2 has been adopted for systems where the bandwidth need is such that you can afford the extra cost, GDDR5X fills a gap between HBM2 and DDR5, and this sounds like the definition of a niche market!

If your system allows you to avoid it, you shouldn’t select a protocol seen as a niche. The lower the adoption, the lower the production volume and the lower the competitive pressure on device ASP, so the risk of paying a higher price per megabyte of DRAM is real.

If you have to integrate DDR5 in your system, most probably because your need for higher bandwidth is crucial, the Cadence DDR5 memory controller IP will offer you two very important benefits: low risk and fast time to market (TTM). Considering that early adopters have already integrated Cadence IP in TSMC 7nm, the risk is becoming much lower. Bringing a system to market faster than your competitors is clearly a strong advantage, and Cadence is offering this TTM benefit. Last point: the Cadence memory controller IP has been designed to offer high configurability, to fit your application needs.

From Eric Esteve (IPnest)

For more information, please visit: www.cadence.com/go/ddr5iptestchip.

Managing Your Ballooning Network Storage

As companies scale by adding more engineers, there is a tendency to spread across multiple design sites as they strive to hire the best available talent. Multi-site development also affects startups as they try to minimize their burn rate by having an offsite design center in a location such as India, China or Vietnam.

Both IoT and automotive companies are becoming dependent on 5G and AI as their key drivers, fueling a trend toward more heterogeneous design projects. At the heart of this increased design collaboration among multiple companies across different sites is the need to address how design data creation, sync-ups and handoffs are done. We will look into some critical success factors in this area and discuss the available solutions.

EDA flows and design teams

A typical design flow begins when RTL is developed for a specific design specification and then synthesized to gate level, followed by placement optimization, clocking, routing and parasitic extraction intended for timing signoff. It is common to have many process owners across these implementation stages. Once design development starts, each designer tends to copy the entire project data into their workspace and annotate their work to move the design realization closer to layout.

The process gets iterated multiple times, some with smaller loops such as in performing localized placement changes and redoing the route, while others may cover many steps such as doing ECOs, or even may trigger a loop-back to the starting point (for example when upstream input files such as RTL version, tool version or run settings change). During each of these events, more design data get duplicated, ballooning up the design data usage (refer to figure 1a).

In addition, early design targets such as libraries and foundation and design IP, which absorb new technology specifications and precede a system design implementation, are subject to frequent version refreshes, triggering downstream updates of the design implementation cycle and eventually more large-scale data duplication.

In many instances, temporary measures such as local compression with gzip or tar commands are attempted, as designers have limited time to wrestle with the growing design data. This can become a nuisance and still does not prevent the data duplication issue.

A hardware configuration management solution such as ClioSoft SOS7 resolves some of the issues faced with the ballooning network storage by greatly simplifying access to the real time database and efficiently managing the different revisions of the design data during design development as illustrated in figure 1b.

It enables distributed reference and reuse through the use of primary and cache servers. Both servers provide controls for comprehensive data management across sites, enabling effective team collaboration by allowing data transparency and reducing data duplication. Any design team has several members who need constant access to certain parts of the design data. SOS7 enables better automation by providing the notion of a configuration, wherein designers can access the necessary files based on the role they play in the team. While this improves disk space usage by avoiding copies of unneeded files, it also adds a layer of security by restricting which files each role can access. It integrates seamlessly with many other EDA flows and allows GUI-driven customization, so designers can browse libraries and design hierarchies, examine the status of cells, and perform revision control operations without leaving the design environment or learning a new interface.

Usage of network storage

Design development usually starts with one or two shared disk partitions, typically ranging from just over one hundred to 250 GB. Over several project integration snapshots, new additions to the team and several implementation stages, the network storage assigned to the project can easily reach several terabytes. At this stage, design teams often resort to segregating team data into clusters of disks, such as for front-end, backend and verification, which may address ownership or usage tracking but does not address the main issue of managing the ballooning network storage usage (figure 2a).

Network storage also grows because of engineers’ reluctance to discard what is perceived as unwanted data. Most data has a lifespan and becomes irrelevant after some time, but both project managers and designers tend to retain most of the generated data, including intermediate data, until stable results are achieved.

The cost of maintaining the project data footprint is not limited to supporting the given workarea capacity; it also includes IT providing redundant builds for backup purposes. The growing number of physical disk partitions also affects overall data access performance and IT maintenance effort, as the recommended threshold for mountable disks could be exceeded.

The solution

ClioSoft’s SOS7 design management platform addresses the issue of managing ballooning network storage by using smartlinks-to-cache to link the files that populate the workareas. All directories and files are essentially treated as symbolic links, which ensures that the populated workspace is quickly accessible and uses minimal disk space (illustrated in figures 2b and 2c).
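The general idea of populating a workarea with links to a shared cache, rather than copies, can be sketched in a few lines of Python. This is an illustration of the concept only, not ClioSoft's implementation: the function name `populate_workarea` and the directory layout are hypothetical, and unlike the description above it creates real directories and links only the files.

```python
import os
import tempfile
from pathlib import Path


def populate_workarea(cache_root: Path, workarea: Path) -> int:
    """Mirror cache_root's directory tree under workarea, creating a symbolic
    link to each cached file instead of copying it. Returns the link count."""
    cache_root = cache_root.resolve()
    linked = 0
    for dirpath, _dirnames, filenames in os.walk(cache_root):
        target_dir = workarea / Path(dirpath).relative_to(cache_root)
        target_dir.mkdir(parents=True, exist_ok=True)
        for name in filenames:
            link = target_dir / name
            if not os.path.lexists(link):  # leave existing files or links alone
                os.symlink(Path(dirpath) / name, link)
                linked += 1
    return linked


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Hypothetical cache holding one large binary view.
        cache = Path(tmp) / "cache"
        (cache / "blockA").mkdir(parents=True)
        (cache / "blockA" / "layout.gds").write_bytes(b"\0" * 1_000_000)
        work = Path(tmp) / "work_alice"
        count = populate_workarea(cache, work)
        link = work / "blockA" / "layout.gds"
        print(count, "link(s) created; is symlink:", link.is_symlink())
```

Each additional workarea populated this way costs only the links themselves, however large the cached binaries are.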

This becomes especially useful when the binaries are large and coupled with a big design hierarchy. It is a key differentiator, as other SCM solutions are built to deal with text files and do not address this; solutions built on top of these SCMs have to either use hardware solutions or make modifications to the file system to achieve network storage space savings.

SOS7 has been architected to ensure high performance between the main server and the remote caches. Any time a user wants to modify a file or a directory, it is quickly downloaded from the local cache or the main repository. SOS7 also provides the notion of a composite object, which manages a set of files as one entity.

When running the EDA software, a number of files are generated, some of which change with each run. Designers often like to keep the generated data from multiple runs, as it enables them to compare the results and revert to a previous design state if needed. SOS7 manages these files efficiently by treating the generated files as a composite object, without duplicating any files that do not change between runs of the EDA tool, thereby giving designers the flexibility they desire while minimizing network storage usage.
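One common way to keep several runs without duplicating unchanged files is content-addressed storage: each run is recorded as a manifest of file hashes, and each distinct file content is stored once. The sketch below illustrates that general technique; it is not how SOS7 implements composite objects, and `snapshot_run` and the file names are hypothetical.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path
from typing import Dict


def snapshot_run(run_dir: Path, store: Path) -> Dict[str, str]:
    """Record one tool run as a manifest {relative path: SHA-256 of content}.
    File content is copied into `store` only if that content is not there yet."""
    store.mkdir(parents=True, exist_ok=True)
    manifest: Dict[str, str] = {}
    for path in sorted(run_dir.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            blob = store / digest
            if not blob.exists():  # unchanged content is never stored twice
                shutil.copyfile(path, blob)
            manifest[path.relative_to(run_dir).as_posix()] = digest
    return manifest


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        store = root / "store"
        # Two hypothetical runs: the netlist is identical, the report differs.
        for run, report in (("run1", "slack -0.12"), ("run2", "slack +0.03")):
            (root / run).mkdir()
            (root / run / "netlist.v").write_text("module top; endmodule\n")
            (root / run / "timing.rpt").write_text(report)
            snapshot_run(root / run, store)
        print("files kept for 2 runs:", len(list(store.iterdir())))
```

Both runs can be reconstructed from their manifests, while the unchanged netlist occupies disk space only once.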

Disk space cost

Measuring disk space costs in dollars per gigabyte covers only half of the equation. One needs to consider additional cost drivers and dispel the common myth that storage total cost of ownership depends on total capacity alone. For example, overhead attributed to reduced capacity due to derating, array-based redundancy and the file system, coupled with the cost of power, cooling and floor space, could together add 40 to 70 percent to the cost.
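As a rough illustration of that 40 to 70 percent overhead, the calculation below applies both ends of the range to a raw cost. The capacity and price per gigabyte are hypothetical placeholders, and the function name is illustrative.

```python
def effective_storage_cost(capacity_gb: float, price_per_gb: float,
                           overhead_fraction: float) -> float:
    """Raw capacity cost plus a fractional overhead (derating, redundancy,
    file system, power, cooling, floor space)."""
    return capacity_gb * price_per_gb * (1 + overhead_fraction)


# Hypothetical project: 10 TB at an assumed $0.10 per GB.
capacity_gb = 10_000
price_per_gb = 0.10

raw = effective_storage_cost(capacity_gb, price_per_gb, 0.0)
low = effective_storage_cost(capacity_gb, price_per_gb, 0.40)
high = effective_storage_cost(capacity_gb, price_per_gb, 0.70)
print(f"raw ${raw:,.0f}, with overhead ${low:,.0f} to ${high:,.0f}")
```

The same multiplier applies to every duplicated copy of the design data, so reducing duplication saves more than the raw dollars-per-gigabyte figure suggests.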

Other challenges include the need to provision separate storage systems for data backup. All of this disk capacity demand is becoming prohibitive, as a limited budget is usually imposed on a project. Hence, resolving network space usage at its root, that is, through efficient data creation and management, should help ensure adequate project storage capacity and performance.

ClioSoft SOS7 delivers features addressing this need by enabling reuse of design dependencies (such as flexible project partitioning including IP and PDKs), comprehensive version control mechanisms (such as support for creating project derivatives through branching) and the previously discussed composite-object referencing.

For more info on SOS7 case studies or whitepaper, click HERE.

Also Read

HCM Is More Than Data Management

IoT SoCs Demand Good Data Management and Design Collaboration

ISO 26262: My IP Supplier Checks the Boxes, So That’s Covered, Right?

Everyone up and down the electronics supply chain is jumping on the ISO 26262 bandwagon and naturally they all want to show that whatever they sell is compliant or ready for compliance. We probably all know the basics here – a product certification from one of the assessment organizations, a designated safety manager and a few other safety folks and some form of safety process. Sound like easy boxes to check? Unfortunately for you the integrator, the basics may no longer be enough to complete your deliverables for your customers with respect to that IP.

Kurt Shuler, VP of Marketing at Arteris IP, sits on the ISO 26262 working group (since 2013), along with partner ResilTech (functional safety consultants, on the WG since 2008), so they’re pretty well tuned to industry expectations. What Kurt observes is that default assumptions about what level of investment is required to fully meet the standard can often fall short.

The ISO standard ultimately wants to validate the safety of systems, not bits of systems, because there’s no guarantee in safety that the whole will be the sum of the parts. But waiting until the car is designed before checking safety would be unmanageable, so a lot of requirements are projected back onto earlier steps in the chain. Each supplier in the chain must demonstrate compliance not only in their product, including all aspects of configuring and implementing safety-critical components, but also in their process and their people. And proof of these safety-related activities must be communicated up the supply chain.

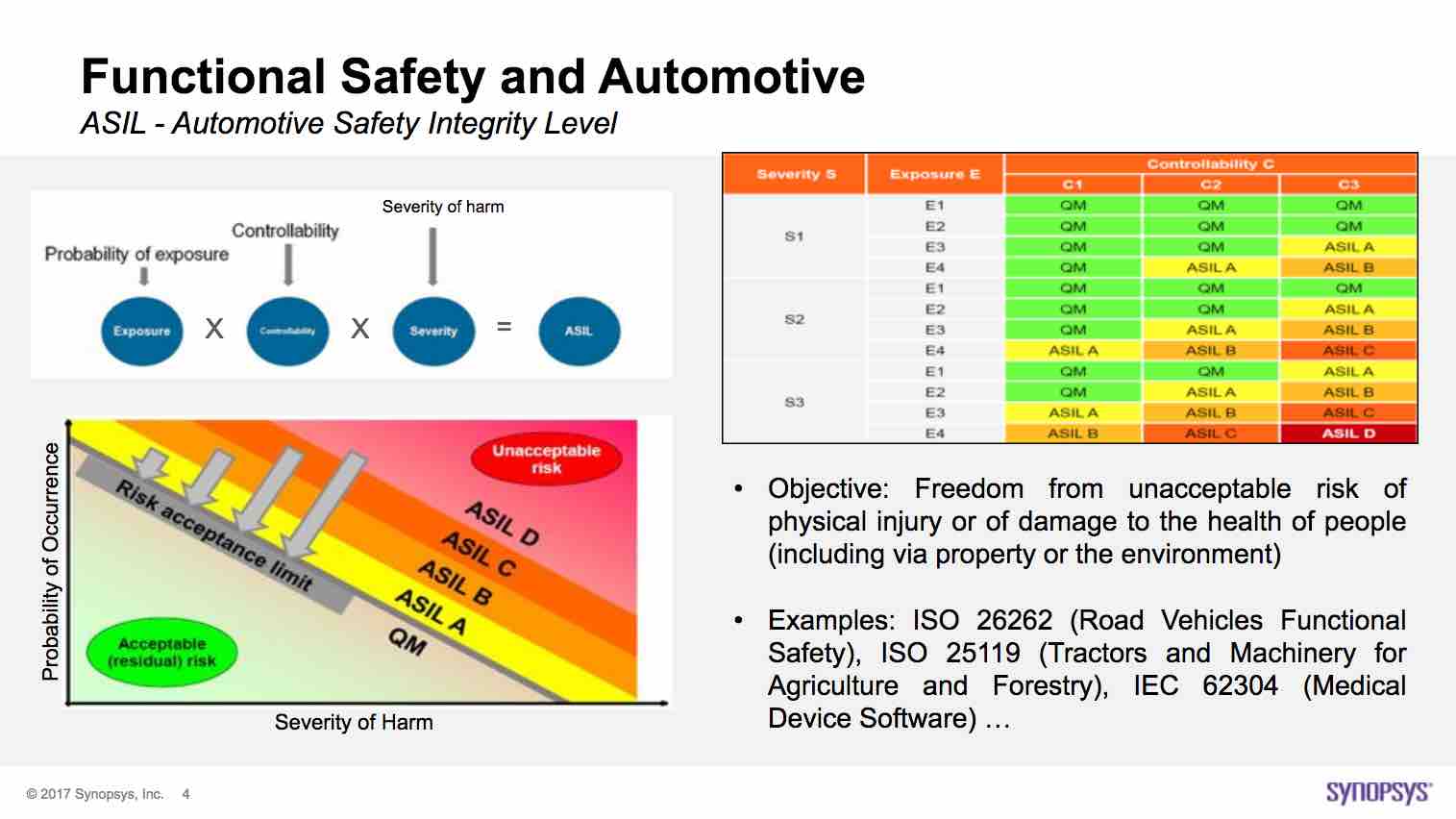

Further ramping expectations, as ADAS capabilities extend and as autonomy continues to advance, the automotive industry is apparently increasingly pushing for ASIL D (the highest level) compliance at the SoC level, which is less expensive in total system cost than using ASIL-B SoCs (the common expectation today) to reach ASIL-D at the system level through duplication. ASIL-D requirements are naturally much more rigorous, tightening the screws not only on the SoC integrators but also on their suppliers.

Kurt calls out three areas where default expectations fall short in what is good enough for compliance. The first is in people alignment with safety. A common view is that you can train or hire a functional safety manager (FSM) and a small number of safety engineers whose role is to ensure that everyone else stays on track. While this may meet the letter of the standard, apparently it doesn’t meet the spirit (a strong safety culture) and the spirit is likely to be a better guide as expectations rise. A more robust approach drives safety training more extensively through the organization, in addition to having an experienced FSM.

The second area is in process where quality management, change management, verification and traceability are all important. This is partly about tools (we all love tools) but more importantly about consistent and continual use of the processes defined around these areas. It is certainly helpful to use certified tools or to get certification for internal tools, but that doesn’t mean you’re done. Your customers will perform independent audits of your processes because they’re on the hook to prove that their suppliers follow the standard in all relevant aspects. Of course you could all learn in real-time and correct as needed but obviously it will be less painful and less expensive to work with partners who are already experienced and proven in prior audits.

The third area is around product compliance. From my understanding, general alignment on expectations may be better here, simply because we have tended to focus primarily on product objectives. The expectations of who is responsible for what between supplier and customer, in terms of assumptions of use, configuring a function, implementation and so on are documented in the Development Interface Agreement (DIA); this needs to be demonstrated as a part of compliance. Assuming these steps are followed, there is one area Kurt feels product teams need to ramp up their investment – in development of the initial failure mode effects analysis (FMEA). I hope to write more on this topic in a subsequent blog; for now, here’s a quick teaser. We engineers are biased to jumping straight to design and verification – concrete and quantitative steps. But FMEA is a qualitative analysis, assessing possible ways in which a design (or sub-design) could fail and the possible effects of those failures. This is the grounding for where you then decide to insert safety/ diagnostic mechanisms and how you measure the effectiveness of those mechanisms through fault analysis.

Kurt also stresses what I think is an important consideration for any SoC supplier when considering an IP solution. Ultimately the SoC company owns responsibility for demonstrating compliance to the standard to their customers. That’s a lot of work and expense so they can reasonably question the IP supplier on how they are going to make that job easier. One aspect is in people training – is the supplier meeting the letter of compliance or comfortably aligned with the spirit? One is in process – using approved tools and flows certainly but also being experienced in audits and already being proven on multiple other engagements. And finally in product, again that the supplier has not only done what the standard requires but goes beyond to simplify the SoC team’s configuration and safety analysis through templates for failure modes and effects and fault distribution guidance.

Food for thought. It seems like some ISO 26262 investments need future-proofing. If you want to read more, check out this link.

Functional Safety is a Driving Topic for ISO 26262

When I was young, functional safety for automobiles consisted of checking tread depth and replacing belts and hoses before long trips. I’ll confess that this was a long time ago. Even not that long ago, though, the only way you found out about failing systems was going to the mechanic and having them hook up a reader to the OBD port. Or, worse, you found out when the car stopped running or a warning light came on. A lot has changed with the advent of ISO 26262, which defines the standard for automotive electronic system safety.

The most critical systems, which are designated as Automotive Safety Integrity Level (ASIL) D, have many requirements imposed on them to ensure high reliability. This applies to systems where a failure could lead to death or serious injury, e.g. ADAS systems. It is easy to understand that these systems must be carefully designed and documented. While this is true, the safety requirements extend into ensuring that these systems can self-check at startup and also continuously monitor their own health. In fact, ISO 26262 requires that every block in these systems must run a self-test every 100 milliseconds during operation. This can include fault injection too. In effect, it is now necessary to “check the checkers”.

Even the scope of the functional safety self-monitoring tests is impressive. For instance, in the case of memories, in addition to requiring ECC on data, addresses are also protected by ECC. SOC designers for automotive applications are now faced not only with building the system they have specified, but also with building in extensive new on-chip functionality to ensure functional safety that meets the ISO 26262 standard. This process is somewhat familiar to designers, who have been adding BIST and Scan test functionality into their designs for decades.

Fortunately, similar to the model for BIST and Scan, there are commercial IP-based solutions and tool chains that address the needs arising from these dramatic changes in system testing. Synopsys has long been a player in both the IP and test markets. It is only natural that they extend and adapt these offerings to create a combined and comprehensive solution. They have done the work to make their solution ISO 26262 ASIL-B through ASIL-D ready.

There is a very informative video presentation that gives an overview and then goes into detail on the Synopsys functional safety offerings along with specific customer experience from Bosch in the application of STAR Memory System (SMS) and STAR Hierarchical System (SHS). The presenters are Yervant Zorian, Synopsys Fellow & Chief Architect, and Christophe Eychenne, Bosch DFT Engineer. In the first half, the very knowledgeable Yervant covers the requirements of ISO 26262 systems and then outlines the offering from Synopsys. In the second part Christophe goes through a case study from Bosch.

Here are a few of the interesting things I learned from this video. In the case of memories, each memory is given a wrapper that can perform testing and then implement memory repair. The repair information is saved and reloaded at startup. However, an additional test is performed at every start to fully check each memory. This helps manage aging in memories. Soft error correction in memories is complicated in newer process nodes because of the increased likelihood of multi-bit errors. The SMS is aware of memory internal structure and this helps in error detection and correction.

The SHS wraps interface and AMS blocks, and connects to a sub-server using IEEE 1500. The wrapped memories are tied together using the SMS processor, and all of these elements and the digital IPs with DFT scan are then connected to a server which offers the traditional TAP interface. In addition, there are external smart pins that can be used to quickly and easily initiate tests without needing a TAP interface controller. As an added bonus, this entire system can be used to facilitate silicon bring-up with the Synopsys Silicon Browser.

Back when we were changing hoses and belts for long trips, Synopsys was just an EDA tools company. But, like I said, that was a long time ago. Synopsys has evolved and developed impressive and sophisticated offerings in IP, which now includes many of the essential elements to build SOCs and systems for safety critical automotive systems. The video presentation is available on the Synopsys website, if you would like to get the full story behind their latest work in this area.

Webinar: RISC-V IoT Security IP

Disruptive technology and disruptive business models are the lifeblood of the semiconductor industry. My first disruptive experience was with Artisan Components in 1998. The semiconductor industry started cutting IP groups, which resulted in a bubble of start-up IP companies including Artisan, Virage Logic, Aspec Technologies, and Duet Technologies. Back then we were getting hundreds of thousands of dollars for a library when all of a sudden Artisan introduced a free, royalty-based model backed by the foundries. Artisan was later purchased by ARM for $900M and Virage was purchased by Synopsys for $350M, while Aspec, Duet and dozens of others went out of business.

I see the same opportunity with RISC-V. I see disruption coming to the CPU IP market. The challenge is the ecosystem and that’s where crowdsourcing comes into play.

RISC-V (pronounced “risk-five”) is a free and open ISA enabling a new era of processor innovation through open standard collaboration. Founded in 2015, the RISC-V Foundation comprises more than 100 member organizations building the first open, collaborative community of software and hardware innovators powering innovation at the edge forward. Born in academia and research, the RISC-V ISA delivers a new level of free, extensible software and hardware freedom on architecture, paving the way for the next 50 years of computing design and innovation.

IoT is one of the biggest markets for RISC-V and security is one of the biggest challenges facing IoT which brings us to the upcoming Intrinsix IoT Security Webinar:

- a brief look at the market for IoT security IP

- component functions of an IoT system-on-chip (SoC)

- hardware acceleration for IoT devices

- secure boot of an IoT device

- benefits of hardware acceleration for IoT security

- use of a dedicated RISC-V security boot processor

- key components of an easy-to-deploy NSA Suite-B IoT security IP solution

About Intrinsix Corp.

Intrinsix Corp., headquartered in Marlborough, MA USA, is a design services company for advanced semiconductors with clients around the globe. The company has been servicing the needs of electronic product and semiconductor companies for more than 30 years. Intrinsix has the platforms, process and people to ensure first-turn success for industry-leading semiconductors.

IoT security primers:

Jump Start your Secure IoT Design with Intrinsix

by Mitch Heins Published on 12-05-2017 11:00 AM

Have you ever had a great idea for a new product but then stopped short of following through on it because of the complexities involved to implement it? It’s frustrating to say the least. It is especially frustrating, when the crux of your idea is simple, but complexity arises from required components that don’t add to the functionality of the design. Enter the world of an internet-of-things (IoT) device.

IoT Security Hardware Accelerators Go to the Edge

by Mitch Heins Published on 10-23-2017 10:00 AM

Last month I did an article about Intrinsix and their Ultra-Low Power Security IP for the Internet-of-Things (IoT). As a follow up to that article, I was told by one of my colleagues that the article didn’t make sense to him. The sticking point for him, and perhaps others (and that’s why I’m writing this article) is that he couldn’t see why you would want hardware acceleration for security in IoT edge devices. He wasn’t arguing the need for security. He was simply asking why you would spend the extra hardware area in a cost-sensitive device when you could just use the processor you already have in the device to do the work in software.

Intrinsix Fields Ultra-Low Power Security IP for the IoT Market

by Mitch Heins Published on 09-21-2017 05:00 PM

As the Internet-of-Things (IoT) market continues to grow, the industry is coming to grips with the need to secure their IoT systems across the entire spectrum of IoT devices (edge, gateway, and cloud). One need only look back to the 2016 distributed denial-of-service (DDoS) attacks that caused internet outages for major portions of North America and Europe to realize how vulnerable the internet is to such attacks. Perpetrators, in that case, used tens of millions of addressable IoT devices to bombard Dyn, a DNS provider, with DNS lookup requests. Analysts predict that by the year 2020, there will be over 212 billion sensor-enabled objects available to be connected to the internet. That’s about 28 objects for each person on the planet. While the opportunity for disaster seems obvious, the opportunity to make a lot of money on IoT is even bigger, so the industry needs to urgently address the problem. How can you make your IoT SoC devices secure?

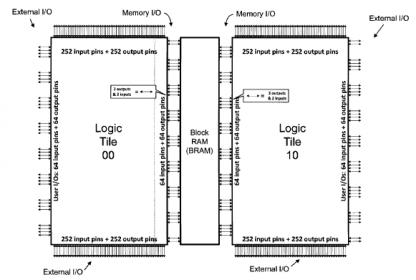

Block RAM integration for an Embedded FPGA

The upcoming Design Automation Conference in San Francisco includes a very interesting session, “Has the Time for Embedded FPGA Come at Last?” Periodically, I’ve been having coffee with the team at Flex Logix, to get their perspective on this very question – specifically, to learn about the key features that customers are seeking to accelerate eFPGA IP adoption. At our recent kaffeeklatsch, Geoff Tate, CEO, and Cheng Wang, Senior VP Engineering, talked about a critical requirement that their customers have.

Geoff said, “Many of our customers are current users of commodity FPGA modules seeking to transfer existing designs into eFPGA technology, to leverage the PPA and cost benefits of SoC integration. One of the constituent elements of these designs is the use of the ‘Block RAM’ memory incorporated into the commercial FPGA part. We had to develop an effective, incremental method to incorporate comparable internal RAM capabilities within the embedded FPGA IP. Flexibility is paramount, as well – we need to be able to accommodate different array types and configurations, with a minimal amount of our engineering team resources.”

Figure 1. Illustration of the integration of memory array blocks in a vertical channel between eFPGA tiles.

Cheng added, “Recall that the fundamental building block of our IP is the tile. eFPGA designers apply our tools to configure their IP as an array of tiles.” (Here’s a link to a previous article.)

“The edge of the tile is developed to provide an appropriate mix of inter-tile connections, balanced clock tree buffering, and the drivers and receivers for primary I/O ports on perimeter tile edges. To accommodate the customer need for memory arrays, we’ve developed an internal methodology to space the normally abutting tiles and introduce banks of memory blocks,” Cheng continued.

The unused drivers/receivers at the interior tile edges provide the necessary interface circuits to the memory block, driving the loads of the memory inputs and interfacing the memory outputs back to the eFPGA switch fabric. A portion of the clock buffering and balancing resources at each tile edge is directed to the clock inputs of the array.

“Are the internal eFPGA memory blocks constrained to match the Block RAM in a commercial part?” I asked.

“Not at all,” Geoff replied. “We have customers interested in a wide variety of array size and port configurations. And, they are extremely cost-conscious. For example, they do not want the overhead of a general, dual-port memory configuration for their single-port designs.”

“To support this flexibility, we had to enhance our compiler,” Cheng added. “The netlist output of Synplify is targeted toward the Block RAM resources of the commercial parts. When reading that netlist into the eFLX compiler, we re-map and optimize the Block RAM instances into the specific memory block configuration of the eFPGA.”
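To make the re-mapping idea concrete, here is a minimal sketch of one generic way a logical RAM can be tiled onto smaller physical memory blocks. This is not the eFLX compiler's actual algorithm, which was not described in our discussion; the `RamShape` type, the `blocks_needed` function, and the example sizes are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RamShape:
    """Shape of a single-port RAM (hypothetical helper type)."""
    depth: int  # number of words
    width: int  # bits per word


def blocks_needed(logical: RamShape, physical: RamShape) -> tuple[int, int]:
    """Return (rows, cols) of physical blocks needed to cover a logical RAM.

    Rows stack blocks to reach the required depth; columns place blocks
    side by side to reach the required word width. Integer ceiling
    division avoids floating-point rounding.
    """
    rows = -(-logical.depth // physical.depth)
    cols = -(-logical.width // physical.width)
    return rows, cols


# Illustrative sizes only: a 2048x36 logical RAM built from 1024x18 blocks.
rows, cols = blocks_needed(RamShape(2048, 36), RamShape(1024, 18))
print(f"{rows} x {cols} = {rows * cols} physical blocks")
```

A real flow would also have to weigh port count, timing, and wasted bits when choosing among the available block configurations, which is presumably where the optimization Cheng mentions comes in.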

“What are the signal interconnect design requirements over the memory block?” I asked.

Cheng answered, “We anticipate the memory arrays in the rows and columns between tiles will be fully blocked up through M4. Our inter-tile connections to the switch fabric are above M4. The power grid design readily extends to support the memory block, as well.”

Figure 2. A recent testsite design tapeout with embedded array blocks between tiles.

Cheng summarized the discussion well. “We have developed a methodology to allow our engineering team to develop an eFPGA design addressing customer requirements for memory blocks, with an incremental amount of internal resources. We are leveraging the tile-based architecture and the tile edge circuitry to simplify the integration task. Our engineering team does the electrical analysis of the final implementation. The customer utilizes the existing eFLX compiler, reading in the additional SDF timing library released for the internal memory array blocks.”

Geoff added, “This interface and implementation method would support the integration of a general macro between tiles, within the overall LUT and switch architecture of the eFPGA.”

So, back to the DAC session question – has the time for eFPGA IP arrived? If designers have the flexibility to quickly and cost-effectively integrate additional hard IP within the programmable logic of the eFPGA, without significant changes to the design flow, that’s pretty significant.

I’m looking forward to the DAC session, to see if others concur that the time is indeed here.

For more information on the Flex Logix RAMLinx design implementation for embedded memory blocks, please follow this link.

-chipguy

Semiconductor, EDA Industries Maturing? Wally Disagrees

Wally Rhines (President and CEO of Mentor, A Siemens Business) has been pushing a contrarian view versus the conventional wisdom that the semiconductor business, and by extension EDA, is slowing down. He pitched this at DVCon and more recently at U2U where I got to hear the pitch and talk to him afterwards.

What causes maturing is saturation – the available market that can be divided among providers doesn’t have much room to grow, or rather will grow only relatively slowly. That triggers consolidation – you eat your competitors, they eat you, or you shrivel up and die because you’re too small to compete. The big guys continue to make money through efficiencies of scale, aka laying off a bunch of people in duplicated functions after a merger. So for some (eg Mark Edelstone at Morgan Stanley in late 2015), the wave of consolidations in semiconductors pointed to a maturing industry.

But Wally says that’s an over-simplified analysis of what’s really happening, and he has a lot of data to back up that viewpoint. Obviously there has been a lot of consolidation, but the best of it has not been winner-take-all across the market but rather winner-take-all in very targeted segments, which he calls specialization rather than consolidation. He cites as examples TI, who famously pivoted from wireless/cellular, automotive, ASIC plus analog to a laser focus on analog, and NXP, who have specialized in Automotive and Security. Another example he offers is Avago/LSI/Broadcom, now concentrated in wired and wireless infrastructure and enterprise datacenter (especially storage). What’s notable in each of these cases is that profitability has steadily climbed, for TI and Broadcom to ~40% and for NXP to ~20% (each in operating margin as a % of revenue). Contrast this with Intel, which has acquired and divested many companies over the years and whose earnings as a % of revenue have remained more or less flat.

Wally took this further. He showed that there is essentially no correlation between operating margin and revenue; in other words, the economies of scale argument doesn’t fly (outside of a few cases – analog, RF, power and memory). But suppose the semis were trying it anyway; would they cut back on R&D? Again, the data doesn’t support that view. R&D spending in the semiconductor industry has remained pretty flat at 14% of revenue over 35 years. Moreover, the cost per transistor shipped continues to drop at 33% per year. Wally said this isn’t really a Moore’s Law effect (though of course process improvements have contributed and continue to contribute). Innovation and demand are driving this curve. So much for the smokestack view of semiconductors; the data simply doesn’t support this being a maturing market.
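To get a feel for what a 33% annual drop means when compounded, here is a quick back-of-the-envelope sketch. It assumes the decline rate is constant from year to year, which is my simplification rather than something Wally claimed; the function names are mine.

```python
import math

ANNUAL_DECLINE = 0.33  # cost-per-transistor decline per year, as quoted in the talk


def relative_cost(years: float, decline: float = ANNUAL_DECLINE) -> float:
    """Cost per transistor after `years`, relative to 1.0 today.

    Assumes a constant compound decline (an illustrative simplification).
    """
    return (1.0 - decline) ** years


def halving_time(decline: float = ANNUAL_DECLINE) -> float:
    """Years for the cost per transistor to fall by half at a constant rate."""
    return math.log(0.5) / math.log(1.0 - decline)


print(f"Relative cost after 10 years: {relative_cost(10):.4f}")
print(f"Halving time: {halving_time():.2f} years")
```

Under that constant-rate assumption, the cost per transistor halves roughly every 1.7 years and falls to under 2% of its starting value within a decade.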

He also talked about top players in the market and new entrants, noting that combined market share of the top N (pick a number) companies in the industry has remained at best flat, which means there are smaller companies and new entrants grabbing some of the pie. From a western perspective we haven’t seen much VC activity around semiconductors but the picture in China is quite different; over $100B in the 5-year plan, originally concentrated in foundry investment but now increasingly shifting to fabless investments (up to 1500 fabless startups recently). OK, so maybe that doesn’t help us in the west, but we’re seeing, in the west and the east, new systems level entrants. Google, Amazon and Facebook are all building their own devices; not big volumes in some cases, but smart speakers and home automation are likely to change that. Bosch, a well-known Tier1 provider in the auto business, has opened a $1B fab. Who can afford to do this in 7nm? Not a lot of designs have to be that aggressive and for those that do, the FAANG group have deep, deep pockets.

What does this mean for EDA? Wally said in his talk that a lot more of the innovation drive is coming from system companies. This was also true in the early days of EDA; Mentor, for example, got a healthy share of its business from aerospace and the DoD. In fact, while we tend to think in very SoC-centric terms, 70% of the development effort (per Wally) in building a complete system is at the system level, not in the IC. At the board, in the enclosure and across a vehicle you have to consider power integrity, thermal and mechanical stresses, EM interference, wiring harness design, etc, etc. You’ve heard of digital twins – this is where the Tier1s and OEMs are trying to reduce costs and iterations in getting all of this right before they build physical prototypes. He also pointed out something I have been hearing more recently. The Tier1s/OEMs are looking for more opportunities to differentiate through closer connections in design from modules down to die, again looking for analytics and optimization spanning this range.

Which to Wally means that the opportunities for innovation in EDA are richest at this full system integration/analysis level. That presents new challenges because verification complexity rises rapidly with the size of the system. He feels a lot of the innovation here is likely to come from the big EDA companies working with their customers, simply because of the complexity of the problem; it is difficult for a new entrant to make a dent in problems of this scale with point tools. However there could be opportunities in new capabilities in safety and security, also around new device physics/multi-physics around thermal and other system-level analysis domains. Machine learning applications are definitely interesting (qv Mentor’s Solido acquisition) – anything that can reduce analysis time and/or improve optimization.

My takeaway from all of this:

- Semiconductor isn’t maturing, it’s evolving to support new applications and markets

- Semiconductor can only mature if we lose interest in innovation in general. No matter what technology is hot (neuromorphic, DNA computing, nanobots, quantum, …), we will still need to compute, communicate, manage/generate power, … How else are we going to do that?

- EDA as always will follow semis and will equally evolve. But we EDA types need to follow the puck. The real money will not be in polishing the same old domains.