During the SoC Design Session at the just-concluded Linley Spring Processor Conference in Santa Clara, Carlos Macian, Senior Director AI Strategy and Products at eSilicon, gave a talk entitled ‘Opposites Attract: Customizing and Standardizing IP Platforms for ASIC Differentiation’.

Standardization is key to IP in modern systems-on-chip (SoCs), yet without customization a huge amount (of revenue, performance, area optimization) is left on the table. The spectrum from standard to custom IP starts with common functions that become standard IP, which can evolve into an IP platform, then into what eSilicon terms an ASIC chassis, and finally into customized IP.

A recent customer design, a machine learning ASIC, included a large amount of IP: 400Mb of embedded SRAM, 48 lanes of 28G SerDes, PCIe SerDes, an HBM2 PHY, custom and compiled memories, PLLs, eFuse, analog blocks and PVT monitors, to name a few. Standard IP is the opposite of your special secret sauce, but it is critical to your schedule, cost and efficiency. In a typical 7nm data center chip these days, 40-50% of the area and power of the ASIC is related to the IP, and 30-50% of the unit cost depends on the IP, before even accounting for royalties. In addition, 30-50% of the NRE development cost is IP-specific NRE, which is the single highest cost after the mask tooling for 7nm. It is also more expensive than the total design labor cost from RTL to tape-out.

On top of that comes the effort needed for IP integration, from test and bring-up to integration, verification, and the interaction with IP providers. The IP may not be as critical as your secret sauce, but it needs to be as cost efficient, as easy to integrate and test, and as power/performance/area efficient as you can possibly get. When IP is used, the number one goal is to reduce or eliminate the development and integration effort for the parts of the design that are not critical.

How do standards help? Common interfaces and shared functionality help interconnectivity, allowing easier integration and fewer interoperability disconnects. Deliverables in standardized form also simplify the tasks. Beyond standardized IP, platforms harmonize various IPs so that they work well together for a particular node or market niche. Verification is always needed, but for certain applications there is commonality in the desired functionality and deployment, and templates can greatly facilitate deployment and implementation, saving cost and time. Standard IPs, by definition, include overhead: not all use cases need the same functional features, so a common-denominator design adds circuitry to cover all bases. Customizing to the features actually needed can therefore save a lot of power and real estate. Trimming unneeded memory is one example of customization that pays dividends.
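The memory-trimming point can be made concrete with a little arithmetic. The sketch below is purely illustrative: the instance names, depths and widths are hypothetical numbers chosen for the example, not figures from the talk, and it counts only raw bit capacity (real area and power savings also depend on the memory compiler, periphery and process).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SramInstance:
    """One memory inside a standard IP block (all values hypothetical)."""
    name: str
    words: int       # depth provisioned by the standard IP
    bits: int        # word width in bits
    words_used: int  # depth the target design actually needs (assumed known)

    @property
    def provisioned_bits(self) -> int:
        return self.words * self.bits

    @property
    def required_bits(self) -> int:
        return self.words_used * self.bits


def trimming_savings(instances):
    """Return (bits saved, fraction of provisioned bits saved) after trimming."""
    provisioned = sum(i.provisioned_bits for i in instances)
    required = sum(i.required_bits for i in instances)
    saved = provisioned - required
    fraction = saved / provisioned if provisioned else 0.0
    return saved, fraction


# Hypothetical memories in a "cover all bases" IP configuration.
instances = [
    SramInstance("rx_buffer", words=4096, bits=64, words_used=1024),
    SramInstance("tx_buffer", words=4096, bits=64, words_used=4096),
    SramInstance("stats_ram", words=2048, bits=32, words_used=512),
]

saved_bits, fraction = trimming_savings(instances)
print(f"bits saved by trimming: {saved_bits} ({fraction:.1%} of provisioned)")
```

With a compiled memory, a trimmed depth like this would simply be regenerated at the smaller size, which is why configurability and customization go hand in hand.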

Carlos Macian closed by stressing that IP matters. In summary, standard IP delivers standard, consistent results, while custom IP increases your market advantage, be it through features, performance, power or area. Though opposite in means, the two are synergistic: together they form the optimal design practice for timely market success at desirable customer value.

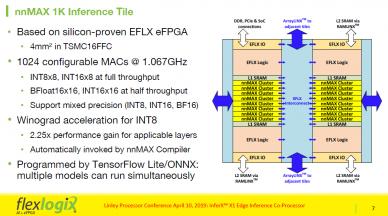

During the panel discussion at the end of the session, Carlos Macian was asked about eSilicon’s approach to pre-verification. The approach is to verify the RTL, then the netlist operation. The other dimension goes beyond standalone functionality: verifying the integration with the cores, which of course cannot be pre-verified. That is only possible towards the end, when the implementation is complete, and it is the responsibility of the customer. At all levels, however, the verification cycle is streamlined and more straightforward. Silicon-verified IP blocks are mandatory to increase confidence in the functionality. On the AI front, building blocks are provided to generate the AI tiles. The difference between inference and training affects the functionality you place in the AI tile, but it does not affect what is around it, such as the ASIC chassis. Asked about the RISC-V value proposition, he said eSilicon believes that RISC-V greatly facilitates integration, although other processors are also used in its solutions.

The neuASIC™ Platform provides compiled, hardened and verified 7nm functions, greatly simplifying design by providing fixed functions and streamlined data flow architectures. One reason for the hesitation to optimize every system to the last degree is that the field is evolving rapidly, and the semiconductor community at large is conservative and risk averse given the multimillion-dollar cost of advanced silicon nodes. At the same time, certain workloads are becoming more mature, better understood and more prevalent. As that happens, even while new workloads and new network models to address them are still being discovered, building optimized implementations that scale extremely well for a larger user base becomes more attractive. For the newer workloads, programmability plays a key role: modern accelerators provide it, for example through RISC-V processors, sitting next to hardware-optimized solutions.

As far as the deliverables are concerned, eSilicon supplies the standard list, from Verilog model to GDSII, along with test benches, integration guidelines, data sheets, timing constraints and silicon reports, since it is providing hard IP. The integrity of the overall solution is covered by eSilicon's ASIC heritage, which brings knowledge of where potential interoperability issues arise, and by ongoing communication with customers to make sure their functionality is assured as far as possible. Best-in-class IP is no longer enough: what is needed is compatible IP and an architecture that allows the IP blocks to work together. Programmability and configurability, such as compilable memory, provide customization. Configurability is also available in the SerDes, with parameterized control in the transmit and receive channels.

IP integration in the ASIC matters and IP customization matters. In order to differentiate your product, you need to take advantage of those two aspects and bring out the value in both dimensions.