Innovation in verification never ends, whether in performance, coverage, connection to verification plans or other aspects of DV. But debug, which accounts for 40% of the verification cycle, has remained stubbornly resistant to significant automation. Debug IDEs help to visualize but don’t address the core problem: given a failure, what is the most likely root cause for that failure? Fault localization, the common name for this objective, is still more of an art than a science. Some progress has been made through spectrum-based analytics, which cross test pass/fail results with code coverage to score how “suspicious” each piece of code is with respect to the failures. These methods help but still provide rather coarse-grained localization. The problem is that fine-grained debug generally requires a chain of reasoning, complemented by experiments, to effectively isolate a root cause. That makes it a perfect candidate for agentic methods, as ChipAgents is now demonstrating.
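To make “suspiciousness” concrete, here is a minimal Python sketch of spectrum-based ranking. It assumes a per-test coverage map (test name to the set of code elements it exercised) and a set of failing tests, and it uses the Ochiai formula, one common choice from the software fault-localization literature; I don’t know which formula, if any, particular debug tools use. The test and block names are hypothetical.

```python
import math
from typing import Dict, List, Set, Tuple

# Illustrative sketch only: Ochiai is one common suspiciousness formula,
# not necessarily what any particular debug tool uses.


def ochiai_ranking(coverage: Dict[str, Set[str]], failed: Set[str]) -> List[Tuple[str, float]]:
    """Rank code elements by Ochiai suspiciousness, most suspicious first.

    coverage maps each test name to the set of code elements it exercised;
    failed holds the names of the failing tests.
    """
    total_failed = len(failed)
    scores: Dict[str, float] = {}
    for elem in set().union(*coverage.values()):
        # ef: failing tests that cover elem; ep: passing tests that cover elem
        ef = sum(1 for t, cov in coverage.items() if elem in cov and t in failed)
        ep = sum(1 for t, cov in coverage.items() if elem in cov and t not in failed)
        # Ochiai: ef / sqrt(total_failed * (ef + ep))
        denom = math.sqrt(total_failed * (ef + ep))
        scores[elem] = ef / denom if denom else 0.0
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical example: three tests over four RTL blocks; t1 and t3 fail.
coverage = {
    "t1": {"fifo_wr", "fifo_rd", "arb"},
    "t2": {"fifo_wr", "arb"},
    "t3": {"fifo_wr", "fifo_rd", "crc"},
}
for elem, score in ochiai_ranking(coverage, failed={"t1", "t3"}):
    print(f"{elem:8s} {score:.3f}")
```

Here fifo_rd ranks first with a score of 1.0 because both failing tests touch it and the passing test does not. Note what the score cannot tell you: fifo_wr still ranks high simply because every test exercises it. That is the coarse granularity the reasoning-plus-experiments approach aims to get past.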

The nature of debug and why agents are promising

Debugging hardware or software is hard because the connection between an observed failure and its root cause is rarely obvious. The two may be widely separated in space, in functions or modules that appear unrelated, or widely separated in time, with a root cause planting a trap that is only sprung millions of cycles later. New bugs can appear as a design evolves, introduced by seemingly harmless fixes to other bugs, and problems thought to be resolved can suddenly reappear with no indication why.

Given this reality, debug becomes an iterative trial-and-error process, guided, certainly, by strong IDEs that visualize waveforms, cross-probe with code, and so on. But the real intelligence in finding a root cause still depends heavily on the insight of DV and design engineers and on the experiments they run to test their guesses. This discovery process is fundamental to the debug task. Bugs may have more than one root cause or may result from some latent behavior not considered in spec development or test planning. This is why debug consumes so much time and so many resources in the verification cycle.

At DAC 2025 I talked to Kexun Zhang and Brian Li (both at ChipAgents) to understand their agent-based approach to automating debug. I was impressed. Their approach to debug is entirely logical: aware of state-of-the-art techniques like spectrum analysis, while building on LLM and agent-based methods to advance beyond first-order localization. This looks like a real step forward in debug automation, managing discovery and allowing for expert guidance in the reasoning flow, or full autonomy if you wish.

ChipAgents applied to debug

I’ll start with what these methods can do for debug, then turn to methods and training considerations. I saw a brief demo at the ChipAgents booth and wish I could have seen more, though I am usually not a fan of demos. In this case the product consumes a waveform file and a simulation log and then lets you ask natural-language questions, just like a chatbot.

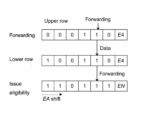

In the demo, an engineer typed in something like “X worked correctly on the first pass but not on the second pass. Tell me why.” “X” was a relatively high-level behavior. This prompt launched an initial analysis, narrowing down first-level candidate behaviors in the waveforms that might explain the difference. After this initial analysis, the tool offered the expert the option to refine the prompt or to let the tool continue refining those initial localizations itself. In these subsequent steps it might run one or more simulations, possibly with modifications to the code (in a new branch), to test hypotheses.
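The demo didn’t reveal how that first-level narrowing works internally. As a purely hypothetical illustration of one simple heuristic for the “worked on the first pass but not the second” question, this Python sketch compares per-cycle signal values captured from two passes and ranks the signals that differ by the cycle at which they first diverge. The trace format and signal names are my assumptions, not ChipAgents’ data model.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical heuristic for illustration; not how ChipAgents' tool works internally.
# Assumed trace format: signal name -> sampled value per cycle,
# with cycle 0 aligned to the start of each pass.
Trace = Dict[str, List[int]]


def first_divergence(a: List[int], b: List[int]) -> Optional[int]:
    """Return the first cycle offset where two value sequences differ, or None."""
    for cycle, (va, vb) in enumerate(zip(a, b)):
        if va != vb:
            return cycle
    if len(a) != len(b):
        # One pass was sampled for longer; treat the extra cycles as a difference.
        return min(len(a), len(b))
    return None


def rank_divergent_signals(pass1: Trace, pass2: Trace) -> List[Tuple[str, int]]:
    """List signals present in both passes that differ, earliest divergence first."""
    ranked = []
    for name in sorted(pass1.keys() & pass2.keys()):
        cycle = first_divergence(pass1[name], pass2[name])
        if cycle is not None:
            ranked.append((name, cycle))
    # Earlier divergence is only a weak hint of being closer to the cause.
    ranked.sort(key=lambda item: (item[1], item[0]))
    return ranked


# Hypothetical traces from the first (good) and second (bad) pass.
pass1 = {"req": [0, 1, 1, 0], "gnt": [0, 0, 1, 0], "credit": [2, 2, 1, 2]}
pass2 = {"req": [0, 1, 1, 0], "gnt": [0, 0, 0, 0], "credit": [2, 1, 1, 1]}
print(rank_divergent_signals(pass1, pass2))
```

In this toy case credit diverges at cycle 1, one cycle before gnt does, which points toward the credit logic rather than the arbiter. A heuristic like this only produces candidates. Confirming or rejecting them is where the follow-up simulations and reasoning come in.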

This process repeats until the tool has isolated one or several final candidates. Then it’s up to the expert to decide whether each is an RTL bug, a test plan bug or a spec bug. Or possibly not a bug but a feature!

Learning and agent methods

Kexun stressed that out-of-the-box chat models do not work well in this domain. At ChipAgents they have put significant work into training the system to understand the chip domain much more accurately than a generalist chat model could. They guided training using a combination of synthetic and human-annotated data, which could be an important moat against anyone with plans to copy their approach😀 They have also built tools to parse through giant simulation dumps and log files, another must-have you wouldn’t get from a general bot. Agents work with these tools in their analysis and traceback.
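ChipAgents didn’t describe how their parsing tools work. As a hypothetical illustration of the basic requirement, streaming through a dump rather than loading it all into memory, here is a minimal Python reader for the text VCD format (defined in IEEE 1364) that lazily yields the value changes of one named signal. It simplifies by matching signals on reference name alone, ignoring scope, and it does not handle every corner of the format.

```python
import io
from typing import Iterator, List, TextIO, Tuple

# Hypothetical illustration only; not ChipAgents' parser.
# Keywords whose bodies contain value changes rather than declarations.
_VALUE_BLOCKS = {"$dumpvars", "$dumpall", "$dumpon", "$dumpoff", "$end"}


def _tokens(stream: TextIO) -> Iterator[str]:
    """Yield whitespace-separated tokens one line at a time (never loads the whole file)."""
    for line in stream:
        yield from line.split()


def _read_until_end(toks: Iterator[str]) -> List[str]:
    """Collect tokens up to (not including) the next $end."""
    body = []
    for tok in toks:
        if tok == "$end":
            break
        body.append(tok)
    return body


def signal_changes(stream: TextIO, signal: str) -> Iterator[Tuple[int, str]]:
    """Lazily yield (time, value) for every change of `signal` in a VCD stream.

    Simplification: signals are matched by reference name only, ignoring scope.
    """
    toks = _tokens(stream)
    ids = set()  # VCD identifier codes that refer to `signal`
    time = 0
    for tok in toks:
        if tok == "$var":
            # $var <type> <size> <id_code> <reference> [<bit range>] $end
            decl = _read_until_end(toks)
            if len(decl) >= 4 and decl[3] == signal:
                ids.add(decl[2])
        elif tok.startswith("$"):
            if tok not in _VALUE_BLOCKS:
                _read_until_end(toks)  # skip $scope, $timescale, $comment, ...
        elif tok.startswith("#"):
            time = int(tok[1:])
        elif tok[0] in "bBrR":
            ident = next(toks, None)  # vector/real values are followed by an id code
            if ident in ids:
                yield time, tok[1:]
        elif tok[0] in "01xXzZ" and tok[1:] in ids:
            yield time, tok[0]  # scalar change: value and id code are fused


vcd = io.StringIO('''$timescale 1ns $end
$scope module top $end
$var wire 1 ! clk $end
$var wire 4 " count [3:0] $end
$upscope $end
$enddefinitions $end
$dumpvars
0!
b0000 "
$end
#5
1!
#10
0!
b0001 "
''')
print(list(signal_changes(vcd, "count")))
```

Because the reader is a generator fed line by line, memory use stays flat regardless of dump size. A production tool would also need scope-aware names, indexing for random access by time, and support for binary formats, none of which this sketch attempts.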

On localization methods, Kexun made an interesting observation. He said that in earlier agentic approaches to debug in software engineering, agents also used spectrum-based methods, and those also proved roughly accurate (20-30% localization). But as models have grown stronger, agents are becoming simpler and no longer use spectrum methods explicitly. He added that whether this will also hold for hardware debug is still being debated within the company. I find it intriguing that a combination of simpler agents might become a more powerful approach.

We talked briefly about other sources for analysis, such as check-ins, specs and testplans. All of these are being worked on, though the existing debug capability is attracting significant attention from active design teams, an appeal I find totally understandable.

Other development

Brian touched on a few other areas of development: verification plan generation, driven from specifications running to hundreds or thousands of pages; checking for disconnects between a spec and an existing test plan; code generation along the lines of tools like Copilot; and bug triaging, another interesting area where I would like to see whether they can add new value over and above the automation available today.

Overall, a very promising direction. You can learn more on the ChipAgents website.
