Call me Ishmael. Some years ago, in the mid-1990s, having little or no money in my purse and nothing particular to interest me on shore, I thought I would sail the startup ship Cyrix and see the watery part of the PC world. Whenever I find myself growing grim about the mouth, pausing before coffin warehouses, and bringing up the rear of every funeral I meet, I think back to the last words that came from Captain Ahab before the great Moby Dick took him under: “Boy, tell them to build me a bigger boat!”

You see, the great Moby Dick is not just any whale; it is the $55B great white sperm whale that has been harpooned many times and has taken many a Captain Ahab to the bottom of the ocean. It still lives out there, unassailable, despite the ramblings of the many new, shiny ARM boats docked on Nantucket Island, a favorite vacation spot of mine from my youth.

Perhaps a great whaling ship could be constructed out of the battered wood and sails of the H.M.S. nVidia and the H.M.S. AMD, because the alternative is that they go down separately. Patience wears thin at ATIC (Advanced Technology Investment Company), the Abu Dhabi investment firm that has poured billions of dollars into GlobalFoundries and AMD in the hope of being the long-term survivor in the increasingly costly Semiconductor Wars. To be successful, GlobalFoundries needs a fab driver larger than what nVidia and AMD represent separately.

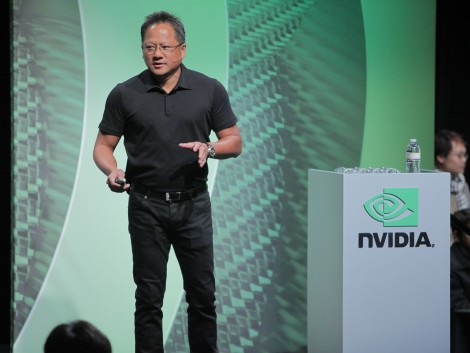

Jen Hsun Huang is the most successful CEO ever to challenge Intel in the PC ecosystem, and yet he is not strong enough to overcome the Moore’s Law steamroller that naturally seeks to integrate all the functions of a PC into one chip. Both AMD and Intel have integrated chipsets and “good enough” graphics into their CPUs, thus limiting his leading revenue generator. He made a strategic move with Tegra to get out in front on the more mobile platforms, smartphones and tablets, but they may not ramp fast enough to allow him to make it to the other side of the chasm.

AMD has pursued Intel forever but is now without a leader who can stop the carnage of a strategy that seeks to be Intel’s me-too kid brother. It bleeds with every CPU sold into the sub-$500 market. Lately, Intel has been on allocation, which has given AMD a profitable reprieve, but don’t count on it lasting forever, as Intel eventually moves to the next node and adds more capacity.

There are huge short-term and long-term benefits should Jen Hsun decide to merge with AMD. In the short term, nVidia and AMD are in a graphics price war in which the AMD sales guy tells the purchasing exec, “Whatever nVidia bids, mark me down for 10% less, and see you at the golf links at 4 o’clock.” nVidia has lost key sockets in Apple’s product line as well as at other vendors. Merging with AMD raises revenue and earnings in an instant. The merged company would eliminate the duplicate graphics and operations groups.

Next, nVidia could implement the ARM+x86 multicore product strategy for the ultrabook market that I outlined in “Will AMD Crash Intel’s $300M Ultrabook Party?” The market offers high growth, ASPs, and margins, and it is a close cousin of the tablet, which nVidia is already targeting with Tegra.

Third, nVidia has gained traction in the High Performance Computing (HPC) market with Tesla. But don’t confuse HPC with data center servers. The data center runs x86 all the time. Intel has a $10B+ business there, going to $20B in the next 3 years. It is raising prices at will with no competition in sight. nVidia and AMD could team up to offer customers an alternative platform with performance and power tradeoffs between x86 and Tesla.

The icing on the cake is that this can all be financed by ATIC. Back in January, when Dirk Meyer was let go as CEO of AMD and the stock was at $9, I speculated to a semiconductor analyst that AMD would be bought when it went under $5. Why $5? It’s psychological. The wherewithal to do this is in ATIC’s hands, but they have little time to spare.

ATIC owns 15% of AMD and 87% of GlobalFoundries. Today nVidia is worth $8B and AMD is worth $4.2B. Combined, they would be worth significantly more than $12B because the graphics competition would end and the joint marketing and manufacturing operations would consolidate. It is logical for ATIC to take a 20% ownership stake in nVidia and finance the rest of the purchase in any number of ways. Back in the DRAM downturn of the 1980s, IBM bought a 20% stake in Intel to guarantee that Intel would be around until the 386 hit the market.

Now that the ECB and the Fed have lowered interest rates to 0% and have the printing presses running overtime, why wouldn’t ATIC finance the new H.M.S. Take-No-Prisoners?