Webinars have been a popular form of communication since even before SemiWiki existed and they are a mainstay in today’s fast-moving semiconductor ecosystem.

In the past, SemiWiki has assisted with more than a hundred webinars. Today SemiWiki can run a complete webinar from start to finish using the GoToWebinar software. SemiWiki… Read More

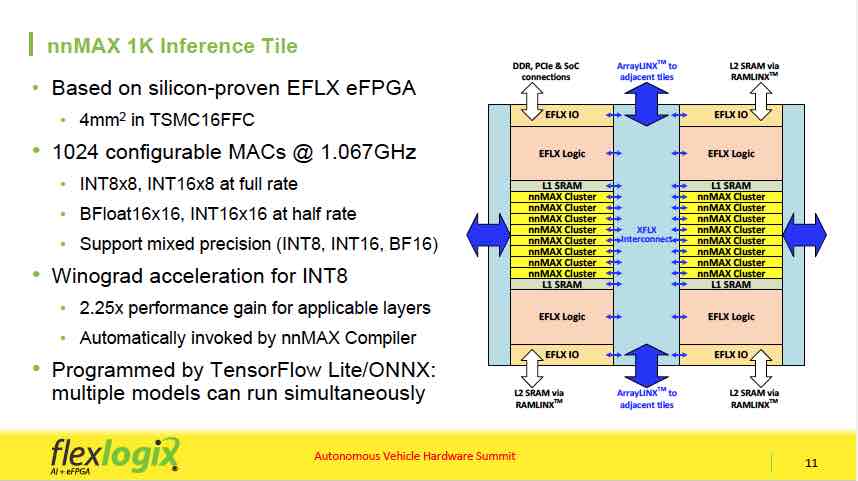

Dr. Cheng Wang, Co-Founder and SVP Engineering at Flex Logix, presented the second talk in the ‘AI at the Edge’ session at the just-concluded Linley Spring Processor Conference, highlighting the InferX X1 Inference Co-Processor’s high throughput, low cost, and low power. He opened by pointing out that existing inference solutions… Read More

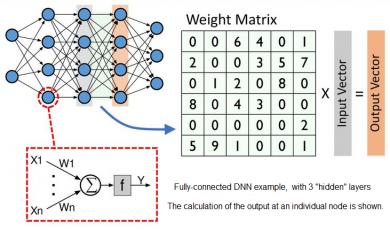

The basic principles used for neural networks have been understood for decades. What has changed to make them so successful in recent years is increased processing power, storage, and training data. Layered on top of this is continued improvement in algorithms, often enabled by dramatic hardware performance improvements.… Read More

AI at the Edge, by Tom Dillinger on 12-20-2018 at 7:00 am. Categories: AI, eFPGA, Flex Logix, IP

Frequent SemiWiki readers are well aware of the industry momentum behind machine learning applications. New opportunities are emerging at a rapid pace. High-level programming language semantics and compilers to capture and simulate neural network models have been developed to enhance developer productivity. Researchers… Read More

Machine learning applications in data centers (or “the cloud”) have pervasively changed our environment. Advances in speech recognition and natural language understanding have enabled personal assistants to augment our daily lifestyle. Image classification and object recognition techniques enrich our social media experience,… Read More

Avionics and Embedded FPGA IP, by Tom Dillinger on 10-15-2018 at 12:00 pm. Categories: eFPGA, Flex Logix, FPGA, IP

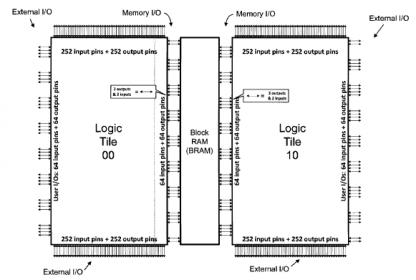

The design of electronic systems for aerospace applications shares many of the same constraints as apply to consumer products – e.g., cost (including NRE), power dissipation, size, time-to-market. Both market segments are driven to leverage the integration benefits of process scaling. … Read More

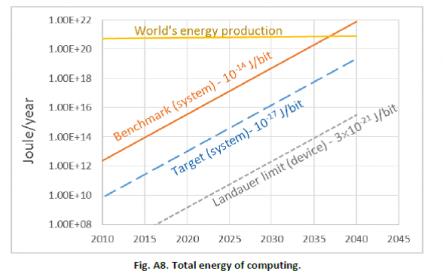

The traditional metrics for evaluating IP are performance, power, and area, commonly abbreviated as PPA. Viewed independently, PPA measures can be difficult to assess. As an example, design constraints that are purely based on performance, without concern for the associated power dissipation and circuit area, are increasingly… Read More

Machine Learning and Embedded FPGA IP, by Tom Dillinger on 07-18-2018 at 12:00 pm. Categories: eFPGA, Flex Logix, FPGA, IP

Machine learning-based applications have become prevalent across consumer, medical, and automotive markets. Still, the underlying architectures and implementations are evolving rapidly to best fit the throughput, latency, and power efficiency requirements of an ever-increasing application space. Although ML is … Read More

Design IP is doing well, with 12% YoY growth in 2017, even if the market is only about $3.5B. But Design IP serves a $400B semiconductor market. Can you imagine the future of the semi market if the chip makers couldn’t have access to Design IP? The same is true for EDA: it’s a niche market (CAE revenues were about $3B and IC Physical Design… Read More

The upcoming Design Automation Conference in San Francisco includes a very interesting session, “Has the Time for Embedded FPGA Come at Last?” Periodically, I’ve been having coffee with the team at Flex Logix to get their perspective on this very question and, specifically, to learn about the key features that customers are seeking… Read More