While I usually talk about AI inference on edge devices, for ADAS or the IoT, in this blog I want to talk about inference in the cloud or an on-premises datacenter (I’ll use “cloud” below as a shorthand to cover both possibilities). Inference throughput in the cloud is much higher today than at the edge. Think about support in financial…

Tag: cloud services

NetApp: Comprehensive Support for Moving Your EDA Flow to the Cloud

With this post, we welcome NetApp to the SemiWiki family. NetApp was founded in 1992 with a focus on data storage solutions. Its initial market segments were high-performance computing (HPC) and EDA, and its first customers were EDA and semiconductor companies. NetApp has become a primary force in on-premises data management for…

For EDA Users: The Cloud Should Not Be Just a Compute Farm

When EDA users first started considering using cloud services from Google, Amazon, Microsoft, and others, their initial focus was getting access for specific design functions, such as long logic or circuit simulation runs or long DRC runs, not necessarily for their entire design flow. If you choose to use the cloud this way, you…

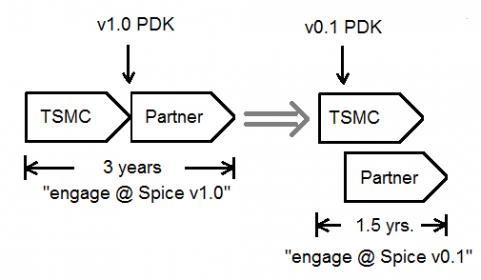

Top 10 Highlights from the TSMC Open Innovation Platform Ecosystem Forum

Each year, TSMC hosts two major events for customers – the Technology Symposium in the spring, and the Open Innovation Platform Ecosystem Forum in the fall. The Technology Symposium provides updates from TSMC on:

…

Mentor takes IoT devices to cloud and back

Walking into the Mentor Graphics booth at ARM TechCon, I was greeted by my friends Warren Kurisu and Shay Benchorin. It was good to see them both again. They were poised in front of a table with a Samsung tablet and a small Wi-Fi-ish box, next to a large Samsung printer. The demonstration was similar to a lobby check-in process, where…