Most of my investments are associated with large changes in the semiconductor industry. These changes create opportunities for new and disruptive technologies. I also look to find solutions that provide a compelling reason to adopt a new technology or approach. When talking about a new approach, it often takes longer to overcome the status quo.

In this thesis, I establish the notion of IC Integrity and the impact that this will have on what has traditionally been viewed as the design verification market. I love getting feedback, and I also love to share that feedback with people, so please let me know what you think. Thanks – Jim

Functional verification is a process for reducing the risk that bugs in the design escape into the tape-out of a semiconductor product. Historically, the market segment that the end product was intended for defined the acceptable level of investment in functional verification. A few years ago, this long-standing equation catastrophically failed. Teams could no longer find those bugs, largely because of the increasing use of embedded software. At the same time, verification for functional correctness has been increasing as a percentage of the total cost of design.

In addition, the development flow must now consider safety, security, and trust as design requirements. Safety ensures that a design continues to operate in a safe manner in the presence of random faults, while security ensures that its intended behavior cannot be altered by either environmental hazards or malicious activity. Trust ensures that no Trojans are inserted into the design during the development process.

Consider connected systems that are being compromised almost daily, with personal or financial data being stolen. Also of concern are denial of service attacks where thousands of connected devices are taken over and controlled for malicious purposes. These are security vulnerabilities that have been reported, but there may be many others that we don’t hear about.

Massive compute farms want to ensure that the failure of any piece of equipment does not bring the whole system down, instead enabling safe and secure removal of the malfunctioning device and replacement with a spare component while the system continues to operate.

We also hear about the insertion of a Trojan into a chip or system within the production supply chain enabling it to be compromised once deployed. It is essential to verify trust in the final design.

Let’s use the quintessential application driving technology today, autonomous vehicles, to illustrate the challenge. These vehicles place high demands on safety, security, and trust: they must respond to failures in the field, and a hacker must not be able to get into them.

These are the realities that a development team has to face today. You cannot get close to mitigating these problems using the tools and methodologies adopted by the majority of the industry for functional verification. This is the IC Integrity problem.

We define IC Integrity to be:

A state in which the design operates correctly, safely, and securely within the intended functional envelope, even under adverse conditions caused by failure or malicious attack, from specification and extending through to deployed product.

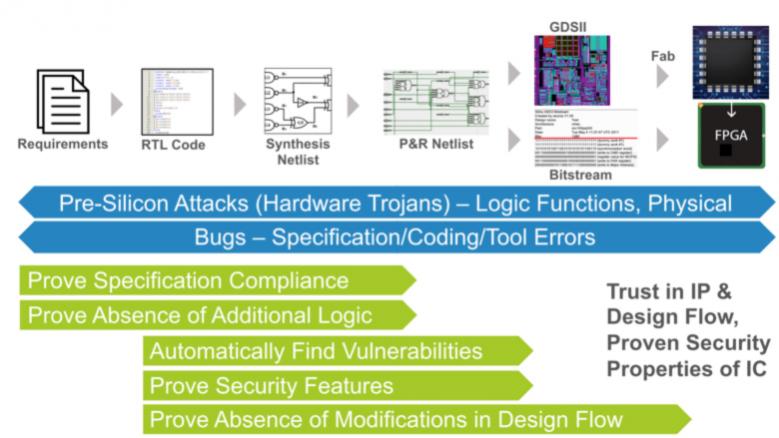

IC Integrity impacts the development process from the SoC level down to implementation and through to deployment. While most development is done at the register transfer level (RTL), a design is specified at higher levels of abstraction and has to go through some notion of physical implementation. There are aspects of integrity that are pertinent to every stage in the development process. For example, IC Integrity ensures that no unintended functionality is added, removed, or modified from verified RTL through to deployment. This may include performing equivalence checking on the bitstream intended to be downloaded onto an FPGA. While IC Integrity does not strictly include software, it is necessary for hardware to ensure that it provides a safe and secure environment in which the software can operate.

There is no one company or technology that can turn all aspects of this concept into reality today. Instead, it requires a number of different approaches across the entire development flow.

In my opinion, OneSpin Solutions represents a company that understands the implications of this in several market segments. This is why I have chosen to work with them. It’s more than formal verification.

The Three Pillars

IC Integrity is supported by three pillars:

- Functional Correctness

- Safety

- Trust and Security

Not all industries weight the pillars equally. Some will see functional correctness and security as essential for their market, whereas others will also want to include functional safety requirements. Safety and security are linked: a device cannot be safe if it is not secure, and a device that is meant to be secure may be compromised if faults are induced in it, so it relies on those faults being detected to prevent the intrusion.

In addition, there are secondary concerns for some industries, such as reliability. While this is fundamentally a different problem addressed by foundries, layout, and other back-end tasks, functional safety plays a key role in being able to detect breakdowns in devices and thus safety becomes an important aspect of reliability.

Functional Correctness

Functional correctness means demonstrating that a design performs all of the intended functions as defined by its functional requirements. Requirements may also define behaviors that must never be present.

Using simulation alone to achieve a defined level of functional correctness is no longer enough for a growing number of markets and applications. The removal of systematic errors from the design, which is increasing in size and complexity, is becoming a growing burden on development teams that have failed to adopt new verification methodologies.

Simulation is no longer adequate for the functional verification task, even when accelerated by hardware or supplemented by emulation or physical prototypes. Simulation capabilities have not scaled since single-processor performance has flattened out and the simulation algorithm is not parallelizable. Acceleration adds parallelism, in the form of custom hardware, but at a very high cost. Prevalent methodologies, such as constrained-random test generation, are becoming increasingly inefficient, especially with no definitive way to ensure coverage closure. Fundamentally, this approach is flawed because these techniques can never guarantee functional correctness and are unable to tackle the safety, security, and trust aspects required by IC Integrity.
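One way to get a feel for why purely random stimulus struggles with coverage closure is the classic coupon-collector estimate. The sketch below is only an illustration under strong assumptions that are not part of the argument above: the coverage model is a set of equally likely, independent bins, and every test hits exactly one bin. The function name is hypothetical. Real coverage spaces are far less uniform, so closure is usually harder than this estimate suggests.

```python
import math


def expected_tests_to_close(n_bins: int) -> float:
    """Expected number of uniformly random tests needed to hit every bin.

    Coupon-collector result: n * (1 + 1/2 + ... + 1/n).
    Assumes equally likely, independent bins (a deliberate simplification).
    """
    if n_bins < 1:
        raise ValueError("n_bins must be at least 1")
    harmonic = math.fsum(1.0 / k for k in range(1, n_bins + 1))
    return n_bins * harmonic


if __name__ == "__main__":
    for n in (10, 1_000, 100_000):
        print(f"{n:>7} bins -> about {expected_tests_to_close(n):,.0f} random tests")
```

Even in this idealized model, the number of tests grows faster than the number of bins, and the last few unhit bins dominate the cost.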

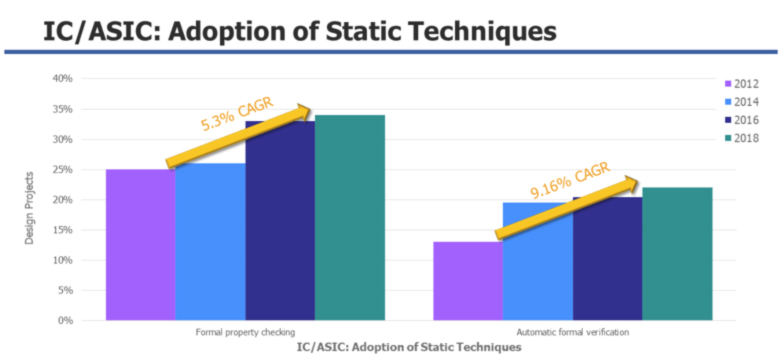

The latest Wilson Research study shows that the number of chip respins is growing, indicating that fewer companies are achieving their functional verification goals. Progressive companies are adopting formal verification technologies at an increasing pace, although only a few companies have progressed to the point where they can achieve signoff using only formal tools.

Fig 1. Growth in usage of formal technologies. Source: Wilson Research Group, 2018

This needs to change. The creation of automatic formal technologies has helped to accelerate adoption, but companies must dedicate the necessary resources to making this a larger part of their overall functional verification methodology so that it can significantly replace the time spent using dynamic verification methodologies such as simulation.

Functional Safety

Functional safety means that a system must be able to detect and properly react to random faults while it is in operation, so that those faults do not lead to failures.

The race towards autonomous cars has heightened the focus on safety. Some industries, such as avionics, have long focused on safety, but their approach has been massive replication, and cost is not considered a major issue there. This is not true for cars, where it is estimated that the cost of the electronics will quickly become half the cost of the vehicle. With crude methods such as replication, it is relatively easy to show that safety requirements are met, but when safety is directly incorporated into a design, much more in-depth analysis is required. It also adds to the complexity of the functional verification task.

The traditional approach to safety verification is through fault simulation. This requires simulating the functional verification suite with every potential fault injected into the system to see if the design can detect the existence of the fault and react appropriately. Given that the regression suites for many chips take days or weeks to run, this is clearly not a viable solution. Techniques have been developed that reduce the size of the test set required and the number of faults that need to be checked, but those numbers still represent huge computational loads.
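To make the cost concrete, here is a minimal sketch of naive fault simulation on a toy design. Everything in it is an assumption chosen for illustration: a 4-bit stored word protected by an even-parity bit stands in for the safety mechanism, the fault model is single stuck-at faults on the stored bits, and no faults are dropped once detected. It is not how any particular tool works; it only shows that the work scales as faults multiplied by tests.

```python
from itertools import product

DATA_BITS = 4  # toy width, chosen only for illustration


def parity(bits):
    return sum(bits) % 2


def encode(value):
    """Return the data bits plus an even-parity bit (the safety mechanism)."""
    bits = [(value >> i) & 1 for i in range(DATA_BITS)]
    return bits + [parity(bits)]


def checker_alarm(word):
    """Alarm fires when the stored parity disagrees with the recomputed parity."""
    return parity(word[:DATA_BITS]) != word[DATA_BITS]


def inject(word, position, stuck_value):
    """Return a copy of the word with one bit stuck at 0 or 1."""
    faulty = list(word)
    faulty[position] = stuck_value
    return faulty


def fault_simulate(tests):
    """Run every test against every fault; return (faults, detected, simulations)."""
    faults = list(product(range(DATA_BITS + 1), (0, 1)))
    detected = set()
    simulations = 0
    for fault in faults:
        for value in tests:
            simulations += 1
            if checker_alarm(inject(encode(value), *fault)):
                detected.add(fault)
    return len(faults), len(detected), simulations


if __name__ == "__main__":
    print(fault_simulate(range(2 ** DATA_BITS)))  # full test set
    print(fault_simulate([0]))                    # a single weak test
```

With the full test set all ten faults are detected at the cost of 160 simulations; with a single all-zero test only the stuck-at-1 faults are seen. Scaling both the fault list and the test suite to a real chip is what makes the approach so expensive.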

New technologies are required that reduce or eliminate the usage of fault simulation. SoC-level hardware analysis and failure mode analysis need to be automated as well as complete verification of the safety mechanisms built into the design.

Trust and Security

Trust and security mean that a system will only perform its intended function, even under duress, and that data meant to be kept private stays private and is not compromised.

It has become apparent over the past year how much security has been compromised for performance in processor designs. New vulnerabilities are being found, and the fixes for them often mean that processor performance is degraded.

Much of the infrastructure designed to connect devices and enable communications was defined before security was a concern. This basically means that every device connected to the Internet is vulnerable to attack. We have seen many examples of this being utilized to gain access to corporate networks and steal data, even though technologies such as firewalls have been incorporated to prevent such intrusion.

Within a chip, it is necessary to ensure that the software that is run on the processors is also protected. This not only means ensuring that the software can be trusted, but privileged software needs to operate within an environment that cannot be accessed by user-level software. A failure of these mechanisms means that a malicious user could compromise the device and everything that trusts it.

The semiconductor industry relies on the reuse of components, some of which may have been developed internally and others that come from third-party companies. Questions are being raised about the trust that can be placed in some third-party devices, especially when they are developed in, or owned by, foreign nations. Trust needs to be maintained throughout the design and implementation process.

We are also hearing more about side-channel attacks where data is extracted from a system by looking at secondary characteristics including power and radiated information.

Unlike functional correctness and safety, verification for security and trust is not about demonstrating that something works correctly, but instead about showing the absence of something. This is a task that can never be performed using dynamic techniques such as simulation. Security and trust can only be assured through formal technologies.
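The difference between sampling behavior and proving absence can be sketched with a toy example. The design, the 16-bit width, and the trigger value below are all hypothetical, and exhaustive enumeration is used only as a stand-in for a formal proof; real formal tools reason symbolically and do not enumerate inputs, which is why they can handle designs where enumeration is impossible.

```python
import random

WIDTH = 16
TRIGGER = 0xBEEF  # hypothetical hidden trigger value


def design(x: int) -> int:
    """Toy design: should return x unchanged, but hides a Trojan on one input."""
    if x == TRIGGER:
        return 0xFFFF  # unintended behavior
    return x


def random_simulation(n_tests: int, seed: int = 0):
    """Dynamic check: sample inputs and return a failing input, or None."""
    rng = random.Random(seed)
    for _ in range(n_tests):
        x = rng.randrange(1 << WIDTH)
        if design(x) != x:
            return x
    return None


def exhaustive_check():
    """Stand-in for a formal proof: cover the entire input space."""
    for x in range(1 << WIDTH):
        if design(x) != x:
            return x
    return None


def miss_probability(n_tests: int) -> float:
    """Chance that n uniformly random tests all miss a single trigger value."""
    return (1 - 1 / (1 << WIDTH)) ** n_tests


if __name__ == "__main__":
    print("random simulation found:", random_simulation(1000))
    print("chance 1000 random tests miss:", round(miss_probability(1000), 3))
    print("exhaustive check found:", hex(exhaustive_check()))
```

A passing random run says nothing about the inputs it did not try, whereas the exhaustive check either finds the trigger or establishes that none exists. With a realistic trigger spread across hundreds of state bits, the chance of random stimulus hitting it is effectively zero.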

Reaching IC Integrity

A lot happens between the point where a design team says they have completed and verified a design and the point when it is deployed in the field.

Fig 2. Vulnerabilities from requirements to chips. Source: OneSpin Solutions

There are two aspects to this: integration and implementation.

When IP is used in a design, the transfer of that IP block and its integration into a large system could compromise efforts that have been made within any of the three pillars if that block is not connected correctly or legally. The verification of chip assembly often involves millions of connections and complete verification of these is not possible using dynamic verification.
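As a rough illustration of what checking chip assembly involves, the sketch below compares an intended connection table with the connections extracted from an assembled design. The pin names and the dictionary format are hypothetical, and this purely structural comparison is a simplification: a real connectivity check also has to reason about connections that pass through logic or depend on operating modes.

```python
def check_connectivity(spec, netlist):
    """Compare intended connections with those found in the assembled design.

    Both arguments map a destination pin to the source pin that drives it.
    Returns three dicts: missing, wrongly driven, and unexpected connections.
    """
    missing = {dst: src for dst, src in spec.items() if dst not in netlist}
    wrong = {
        dst: (src, netlist[dst])
        for dst, src in spec.items()
        if dst in netlist and netlist[dst] != src
    }
    unexpected = {dst: src for dst, src in netlist.items() if dst not in spec}
    return missing, wrong, unexpected


# Hypothetical connection tables, for illustration only.
SPEC = {
    "uart0.clk": "clkgen.clk_periph",
    "uart0.rst_n": "rstctl.periph_rst_n",
    "aes0.key_in": "keystore.key_out",
}
NETLIST = {
    "uart0.clk": "clkgen.clk_periph",
    "aes0.key_in": "dbg.scan_out",    # driven by the wrong source
    "dbg.scan_in": "aes0.key_out",    # connection that was never specified
}

if __name__ == "__main__":
    missing, wrong, unexpected = check_connectivity(SPEC, NETLIST)
    print("missing:", missing)
    print("wrong source:", wrong)
    print("unexpected:", unexpected)
```

The unexpected and wrongly driven entries are the interesting ones for security: a connection nobody specified is exactly the kind of behavior that a test written against the specification would never exercise.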

Many transformations happen to the design as it goes through implementation. Each of those transformations can also compromise any of the three pillars.

The insertion of test logic could compromise security. The insertion of power reduction circuitry may impact both functional correctness and safety. Tools may include errors that add or remove behaviors. Even place and route may not fully understand the implications that a certain layout may have on enabling side-channel attacks. These are just a few examples of accidental changes that can compromise a system.

There are also malicious actions that may happen. It recently became clear that some printed circuit boards had rogue components added into them that enabled remote access and data theft. Industries are becoming increasingly concerned about the usage of sub-standard or counterfeit parts. And how do you know that a piece of IP that you are using is trustworthy?

FPGAs add another level of vulnerability. The bitstream that is downloaded into the device has to be protected. This requires securing the entire development flow and lifecycle of the bitstream.

Thought Example

To further explain some of the problems, it is worth considering an emerging example in the industry. The rapid rise of the open-source RISC-V instruction set architecture (ISA) is creating a lot of interest throughout the semiconductor industry. Some companies may choose to develop their own implementation of the processor, while others may buy an implementation from a third-party company. How do you know that your core is a faithful representation of the ISA and that it can be trusted?

The RISC-V Foundation is developing a compliance suite, but this only demonstrates that an implementation matches the ISA specification under a limited number of conditions. It is thus an existence demonstration, nothing more.

The development of a full verification suite is extremely expensive. Many have defined this as being the majority of the “tax” they pay for buying a commercial core. But how does a user know if their verification is sufficient? How do you trust that the core does not contain a Trojan? How do you know if it has gone through the necessary procedures to ensure safety and security?

A RISC-V core developer has to show that the core matches the ISA specification and that it does nothing but what is specified. If the development of the verification suite is a significant part of the value of a commercial core, then they are unlikely to make that public. How does the provider demonstrate that their core is trustworthy? Can a third-party RISC-V integrity solution provide the answer?

Conclusions

Functional verification is tough and getting tougher. Companies continue attempting to use existing entrenched technologies that are becoming increasingly inefficient and ineffective. Additionally, functional correctness is now only one of the verification tasks that many companies face. The addition of safety, security, and trust adds layers of complexity that are impossible to solve with older technologies. IC Integrity is becoming a mandatory requirement for a growing number of industries including embedded, cloud, infrastructure, and automotive.

Superior technologies exist to tackle many aspects of these problems. New technologies are being developed and deployed by the most advanced development teams. As an increasing number of companies realize that safety, security, and trust are becoming product differentiators, demand for these solutions will grow.

It is my belief that this will be an interesting and fruitful area for OneSpin and others to invest time and money. I would encourage the reader to agree or disagree with my thoughts, but please share your ideas.

Thanks – Jim
