The euphoria of NCAA March Madness seems to have spilled over into the tech world. Many tech talks this month, from the GPU conference and OCP to SNUG and CASPA, have revolved around growing AI adoption by companies and its integration into silicon-driven applications. At this year’s CASPA Spring Symposium, prominent industry and academic leaders shared their perspectives on the spread of AI into the Internet of Things and the impact of the AI of Things (AIoT) on the future of the semiconductor industry.

The following is an excerpt of a presentation given by Dr. Steve Woo, Fellow and Distinguished Inventor at Rambus Inc., on ‘AI in the Era of Connectivity’. Since joining Rambus, Steve has led architecture, technology, and performance analysis efforts, and has worked in marketing and product planning roles leading strategy and customer-related programs.

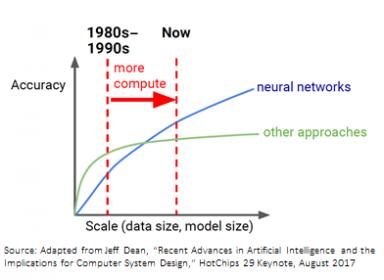

Steve was very upbeat about the transformation we are living through. Driven by recent advances in neural networks and the rise of powerful computing platforms, AI’s reach and impact on society have broadened. “In our interconnected world, the needs of data centers, edge computing and mobile devices are continuing to evolve as the role of AI in each vertical segment is evolving as well,” he said. However, critical challenges remain in enabling higher-performance, more power-efficient, and more secure infrastructure to support AI, all of which offer opportunities for the semiconductor industry.

Digital Data Growth

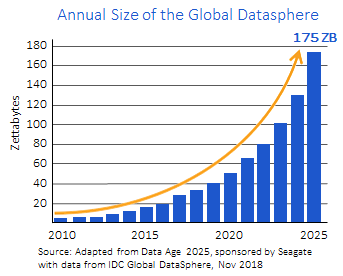

He added that while industry demand for performance and capability is trending up with the surge in digital data, the primary tools the industry has relied on for decades, Moore’s Law and Dennard scaling, are slowing or no longer available. A critical challenge will be figuring out how to keep delivering more performance and power efficiency in their absence. The infrastructure itself matters, as it holds tremendous value in managing data.

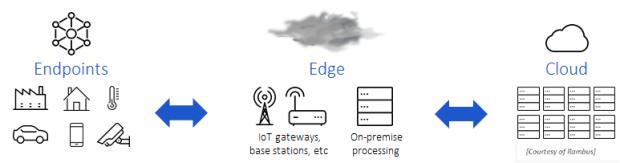

As the volume of digital data grows globally, memory, link, and storage performance must keep up in moving data to the processing engines, while both compute and I/O power efficiency must continue to improve. The emergence of edge computing has also imposed high-performance, low-power requirements for training and inference. He highlighted several steps to meet this demand: at the cloud level, analyzing broad behaviors and pushing models to the edge and endpoints; at the edge and endpoints, being more selective by communicating processed, higher-value data instead of raw data; and moving cloud processing closer to the data (at or near the endpoints). Together these improve data latency, bandwidth, and energy use while reducing stress on the underlying network.
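To make the "processed data instead of raw data" idea concrete, here is a minimal Python sketch. It is not from the talk: the sensor window, the `summarize` and `payload_bytes` helpers, and the JSON encoding are all assumptions chosen purely for illustration. It compares the size of a raw window of readings with the size of a small summary an endpoint might send instead.

```python
import json
import statistics

def summarize(samples):
    """Reduce a window of raw readings to a few higher-value statistics."""
    return {
        "count": len(samples),
        "mean": round(statistics.fmean(samples), 3),
        "max": max(samples),
        "min": min(samples),
    }

def payload_bytes(obj):
    """Size of the JSON-encoded message that would cross the network."""
    return len(json.dumps(obj).encode("utf-8"))

# Hypothetical window of 1,000 sensor readings collected at an endpoint.
raw_window = [20.0 + (i % 50) * 0.1 for i in range(1000)]
summary = summarize(raw_window)

raw_size = payload_bytes(raw_window)
summary_size = payload_bytes(summary)
print(f"raw: {raw_size} B, summary: {summary_size} B")
```

The trade-off is that the cloud no longer sees individual samples, so which statistics count as "higher value" depends on the model being served.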

AI Needs Memory Bandwidth

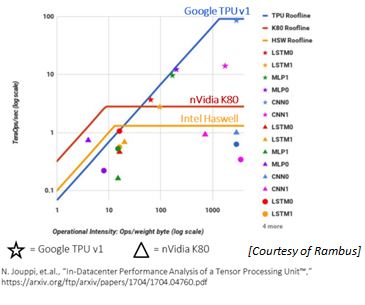

Memory bandwidth is a critical resource for AI applications. Inference on older, general-purpose hardware such as Haswell CPUs and K80 GPUs performs well, as applications can benefit from compute and memory optimizations. Inference on newer silicon built for AI processing, such as the Google TPUv1, is by contrast largely limited by memory bandwidth.
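One common way to reason about whether a workload is limited by memory bandwidth is the roofline model, in which attainable throughput is the lesser of peak compute and memory bandwidth multiplied by operational intensity (operations per byte fetched). The sketch below uses that model as an illustration; it is not taken from the presentation, and the accelerator figures are made-up round numbers rather than the specifications of any real part.

```python
def attainable_ops_per_s(peak_ops_per_s, mem_bw_bytes_per_s, ops_per_byte):
    """Roofline bound: the lower of the compute roof and the memory roof."""
    return min(peak_ops_per_s, mem_bw_bytes_per_s * ops_per_byte)

def is_memory_bound(peak_ops_per_s, mem_bw_bytes_per_s, ops_per_byte):
    """True when operational intensity sits below the ridge point."""
    ridge = peak_ops_per_s / mem_bw_bytes_per_s
    return ops_per_byte < ridge

# Illustrative accelerator, not a real part: 100 TOPS peak, 50 GB/s memory.
PEAK = 100e12
BW = 50e9

for intensity in (10, 100, 1000, 10000):  # operations per byte fetched
    perf = attainable_ops_per_s(PEAK, BW, intensity)
    bound = "memory" if is_memory_bound(PEAK, BW, intensity) else "compute"
    print(f"{intensity:>6} ops/B -> {perf / 1e12:6.1f} TOPS ({bound}-bound)")
```

With these assumed numbers, a workload must perform 2,000 operations per byte before compute becomes the limit, which is why adding raw compute without adding bandwidth can leave much of the chip idle.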

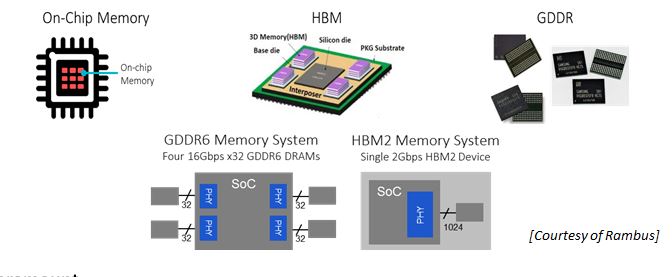

There are three well-known memory system options for AI applications: on-chip memory, HBM (High Bandwidth Memory), and GDDR (Graphics Double Data Rate). On-chip memory offers the highest bandwidth and power efficiency, as implemented in Microsoft’s BrainWave and Graphcore’s IPU. HBM, used in products such as the AMD Radeon RX Vega 56 and NVIDIA Tesla V100, offers very high bandwidth and density. However, the additional interposer and substrate, along with new manufacturing and assembly methods, introduce additional risk, as they are not as well understood as DDR or GDDR. The third option, GDDR, found in products such as the NVIDIA GeForce RTX 2080 Ti, delivers a good tradeoff between bandwidth, power efficiency, cost, and reliability. The current mainstream generations are HBM2 for HBM and GDDR6 for GDDR.
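As a rough way to compare external memory options, peak bandwidth can be estimated as the per-pin data rate times the interface width, divided by eight to convert bits to bytes. The Python sketch below works through that arithmetic. The pin rates and bus widths are assumed nominal values for illustration (a 14 Gb/s GDDR6 configuration on a 352-bit bus and a single 1024-bit HBM2 stack at 2 Gb/s per pin); they were not quoted in the talk, and sustained bandwidth in practice is lower than these peaks.

```python
def peak_bandwidth_gb_s(data_rate_gbps_per_pin, bus_width_bits):
    """Peak bandwidth in GB/s = per-pin rate (Gb/s) x bus width (bits) / 8."""
    return data_rate_gbps_per_pin * bus_width_bits / 8

# Assumed nominal figures for illustration only.
gddr6 = peak_bandwidth_gb_s(14.0, 352)       # 14 Gb/s pins on a 352-bit bus
hbm2_stack = peak_bandwidth_gb_s(2.0, 1024)  # 2 Gb/s pins, 1024-bit stack

print(f"GDDR6 example: {gddr6:.0f} GB/s")
print(f"HBM2 example (one stack): {hbm2_stack:.0f} GB/s")
```

The calculation shows the two different routes to bandwidth: GDDR relies on fast pins over a relatively narrow bus, while HBM relies on a very wide interface at a lower per-pin rate, which is what requires the interposer.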

Security Is Paramount

Data security is also becoming a concern as cyberattacks increasingly target infrastructure, not just individual users. Both the sharing of cloud hardware and growing system complexity have expanded the attack surface open to exploits. His view is that security should not be compromised for the sake of a performance gain. “Security must become a first‐class design goal, we cannot treat security as an afterthought and attempt to retrofit it into silicon and systems,” he stated.

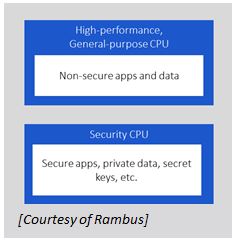

Recent exploits like Spectre, Meltdown, and Foreshadow have shown that security can be compromised through unexpected interactions between features in the processor. Processors are becoming more complex, meaning the number of interactions is growing exponentially. This has led the industry to conclude that new approaches are needed for providing secure computing. One such approach that Steve discussed is siloed execution. In this scenario, physically distinct CPUs are used: secure operations and secret data are handled on a security CPU, while non-secure applications and data are processed on a general-purpose CPU. The general-purpose CPU can be as performant and feature-rich as necessary, while the security CPU can be simple and optimized for security. Segregating processing and data in this manner also allows secret data to remain secret even if the general-purpose CPU is hacked.
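The separation described above can be loosely sketched in software. The Python below is only an analogy, not the Rambus design or any real API: the `SecurityDomain` class, the tenant names, and the use of HMAC-SHA256 are all assumptions made for illustration. The point it shows is the interface shape, in which the general-purpose side can ask questions of the secure side but never receives a key. Python’s private attributes are a naming convention, whereas real siloed execution relies on physically separate hardware.

```python
import hashlib
import hmac
import secrets

class SecurityDomain:
    """Stands in for the simple security CPU: it alone holds secret keys."""

    def __init__(self):
        self.__keys = {}  # tenant id -> secret key, never returned to callers

    def provision(self, tenant):
        """Create a per-tenant key inside the secure domain."""
        self.__keys[tenant] = secrets.token_bytes(32)

    def sign(self, tenant, data):
        """Return an HMAC-SHA256 tag; the key itself stays inside."""
        return hmac.new(self.__keys[tenant], data, hashlib.sha256).digest()

    def verify(self, tenant, data, tag):
        """Constant-time check that data was tagged with this tenant's key."""
        return hmac.compare_digest(self.sign(tenant, data), tag)

def general_purpose_workload(secure, tenant, data, tag):
    """Runs on the feature-rich side and sees only a yes/no answer."""
    return "run" if secure.verify(tenant, data, tag) else "reject"

secure = SecurityDomain()
secure.provision("tenant-a")
secure.provision("tenant-b")
model = b"weights for tenant-a"
tag = secure.sign("tenant-a", model)

print(general_purpose_workload(secure, "tenant-a", model, tag))
print(general_purpose_workload(secure, "tenant-b", model, tag))
print(general_purpose_workload(secure, "tenant-a", model + b"!", tag))
```

Keeping the secure side’s interface this narrow is what lets it stay simple: the fewer operations it exposes, the fewer feature interactions there are to audit.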

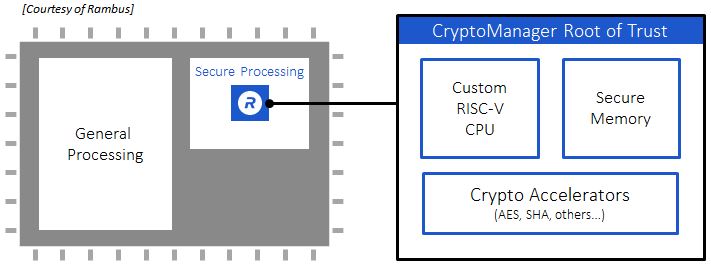

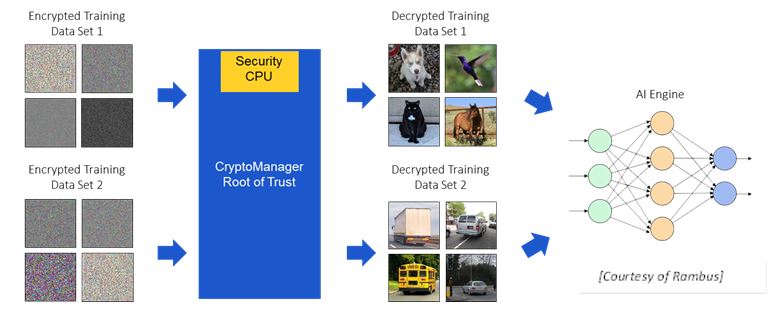

Steve elaborated on the Rambus security solution called CryptoManager Root of Trust, which comprises a customized RISC-V CPU, crypto accelerators, and secure memory components. It provides secure boot, remote attestation, authentication using various cryptographic algorithms (such as AES and SHA), and runtime integrity. The accompanying diagram illustrates how it may be integrated into an AI system operating in cloud environments, decrypting multiple users’ training sets and models with different keys and running them on cloud AI hardware.

To recap, AI is driving a renaissance in computer architecture and memory systems. The conflict between performance and security grows more pronounced as data and insights become increasingly valuable. Design teams therefore need to address security alongside design complexity, as it takes only one vulnerability for a hacker to compromise the security of the entire system and its data.
