Data compression is a critical element of many systems. Thanks to trends such as AI and highly connected systems, there is more data to store and process every day, and the growth is staggering: Statista recently estimated that 90% of the world's data was generated in the last two years. Storing and processing all that data demands ways to reduce the space it takes.

Data compression takes two basic forms: lossless and lossy. Lossy compression can produce significantly smaller files, at the cost of some loss of quality; JPEG and MP3 are examples. Lossless compression also produces smaller files, but the decompressed data is identical to the original. Some data cannot tolerate any loss during compression (text, code, and binaries, for example), and for other data the full original quality is essential (think medical imaging or financial information). GIF, PNG, and ZIP are lossless formats.

So lossless data compression is quite prevalent. That’s why a new IP core from CAST has such significance. Let’s look at how CAST advances lossless data compression speed with a new IP core.

Lossless Compression Basics

As discussed, lossless compression doesn't degrade the data: the decompressed data is identical to the original, while the stored or transmitted file is smaller. Lossless compression typically works by identifying and eliminating statistical redundancy in the data. This takes extra computing time, so ways to speed up the process matter.
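To make the lossless property concrete, here is a minimal sketch using Python's standard-library `zlib` module. It implements Deflate, not LZ4 or Snappy (neither is in the standard library), so treat it only as a stand-in for "a lossless codec". Redundant input shrinks a lot, decompression returns exactly the original bytes, and random bytes, which have little statistical redundancy, barely shrink at all.

```python
import os
import zlib

# Redundant input: the same short record repeated many times.
original = b"sensor_id=7;temp=21.5;" * 500
compressed = zlib.compress(original, 9)
restored = zlib.decompress(compressed)

assert restored == original  # lossless: bit-for-bit identical to the input
print(f"redundant: {len(original)} bytes -> {len(compressed)} bytes")

# Random bytes offer almost no redundancy to remove, so they barely shrink
# (and may even grow slightly because of format overhead).
noise = os.urandom(len(original))
print(f"random: {len(noise)} bytes -> {len(zlib.compress(noise, 9))} bytes")
```

The record string and sizes are illustrative only; actual ratios depend entirely on the data.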

There are many algorithms that can be applied to this problem. Two popular ones are:

- LZ4: an algorithm known for its extremely fast decoder. The LZ4 library is open-source software released under a BSD license.

- Snappy: a compression/decompression library from Google that targets very high speed with reasonable compression. Google uses it extensively.
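Why is the LZ4 decoder so fast? A minimal Python sketch of decoding one raw LZ4 *block* (the inner format only, without the LZ4 frame header or checksums) shows how little work each step involves: read a token byte, copy some literal bytes verbatim, then copy a match from output already produced. The function name `lz4_block_decompress` is hypothetical; this is neither the LZ4 library's API nor a description of how CAST's RTL is built.

```python
def lz4_block_decompress(src: bytes) -> bytes:
    """Decode a single raw LZ4 block (no frame header, no checksums)."""
    out = bytearray()
    i, n = 0, len(src)
    while i < n:
        token = src[i]
        i += 1
        # High nibble: literal length; 15 means extra length bytes follow.
        lit_len = token >> 4
        if lit_len == 15:
            while True:
                b = src[i]
                i += 1
                lit_len += b
                if b != 255:
                    break
        out += src[i:i + lit_len]  # literals are copied verbatim
        i += lit_len
        if i >= n:
            break  # the final sequence carries literals only
        # 16-bit little-endian offset back into the decoded output.
        offset = src[i] | (src[i + 1] << 8)
        i += 2
        if offset == 0 or offset > len(out):
            raise ValueError("invalid match offset")
        # Low nibble: match length minus the 4-byte minimum match.
        match_len = token & 0x0F
        if match_len == 15:
            while True:
                b = src[i]
                i += 1
                match_len += b
                if b != 255:
                    break
        match_len += 4
        start = len(out) - offset
        # Byte-by-byte copy so overlapping matches (offset < length) repeat data.
        for k in range(match_len):
            out.append(out[start + k])
    return bytes(out)


# Hand-built block: literals "abc", a 12-byte match at offset 3, final literals "hello".
block = b"\x38abc\x03\x00\x50hello"
print(lz4_block_decompress(block))  # b'abcabcabcabcabchello'
```

There is no bit-level entropy decoding anywhere in the loop; everything is byte-aligned copying, which is what makes LZ4 decoding cheap in software and compact in hardware.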

The CAST Announcement

With that primer, the recent CAST announcement is easier to put in context.

New LZ4 & Snappy IP Core from CAST Enables Fast Lossless Data Decompression.

This announcement focuses on a new IP core from CAST that accelerates decompression for the popular LZ4 and Snappy algorithms. The core can be used in either ASIC or FPGA implementations and provides a hardware decompression engine. Average throughput is a peppy 7.8 bytes of decompressed data per clock cycle in its default configuration. And since it's an IP core, aggregate decompression throughput can be scaled to 100Gbps or more by instantiating the core multiple times.
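The throughput arithmetic is easy to check. The article does not state a clock frequency, so the 500 MHz and 1 GHz values below are assumptions for illustration only, and the function names are hypothetical. Per-instance throughput is bytes per cycle × 8 bits × clock rate; the instance count for a target rate is that target divided by the per-instance rate, rounded up.

```python
import math


def per_instance_gbps(bytes_per_cycle: float, clock_ghz: float) -> float:
    # bytes/cycle * 8 bits/byte * (clock_ghz * 1e9) cycles/s, expressed in Gbit/s
    return bytes_per_cycle * 8 * clock_ghz


def instances_needed(target_gbps: float, bytes_per_cycle: float, clock_ghz: float) -> int:
    # Round up: a fraction of an instance still needs a whole instance.
    return math.ceil(target_gbps / per_instance_gbps(bytes_per_cycle, clock_ghz))


# 7.8 bytes/cycle is the article's default-configuration average; clocks are assumed.
for clock_ghz in (0.5, 1.0):
    rate = per_instance_gbps(7.8, clock_ghz)
    count = instances_needed(100, 7.8, clock_ghz)
    print(f"{clock_ghz} GHz: {rate:.1f} Gbps per instance, {count} instances for 100 Gbps")
```

At an assumed 1 GHz clock, one instance delivers about 62.4 Gbps, so two would cover 100Gbps. Because 7.8 bytes/cycle is an average, real throughput will vary with the data, and parallel instances need independent streams or blocks to work on.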

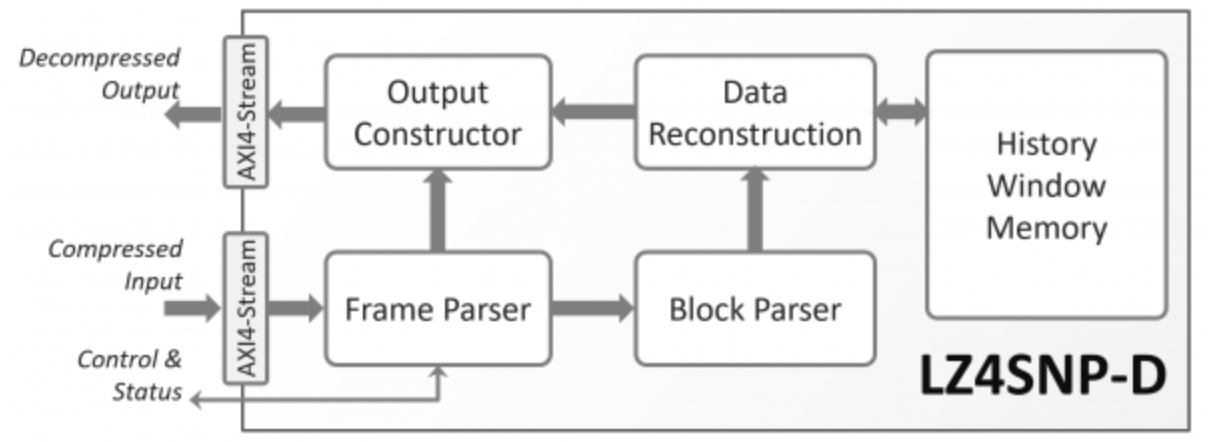

CAST believes the core, called LZ4SNP-D, is the first available RTL-designed IP core to implement LZ4 and Snappy lossless data decompression in ASICs or FPGAs from all popular providers. Because the core operates standalone, systems using it relieve the host CPU of the significant task of decompressing data.

The core parses incoming compressed data files without any special preprocessing. Its extensive error tracking and reporting capabilities support smooth system operation, enabling automatic recovery from CRC-32, file size, coding, and non-correctable ECC errors.
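The press release doesn't describe how the core obtains its expected CRC and size values, so the following is only a software sketch of the general idea behind two of those checks. It compares the decompressed output against an expected CRC-32 and an expected length, assumed here to be delivered alongside the compressed file. `check_payload` and the error labels are hypothetical; `zlib.crc32` computes the standard CRC-32 (the same checksum carried in a GZIP trailer).

```python
import zlib


def check_payload(data: bytes, expected_crc32: int, expected_size: int) -> list:
    """Return the names of detected errors; an empty list means the payload checks out."""
    errors = []
    if len(data) != expected_size:
        errors.append("size_mismatch")
    if zlib.crc32(data) & 0xFFFFFFFF != expected_crc32:
        errors.append("crc32_mismatch")
    return errors


payload = b"example boot image contents"
crc = zlib.crc32(payload)
print(check_payload(payload, crc, len(payload)))  # []

corrupted = bytearray(payload)
corrupted[0] ^= 0x01  # flip a single bit; CRC-32 always detects single-bit errors
print(check_payload(bytes(corrupted), crc, len(payload)))  # ['crc32_mismatch']
```

A hardware engine can report errors like these to the host and let the system decide whether to retry or discard the data, which is presumably what "automatic recovery" covers.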

My Conversation with the CEO

At DAC, I was able to meet with Nikos Zervas, the CEO of CAST. I found Nikos to be a great source of information on the company. You can read about that conversation here. So, when I saw this press release, I reached out to him to get some more details.

It turns out lossless data compression isn’t new for CAST. The company has been offering GZIP/ZLIB/Deflate lossless data compression and decompression engines since 2014. These engines have scalable throughput, and there are customers using them to compress and decompress at rates exceeding 400Gbps.

Applications include optimizing storage and/or communication bandwidth in data centers (e.g., SSDs, NICs) and in automotive systems (e.g., recording sensor data). In other applications, compression reduces the delay and power of moving large amounts of data between or within SoCs. I remember several challenging chip designs from my ASIC days where this kind of problem quickly became a real nightmare.

An example to consider is inline decompression of boot images stored in flash. Flash memories are slow and power-hungry. Storing a compressed boot image and decompressing it on the fly during boot can significantly reduce both the latency (i.e., boot time) and the energy needed to load it. Other use cases involve chipsets exchanging large amounts of data over Gbps-capable connections, or parallel processing platforms moving data from one processing element to the next.
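A back-of-the-envelope model shows why the boot-image case works. It assumes that reading flash and decompressing are fully pipelined, so the compressed path is limited by whichever stage is slower. All numbers (64 MB image, 2:1 ratio, 400 MB/s flash, 500 MHz clock) are hypothetical, and `boot_load_times` is an illustrative name.

```python
def boot_load_times(image_bytes: float, ratio: float,
                    flash_bytes_per_s: float, decomp_bytes_per_s: float):
    """Return (uncompressed_s, compressed_s) estimates for loading a boot image.

    Assumes flash reads and decompression overlap completely, so the compressed
    path takes as long as the slower of the two stages.
    """
    uncompressed_s = image_bytes / flash_bytes_per_s
    read_s = (image_bytes / ratio) / flash_bytes_per_s   # fewer bytes leave the flash
    decode_s = image_bytes / decomp_bytes_per_s          # decompressor emits full image
    return uncompressed_s, max(read_s, decode_s)


# Hypothetical: 64 MB image, 2:1 ratio, 400 MB/s flash,
# decompressor at 7.8 bytes/cycle x 500 MHz.
plain, packed = boot_load_times(64e6, 2.0, 400e6, 7.8 * 500e6)
print(f"uncompressed: {plain:.3f} s, compressed: {packed:.3f} s")
# uncompressed: 0.160 s, compressed: 0.080 s
```

With a decompressor this much faster than the flash, load time scales with the compressed size. If flash read energy is roughly proportional to bytes read (another simplifying assumption), a 2:1 ratio roughly halves that energy too.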

CAST's GZIP/ZLIB/Deflate lossless cores already have tens of customers, ranging from Tier 1 OEMs in networking and telecom equipment to augmented reality startups.

I asked Nikos why CAST is introducing new lossless compression cores now. He explained that LZ4 and Snappy may not compress as efficiently as GZIP, but they are less computationally complex, which makes them attractive when compression is sometimes (or always) performed in software. The lower computational complexity also translates into a smaller, faster hardware implementation. That matters especially at high processing rates (e.g., 100Gbps or 400Gbps), where the compression or decompression engines are large (on the order of millions to tens of millions of gates).
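Part of that lower complexity is structural. Deflate (used by GZIP and ZLIB) layers Huffman coding on top of LZ77-style matching, so a decoder must unpack variable-length bit codes. Snappy, like LZ4, has no entropy-coding stage: it is a byte-aligned stream of literals and back-references. A minimal Python sketch of a Snappy decoder follows, based on the publicly documented Snappy raw format. `snappy_decompress` is a hypothetical name, not the Snappy library's API, and this sketch says nothing about CAST's hardware design.

```python
def snappy_decompress(src: bytes) -> bytes:
    """Decode a raw Snappy stream (no framing format, no checksums)."""
    # Preamble: uncompressed length as a little-endian base-128 varint.
    length, shift, i = 0, 0, 0
    while True:
        b = src[i]
        i += 1
        length |= (b & 0x7F) << shift
        shift += 7
        if b < 0x80:
            break
    out = bytearray()
    while i < len(src):
        tag = src[i]
        i += 1
        kind = tag & 0x03  # the low two bits select the element type
        if kind == 0:  # literal
            n = tag >> 2  # stores length - 1 when < 60
            if n >= 60:  # 60..63: length - 1 is held in the next 1..4 bytes
                extra = n - 59
                n = int.from_bytes(src[i:i + extra], "little")
                i += extra
            n += 1
            out += src[i:i + n]
            i += n
            continue
        if kind == 1:  # copy, length 4..11, 11-bit offset
            n = ((tag >> 2) & 0x07) + 4
            offset = ((tag >> 5) << 8) | src[i]
            i += 1
        elif kind == 2:  # copy, length 1..64, 2-byte offset
            n = (tag >> 2) + 1
            offset = int.from_bytes(src[i:i + 2], "little")
            i += 2
        else:  # copy, length 1..64, 4-byte offset
            n = (tag >> 2) + 1
            offset = int.from_bytes(src[i:i + 4], "little")
            i += 4
        if offset == 0 or offset > len(out):
            raise ValueError("invalid copy offset")
        start = len(out) - offset
        for k in range(n):  # byte-by-byte so overlapping copies work
            out.append(out[start + k])
    if len(out) != length:
        raise ValueError("length mismatch")
    return bytes(out)


# Hand-built stream: length 20, literal "abc", 12-byte copy at offset 3, literal "hello".
print(snappy_decompress(b"\x14\x08abc\x2e\x03\x00\x10hello"))  # b'abcabcabcabcabchello'
```

Every branch is a fixed-width byte parse followed by a copy, with no bit-level code tables to build or walk. The length check at the end also shows where a "file size" error of the kind described earlier could come from.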

CAST had received multiple requests for these faster compression algorithms over the past couple of years. Nikos explained that the company listened and responded with the hardware LZ4/Snappy decompressor, and that a compressor core will follow. The technology appears to be in demand: CAST signed its first lead customer before announcing the core.

To Learn More

The new LZ4SNP-D LZ4/Snappy Data Decompressor IP core is available now. It can be delivered as synthesizable HDL (SystemVerilog) or as a targeted FPGA netlist and includes everything required for successful implementation. Its deliverables include:

- Sophisticated test environment

- Simulation scripts, test vectors, and expected results

- Synthesis script

- Comprehensive user documentation

If your next design requires lossless compression, you should check out this new IP from CAST here. And that’s how CAST advances lossless data compression speed with a new IP core.
