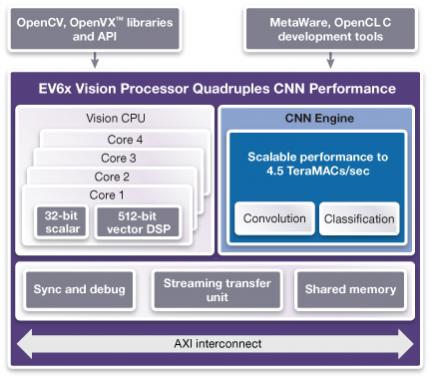

When Synopsys bought Virage Logic in 2010, ARC processor IP came in the basket, but at that time the ARC processor core was far from the most powerful on the market. With the launch of the EV6x vision processor, Synopsys appears to have raised ARC processing power by several orders of magnitude. The EV6x delivers up to 4X higher performance on common vision processing tasks than the previous generation, reaching up to 4.5 TMAC/s in a 16nm process.

In fact, even though the EV6x is part of the ARC CPU IP family, this vision processor is a completely new product, defined to address high-throughput applications such as ADAS, video surveillance and virtual/augmented reality. If an architect is still hesitating between a CPU, GPU, H/W accelerator or DSP-based solution, the performance, power efficiency and flexibility of a solution like the EV6x should greatly ease the decision.

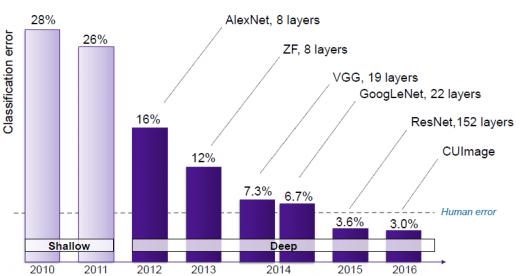

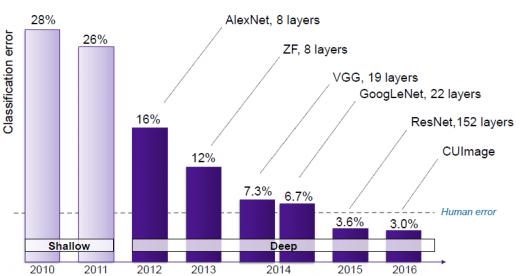

Why is the Convolutional Neural Network (CNN) becoming a key part of a vision processor? Because CNNs support deep learning, and this approach outperforms other vision algorithms. Attempting to replicate how the brain sees, a CNN recognizes objects directly from pixel images with minimal pre-processing. If we look at the relative performance of the various algorithms since 2012 and compare the results with the human error rate, we notice two important points. Since 2014, deep convolutional network algorithms have been giving better results than humans; moreover, the deeper the network, the better it performs (see ResNet with 152 layers). We can also conclude from this evolution that, for such a fast-moving technology, flexibility is mandatory, and that custom-designed solutions based on H/W accelerators quickly become obsolete.
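
To make "recognizes objects directly from pixel images" a little more concrete, here is a minimal sketch of the basic CNN building block: a small kernel slid over the image, followed by a ReLU. It is a pure-Python illustration, not EV6x code; the function name, the toy image and the edge-detecting kernel are all assumptions chosen for the example. It also counts multiply-accumulate (MAC) operations, the unit behind the TMAC/s figures quoted in this article.

```python
def conv2d_relu(image, kernel):
    """Valid (no padding), stride-1 2D convolution followed by ReLU.

    As in most CNN frameworks, the kernel is not flipped, so this is
    strictly a cross-correlation. Returns (feature_map, mac_count).
    """
    img_h, img_w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    out_h, out_w = img_h - k_h + 1, img_w - k_w + 1
    feature_map = []
    macs = 0
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0
            for i in range(k_h):
                for j in range(k_w):
                    acc += image[r + i][c + j] * kernel[i][j]  # one MAC
                    macs += 1
            row.append(max(acc, 0))  # ReLU activation
        feature_map.append(row)
    return feature_map, macs


# Hypothetical 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hypothetical 3x3 kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map, macs = conv2d_relu(image, kernel)
print(feature_map)  # [[27, 27], [27, 27]]
print(macs)         # 36 (2x2 outputs, 9 MACs each)
```

Even this toy case needs 36 MACs for a 4x4 image and a single kernel. A real network applies many kernels per layer over many layers, which is why MAC throughput is the figure of merit for a CNN engine.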

But the latest vision requirements demand ever more computational bandwidth and accuracy. Image resolution and frame rate requirements have moved from 1MP at 15 fps to 8MP at 60 fps, and neural network complexity is increasing greatly, from 8 layers to more than 150 layers. That’s why the new EV6x has been boosted to deliver up to 4.5 TMAC/s while keeping power consumption in mind. For CNN, the power efficiency is up to 2,000 GMAC/s per Watt in 16nm FinFET technology (worst-case conditions). Because performance is key, architects can integrate up to four vision CPUs for scalable performance, and the vision CPU supports complex computer vision algorithms, including pre- and post-CNN processing.
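
The figures above can be put side by side with a little arithmetic. The sketch below uses only the numbers quoted in this article; the variable names are illustrative. The derived values come with a loud caveat: both 4.5 TMAC/s and 2,000 GMAC/s per Watt are "up to" figures, and the article does not say that they hold at the same operating point, so the implied power is an assumption-laden estimate, not a specification.

```python
def pixel_rate(megapixels, fps):
    """Pixels per second for a given sensor resolution and frame rate."""
    return megapixels * 1_000_000 * fps


old_rate = pixel_rate(1, 15)    # 1MP at 15 fps
new_rate = pixel_rate(8, 60)    # 8MP at 60 fps
growth = new_rate / old_rate    # how much more pixel data per second

PEAK_MACS_PER_S = 4.5e12                # "up to 4.5 TMAC/s"
EFFICIENCY_MACS_PER_S_PER_W = 2000e9    # "up to 2,000 GMAC/s per Watt"

# MAC budget per incoming pixel if the full peak rate were sustained.
macs_per_pixel = PEAK_MACS_PER_S / new_rate

# Power implied ONLY IF peak throughput and peak efficiency coincided,
# which is an assumption the article does not confirm.
implied_power_w = PEAK_MACS_PER_S / EFFICIENCY_MACS_PER_S_PER_W

print(growth)           # 32.0
print(macs_per_pixel)   # 9375.0
print(implied_power_w)  # 2.25
```

The pixel rate alone has grown 32X, before counting the jump from 8 to more than 150 network layers, which explains why raw MAC throughput had to rise so sharply.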

Synopsys has used several techniques to reduce data bandwidth requirements and decrease power consumption. For example, the coefficients and feature maps are compressed and decompressed. The EV6x solution includes CNN engines using 12-bit computations, leading to less power and area but with the same accuracy as 32-bit floating point. This is a wise choice if you consider that a 12-bit multiplier is almost half the area of a 16-bit multiplier! To be ready for the next technology jump, the EV6x also supports neural networks trained for 8-bit precision, and we have seen that CNN vision is a fast-moving domain.
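
The reduced-precision idea can be illustrated with a short sketch. It quantizes weights and activations to signed 12-bit (or 8-bit) integers, performs the multiply-accumulate in integers, and rescales the result. This is a generic symmetric quantization scheme chosen for illustration, not a description of how the EV6x CNN engine actually works; the sample values and function names are hypothetical. The area helper assumes multiplier area grows roughly with the square of the operand width, a common first-order rule of thumb, under which (12/16)² ≈ 0.56, in line with "almost half".

```python
def quantize(values, bits):
    """Symmetric linear quantization to signed `bits`-bit integers.

    Returns (integer_values, scale) with value ~= integer * scale.
    """
    q_max = 2 ** (bits - 1) - 1            # 2047 for 12 bits, 127 for 8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / q_max if max_abs else 1.0
    return [round(v / scale) for v in values], scale


def quantized_dot(a, b, bits):
    """Dot product computed with integer MACs, then rescaled to a float."""
    qa, scale_a = quantize(a, bits)
    qb, scale_b = quantize(b, bits)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer multiply-accumulate
    return acc * scale_a * scale_b


def multiplier_area_ratio(bits_small, bits_large):
    """First-order estimate, ASSUMING area scales with operand width squared."""
    return (bits_small / bits_large) ** 2


# Hypothetical weights and activations for a single neuron.
weights = [0.12, -0.53, 0.98, -0.07, 0.31]
activations = [0.80, 0.25, -0.44, 0.66, 0.10]

exact = sum(w * x for w, x in zip(weights, activations))  # Python float reference
approx_12 = quantized_dot(weights, activations, 12)
approx_8 = quantized_dot(weights, activations, 8)
area_ratio = multiplier_area_ratio(12, 16)

print(abs(exact - approx_12))  # rounding error with 12-bit operands
print(abs(exact - approx_8))   # rounding error with 8-bit operands
print(area_ratio)              # 0.5625
```

Note that this only shows the rounding error of a single dot product. Whether a whole network keeps its accuracy at 12 or 8 bits depends on the network and on how it was trained, which is why the article specifies networks trained for 8-bit precision.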

For vision, a CNN can be used for multiple tasks, such as image classification, search for similar images, or object detection, classification and localization. These tasks support automotive ADAS systems, for example, but not only those. The EV6x vision processor will also support surveillance applications as well as drones, virtual or augmented reality, mobile, digital still cameras, multi-function printers, medical… and probably more to come!

Availability

The DesignWare EV61, EV62 and EV64 processors are scheduled to be available in August 2017. The MetaWare Development Toolkit and EV SDK Option (which includes the OpenCV library, OpenVX runtime framework, CNN Graph Mapping tools and OpenCL C compiler) are available now.

By Eric Esteve from IPnest
