The CCIX consortium has developed the Cache Coherent Interconnect for Accelerators (CCIX) protocol. The goal is to support cache coherency, allowing faster and more efficient sharing of memory between processors and accelerators, while using PCIe 4.0 as the transport layer. Alongside Ethernet, PCI Express is certainly the most popular protocol in existing server ecosystems, whether for in-memory database processing or networking, which pushed the consortium to select PCIe 4.0 as the transport layer for CCIX.

But PCIe 4.0 is defined by the PCI-SIG to run at up to 16 Gbps only, so the CCIX consortium has defined extended speed modes up to 25 Gbps (2.5 Gbps, 8 Gbps, 16 Gbps, 25 Gbps). The goal is to allow multiple processor architectures with different instruction sets to seamlessly share data in a cache coherent manner over existing interconnects, boosted up to 25 Gbps to meet the bandwidth needs of tomorrow's applications, like big data analytics, search, machine learning, network functions virtualization (NFV), video analytics, wireless 4G/5G, and more.

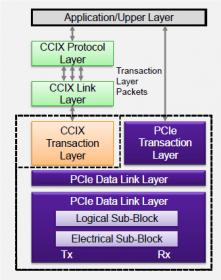

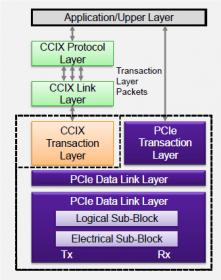

How do you implement cache coherency in an existing protocol like PCIe? By inserting two new packet-based transaction layers, the CCIX Protocol Layer and the CCIX Link Layer (in green in the above picture). These two layers process a set of commands/responses implementing the coherency protocol (think MESI: Modified, Exclusive, Shared, Invalid and the like). Note that these layers are user defined, while Synopsys provides the PCIe 4.0 controller, able to support up to 16 lanes running at 25 Gbps. The PCI Express commands/responses then carry the coherency protocol commands/responses, with PCIe acting as the transport layer.
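To make the MESI reference concrete, here is a minimal sketch of the textbook MESI state transitions for a single cache line. It is not the CCIX message set: the names `State`, `Event`, `next_state` and the `others_have_copy` flag are hypothetical, chosen only for illustration.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"
    EXCLUSIVE = "E"
    SHARED = "S"
    INVALID = "I"


class Event(Enum):
    LOCAL_READ = "local_read"      # this agent reads the line
    LOCAL_WRITE = "local_write"    # this agent writes the line
    REMOTE_READ = "remote_read"    # another agent reads the line
    REMOTE_WRITE = "remote_write"  # another agent writes the line


def next_state(state, event, others_have_copy=False):
    """Return the next textbook MESI state for one cache line.

    others_have_copy only matters when an INVALID line is read locally:
    it decides between SHARED and EXCLUSIVE. (Illustrative assumption.)
    """
    if event is Event.LOCAL_WRITE:
        # A local write always ends with the only up-to-date copy.
        return State.MODIFIED
    if event is Event.REMOTE_WRITE:
        # Another agent takes ownership, so the local copy is stale.
        return State.INVALID
    if event is Event.LOCAL_READ:
        if state is State.INVALID:
            return State.SHARED if others_have_copy else State.EXCLUSIVE
        return state  # M, E and S already hold valid data
    # REMOTE_READ: an owned line is downgraded to SHARED
    # (a MODIFIED line would also be written back first).
    if state in (State.MODIFIED, State.EXCLUSIVE):
        return State.SHARED
    return state
```

In a CCIX system, it is the commands and responses exchanged by the two new layers that would trigger transitions of this kind between a processor's cache and an accelerator's cache.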

The internal SoC logic is expected to implement the coherency itself, so the coherency protocol can be tightly tied to the CPU, offering opportunities for innovation and differentiation. Synopsys considers that its customers are likely to separate the data path for CCIX traffic from that of “normal” PCIe traffic; the PCI Express protocol offers Virtual Channels (VC), which can be used by CCIX for this purpose.

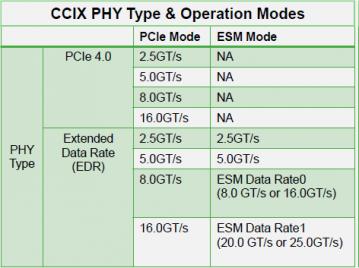

The PHY associated with the CCIX protocol will have to support the classical PCIe 4.0 modes up to 16 GT/s (2.5 GT/s, 5 GT/s, 8 GT/s, 16 GT/s) and also the Extended Speed Modes (ESM), which allow Extended Data Rate (EDR) support. Two ESM rates are defined: ESM Data Rate0 (8.0 GT/s or 16.0 GT/s) and ESM Data Rate1 (20.0 GT/s or 25.0 GT/s).
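To get a feel for what these rates mean in bandwidth, the following rough sketch converts a per-lane transfer rate and a lane count into raw throughput per direction. PCIe uses 8b/10b encoding at 2.5 and 5 GT/s and 128b/130b at 8 and 16 GT/s; that the 20 and 25 GT/s ESM rates also use 128b/130b is an assumption made here, and packet overhead is ignored. The function name `link_bandwidth_gbytes` is hypothetical.

```python
# Line-encoding efficiency per transfer rate (GT/s).
# 8b/10b and 128b/130b are the PCIe encodings; applying 128b/130b
# to the 20 and 25 GT/s ESM rates is an assumption of this sketch.
ENCODING_EFFICIENCY = {
    2.5: 8 / 10,
    5.0: 8 / 10,
    8.0: 128 / 130,
    16.0: 128 / 130,
    20.0: 128 / 130,
    25.0: 128 / 130,
}


def link_bandwidth_gbytes(rate_gt_s, lanes):
    """Raw bandwidth in GB/s, per direction, before packet overhead."""
    if rate_gt_s not in ENCODING_EFFICIENCY:
        raise ValueError(f"unsupported rate: {rate_gt_s} GT/s")
    if not 1 <= lanes <= 16:
        raise ValueError(f"unsupported lane count: {lanes}")
    # One transfer carries one bit per lane; divide by 8 for bytes.
    return rate_gt_s * lanes * ENCODING_EFFICIENCY[rate_gt_s] / 8


if __name__ == "__main__":
    for rate in (16.0, 25.0):
        print(f"x16 at {rate} GT/s: {link_bandwidth_gbytes(rate, 16):.2f} GB/s")
```

Under these assumptions, a x16 link moves from about 31.5 GB/s per direction at 16 GT/s to about 49.2 GB/s at 25 GT/s.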

ESM can support four operations:

1. PCIe compliant phase: the Physical Layer is fully compliant with the PCIe spec

2. Software discovery: the System Software (SW) probes configuration space to find the CCIX transport DVSEC capability

3. Calibration: components optionally use this state to calibrate PHY logic for the upcoming ESM data rate

4. Speed change: components execute Speed Change & Equalization according to the same rules as the PCIe specification
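The four steps above can be read as an ordered bring-up sequence. The sketch below models that ordering only; it is not taken from the CCIX specification. `EsmPhase` and `esm_bring_up` are hypothetical names, and stopping after discovery when no CCIX transport DVSEC is found is an assumption about what software would do.

```python
from enum import Enum


class EsmPhase(Enum):
    PCIE_COMPLIANT = 1       # link runs at standard PCIe rates
    SOFTWARE_DISCOVERY = 2   # SW probes config space for the CCIX DVSEC
    CALIBRATION = 3          # optional PHY calibration for the ESM rate
    SPEED_CHANGE = 4         # speed change and equalization, PCIe rules


def esm_bring_up(has_ccix_dvsec, needs_calibration):
    """Return the ordered list of phases a link goes through.

    has_ccix_dvsec: whether software found the CCIX transport DVSEC.
    needs_calibration: whether the components use the optional
    calibration state before changing speed.
    """
    phases = [EsmPhase.PCIE_COMPLIANT, EsmPhase.SOFTWARE_DISCOVERY]
    if not has_ccix_dvsec:
        # Assumption: without the capability, the link simply
        # stays at PCIe compliant rates.
        return phases
    if needs_calibration:
        phases.append(EsmPhase.CALIBRATION)
    phases.append(EsmPhase.SPEED_CHANGE)
    return phases
```

For example, `esm_bring_up(True, False)` skips calibration and goes straight from discovery to the speed change.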

The CCIX controller proposed by Synopsys inherits all the features of the PCIe controller, supporting all transfer speeds from 2.5G to 16G, plus ESM up to 25G. The digital controller is highly configurable, supporting CCIX r2.0, PCIe 4.0 and Single Root I/O Virtualization (SR-IOV), and is backward compatible with PCIe 3.1, 2.1 and 1.1. The controller supports End Point (EP), Root Port (RP), Dual Mode (EP and RP) and Switch configurations, with x1 to x16 lanes.

On the application side, the customer can select a Native I/F or an AMBA I/F, along with dedicated CCIX Transmit and CCIX Receive application interfaces. The interface between the controller and the PHY is PIPE 4.4.1 compliant, with CCIX extensions for ESM-capable PHYs. To support 25G, Synopsys proposes its multi-protocol PHY IP in 16nm and 7nm FinFET, compliant with Ethernet, PCI Express, SATA and the new CCIX, and supporting chip-to-chip, port-side and backplane configurations.

Because the CCIX/PCIe 4.0 solution targets key applications in the high-end storage, data server and networking segments, reliability is extremely important. The IP solution offers various features to guarantee high reliability, availability and serviceability (RAS), increasing data protection, system availability and diagnosis, including memory ECC, error detection and statistics.

I have been familiar with the PCI Express protocol since 2005, when I was marketing director for an IP vendor selling the controller (at that time PCIe 1.0 at 2.5 Gbps), and Synopsys was already the leader in the PCIe IP segment. Twelve years later, Synopsys is claiming 1500 PCIe IP design-ins!

If we restrict ourselves to the PCIe 4.0 specification, Synopsys is announcing 30 design-ins across various applications: 20 in enterprise (Cloud Computing/Networking/Server), 3 in digital home/digital office, 5 in storage and 2 in automotive. Nobody would have forecast PCIe penetration in automotive 15 years ago, but it shouldn’t be surprising to see that, after mobile (with Mobile Express) and storage (NVM Express), the protocol has been selected to support the cache coherent interconnect for accelerators specification.

By Eric Esteve from IPnest

More about DesignWare CCIX: DesignWare CCIX IP Solutions
