I mentioned some time ago (a DVCon or two ago) that Accellera had started working on a standard to quantify IP security. At the time I talked about some of the challenges in the task but nevertheless applauded the effort. You’ve got to start somewhere, and some way to quantify this is better than none, as long as it doesn’t deliver misleading metrics. Now they’ve released a white paper and set up a community forum for discussion.

The working group is unquestionably worthy: multiple people from Intel and Qualcomm, along with representatives from Cadence, Synopsys, Tortuga and OneSpin. I didn’t see Rambus among the list of authors, a curious omission since they’re pretty well known in this space.

The white paper is somewhat reminiscent (to me at least) of the Reuse Methodology Manual (RMM), not in length but in the general approach. This is a very complex topic, so even with a lot of smart people working on it you can’t expect an algorithmic process laid out with all the i’s dotted and t’s crossed plus a definitive metric at the end.

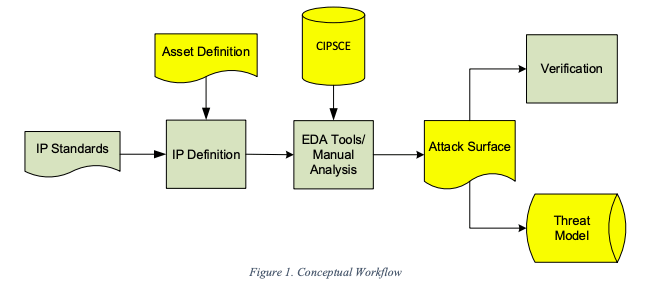

The overall standard is called IP Security Assurance (IPSA). In this first incarnation it primarily defines collateral to be provided with an IP, along with an evolving and generally available database, the Common IP Security Concerns Enumeration (CIPSCE). The latter is modeled on the MITRE Common Weakness Enumeration, widely accepted as the reference for software security weaknesses. CIPSCE aims to do the same for hardware security, providing a standard, shared and evolving set of known weaknesses. This really has to be the heart of the standard.

Starting with the asset definition and the database, the IPSA process requires building, either through a tool or manually, an attack surface consisting of those signals through which an attack might be launched into the IP or privileged information might be read out of the IP. Adding CIPSCE associations to the attack surface produces a threat model. Here the flow becomes a little confusing to me, no doubt to be further refined. It seems you do verification with the attack surface, but the threat model is primarily documentation for the integrator to aid in mitigating threats, rather than something that might also be an input to verification (at both IP and integration levels)?
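
To make that relationship concrete, here is how I picture it in a few lines of Python. This is only my own sketch, not anything taken from the white paper: the `Signal` class, the `build_threat_model` function, the signal names and the `CONCERN-*` identifiers are all hypothetical placeholders (there is no CIPSCE database to draw real IDs from yet).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    """One port on the IP boundary (hypothetical representation)."""
    name: str
    direction: str  # "in": attack may be launched; "out": data may leak


def build_threat_model(attack_surface, associations):
    """Pair every attack-surface signal with its associated concern IDs.

    Signals with no association are kept with an empty list so that
    gaps in the analysis stay visible to the integrator.
    """
    return {
        sig.name: sorted(associations.get(sig.name, []))
        for sig in attack_surface
    }


# Hypothetical attack surface for an imaginary IP block.
attack_surface = [
    Signal("debug_en", "in"),
    Signal("key_out", "out"),
    Signal("cfg_wr", "in"),
]

# Placeholder concern IDs, invented for illustration only.
associations = {
    "debug_en": {"CONCERN-B", "CONCERN-A"},
    "key_out": {"CONCERN-C"},
}

threat_model = build_threat_model(attack_surface, associations)
for name, concerns in threat_model.items():
    print(name, concerns)
```

In this picture the attack surface is just a list of signals and the threat model is that same list annotated with concerns, which is why it seems natural to me that the annotated form could feed verification as well as documentation.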

A CIPSCE database does not yet exist; however, the paper illustrates an analysis using some example weaknesses for a couple of demonstration OpenCores test cases. The paper suggests that some of the attack surface signals may be discoverable by an EDA tool, whereas others might require hints to be added manually through attributes if the tool fails to discover certain potential problem signals. OK, an obvious safety net, though I’m not sure how much we want to be depending on safety nets in this area.
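
One way to keep an eye on how much the safety net is being used is to track where each attack surface signal came from. Again a rough sketch under my own assumptions: the `merge_attack_surface` function, the provenance labels and the signal names are hypothetical, and the paper does not prescribe any such bookkeeping.

```python
def merge_attack_surface(tool_found, manual_hints):
    """Union tool-discovered and manually attributed signals.

    Returns a dict mapping signal name to its provenance:
    "tool", "manual", or "tool+manual".
    """
    merged = {name: "tool" for name in tool_found}
    for name in manual_hints:
        merged[name] = "tool+manual" if name in merged else "manual"
    return merged


# Hypothetical inputs: what a tool reported and what a designer annotated.
tool_found = ["debug_en", "key_out"]
manual_hints = ["key_out", "scan_mode"]

surface = merge_attack_surface(tool_found, manual_hints)

# Signals only a human caught: the ones the safety net had to rescue.
manual_only = sorted(n for n, src in surface.items() if src == "manual")
print(surface)
print("manual only:", manual_only)
```

A long `manual_only` list would be a hint that the automated discovery is missing a lot, which is exactly the situation I would worry about.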

Hence my reference to RMM (maybe draft rev). This is an outline of a methodology with some very sensible components, especially the CIPSCE concept, but it is still largely an outline, so it’s too early to debate the details. Also, as a standard it’s agnostic to automation versus manual discovery and documentation, as it should be. Still, that creates some questions around what can and cannot be automated, as currently defined. But it is a start.

You can read the white paper HERE.
