Three-year-old CacheQ, founded by two former Xilinx executives and a clever group of engineers, produces a development environment for distributed heterogeneous compute aimed at software developers with limited knowledge of hardware architecture.

The promise of compiler tools for heterogeneous compute systems intrigued me when I first read about CacheQ at the end of 2019. The Xilinx connection was even more intriguing because I had worked for a hardware emulation scale-up company that powered its platform with high-performance Xilinx FPGAs. My relationship with Xilinx was a positive one, so I’m rooting for CacheQ.

Throughout last year, CacheQ quietly sold its FPGA-based computing platforms for life sciences, financial trading, government, oil and gas exploration and industrial IoT. It also began expanding beyond the FPGA space and recently announced a new feature of the CacheQ Compiler Collection that lets software developers build and deploy custom hardware accelerators for heterogeneous compute systems, including FPGAs, CPUs and GPUs. The advantage is that no manual code rewriting is required, and there’s no need for threading libraries or complex parallel execution APIs. Software developers can focus on their algorithms and let the compiler extract the parallelism needed to run on those cores.

According to the news release, the compiler generates executables from single-threaded C code that run on CPUs, taking advantage of multiple physical x86 cores, with or without hyperthreading, as well as Arm and RISC-V cores. It produces code for multicore processors of the same or different architectures, and usage can be benchmarked with runtime variables. This flexibility means that cores can be added for performance, or the number of cores reduced and the rest allocated to other processes for better performance per watt.

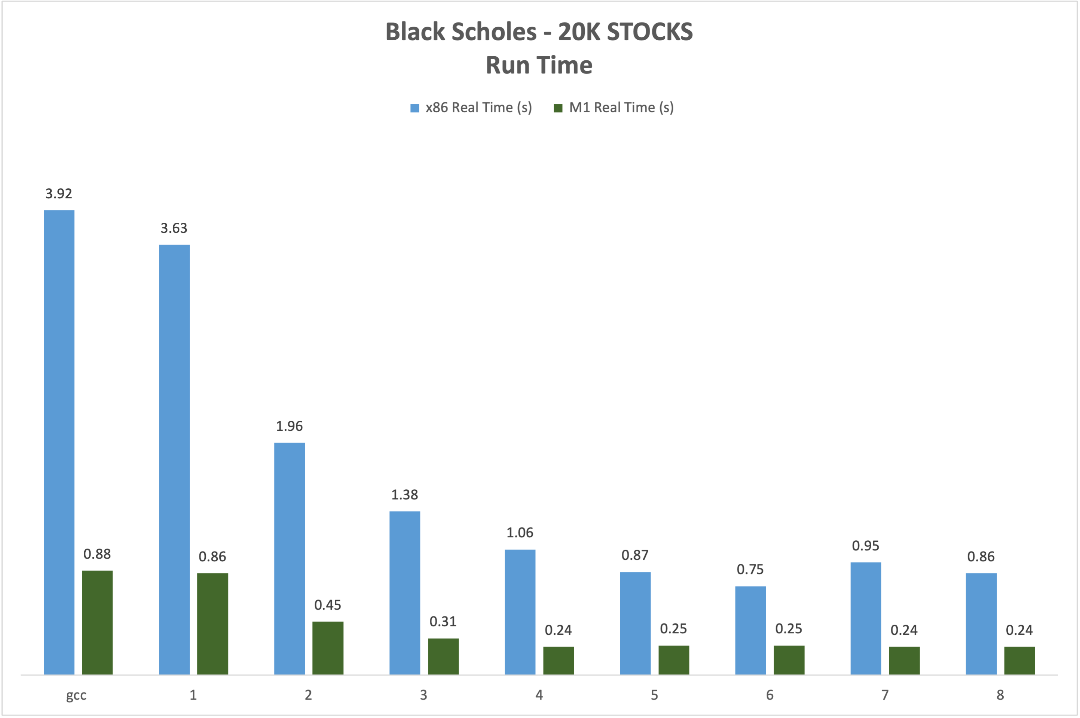

The compiler’s results are impressive. On an x86 processor with 12 logical cores, the compiled code shows a speedup of more than 486% over single-thread execution, and on an Apple M1 processor with eight Arm cores it is 400% faster than the single-threaded gcc build. The benchmarks use the Black-Scholes model, a widely used financial algorithm for pricing stock options.

Caption: The graph shows execution time for the Black-Scholes algorithm pricing 20,000 stock options. It compares a single thread compiled with gcc against the same code, compiled without modification, running on one to eight threads on an Intel i7 x86 CPU with 12 logical cores and on Apple M1 silicon with eight cores.

Source: CacheQ

The idea for the CacheQ Compiler Collection, says CacheQ’s CEO Clay Johnson, was something he and co-founder and CTO Dave Bennett had discussed for more than 10 years. While distributed processing offers numerous performance advantages, programming it remained a daunting challenge. They agreed the market was ready for a fast, intuitive, easy-to-use compiler targeting embedded software developers who were not hardware designers.

And that’s what CacheQ delivered. The CacheQ Compiler Collection is modelled after the gcc tool suite, with a user interface similar to those of common open-source compilers. It requires minimal code modification, which shortens development time and improves system quality.

In addition to the compiler, analysis tools help software developers pinpoint performance bottlenecks, for example by reporting which loops might not be threadable because of loop-carried dependencies. The collection contains a compiler, a partitioner for assigning code to heterogeneous compute elements, and tools for linting, profiling and performance prediction, capabilities that OpenMP, the primary competing technology, does not provide.

CacheQ’s website includes a video that explains how the compiler works.

Hardware emulation remains my area of expertise, and chip designs for those platforms aren’t a good fit for now. Nonetheless, CacheQ’s new compiler looks like a winner for embedded software developers who need help mastering parallel processing.

