I’ve spent many years in the ASIC business, and I’ve seen my share of complex chip tapeouts. All of these projects share one important challenge – compute requirements explode when you get close to the finish line. Certain tools need to run on the full-chip layout for final verification and the run times for those tools can get excessively long. The story is probably quite familiar to many. There is a fixed compute capacity available in any on-premise datacenter. That capacity is designed to handle typical workloads for the company and most of the time that works OK.

Near tapeout, however, run times for some key tools start to explode, thanks to the massive amount of data to be processed for the final full-chip runs. Adding more processors and memory helps a lot: going from 2,000 to 8,000 cores, for example. But who has 6,000 cores sitting idle when the whole compute farm is provisioned at around 2,000 cores? You get the picture.

Mentor’s Calibre DRC is one such tool that is a key part of the full-chip tapeout process. That’s why a recent white paper from Mentor entitled “Mentor, AMD and Microsoft Collaborate on EDA in the Cloud” caught my attention. This white paper presents a thoughtful and complete analysis of how to tame the peak load demand problem using cloud computing to access essentially unlimited compute power when needed.

The white paper is written by Omar El-Sewefy, a technical lead at Mentor who has been working on their advanced products for almost 12 years. Omar presents a thoughtful analysis of how to reduce long run times by exploiting the cloud-ready capabilities of Calibre’s physical verification technology. The analysis is a collaboration with AMD for processing power and Microsoft Azure for cloud infrastructure, so the results are based on mainstream, “available now” technology. The punch line is that a speed-up of 2X or more in physical verification cycle time can be achieved on a 7nm design.

While that’s an eye-catching statistic, the piece offers a lot more insight into how to achieve that improvement and how to even exceed it. A key part of the analysis is finding the right mix of compute resources for optimal, cost-effective improvement. More isn’t always better, and when you’re using the essentially infinite resources offered by the cloud, knowing what to ask for is very important.

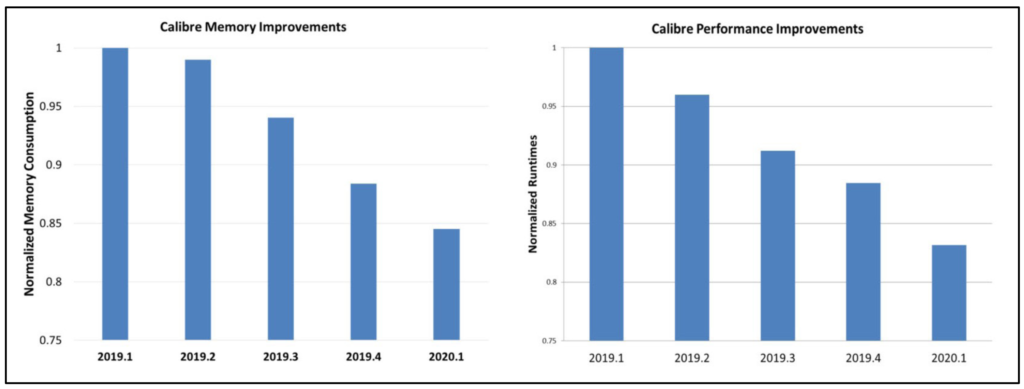

The best practices for using Calibre in the cloud presented in the white paper were developed using AMD EPYC™ servers running on the Azure cloud service environment. The latest foundry-qualified rule deck was also used to ensure the latest technology was applied. I mentioned Calibre’s cloud-readiness. Mentor has been steadily improving Calibre’s processing and memory efficiency to facilitate better results in the cloud. The accompanying figure illustrates normalized memory improvements on the left and normalized run time improvements on the right for Calibre releases over the past year.

The analysis presented in the white paper used various configurations of AMD’s EPYC 7551 processors running in the Azure cloud. The 2019.2 release of Calibre was used, with Calibre nmDRC™ providing hyper-remote distributed computing capability up to 4,000 cores. The results in the white paper focus on the optimal balance of processing power and memory to deploy for the case used. This is quite important. The resources offered by the cloud are essentially unlimited. This means the user must be thoughtful about what resources are used or project budgets can go out of control.

The white paper provides a lot of good details about how to achieve this balance and I highly recommend you download it to see the details for yourself. To whet your appetite, here are a few key observations:

- Regarding the number of cores, it turns out there is a “knee” in the scaling curve where the “best value for money” is achieved. For the design and node that was run, the knee was reached between 1,500 and 2,000 cores.

- Regarding memory usage, RAM requirements per remote core are reduced by increasing the total number of remote cores, which aligns with the overall scaling strategy.

To put all this in perspective, the original run time for this case could be 24 hours using a typical on-premise datacenter. By increasing cores to 2,000 in the cloud, the run time can be reduced to 12 hours, allowing twice as many runs per day. I mentioned that the Calibre 2019.2 release was used for these experiments. What if the latest release were used? That experiment yielded an additional 3-hour reduction with 2,000 cores. More improvements are possible by increasing the cores to 4,000, of course. That becomes a cost/benefit decision, one that is only possible when you are using the cloud. You can download the whole story here.
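For readers who like to see the arithmetic spelled out, here is a small Python sketch of the run-time figures quoted above. It is my own back-of-the-envelope illustration, not something taken from the white paper. The scenario labels and function names are hypothetical, and I am assuming the "additional 3-hour reduction" means 12 hours drops to 9 hours.

```python
# Back-of-the-envelope arithmetic for the run times quoted in the article.
# Scenario labels and helper names are illustrative, not from the white paper.
HOURS_PER_DAY = 24

scenarios = {
    "on-premise baseline": 24.0,
    "cloud, 2,000 cores, Calibre 2019.2": 12.0,
    # Assumption: the additional 3-hour reduction is taken off the 12-hour run.
    "cloud, 2,000 cores, latest release": 12.0 - 3.0,
}


def runs_per_day(run_time_hours):
    """How many back-to-back full-chip runs fit into one day."""
    return HOURS_PER_DAY / run_time_hours


def speedup(baseline_hours, run_time_hours):
    """Speed-up relative to the baseline run time."""
    return baseline_hours / run_time_hours


baseline = scenarios["on-premise baseline"]
for label, hours in scenarios.items():
    print(
        f"{label}: {hours:.0f} h, "
        f"{runs_per_day(hours):.2f} runs/day, "
        f"{speedup(baseline, hours):.2f}x vs. baseline"
    )
```

Under that reading, the 2,000-core cloud run with the latest release would work out to roughly 2.7X faster than the 24-hour baseline. Whether the extra cores are worth paying for is exactly the cost/benefit question the white paper digs into.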
