

On Star Trek, when the crew asked the computer to do something, they never heard it say “Sorry, you have no photon torpedoes connected to your account”. Yet this sort of thing happens at my house whenever I forget the exact name of a specific light. How did I get here?

I was reluctant to buy a “home assistant” for all the reasons…

I’ve noticed hybrid solutions popping up recently (I’m reminded of NXP’s crossover MCU released in 2017). These are generally a fairly clear indicator that market needs are shifting; what once could be solved with an application processor or controller or DSP or whatever, now needs two (or more) of these. In performance/power/price-sensitive…

In the normal evolution of specialized hardware IP functions, initial implementations start in academic research or R&D in big semiconductor companies, motivating new ventures specializing in functions of that type, who then either build critical mass to make it as a chip or IP supplier (such as Mobileye – initially)…

Let The AI Benchmark Wars Begin! by Michael Gschwind on 01-01-2019 at 7:00 am. Categories: AI

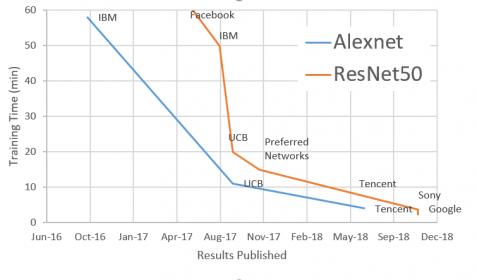

Why benchmark competition enables breakthrough innovation in AI. Two years ago I inadvertently started a war. And I couldn’t be happier with the outcome. While wars fought on the battlefield of human misery and death never have winners, this “war” is different. It is a competition of human ingenuity to create new technologies …

One of the more entertaining things I get to observe in the semiconductor ecosystem is competitive customer evaluations of tools and IP. Seriously, this is where the rubber meets the road no matter what the press releases say.

This time it was emulators, one of the most interesting EDA market segments since there is no dominant…

AI at the Edge by Tom Dillinger on 12-20-2018 at 7:00 am. Categories: AI, eFPGA, Flex Logix, IP

Frequent SemiWiki readers are well aware of the industry momentum behind machine learning applications. New opportunities are emerging at a rapid pace. High-level programming language semantics and compilers to capture and simulate neural network models have been developed to enhance developer productivity (link). Researchers…

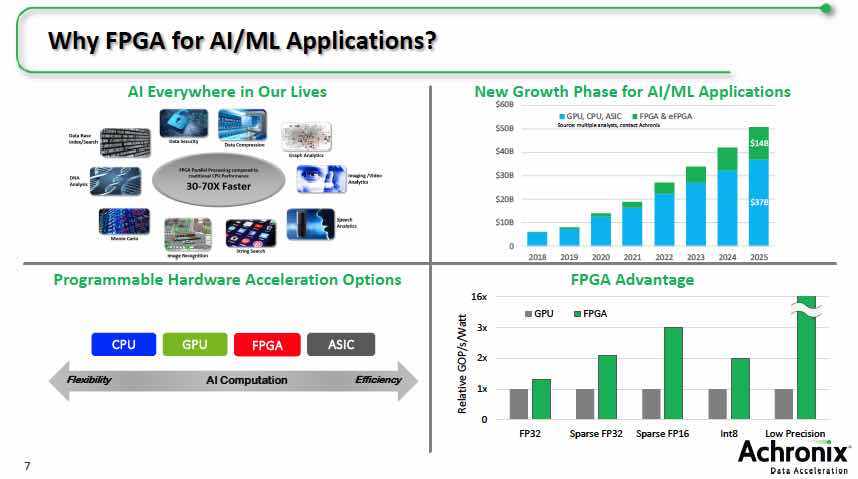

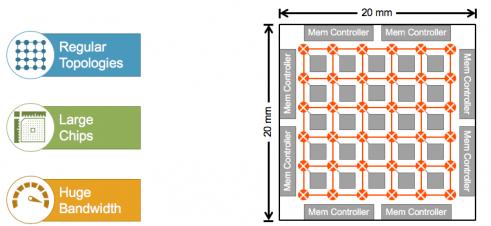

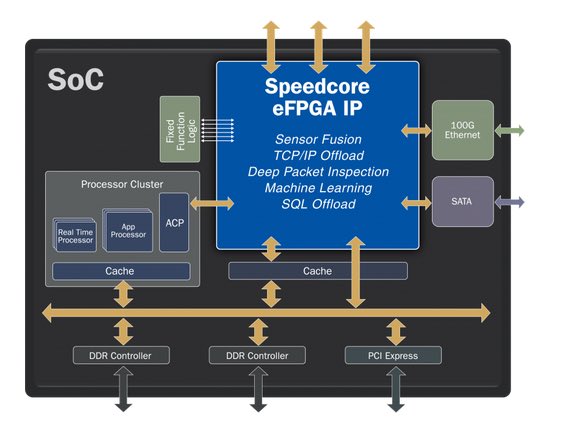

With their current line-up of embeddable and discrete FPGA products, Achronix has made a big impact on their markets. They started with their Speedster FPGA standard products, and then essentially created a brand-new market for embeddable FPGA IP cores. They have just announced a new generation of their Speedcore embeddable…

I wrote a while back about some of the more exotic architectures for machine learning (ML), especially for neural net (NN) training in the data center but also in some edge applications. In less hairy applications, we’re used to seeing CPU-based NNs at the low end, GPUs most commonly (and most widely known) in data centers as the workhorse…

In every semiconductor-related field, innovation is the name of the game. Academic, non-profit and government research has been a consistent source of innovation. Look back at the US space program, basic science research and even military programs to see where much of the foundation of our current technological age came from.…

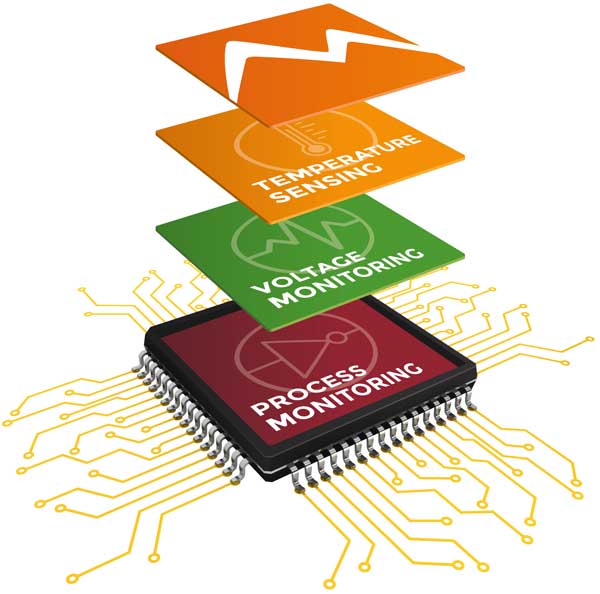

PVT – depending on what field you are in, those three letters may mean totally different things. In my undergraduate field of study, chemistry, PVT meant Pressure, Volume & Temperature. Many of you probably remember PV=nRT, the dreaded ideal gas law. However, anybody working in semiconductors knows that PVT stands …

CEO Interview with Aftkhar Aslam of yieldWerx