My dog yawns every time I say Semiconductor or Semiconductor Supply Chain. Most clients say, “Yawn… Don’t pontificate – pick the Nasdaq winners for us!”

Will Nvidia be overtaken by the new AI players?

If you follow along with me, you might gain some insights into what is happening in AI hardware. I will leave others to do the technology reviews and focus on what the supply chain is whispering to us.

The AI processing market is young and dynamic, with several key players, each with unique strengths and growth trajectories. Usually, the supply chain has time to adapt to new trends, but the AI revolution has moved too fast for that.

Nvidia’s data centre business is growing insanely, with very high margins. It is transitioning from H100 to Blackwell this quarter, which could push margins even higher. AMD’s business is also growing, although at a lower rate. Intel is all talk, even though expectations for Gaudi 3 are high.

All the large cloud providers are fighting to get Nvidia’s AI systems, but they find them too expensive, so they are developing their own chips. Outside the large companies, many new players are popping up like weeds.

One moment, Nvidia is invincible; the next moment, they will lose because of their software… or their hardware… or…

The only absolute is that everything absolutely revolves around Nvidia, and everybody has an opinion about how long Nvidia’s rule will last. A trip into the AI basement might reveal insights that can help predict the future of AI hardware.

The Semiconductor Time Machine

The Semiconductor industry boasts an intricate global supply chain with several circular dependencies that are as fascinating (yawn) as they are complex. Consider this: semiconductor tools require highly advanced chips to manufacture even more advanced chips, and Semiconductor fabs, which are now building chips for AI systems, need AI systems to function. It’s a web of interdependencies that keeps the industry buzzing.

You have heard: “It all starts with a grain of sand.” That is not the case – it starts with an incredibly advanced machine from the university cities of Northern Europe. That is not the case either. It begins with a highly accurate mirror from Germany. You get the point now. Materials and equipment propagate and circulate until chips exit the fabs, get mounted into AI server systems that come alive and observe the supply chain (I am confident you will check this article with your favourite LLM).

The time from when a tool is made until it starts producing chips is long: a few quarters in the best case, and up to a few years. This long lead time allows observation. It is possible to see materials and subsystems propagate through the chain and make predictions of what will happen.

Although these observations do not always provide accurate answers, they are an excellent tool for verifying assumptions and adding insight to the decision-making process.

The challenge is that the supply chain and the observational model are ever-changing. Although this allows me to continue feeding my dog, a new model must be applied every quarter.

The Swan and the ugly ducklings

I might lose a couple of customers here, but there is an insane difference between Nvidia and its nearest contenders. The ugly ducklings all have a chance of becoming swans, but not in the next couple of years.

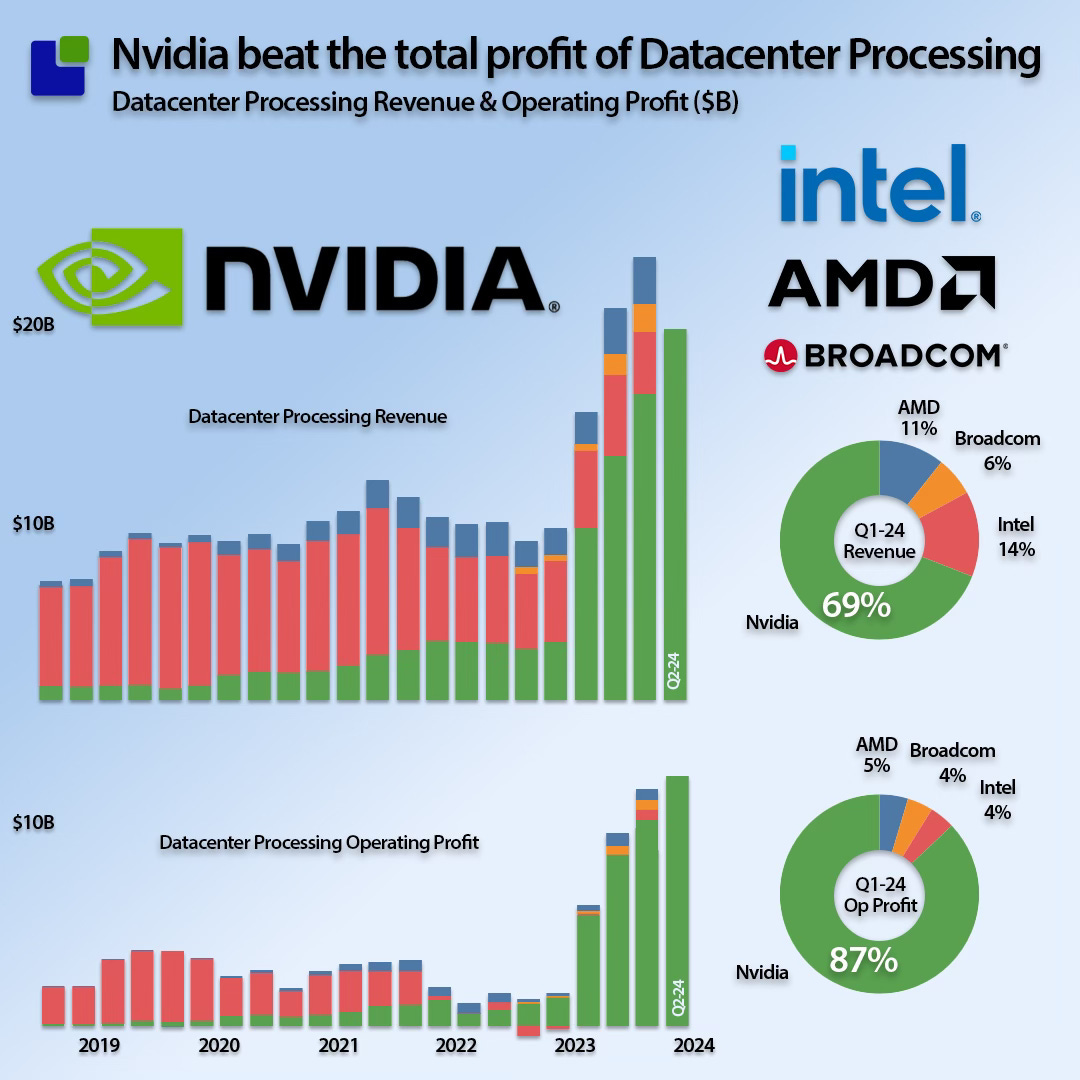

The chart shows the latest scorecard of processing revenue. It is a market view that excludes internally manufactured chips that are not traded externally.

This view is annoying for AMD and Broadcom but lethal for Intel. Intel can no longer finance its strategy through retained earnings and must engage with the investor community to obtain new financing. Intel is no longer the master of its destiny.

The Bad Bunch

These are some of Nvidia’s critical customers and other data centre owners who are hesitant to accept the new ruler of the AI universe and have started developing in-house architectures.

Nvidia’s four largest customers each have in-house architectures at different stages of development or production:

- Google TPU (Tensor Processing Unit)

- Amazon Inferentia and Trainium

- Microsoft Maia

- Meta MTIA

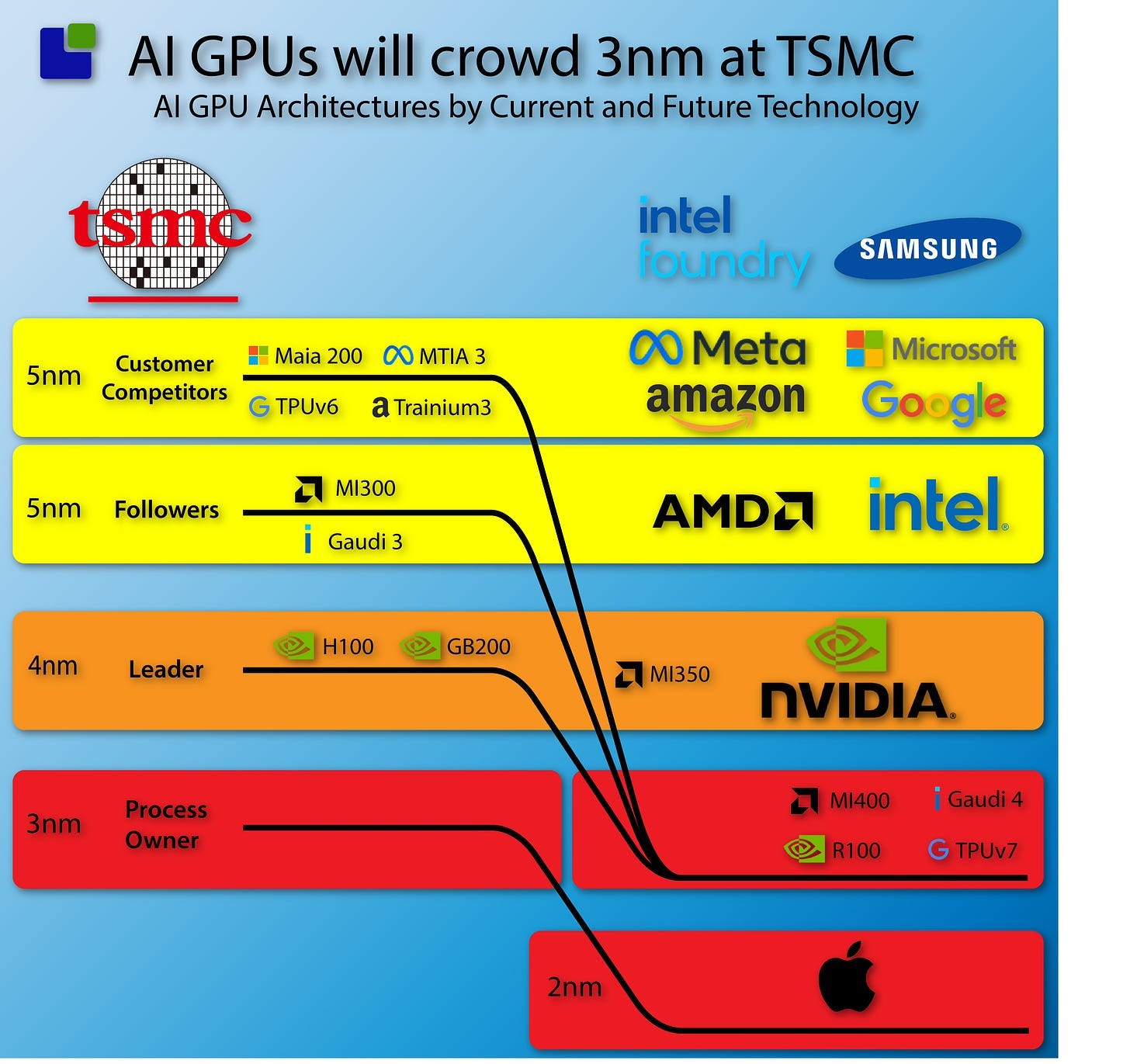

The chart gives a short overview of the timing of these architectures.

Unlike the established chip manufacturers, the Bad Bunch mostly lack manufacturing traction; only Google has real traction, with its TPU architecture. This research points to rumours that the traction is more than ordinary.

Let’s go and buy some semiconductor capacity.

As the semiconductor technology needed for GPU silicon is incredibly advanced, all the new players will clearly have to buy semiconductor capacity. An excellent place to start is TSMC of Taiwan. Later, TSMC will be joined by Samsung and Intel, but for now, TSMC is the only show in town. Intel is talking a lot about AI and about becoming a foundry, but the sad truth is that it currently gets 30% of its chips made externally, and it will take some time before that changes. Even when Intel has the manufacturing capacity, it still needs customers to switch, which is neither easy nor cheap. With new ASML equipment in Ireland and Israel, those are likely the first Intel locations to go online.

The problem for the new players is that access to advanced semiconductor capacity is a strategic game based on long-term alliances. It is not like buying potato chips.

TSMC’s most important alliances

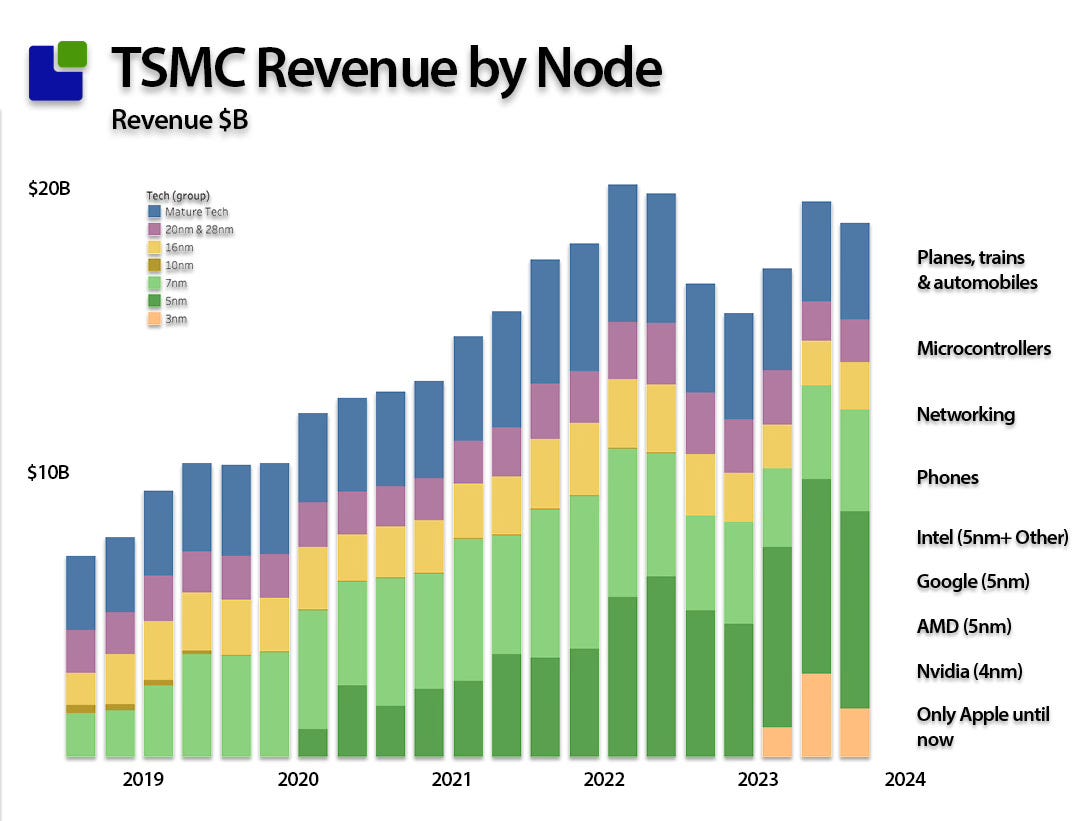

The best way to understand TSMC’s customer relationships is through revenue by technology.

TSMC’s most crucial alliance has been with Apple. As Apple moved from dependence on Intel to reliance on its home-cooked chips, the alliance grew to the point that Apple is the only customer with access to 3nm, TSMC’s most advanced process. This will change as TSMC introduces a 2nm technology, which Apple will try to monopolise again. You can rightfully taunt the consumer giant for not being sufficiently innovative or for having missed the AI transition, but the most advanced chips ever made can only be found in Apple products, and that is not about to change soon.

As a side note, it is interesting to see that TSMC’s $8.7B/quarter High Performance Computing (HPC) division fuels a combined data centre business approaching $25B, all of the Mac production at $7.5B, plus some other products. TSMC is not capturing as much of the value as its customers are.
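To put the side note in numbers, here is a minimal Python sketch that divides TSMC’s HPC revenue by the downstream revenue it enables, using only the figures quoted above. The helper `value_capture_share` is hypothetical, treating all three figures as quarterly is an assumption, and the result is rough: "approaching $25B" is not an exact number, and the "other products" are left out.

```python
# Figures quoted in the article, in USD billions.
# ASSUMPTION: all three are treated as quarterly figures.
TSMC_HPC_REVENUE = 8.7       # TSMC High Performance Computing revenue per quarter
DATA_CENTRE_REVENUE = 25.0   # customers' combined data centre business ("approaching $25B")
MAC_REVENUE = 7.5            # Apple's Mac business


def value_capture_share(supplier_revenue: float, *downstream: float) -> float:
    """Hypothetical helper: supplier revenue as a fraction of the downstream revenue it fuels."""
    total = sum(downstream)
    if total <= 0:
        raise ValueError("downstream revenue must be positive")
    return supplier_revenue / total


share = value_capture_share(TSMC_HPC_REVENUE, DATA_CENTRE_REVENUE, MAC_REVENUE)
print(f"TSMC HPC revenue is about {share:.0%} of the downstream revenue it fuels")
```

With these inputs the ratio comes out at roughly 27%, or about 27 cents of TSMC revenue for every dollar of data centre and Mac business it fuels.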

Nvidia and TSMC

The relationship between Nvidia and TSMC is also very strong, and if Nvidia is not already TSMC’s most important customer, it will be very soon. The prospects for Nvidia’s business are better than those for Apple’s.

Both the Apple and Nvidia relationships with TSMC are at the C-level as they are of strategic importance for all the companies. It is not a coincidence that you see selfies of Jensen Huang and Morris Chang eating street food together in Taiwan.

Just as Apple has owned the 3nm process at TSMC, Nvidia has owned the 4nm process. Although Samsung is trying to attract Nvidia, it is unlikely to succeed, as the TSMC relationship has other attractions that we will dive into later.

TSMC and the rest

With a long history and a good outlook, the AMD relationship is also strong, while the dealings with Intel are more interesting. TSMC has a clear strategy of not competing with its customers, and Intel will certainly compete with TSMC once Intel Foundry Services becomes more than a wet dream. Intel gets 30% of its chips made externally, and although the company does not disclose where, it is not hard to guess. TSMC will manufacture for Intel until Intel is strong enough to compete with TSMC. Although TSMC is not worried about competition from Intel, I am sure it will keep some distance, and Intel is not first on TSMC’s dance card.

The Bad Bunch is also on TSMC’s customer list, but without the same traction as the semiconductor companies. As a result, they will not be in a strong position if foundry capacity becomes a concern.

As Apple moves to 2nm, it will release capacity at 3nm. However, the size of that capacity is still unknown, and 3nm revenue is a modest $2B/quarter or so; the node needs to be expanded dramatically to accommodate all the new architectures that plan to move into the 3nm hotel. Four companies are committed to 3nm, and the rest will likely follow shortly.

TSMC expects the 2024 3nm capacity to be 3x the 2023 capacity. Right now, there is sufficient capacity at TSMC, but that can change fast. Even though Intel and Samsung lurk in the background, they do not have much traction yet. Samsung has secured Qualcomm for its 2nm process, and Intel has won Microsoft. It is unclear if this includes the Maia AI processors.

TSMC’s investments

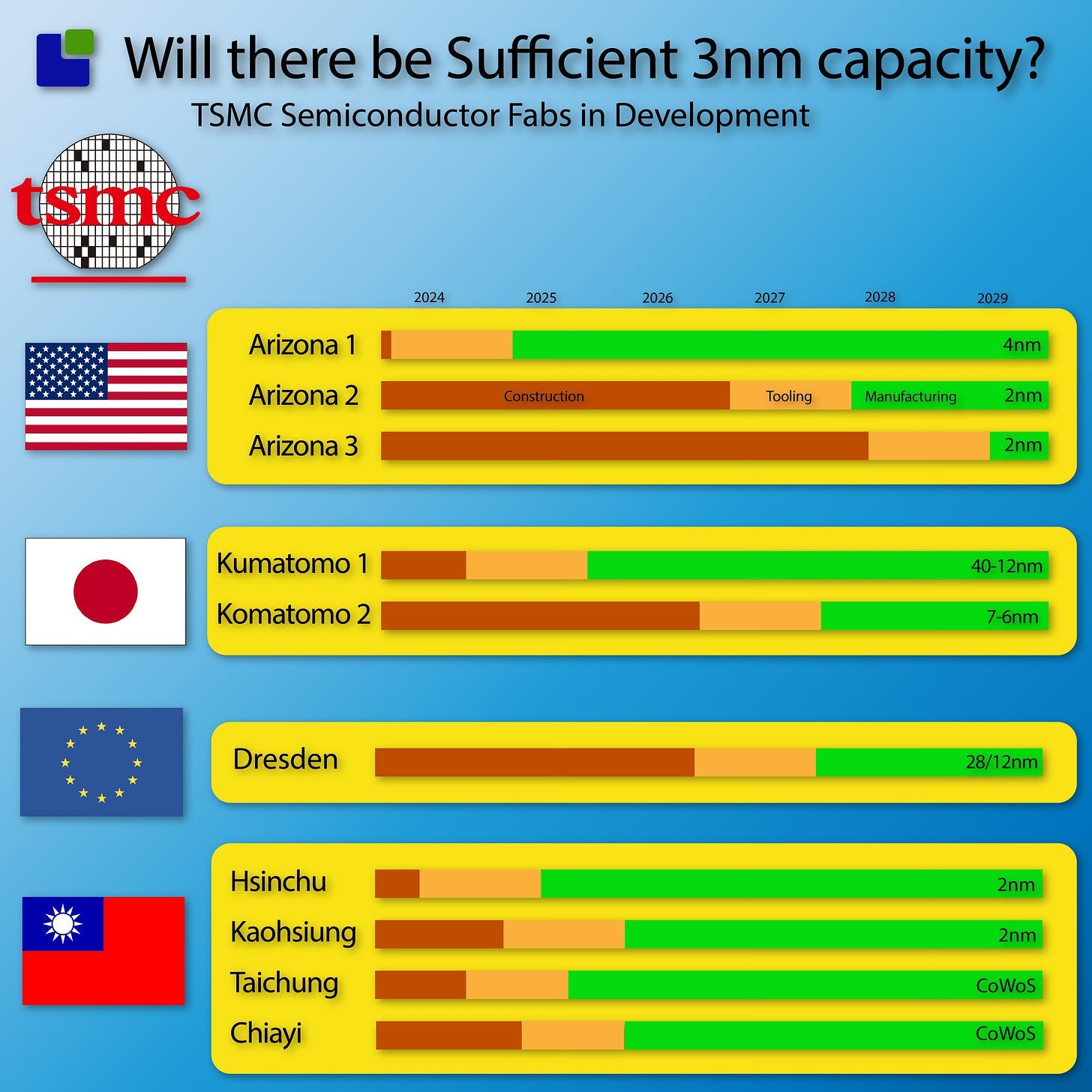

TSMC is constantly expanding its capacity, and with so many projects underway, it can be hard to determine whether the expansion is sufficient to fuel the AI revolution.

These are TSMC’s current activities. Apart from showing that Taiwan’s chip supremacy will last a few more years, they show that the new 2nm technology needed to relieve 3nm capacity is over a year away.

There are other ways of expanding capacity. It can be extended by adding more or faster production lines to existing fabs. A dive into another part of the supply chain can help understand if TSMC is adding capacity.

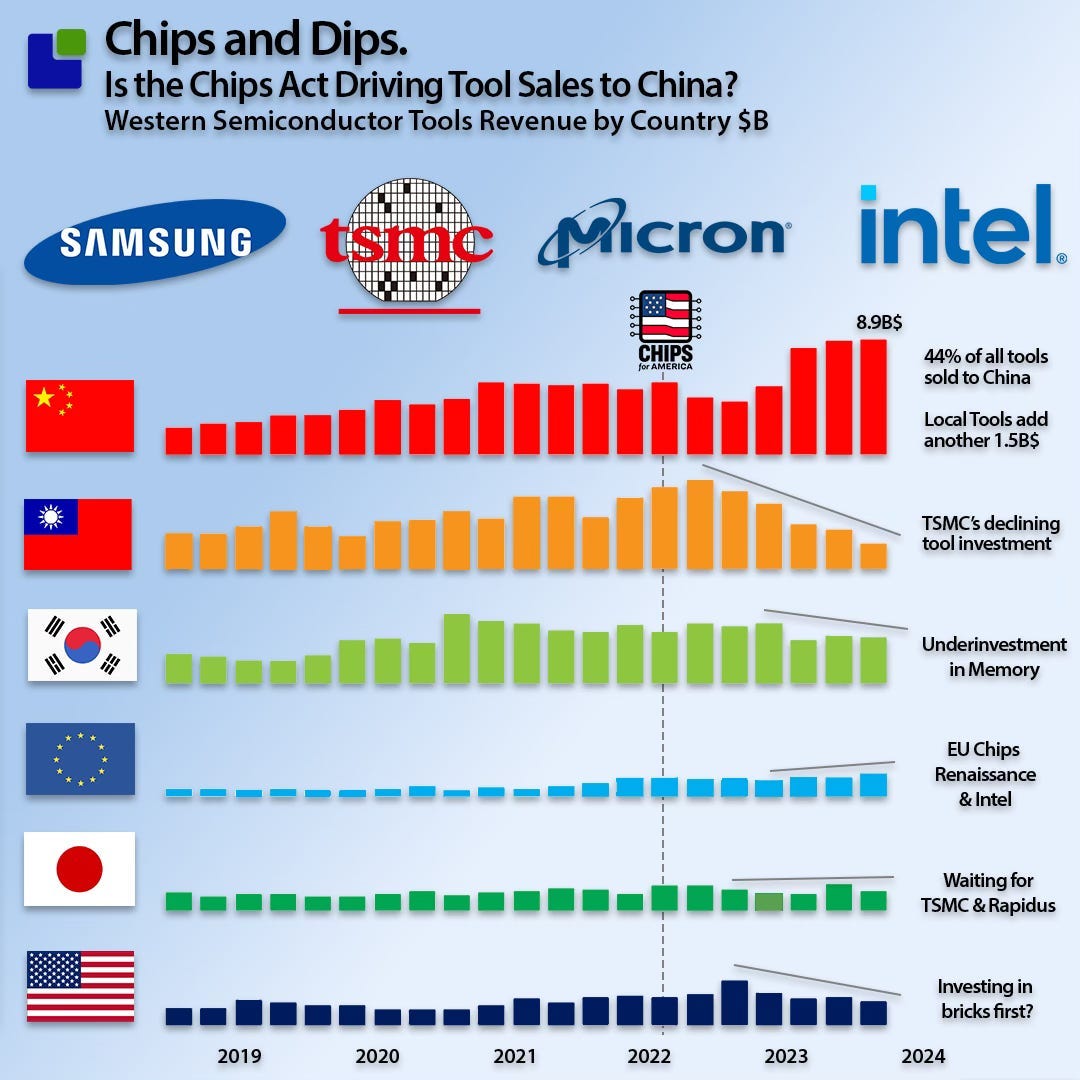

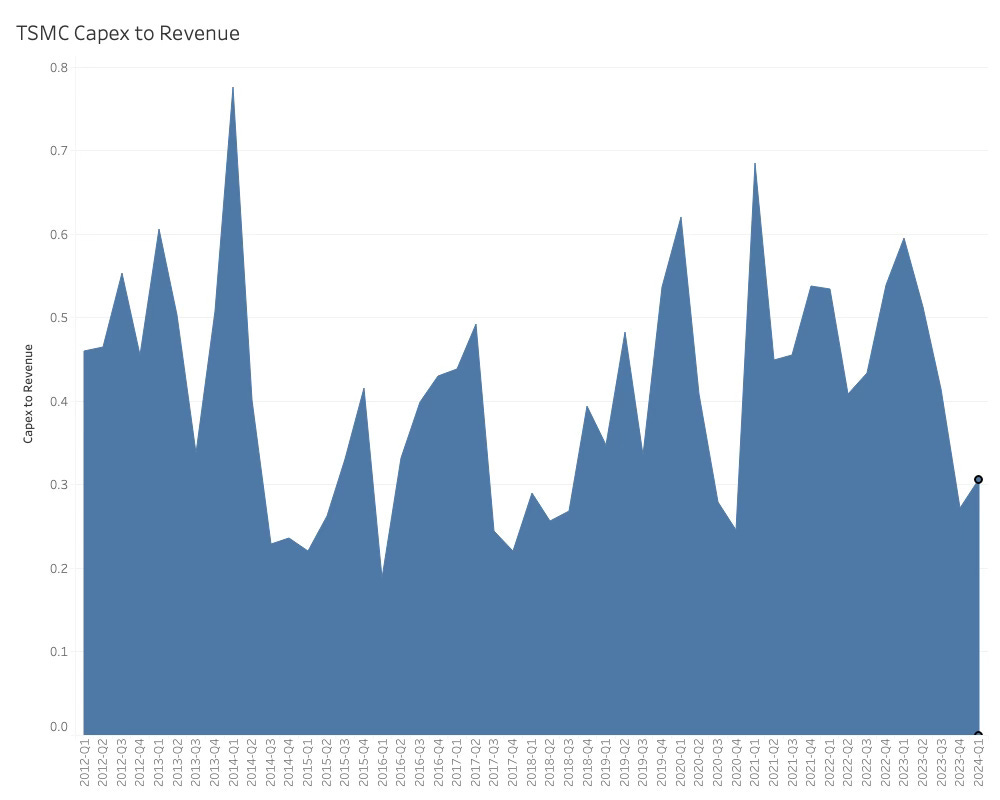

Combined tool sales are down, mostly in TSMC’s home base, Taiwan, and in the other 2nm expansion area, the USA. This matches TSMC’s CapEx-to-revenue ratio (the share of revenue spent on capital investments: new tools and factories).
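The CapEx-to-revenue ratio mentioned above is a simple division. The Python sketch below relies on nothing beyond that definition; the function name `capex_to_revenue` and the quarterly figures are hypothetical placeholders, not TSMC data.

```python
def capex_to_revenue(capex: float, revenue: float) -> float:
    """Return the share of revenue spent on capital investment (new tools and factories)."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return capex / revenue


# HYPOTHETICAL placeholder figures (CapEx, revenue) in USD billions; NOT actual TSMC data.
quarters = {"Quarter A": (8.0, 20.0), "Quarter B": (6.0, 20.0)}

for name, (capex, revenue) in quarters.items():
    # Format the ratio as a whole-number percentage.
    print(f"{name}: CapEx/revenue = {capex_to_revenue(capex, revenue):.0%}")
```

A falling ratio while revenue holds steady means a smaller share of revenue is going into new tools and factories, which is the kind of signal that lines up with weaker tool sales.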

Although TSMC is adding a lot of capacity, it might be too late to allow all the new players to get the capacity they need for their expansion. The low tool sales in Taiwan suggest that short-term capacity is not on the TSMC agenda; rather, the company is focusing on the Chips Act-driven expansion in the USA, which will take longer to come online.

Samsung’s foundry business is not attracting much attention, and Intel is some time away from making a difference. Even though the long-term outlook is good, there is good reason to fear that investment in short-term expansion of leading-edge semiconductor capacity is currently insufficient.

A shortage can seriously impact the new players in AI hardware.

The current capacity limitation

It is not silicon that is limiting Nvidia’s revenue at the moment. It is the capacity of the High-Bandwidth Memory and the advanced packaging needed in the new AI servers.

Electrons and distance are not friends

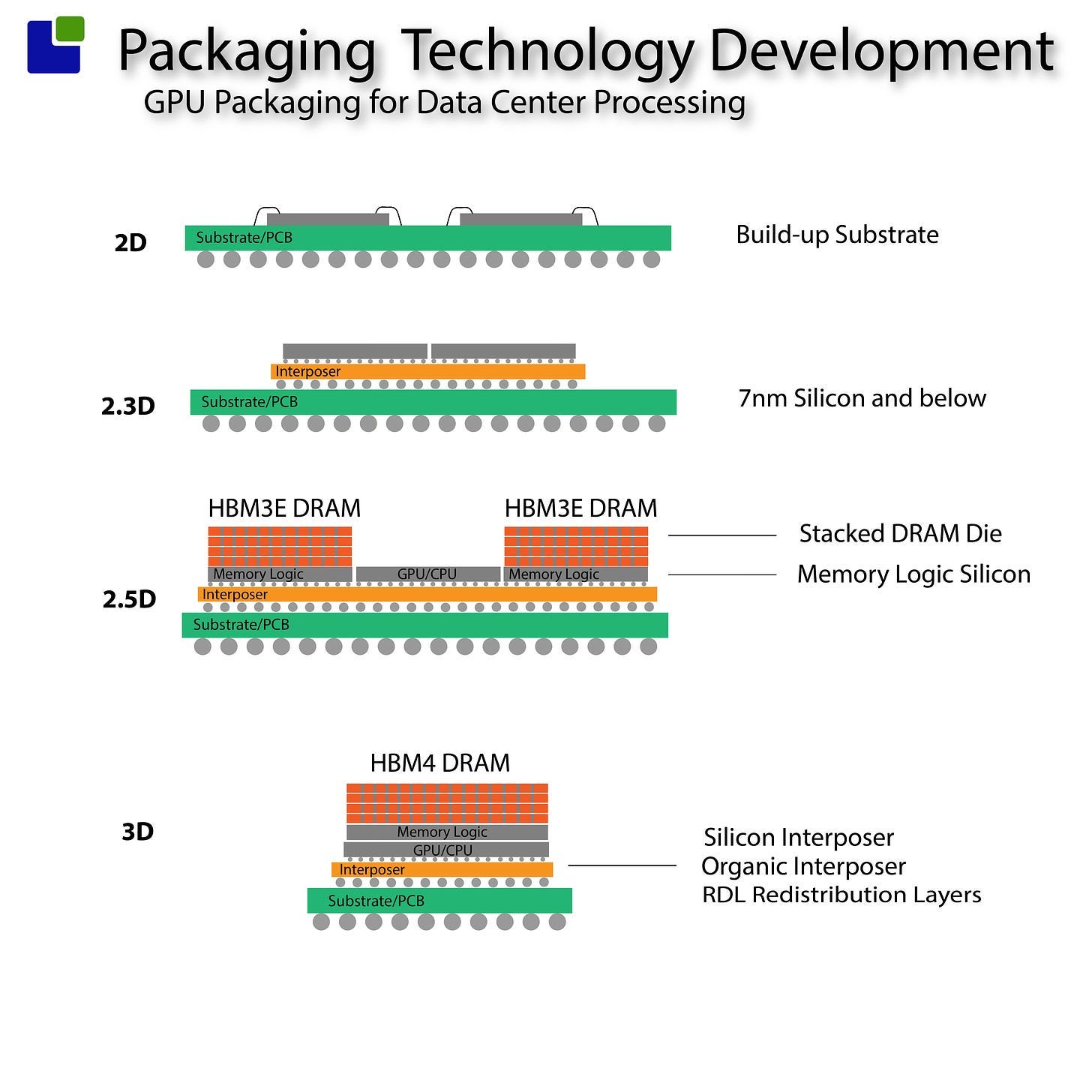

The simplest way of describing this is that electrons and distance are not friends. If you want high speed, you need to get the processors close to each other and close to a lot of high-bandwidth memory. To do so, semiconductor companies are introducing new ways of packaging the GPUs.

The traditional way is to place dies on a substrate and wire them together (2D), but this does not get them close enough for AI applications. Current AI systems use 2.5D technology, where stacks of memory are mounted beside the GPU and communicate through an interposer.

Nvidia is planning to go full 3D with its next-generation processor, which will have memory on top of the GPU.

Well, as my boss used to say: “That sounds simple, now go do it!” The packaging companies have as many excuses as I have.

Besides having to flip and glue tiny dies together and pray that they work, the packaging companies must place the DRAM extremely close to the oven: the GPU.

“DRAM hates heat. It starts to forget stuff about 85°C, and is fully absent-minded about 125°C.”

Marc Greenberg, Group Director, Cadence

This is why you also hear about the liquid cooling of Nvidia’s new Blackwell.

The bottom line is that capacity for this technology is extremely limited at present. Only TSMC is capable of implementing it (CoWoS, Chip-on-Wafer-on-Substrate, in TSMC terminology).

This is no surprise to Nvidia, which has taken the opportunity to book 50% of TSMC’s CoWoS capacity for the next 3 (three?) years in advance.

Current AI supply chain insights

Investigating the supply chain has allowed us to peek into the future, as far as 2029, when the last of the planned TSMC fabs goes into production. The focus has been on the near term, until the end of 2025, and this is what I base my conclusions on (should anybody be left in the audience). Feel free to draw different conclusions based on the facts presented in this article:

- Nvidia is the only show in town and will continue to be so for the foreseeable future.

- Nvidia is protected by its powerful supplier relations, built over many years.

- AMD will do well but lacks scale. Intel… well… it will take time and money that they don’t have. If they pull it off, they will be a big winner.

- The Bad Bunch like Nvidia’s systems but not so much the pricing, so they are trying to introduce home-cooked chips.

- The current structure of the AI supply chain will make it very difficult for the Bad Bunch to scale their chip volumes to a meaningful level.

- The CoWoS capacity is Nvidia’s joker: three years of capacity secured, and they can outbid anybody else for additional capacity.

Disclaimer: I am a consultant working with business data on the Semiconductor Supply Chain. I own shares in every company mentioned and have had them for many years. I don’t day trade and don’t make stock recommendations. However, investment banks are among my clients, using my data and analysis as the basis for trades and recommendations.
