Something is brewing in automotive electronics. Within a one-month window most of the product announcements and pitches to which I am being invited are on automotive topics. Automotive markets have long been one of the primary targets for suppliers to system designers, but this level of alignment in announcements seems more than a coincidence. Pulin Desai (Group Director, Product Marketing and Biz Dev for Tensilica Vision and AI DSPs at Cadence) helped me understand some of the motivation. He sees two key drivers in automotive: demand for a consolidated view of software development across a diverse set of platforms (central, zonal and edge) and demand to limit hardware development cost. The Cadence Tensilica group has expanded its product line to meet these trends, particularly in support of advanced perception around the car and in the cabin.

Managing “smarter everywhere” versus software complexity and cost

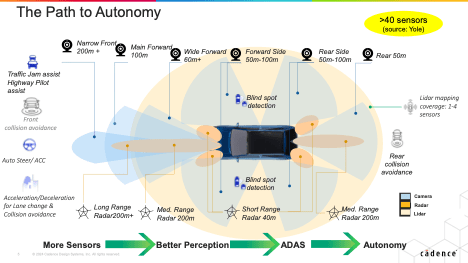

Intelligence is touching everything in the car. Emerging trends include intelligent backup cameras and rear-view mirrors, now with object recognition to detect a child or a dog behind the car. Inside the cabin, driver monitoring systems (DMS) sense when the driver is not paying attention, and occupancy monitoring systems (OMS) detect whether you have left a child in the car when you leave. Interestingly, both DMS and OMS use a combination of video and radar monitoring.

For perception outside the car, we’re already familiar with forward-facing video paired with object detection to sense collision risks and for lane keeping. These now-routine safety features are being coupled with 4D imaging radar (4DR), adding a rich and less weather-sensitive complement to video perception. Video plus 4DR will offer new levels of capability and safety in features such as adaptive cruise control, essential to further advances in ADAS and autonomy.

Combined, these are all appealing features, but OEMs/Tier1s are eventually stuck with integrating everything in software to manage control through the cockpit screen and other controls: navigating, playing high-quality audio, saving power, handling safety, activating AC, etc. Making that integration manageable demands a unified, software-defined approach across all these distributed functions.

On the hardware front, while there is a lot of buzz around AI suppliers, AI parts alone are far from enough to build what the OEMs need: signal processing for object recognition before AI, specialized accelerators to handle 4D point clouds, and efficient, cost-effective inferencing for the non-generative AI tasks. Add to that all the other necessary compute functions (CPU cluster, memory management, communication, etc.). All of this must deliver the performance and low power of the most advanced semiconductor processes, integrated in a single-package solution to keep costs down.

How do OEMs square this circle? Pulin says he sees the leaders each pushing their own common, differentiated platform architectures, hosting distributed compute around the car, across model lines, and into the future, while also reducing cost and keeping car prices reasonably in line with our expectations. Very interesting.

Stepping up to the trend

Think about vision+radar applications as an illustrative example. However partitioned, the system starts with a camera for image capture followed by a complex chain of image signal processing functions (DSP-based) – before any AI operations can begin. The radar starts with an antenna (maybe 16×16 receive/transmit) followed by an equally complex chain of signal processing to generate a 4D radar point cloud, again before AI-based recognition.

Radar signal processing uses complex Fourier analysis, an algorithm manageable on a DSP for 1D or 2D radar with a relatively narrow field of view and low resolution. However, 4D imaging radar has a wider field of view and higher resolution, generating huge amounts of data per frame, for which additional hardware assistance is needed to achieve acceptable frame rates.
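To make the Fourier step concrete, here is a minimal sketch of range-Doppler processing for a single receive channel, written in plain Python. It is an illustration under stated assumptions, not a description of how the Tensilica cores or the Vision 4DR accelerator implement it. The function names (`dft`, `range_doppler_map`, `simulate_target`, `peak`) and the toy cube size are hypothetical, and a naive O(N²) DFT stands in for the FFT a real pipeline would use.

```python
import cmath
import math


def dft(x):
    """Naive O(N^2) discrete Fourier transform of a complex sequence."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def range_doppler_map(cube):
    """Turn cube[chirp][sample] into magnitudes map[doppler_bin][range_bin]."""
    n_chirps, n_samples = len(cube), len(cube[0])
    # Range transform: one DFT per chirp, along fast time (samples).
    range_spectra = [dft(chirp) for chirp in cube]
    # Doppler transform: one DFT per range bin, along slow time (chirps).
    doppler_cols = [
        dft([range_spectra[c][r] for c in range(n_chirps)])
        for r in range(n_samples)
    ]
    return [
        [abs(doppler_cols[r][d]) for r in range(n_samples)]
        for d in range(n_chirps)
    ]


def simulate_target(n_chirps, n_samples, range_bin, doppler_bin):
    """Hypothetical noise-free return from one target centered on a bin."""
    return [
        [
            cmath.exp(2j * math.pi * (range_bin * s / n_samples
                                      + doppler_bin * c / n_chirps))
            for s in range(n_samples)
        ]
        for c in range(n_chirps)
    ]


def peak(rd_map):
    """Return (doppler_bin, range_bin) of the strongest cell."""
    _, d, r = max(
        (value, d, r)
        for d, row in enumerate(rd_map)
        for r, value in enumerate(row)
    )
    return d, r


if __name__ == "__main__":
    cube = simulate_target(n_chirps=8, n_samples=16, range_bin=5, doppler_bin=3)
    print(peak(range_doppler_map(cube)))
```

This covers only two dimensions, range and velocity. As generally described, a 4D imaging radar repeats similar transforms across its antenna channels to resolve azimuth and elevation as well, so the work per frame grows with the channel count on top of finer range and Doppler resolution. That growth is the scaling pressure behind the need for hardware assistance.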

Cadence has just announced their Tensilica Vision 331 and Vision 341 DSPs to support signal processing for computer vision and radar imaging together with entry-level AI (such as driver face-id) and sensor fusion. Both cores deliver improved power, performance and area for high-end multi-sensor processing. They also offer a radar boost mode through instruction set optimizations, delivering up to 4X performance improvement for Vision 331 and up to 6X performance improvement for Vision 341. Plugging in an optional Vision 4DR accelerator for big radar data cubes delivers a further 4X in performance for the 341 core (together with a 6X area advantage) and up to 7X performance boost for the 331 core. The DSP cores also extend AI performance up to 80 TOPS when paired with a single (Cadence) Neo NPU.

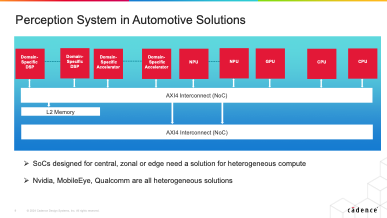

How do such options help OEMs reduce chip types while supporting scalability? Before we even get to chiplets and multi-die solutions, consider a single-chip heterogeneous IP-based design. Routine stuff for those of us in the SoC design world. Unfortunately, it seems saturation media coverage of a certain GPU for AI has led some folks outside the chip world to believe that GPUs are the necessary, maybe even sufficient, minimum for any automation, not realizing that outside of datacenters, heterogeneous design is king (just look at smartphones).

Heterogeneous design (image above) builds around a mix of multiple core functions: multiple DSPs to handle the signal processing for perception-heavy applications, representative of the bulk of new and emerging auto electronics; domain-specific accelerators to ensure high frame-rate throughput; compact and cost-effective neural accelerators (not GPUs) to handle the bulk of convolutional recognition tasks; a GPU if needed for high-end (transformer) AI models; communication IPs; and CPUs for control and memory management.

This general approach to system-on-chip (SoC) design is already well proven in many proprietary and off-the-shelf chips, including those from automotive semiconductor suppliers. It’s not a big leap to imagine that OEMs could design (or outsource design for) their own unique system architectures following a similar approach. That would provide them with one multi-purpose design whose cost they can amortize across most applications in the car, excepting perhaps central compute (big, expensive devices) and drivetrain MCUs (tiny, very low-cost devices).

What about a consolidated view of software?

For an OEM software developer, if almost every chip in a car is an instance of their common platform chip, this problem is largely solved. Of course, the underlying IPs should share common, standards-based APIs: OpenCL for both DSPs and GPUs, Simulink for connection to MATLAB, together with the usual AI model interfaces (ONNX, TensorFlow and PyTorch). This is exactly what the whole Tensilica IP line supports, together with the NeuroWeave software compiler toolchain to map standard trained networks (TensorFlow, MXNet, PyTorch, Caffe2 and JAX) to the target device.

I can’t help thinking that the auto OEMs must be drawing inspiration from Amazon AWS’s success with their Graviton platform, now distributed widely across their datacenters and a unified focus for innovation in following generations (a trend also happening at other hyperscalers). Common heterogeneous-architecture auto platforms will be quite different in some ways, but the long-term value of a common platform is not so different.

You can learn more about the latest Tensilica release HERE.
