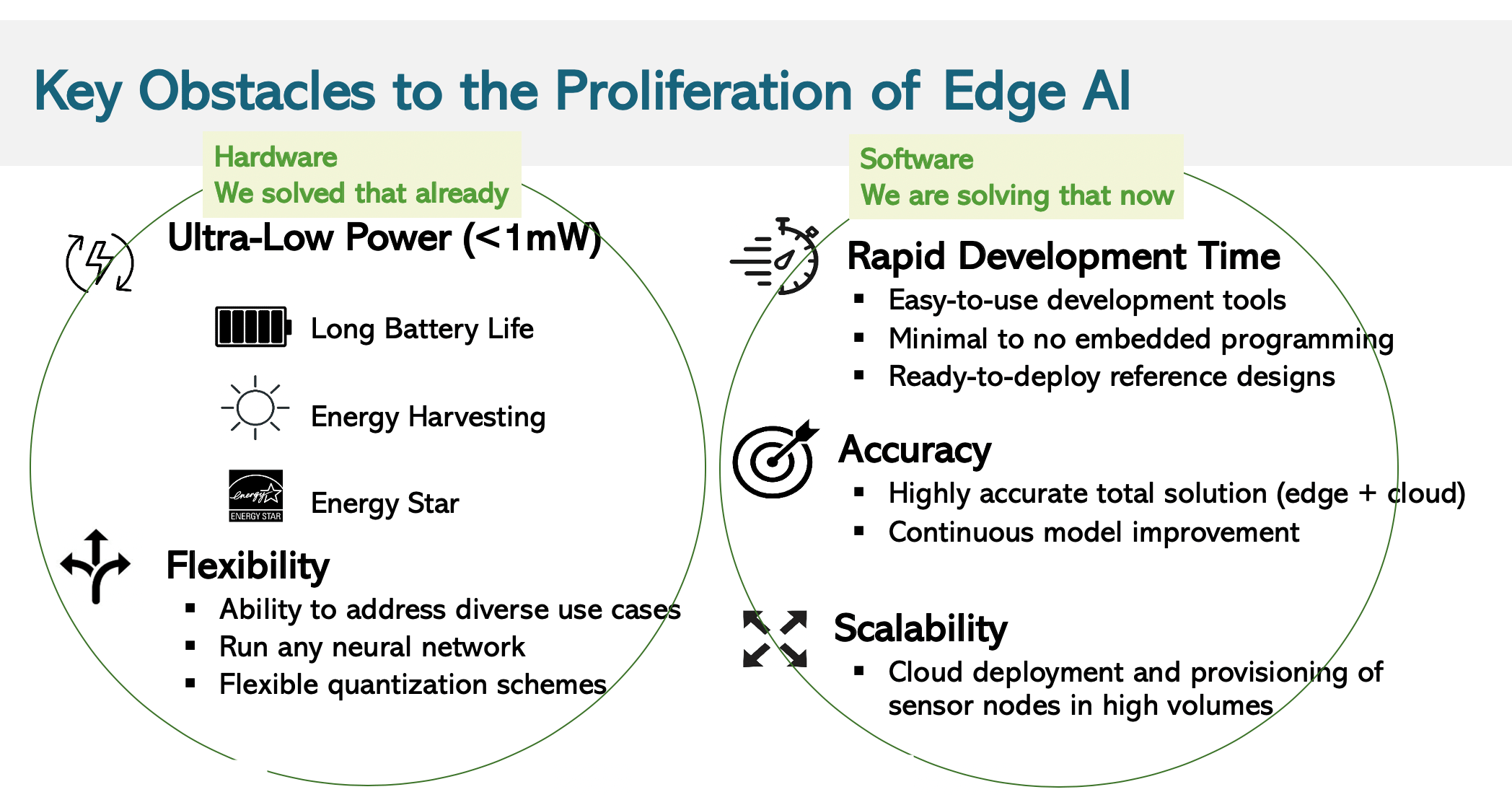

What do you do next when you’ve already introduced an all-in-one extreme edge device that supports AI and can run at ultra-low power, even on harvested power? You add a software flow to support solution development and connectivity to the major clouds. For Eta Compute, that is their TENSAI flow.

The vision of a trillion IoT devices only works if the great majority of those devices can operate at ultra-low power, even on harvested power. If power needs run any higher, the added burden of power generation and field maintenance makes the economics of the whole enterprise questionable. Alternatively, if we reduce the number of devices we expect to need, the economics of supplying those devices look shaky. The vision depends on devices that are close to self-sufficient in power.

Adding to the challenge, we increasingly need AI at the extreme edge. This is partly to manage the sensors: to detect events locally and communicate only when needed. When we do most of what we need locally, far less data leaves the device, which greatly reduces privacy and security exposure. Further, we often need real-time response without the latency of a round trip to a gateway or the cloud. And operating expenses go up whenever we must rely on network or cloud operator services (such as AI).

All-in-one extreme edge

Eta Compute has already been leading the charge on ultra-low power (ULP) compute, building on their proprietary self-timed logic and continuous voltage and frequency scaling technology. Through partnerships with Arm for Cortex-M and NXP for the CoolFlux DSP, they have already established a multi-core IP platform for ULP AI at the extreme edge. The platform typically runs below 1 mW when operating and below 1 µA in standby. It can handle a wide range of use cases, including image, voice and gesture recognition, sensing and sensor fusion. They can run any neural network and support the flexible quantization now common in many inference applications.
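To see why the standby figure matters as much as the active one, here is a rough duty-cycle estimate. It is a sketch, not an Eta Compute power model: it takes the quoted upper bounds (1 mW active, 1 µA standby) at face value, and the 3.0 V supply voltage used to turn standby current into power, along with the duty cycles, are assumptions chosen for illustration.

```python
# Rough duty-cycle power estimate built on the article's headline bounds.
# ASSUMPTIONS: the 3.0 V supply and the duty cycles below are illustrative,
# not Eta Compute specifications.

ACTIVE_POWER_W = 1e-3      # upper bound while running inference (1 mW)
STANDBY_CURRENT_A = 1e-6   # upper bound in standby (1 uA)
SUPPLY_VOLTAGE_V = 3.0     # assumed supply voltage

def average_power_w(duty_cycle: float) -> float:
    """Time-weighted average power for a device active `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    standby_power_w = STANDBY_CURRENT_A * SUPPLY_VOLTAGE_V  # P = I * V
    return duty_cycle * ACTIVE_POWER_W + (1.0 - duty_cycle) * standby_power_w

if __name__ == "__main__":
    for duty in (1.0, 0.1, 0.01):
        print(f"duty {duty:>5.0%}: {average_power_w(duty) * 1e6:8.1f} uW")
```

Under these assumptions, a device active 1% of the time averages roughly 13 µW, nearly two orders of magnitude below the always-on 1 mW, and the standby term accounts for almost a quarter of that total.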

TENSAI Flow

Semir Haddad (Sr Dir Product Marketing) told me that Eta Compute’s next step along this path is to provide a full-featured software solution to complement their hardware. This is designed to maximize ease of adoption, requiring little to no embedded programming. They will supply reference designs in support of this goal.

The software flow (in development) is called TENSAI. Networks are developed and trained in the cloud in the usual way, e.g. in TensorFlow, and then reduced through TensorFlow Lite. The TENSAI compiler takes that handoff and optimizes the network for the embedded Eta Compute platform. It also provides all the middleware: the AI Kernel, FreeRTOS, the hardware abstraction layer and sensor drivers. As I said before, the goal is that not a single line of new embedded code should be needed to bring up a reference design.
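The TensorFlow Lite reduction step often includes quantizing 32-bit float weights and activations to 8-bit integers. The sketch below shows the affine scheme TensorFlow Lite documents for int8, real ≈ scale × (q - zero_point), in plain Python. It illustrates the arithmetic only; it is not TENSAI or TensorFlow Lite code, and the function names are hypothetical.

```python
# Minimal sketch of 8-bit affine (scale / zero-point) quantization.
# Illustrative only: hypothetical helper names, not TENSAI or TFLite APIs.

def choose_params(values, qmin=-128, qmax=127):
    """Pick a scale and zero point mapping [min, max] of `values` onto [qmin, qmax]."""
    lo = min(min(values), 0.0)  # include 0.0 in the range so zero is represented exactly
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(qmin - lo / scale)
    return scale, max(qmin, min(qmax, zero_point))  # clamp guards against float noise

def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to clamped int8 codes: q = round(x / scale) + zero_point."""
    return [max(qmin, min(qmax, round(x / scale) + zero_point)) for x in values]

def dequantize(codes, scale, zero_point):
    """Recover approximate floats: x ~= scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in codes]

if __name__ == "__main__":
    weights = [-0.8, -0.1, 0.0, 0.25, 1.2]  # illustrative float weights
    scale, zero_point = choose_params(weights)
    codes = quantize(weights, scale, zero_point)
    print("scale:", scale, "zero_point:", zero_point)
    print("int8 codes:", codes)
    print("dequantized:", [round(x, 4) for x in dequantize(codes, scale, zero_point)])
```

Each in-range value survives the round trip to within half a quantization step (scale / 2), which is why a well-chosen range matters more than the raw bit width.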

Azure, AWS, Google Cloud support

Data collection connects back to the cloud through a partnership with Edge Impulse (whom I mentioned in an earlier blog). They support connections to all the standard clouds: Azure, AWS and Google Cloud (Semir said they see a lot of activity on Azure). Semir stressed that there is an opportunity here to update training for edge devices, harvesting data from the edge to improve, for example, the accuracy of abnormality detection. I asked how this would work, since sending a lot of data back from the edge would kill power efficiency. He told me that this would be more common in an early pilot phase, when you’re refining training and not so worried about power. Makes sense.
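The trade-off Semir describes amounts to a per-phase transmit policy. Here is a minimal sketch of one, under clearly stated assumptions: the `Phase`, `Reading` and `payload_to_send` names, the anomaly score and the 0.8 threshold are all hypothetical, and none of this is Edge Impulse's or Eta Compute's actual API. During a pilot the device ships raw windows for labeling and retraining; in production it stays silent unless the on-device model flags something.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

# Hypothetical transmit policy; names and threshold are illustrative assumptions.

class Phase(Enum):
    PILOT = "pilot"            # refining training; power is a secondary concern
    PRODUCTION = "production"  # deployed; every transmission costs energy

@dataclass
class Reading:
    samples: list[float]   # raw sensor window captured on the device
    anomaly_score: float   # output of the on-device model, assumed in [0, 1]

def payload_to_send(reading: Reading, phase: Phase,
                    threshold: float = 0.8) -> Optional[dict]:
    """Decide what (if anything) the device transmits for one reading."""
    if phase is Phase.PILOT:
        # Pilot: upload every raw window so it can be labeled and used to retrain.
        return {"samples": reading.samples, "score": reading.anomaly_score}
    if reading.anomaly_score >= threshold:
        # Production: report only detections, as a compact summary.
        return {"alert": True, "score": reading.anomaly_score}
    # Production, normal reading: handled locally, nothing transmitted.
    return None

if __name__ == "__main__":
    normal = Reading(samples=[0.1, 0.2, 0.1], anomaly_score=0.3)
    odd = Reading(samples=[0.9, 1.4, 2.2], anomaly_score=0.95)
    for phase in Phase:
        print(phase.value, payload_to_send(normal, phase), payload_to_send(odd, phase))
```

Keeping the decision on the device is what preserves the power budget in production: the radio wakes only for detections, while the pilot branch deliberately spends power to gather training data.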

Semir also said that their goal is to provide a platform which is as close to turnkey as possible, except for the AI training data. They even provide example trained networks in the NN Zoo. I doubt they could make this much easier.

TENSAI flow is now available.
