Mentor have pushed the advantages of virtualized verification in a number of domains, initially for advanced networking devices supporting multiple protocols and software-defined networking (SDN), and more recently for SSD controllers, particularly in large data-center storage systems. Two factors drive this approach. The first is that simulation is clearly impractical; testing has to run on emulators simply because the designs and test volumes are so large. The second is that the range of potential test loads in these cases is far too varied to consider connecting the emulator to real hardware, the in-circuit emulation (ICE) model commonly used for such testing. The “test jig” that represents this very wide range must be virtualized for pre-silicon (and maybe even some post-silicon) validation.

Jean-Marie Brunet, Dir. Marketing for Emulation at Mentor, has now released another white paper on this theme, this time for 5G and particularly the radio network infrastructure underlying the technology. It is a good, quick read for anyone new to the domain, in part because it explains what makes this technology so complex. In fact, “complex” hardly does justice to this standard. In simpler times we became familiar with mobile/edge devices connecting to base stations and from there to the internet/cloud through backhaul; in 5G there are more layers in the radio access network (RAN).

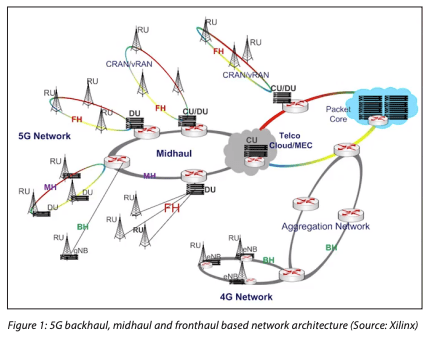

These layers do more than manage aggregation and distribution. Backhaul from the internet now connects (typically through fiber) to a central unit (CU), which handles baseband processing. The CU connects to distributed units (DUs), and those connect to remote radio units (RRUs), which may be small cells. The CU-to-DU connection is known as midhaul and the DU-to-RRU connection as fronthaul. (More layers are also possible.) This added complexity is designed to allow greater capacity with appropriate latency in the fronthaul network; ultra-low latencies, for example, are only possible if traffic can flow locally without having to go through the head node.
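To make the tiering concrete, here is a toy Python model of the hierarchy described above. It assumes the RAN is a simple tree (real deployments can be meshed and can have extra layers), and the node names (`internet`, `cu0`, `du1`, `rru1a` and so on) are hypothetical. The sketch labels each hop as backhaul, midhaul or fronthaul and shows why traffic between two RRUs under the same DU never has to reach the CU, which is what makes ultra-low-latency local paths possible.

```python
from dataclasses import dataclass
from typing import Optional

# Segment name for each (parent tier, child tier) link, following the text.
HAUL = {("CORE", "CU"): "backhaul", ("CU", "DU"): "midhaul", ("DU", "RRU"): "fronthaul"}

@dataclass(eq=False)  # eq=False: nodes compare and hash by identity
class Node:
    name: str
    tier: str  # "CORE", "CU", "DU" or "RRU"
    parent: Optional["Node"] = None

def ancestors(node):
    """Return [node, parent, grandparent, ...] up to the root."""
    chain = []
    while node is not None:
        chain.append(node)
        node = node.parent
    return chain

def route(a, b):
    """Path between two nodes in a tree: climb from each side to the lowest common ancestor."""
    up_a, up_b = ancestors(a), ancestors(b)
    on_b_side = set(up_b)
    lca = next(n for n in up_a if n in on_b_side)
    return up_a[: up_a.index(lca) + 1] + list(reversed(up_b[: up_b.index(lca)]))

def segments(path):
    """Label each hop of a path with its haul segment."""
    labels = []
    for x, y in zip(path, path[1:]):
        parent, child = (x, y) if y.parent is x else (y, x)
        labels.append(HAUL[(parent.tier, child.tier)])
    return labels

# Hypothetical toy topology: one CU, two DUs, three RRUs.
internet = Node("internet", "CORE")
cu0 = Node("cu0", "CU", internet)
du1, du2 = Node("du1", "DU", cu0), Node("du2", "DU", cu0)
rru1a, rru1b = Node("rru1a", "RRU", du1), Node("rru1b", "RRU", du1)
rru2a = Node("rru2a", "RRU", du2)

local = route(rru1a, rru1b)
print([n.name for n in local], segments(local))
# prints ['rru1a', 'du1', 'rru1b'] ['fronthaul', 'fronthaul']
```

By contrast, `route(rru1a, rru2a)` has to climb through `cu0`, adding two midhaul hops to the path.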

With this level of layering in the network, it shouldn’t be surprising that operators want software-defined networking, applied in this domain to something called network slicing to offer different tiers of service. Nor should it be surprising that more compute functionality is moving into these nodes, known here as Multi-Access Edge Computing (MEC) and more colloquially as fog computing. If you don’t want to take the latency hit of going back to the cloud for everything, you need this local compute. And I’m guessing the operators like it because it can be another chargeable service.

Then there’s the complexity of radio communication in ultra-high-density edge-node environments. This requires support for massive MIMO (multiple-input, multiple-output), where DUs and possibly the edge nodes themselves sport multiple antennas. The point is to let each link adapt, through beamforming, to the highest available link quality. There are indications that this link adaptation will move under machine-learning (ML) control, since the look-up-table approaches used in LTE are becoming too complex to support in 5G.
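The look-up-table style of link adaptation mentioned above can be sketched in a few lines: pick the most aggressive modulation-and-coding scheme (MCS) whose SNR threshold the measured link quality meets. Everything here is a hypothetical illustration. The threshold values are invented, not taken from LTE or any 3GPP table, and real schedulers use considerably more input. The second function is a rough back-of-envelope count, assuming a separate threshold is tabulated per beam and per MIMO rank, of how quickly such tables grow once massive MIMO and beamforming add dimensions.

```python
import bisect

# Hypothetical SNR thresholds (dB) for MCS indices 0..9; illustrative values only.
SNR_THRESHOLDS_DB = [-5.0, -2.0, 1.0, 4.0, 7.0, 10.0, 13.0, 16.0, 19.0, 22.0]

def select_mcs(snr_db):
    """Return the highest MCS index whose threshold is met, or None if none is."""
    # bisect_right counts the thresholds <= snr_db; the last of those is the answer.
    idx = bisect.bisect_right(SNR_THRESHOLDS_DB, snr_db) - 1
    return idx if idx >= 0 else None

def table_entries(num_beams, num_ranks, num_mcs=len(SNR_THRESHOLDS_DB)):
    """Entries needed if thresholds are tabulated per (beam, MIMO rank, MCS) combination."""
    return num_beams * num_ranks * num_mcs

print(select_mcs(8.5), select_mcs(-6.0), table_entries(64, 8))  # prints 4 None 5120
```

Here `select_mcs(8.5)` returns 4 because the 7.0 dB threshold is met but the 10.0 dB one is not, and the hypothetical 64-beam, rank-8 case needs 5120 entries against 10 for a single-antenna link.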

ML/AI will also play a role in adaptive network slicing, judging by publications from a number of equipment makers (Ericsson, Nokia, Huawei and others). This obviously has to be robust to point failures in the RAN, and it must also adapt to ensure continued quality of service for guaranteed-latency provisions. I also don’t doubt that if ML capability is needed anyway in these RAN nodes, operators will be tempted to offer access to it as an additional service to users (perhaps for more extensive natural language processing, for example).

So – multi-tiered RANs, software-defined virtual networks through these RANs, local compute within the network, massive MIMO with beamforming and intelligently adapted link quality, machine learning for this and other applications – that’s a lot of functionality to validate in highly complex networks in which many equipment providers and operators must play.

To ensure this doesn’t all turn into a big mess, operators already require that equipment be proven out in compliance-testing labs before it is allowed to sit within and connect to pre-5G networks. That practice will no doubt continue for 5G, now with all of these new requirements added. Unit validation against artificially constructed tests and hardware rigs is necessary but far from sufficient to ensure a reasonable likelihood of success in that compliance testing. I don’t see how else you could get there without virtualized network testing against models running on an emulator.

You can read the Mentor white-paper HERE.
