Watching developments in the EDA and semiconductor industry, it is apparent to me that we remain fragmented unless pushed to adopt a common standard, mostly for business reasons. Foundries dictate the rules that designs must follow, and EDA tools incorporate those rules. Design companies, in turn, need to work with tools from different vendors. As a result, various exchange formats have appeared at various levels (layer, device, block, design and so on): first to define and satisfy the rules, and second to exchange information between different tools. Yet the industry still needed a common database for tighter integration and interoperability between tools, one that eliminates data translation between formats. OpenAccess is an open standard database, owned and distributed by SI2, that finally became available after a wait of about 8-10 years.

There are multiple advantages to using the OpenAccess database. I will not go into the details of its data exchange, capacity and performance benefits here; instead I want to focus on how it tremendously improves productivity in design and tool development, and the robustness of the flow. SI2 provides the database along with its access APIs, so any tool developed in-house or by a third party on this database can be tightly integrated, enabling a robust design flow in which multiple tools work on the same database. This also eliminates repeated data translation through various formats, which in turn eliminates unwanted errors and troubleshooting overhead. When I spoke with Brady Logan (Director of Business Development at SpringSoft), he showed me a demo of the Laker environment, which reflects the true use and advantage of OpenAccess.

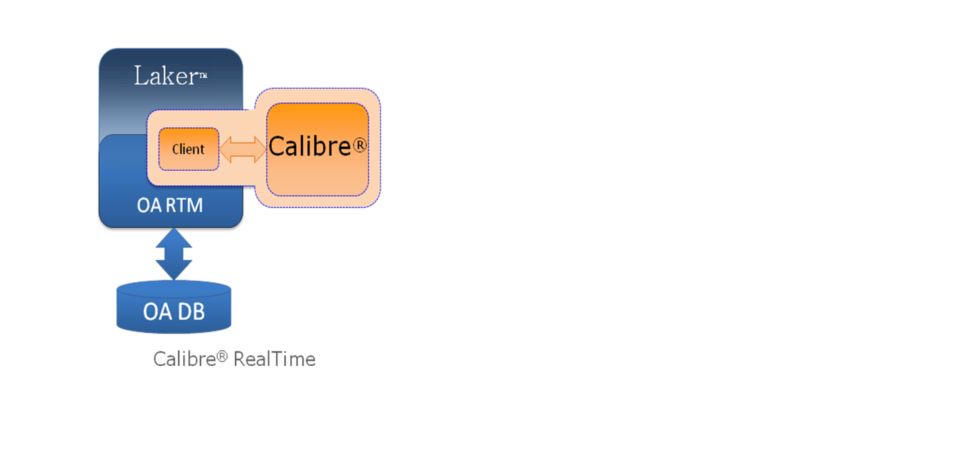

The Laker (from SpringSoft) and Calibre (from Mentor Graphics) Realtime Runtime Model enables the two tools to run concurrently on the same database, giving users real-time, sign-off-quality feedback on edits and DRC fixes. By eliminating Stream In and Stream Out, this use model has a proven 10X productivity gain over the older methodology. Brady tells me that it has proven its value at advanced nodes, where edits and fixes can create numerous violations of complex conditional rules. The time-consuming cycles through Stream during edit-verify-fix iterations simply go away.
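To make the idea of two tools sharing one database concrete, here is a minimal Python sketch. It is a toy model only: `SharedLayoutDB`, `MinWidthChecker`, `Rect` and the 50-unit `metal1` rule are hypothetical names and values invented for illustration, and none of this is the OpenAccess, Laker or Calibre API. It simply shows an "editor" writing shapes into a shared in-memory store while a "checker" subscribed to the same store re-evaluates a rule on every edit, with no export or import step in between.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle on a named layer (hypothetical toy type)."""
    layer: str
    x1: int
    y1: int
    x2: int
    y2: int

    @property
    def min_dimension(self) -> int:
        # The narrower side is treated as the shape's width.
        return min(self.x2 - self.x1, self.y2 - self.y1)


class SharedLayoutDB:
    """Toy in-memory stand-in for a shared design database (not OpenAccess)."""

    def __init__(self) -> None:
        self.shapes: Dict[str, Rect] = {}
        self._observers: List[Callable[[str, Rect], None]] = []

    def subscribe(self, callback: Callable[[str, Rect], None]) -> None:
        self._observers.append(callback)

    def put_shape(self, name: str, rect: Rect) -> None:
        # The "editor" writes here; every subscribed tool sees the edit at once.
        self.shapes[name] = rect
        for notify in self._observers:
            notify(name, rect)


class MinWidthChecker:
    """Toy "verification tool" that re-checks each shape as soon as it changes."""

    def __init__(self, db: SharedLayoutDB, min_width: Dict[str, int]) -> None:
        self.min_width = min_width
        self.violations: Dict[str, str] = {}
        db.subscribe(self.on_edit)

    def on_edit(self, name: str, rect: Rect) -> None:
        limit = self.min_width.get(rect.layer)
        if limit is not None and rect.min_dimension < limit:
            self.violations[name] = (
                f"{rect.layer} width {rect.min_dimension} < {limit}"
            )
        else:
            # The edit fixed (or never caused) a violation for this shape.
            self.violations.pop(name, None)


db = SharedLayoutDB()
checker = MinWidthChecker(db, {"metal1": 50})  # assumed rule value

db.put_shape("wire_a", Rect("metal1", 0, 0, 40, 500))  # too narrow
print(checker.violations)

db.put_shape("wire_a", Rect("metal1", 0, 0, 60, 500))  # widened by the user
print(checker.violations)
```

The first edit is flagged immediately and the second clears the flag, which is the edit-verify-fix loop in miniature. A real sign-off checker evaluates far more complex, conditional rules, but the structural point is the same: feedback arrives on the edit itself rather than after a round trip through an exchange format.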

The overall flow in the Laker system, based on OpenAccess, looks like this:

Profitability – Imagine the amount of resources every company spends maintaining each format and its translation to other formats and databases, and the longer design cycles those translations cause. If all that expense can be saved, it will definitely improve the bottom line of the industry as a whole, and the resources can be redeployed to other top-end challenges. OpenAccess makes it possible to save that unnecessary cost. It must be noted, though, that Cadence invested significantly in creating this database.
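A rough back-of-the-envelope calculation shows why the translation burden grows so quickly. The sketch below assumes a worst case in which each of `n` tool-specific formats needs a one-way translator to every other format, and compares that with each tool needing only a single interface to a common database. The function names and the sample values of `n` are illustrative assumptions, not industry figures.

```python
def pairwise_translators(n_formats: int) -> int:
    """One-way translators needed if every format converts directly to every other."""
    return n_formats * (n_formats - 1)


def common_db_interfaces(n_formats: int) -> int:
    """Interfaces needed if every tool reads and writes one shared database."""
    return n_formats


for n in (3, 5, 10):
    print(n, pairwise_translators(n), common_db_interfaces(n))
```

Under these assumptions the translator count grows quadratically while the common-database count grows linearly: ten formats would need up to 90 one-way translators but only 10 database interfaces. Real flows rarely need every pairing, so treat the quadratic figure as an upper bound.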

Further Extensions – In one of my recent articles, I talked about standardizing design-level analog constraints. If the OpenAccess database can be extended to include these constraints, then analog designs, even at the level of schematics and layouts, can be shared with other parts of the design and with tools from different vendors on the same database, easing integration, editing and verification of the complete design together. Another good extension would be for 3D-IC: assimilating designs from various sources, specifically information about TSVs, partitioning across different planes, power distribution networks and stress (thermal and mechanical) analysis, with tools for different purposes working on the same database. For power distribution, CPF (Common Power Format) and UPF (Unified Power Format) are available, but they first need to be unified into a single format for greater interoperability.

By Pawan Kumar Fangaria

EDA/Semiconductor professional and Business consultant

Email: Pawan_fangaria@yahoo.com