Recently, one of those very restrained press releases – in this case, Mentor Graphics and Imagination Technologies extending their partnership for MIPS software support – crossed my desk with about 10% of the story. The 90% of this story I want to focus on is why Mentor is putting energy into this partnership.

Continue reading “What’s in your network processor?”

Cutting Debug Time of an SoC

The amount of time spent debugging an SoC dwarfs the actual design time, with many engineering teams saying that debug and verification take about 7X the effort of the actual design work. So any automation that reduces the time spent in debug and verification would directly shorten the product schedule.

An example Mobile Applications Processor block diagram is shown below and there are a few dozen IP blocks used in this Samsung Exynos 5 Dual along with the popular ARM A15 core.

I’m guessing that a large company like Samsung is likely using IP from third parties along with their own internal IP re-use to get to market quickly.

Virtual models can be used during design, debug and verification phases in order to accelerate the simulation speeds. An approach from Carbon Design Systems called Swap & Play allows an engineer to start simulating a virtual prototype with the fastest functional models, and then swap to a cycle-accurate model at some hardware or software breakpoint. So I could simulate a mobile device booting the operating system using fast functional models, and then start debug or analysis using more detailed models at specific breakpoints:

This Swap & Play feature allows software driver developers to independently code for their IP blocks using the 100% accurate system model without waiting to boot a cycle-accurate system. Another benefit is that after the OS is quickly booted you can do performance optimization profiling on your applications or benchmarks.
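To make the idea concrete, here is a minimal sketch of swapping models at a breakpoint. It is not Carbon's API: the class names, the fixed 3-cycle cost and the 4-byte instruction step are all assumptions made for illustration. The point it shows is that both models share the same architectural state, so the detailed model can pick up exactly where the fast one stopped.

```python
from dataclasses import dataclass, field


@dataclass
class SystemState:
    """Architectural state shared by both model types (hypothetical)."""
    pc: int = 0
    registers: dict = field(default_factory=dict)


class FastModel:
    """Untimed functional model: advances state, reports no cycles."""

    def step(self, state: SystemState) -> int:
        state.pc += 4  # assumed fixed-width instructions
        return 0       # no timing information in a functional model


class CycleAccurateModel:
    """Detailed model: same functional result plus a cycle cost."""

    def step(self, state: SystemState) -> int:
        state.pc += 4
        return 3       # illustrative fixed cost per instruction


def run(state: SystemState, breakpoint_pc: int, end_pc: int):
    """Run fast until breakpoint_pc, then swap to the accurate model."""
    model = FastModel()
    cycles = 0
    swapped_at = None
    while state.pc < end_pc:
        if swapped_at is None and state.pc >= breakpoint_pc:
            model = CycleAccurateModel()  # the swap: state is carried over
            swapped_at = state.pc
        cycles += model.step(state)
    return swapped_at, cycles


state = SystemState()
swapped_at, cycles = run(state, breakpoint_pc=0x100, end_pc=0x120)
print(hex(swapped_at), cycles)
```

In this toy run the first 64 instructions execute with no timing at all, and only the last 8 are charged cycles, which is the trade the approach is making.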

Carbon Design and ARM developers have collaborated to make this Swap & Play technology work with the latest ARM processor, interconnect and peripheral IP blocks. For Semi IP blocks like fabric and memory controllers that don’t already have a fast functional model, you have several choices:

- Create a SystemC or other high-level model.

- Use a cycle-accurate model, aka a Carbonized model. Carbon automatically inserts the adapters needed to go from a Fast Model based system to an accurate model. Since most peripherals aren’t big bottlenecks in the actual system, executing them accurately only when needed typically has little impact on virtual prototype performance.

- Automatically create a fast functional model from a Carbonized model using the SoCDesigner Plus tool.

- Use a memory block. Often you need a place to read and write values.

- Use a traffic generator or consumer model – to look for traffic on the system bus or a sink for traffic.

I see a lot of promise in the third approach because it creates a fast functional model automatically.

For fabric and interconnects the SoCDesigner Plus tool can reduce the logic to a simple memory map, and then create the fast functional model directly from the Carbonized model.
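As a rough illustration of what "reducing the logic to a simple memory map" could mean, here is a sketch of a fabric modeled as nothing more than an address decoder. The address ranges and target names are invented for the example and are not taken from any real SoC or from SoCDesigner Plus.

```python
from bisect import bisect_right

# Hypothetical address map, sorted by base: (base, size in bytes, target).
MEMORY_MAP = [
    (0x0000_0000, 0x1000_0000, "boot_rom"),
    (0x4000_0000, 0x0001_0000, "uart"),
    (0x8000_0000, 0x4000_0000, "dram"),
]
_BASES = [base for base, _, _ in MEMORY_MAP]


def route(address):
    """Return (target, offset) for an address, or None if unmapped."""
    i = bisect_right(_BASES, address) - 1  # last region starting <= address
    if i < 0:
        return None
    base, size, target = MEMORY_MAP[i]
    if address - base < size:
        return target, address - base
    return None


print(route(0x4000_0010))  # ('uart', 16)
print(route(0x2000_0000))  # None
```

A functional model like this ignores arbitration, buffering and latency entirely, which is why it is fast and also why you would swap in the accurate fabric when timing matters.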

Since memory controllers have configuration registers and other logic which must be modeled, even in the fast functional models, a different approach is needed. Here, SoCDesigner Plus creates a fast functional model which incorporates the Carbonized memory controller to handle configuration accesses while also providing a direct path to memory contents for fast accesses. Since the registers in the memory controller are infrequently changed, using a Carbonized model doesn’t slow down the overall system simulation times much and the vast majority of accesses go directly to memory.
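The split described here can be sketched as a wrapper that sends register accesses to a detailed model and everything else straight to a byte array. All names, the register window address and the call counter below are assumptions for illustration; the real generated model will look different.

```python
class DetailedController:
    """Stand-in for the cycle-accurate controller model (hypothetical)."""

    def __init__(self):
        self.config = {}
        self.calls = 0  # counts how often the slow path is taken

    def write_register(self, offset, value):
        self.calls += 1
        self.config[offset] = value

    def read_register(self, offset):
        self.calls += 1
        return self.config.get(offset, 0)


class HybridMemoryController:
    CONFIG_BASE = 0xF000_0000  # assumed register window
    CONFIG_SIZE = 0x1000

    def __init__(self, mem_size):
        self.detailed = DetailedController()
        self.memory = bytearray(mem_size)  # direct path to memory contents

    def _is_config(self, address):
        return self.CONFIG_BASE <= address < self.CONFIG_BASE + self.CONFIG_SIZE

    def write(self, address, value):
        if self._is_config(address):
            # Rare: configuration access goes through the detailed model.
            self.detailed.write_register(address - self.CONFIG_BASE, value)
        else:
            # Common: data access bypasses the detailed model entirely.
            self.memory[address] = value & 0xFF

    def read(self, address):
        if self._is_config(address):
            return self.detailed.read_register(address - self.CONFIG_BASE)
        return self.memory[address]


ctrl = HybridMemoryController(mem_size=1024)
ctrl.write(0xF000_0004, 7)        # one configuration write
for addr in range(100):
    ctrl.write(addr, addr)        # one hundred data writes
print(ctrl.read(0xF000_0004), ctrl.read(42), ctrl.detailed.calls)
```

Out of 102 accesses in this toy run, only the two register accesses touch the slow model, which mirrors the argument that infrequent register changes keep the overall slowdown small.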

Summary

Using fast, un-timed virtual models is one technique to reduce the debug and verification time on SoC projects. If your SoC design has an ARM core, then consider using the virtual modeling approach from Carbon Design Systems. Bill Neifert blogged about this topic in more detail on July 16th.

Further Reading

- Swap and Play Extended To Chip Fabric and Memory Controllers

- ARM Partners with Carbon on Cortex-A57

- Carbon CEO on Advanced ARM based SoC Design!

- Using Virtual Platforms to Make IP Decisions


A Brief History of Magillem

Founders

Cyril Spasevski is the President, CTO and founding engineer at Magillem, bringing a team of engineers, all experts with an SoC platform builder tool. In 2006 Cyril and his team met a seasoned business woman, and decided to form Magillem. Design teams were struggling with different tools at different stages of the flow, redoing the same configurations at ESL and RTL to assemble their virtual platforms.

Cyril Spasevski, President and CTO

Continue reading “A Brief History of Magillem”

A Brief History of TSMC’s OIP part 2

The existence of TSMC’s Open Innovation Platform (OIP) program further sped up disaggregation of the semiconductor supply chain. Partly, this was enabled by the existence of a healthy EDA industry and an increasingly healthy IP industry. As chip designs had grown more complex and entered the system-on-chip (SoC) era, the amount of IP on each chip was beyond the capability or the desire of each design group to create. But, especially in a new process, EDA and IP qualification was a problem.

See also Part 1.

On the EDA side, each new process came with some new discontinuous requirements that required more than just expanding the capacity and speed of the tools to keep up with increasing design size. Strained silicon, high-K metal gate, double patterning and FinFETs each require new support in the tools and designs to drive the development and test of the innovative technology.

On the IP side, design groups increasingly wanted to focus all their efforts on parts of their chip that differentiated them from their competition, and not on re-designing standard interfaces. But that meant that IP companies needed to create the standard interfaces and have them validated in silicon much earlier than before.

The result of OIP has been to create an ecosystem of EDA and IP companies, along with TSMC’s manufacturing, to speed up innovation everywhere. Because EDA and IP groups need to start work before everything about the process is ready and stable, the OIP ecosystem requires a high level of cooperation and trust.

When TSMC was founded in 1987, it really created two industries. The first, obviously, is the foundry industry that TSMC pioneered before others entered. The second was the fabless semiconductor companies that do not need to invest in fabs. This has been so successful that two of the top 10 semiconductor companies, Qualcomm and Broadcom, are fabless. And all the top FPGA companies are fabless.

The foundry/fabless model largely replaced IDMs and ASIC. An ecosystem of co-operating specialist companies innovates fast. The old model of having process, design tools and IP all integrated under one roof has largely disappeared, along with the “not invented here” syndrome that slowed progress since ideas from outside the IDMs had a tough time penetrating. Even some of the earliest IDMs from the “real men have fabs” era have gone “fab lite” and use foundries for some of their capacity, typically at the most advanced nodes.

Legendary TSMC Chairman Morris Chang’s “Grand Alliance” is a business model innovation of which OIP is an important part, gathering all the significant players together to support customers. Not just EDA and IP but also equipment and materials suppliers, especially high-end lithography.

Digging down another level into OIP, there are several important components that allow TSMC to coordinate the design ecosystem for their customers.

- EDA: the commercial design tool business flourished when designs got too large for hand-crafted approaches and most semiconductor companies realized they did not have the expertise or resources in-house to develop all their own tools. This was driven more strongly in the front-end with the invention of ASIC, especially gate-arrays; and then in the back end with the invention of foundries.

- IP: this used to be a niche business with a mixed reputation, but now is very important with companies like ARM, Imagination, CEVA, Cadence and Synopsys all carrying portfolios of important IP such as microprocessors, DDRx, Ethernet, flash memory and so on. In fact, large SoCs now contain over 50% and sometimes as much as 80% IP. TSMC has well over 5,500 qualified IP blocks for customers.

- Services: design services and other value-chain services calibrated with TSMC process technology help customers maximize efficiency and profit, getting designs into high-volume production rapidly.

- Investment: TSMC and its customers invest over $12B/year. TSMC and its OIP partners alone invest over $1.5B. On advanced lithography, TSMC has further invested $1.3B in ASML.

Processes are continuing to get more advanced and complex, and the size of a fab that is economical also continues to increase. This means that collaboration needs to increase as the only way to both keep costs in check and ensure that all the pieces required for a successful design are ready just when they are needed.

TSMC has been building ecosystems of increasing richness for over 25 years and feedback from partners is that they see benefits sooner and more consistently than when dealing with other foundries. Success comes from integrating usage, business models, technology and the OIP ecosystem so that everyone succeeds. There are a lot of moving parts that all have to be ready. It is not possible to design a modern SoC without design tools, more and more SoCs involve more and more 3rd-party IP, and, at the heart of it all, the process and the manufacturing ramp with its associated yield learning all need to be in place at TSMC.

The proof is in the numbers. Fabless growth in 2013 is forecasted to be 9%, over twice the increase for the overall industry at 4%. Fabless has doubled in size as a percentage of the semiconductor market from 8% to 16%, during a period when the growth in the overall semiconductor market has been unimpressive. TSMC’s own contribution to semiconductor revenue grew from 10% to 17% over the same period.

The OIP ecosystem has been a key pillar in enabling this sea change in the semiconductor industry.

TSMC’s OIP Symposium is October 1st. Details and to register here.

Texture decompression is the point for mobile GPUs

In the first post of this series, we named the popular methods for texture compression in OpenGL ES, particularly Imagination Technologies PVRTC on all Apple and many Android mobile devices. Now, let’s explore what texture compression involves, what PVRTC does, and how it differs from other approaches.

Continue reading “Texture decompression is the point for mobile GPUs”

Samsung 28nm Beats Intel 22nm!

There was some serious backlash to the “Intel Bay Trail Fail” blog I posted last week, mostly personal attacks by the spoon-fed Intel faithful. There are, however, some very interesting points among the 30+ comments, so be sure to read them when you have a chance.

The Business Insider article “The iPhone 5S Is By Far The Fastest Smartphone In The World…” reinforces what I have been saying in regards to technology versus the customer experience. Yes, Intel has very good technology and “factory assets” but that no longer translates to market domination in the new world order of smartphones and tablets.

The chips in the chart below are called SoCs or System on Chips. Per Wikipedia:

A system on a chip or system on chip (SoC or SOC) is an integrated circuit (IC) that integrates all components of a computer or other electronic system into a single chip. It may contain digital, analog, mixed-signal, and often radio-frequency functions—all on a single chip substrate.

The technological benefits of SoCs are self-evident: everything required to run a mobile device is on a single chip that can be manufactured at high volumes for a few dollars each. The industry implications of SoCs are also self-evident: as more functions are consolidated into one SoC, semiconductor companies will also be consolidated. For example, Apple is a systems company by definition but they also make their own SoC. Samsung, Google, Amazon, and others are following suit. For those who can’t make their own highly integrated SoC, QCOM is the smart choice as they are best positioned to continue to dominate the merchant SoC market.

The most interesting thing to note is that the Apple A7 chip is manufactured by Samsung on a 28nm LP (Gate-First HKMG) process and the Bay Trail chip is Intel 22nm (Gate-Last HKMG). So tell me: Why is a 28nm chip faster than a 22nm chip? Simply stated, the Intel 22nm process is not a true 22nm process by the standard semiconductor definition. It also has to do with architecture and the embedded software that runs the chip, which directly translates to customer experience. The other thing is cost. 28nm silicon will probably give us the cheapest transistors we will ever see.

At the IDF keynote Intel CEO Brian K. clearly stated that the new Intel 14nm microprocessor will be out in the first half of 2014 while the new Intel 14nm SoC will be out the first half of 2015. Okay, the first thing that comes out of Intel fabs is the microprocessors then a year or so later the SoC? Either SoCs are harder for Intel than microprocessors or SoCs are just not a priority? You tell me. Unfortunately for Intel, Samsung 14nm will also be out the first half of 2015 and based on what I have heard thus far it will be VERY competitive in terms of cost, speed, and density.

Bottom line: Intel is not an SoC company, Intel is not a systems company, Intel is far removed from the user experience. All things considered, Intel is still not relevant in the mobile SoC market and I do not see that changing anytime soon. Just my opinion of course.


How Do You Do Computational Photography at HD Video Rates?

Increasingly, “graphics” processing unit is a misnomer for the GPU. GPUs are really highly parallel compute engines with a specialized architecture. You can use these compute engines for graphics, of course, but people are inventive and find ways of using GPUs for other tasks that can take advantage of the highly parallel architecture. For example, in EDA, Daniel Payne talked at DAC in June to G-Char, who use GPUs to accelerate SPICE circuit simulation.

Users don’t actually want to program on the “bare metal” of the GPU. Firstly, often the hardware interface to the GPU is not fully revealed in the documentation since it is only intended to be accessed through libraries. And secondly, whatever the interface is to this GPU, it will not be the same for the next-generation GPU, never mind a competitor’s GPU. So standards have sprung up not just for using GPUs for graphics but also for using GPUs for general purpose computation. The earliest of these was OpenCL, and Imagination was a pioneer in mobile GPU compute, being the first mobile IP vendor to achieve OpenCL conformance.

Indeed, to encourage wider adoption of GPU compute in mobile, Imagination has delivered a number of OpenCL extensions related to advanced camera interoperability. They can be used with the Samsung Exynos SoC which is the application processor in the Galaxy S4 and some other smartphones, and is also available on a development board. These new OpenCL API extensions enable developers to implement Instagram-like functionality on real-time camera data, including computational photography and video processing, while offloading the main CPU.

The challenge to doing all this, especially in HD (and 4K is coming soon too), is that video generates a lot of data to swallow, and it has tight constraints: if you don’t like this frame there is another one coming real soon.
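To put rough numbers on "a lot of data", here is a back-of-the-envelope calculation. The resolutions, the 4 bytes per pixel (uncompressed RGBA) and the 30 frames per second are my assumptions for illustration, not figures from Imagination.

```python
def video_bytes_per_second(width, height, bytes_per_pixel, fps):
    """Raw, uncompressed pixel data produced per second."""
    return width * height * bytes_per_pixel * fps


def frame_budget_ms(fps):
    """Time available to process one frame before the next arrives."""
    return 1000.0 / fps


# Assumed formats: 1080p HD and 4K UHD, RGBA, 30 frames per second.
hd = video_bytes_per_second(1920, 1080, 4, 30)
uhd = video_bytes_per_second(3840, 2160, 4, 30)

print(f"HD: {hd / 1e6:.1f} MB/s")    # HD: 248.8 MB/s
print(f"4K: {uhd / 1e6:.1f} MB/s")   # 4K: 995.3 MB/s
print(f"Budget per frame: {frame_budget_ms(30):.1f} ms")
```

Under these assumptions every pass over the image costs roughly a quarter of a gigabyte per second of memory traffic at HD and four times that at 4K, and each frame has to be finished in about 33 ms.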

Historically, computational photography like this has been done on the main CPU, which is fine for still images (you can’t press the shutter 60 times a second even if the camera would let you) but it doesn’t work for HD video because the processor gets too hot. It is no good adding extra cores either, since the CPU will just overheat and shut down. But modern application processors in smartphones contain a GPU (often an Imagination PowerVR). The trick is to use a heterogeneous solution that combines these blocks and lives under the power and thermal budgets.

In an application such as real-time airbrushing on a teleconference, there is a camera, the CPU, the GPU and a video codec involved: four components all requiring access to the same image data in memory. Historically, all OpenCL implementations in the market created a behind-the-scenes copy of the image data while transferring its ownership between components. This increases memory traffic, burns power and reduces performance. It reduces, or perhaps even eliminates, the advantage of using the GPU in the first place.

Imagination has been working with partners to develop a set of extensions that allow images to be shared between multiple components sharing the same system memory. The result is no increased memory traffic, lower power and better processing performance. The extensions are based on Khronos (the OpenGL group) EGL images, which handle issues related to binding and synchronization.
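The difference between copying and sharing can be shown with a small analogy in plain Python. This is not the OpenCL or EGL API: a `bytearray` stands in for the frame in system memory and a `memoryview` stands in for a shared image handle, and the function names are made up for the example.

```python
# One assumed 1080p RGBA frame sitting in "system memory".
frame = bytearray(1920 * 1080 * 4)


def hand_off_with_copy(buf):
    """Old behavior: the consumer gets its own copy of every pixel."""
    return bytes(buf)  # new allocation, whole frame is read and written


def hand_off_shared(buf):
    """Shared-image behavior: the consumer gets a view of the same storage."""
    return memoryview(buf)  # no pixel data is moved


copied = hand_off_with_copy(frame)
shared = hand_off_shared(frame)

frame[0] = 255  # the producer (say, the camera) updates a pixel

print(copied[0])  # 0   - the copy is stale and cost a full-frame transfer
print(shared[0])  # 255 - the view sees the update with no extra traffic
```

What the analogy leaves out is exactly what the EGL image machinery is there for: making sure one component has finished writing before another starts reading.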

See a video explaining and showing it all in action (2 mins)

To accelerate adoption, Imagination is releasing the PowerVR GPU compute SDK and programming guidelines for PowerVR Series5XT GPUs. In the future they will release an SDK supporting PVRTrace for PowerVR Series6 GPUs, which will allow compute and graphics workloads to be tracked simultaneously.

More details are in the Imagination blog here.

Early Test –> Less Expensive, Better Health, Faster Closure

I am talking about the health of electronic and semiconductor design, which, if made sound at the RTL stage, sets up the rest of the design cycle for faster closure at lower cost. Last week was the week of ITC (International Test Conference) for the semiconductor and EDA community. I was looking forward to what ITC prescribes for the community this year, and as it happened I was able to talk to Mr. Kiran Vittal, Senior Director of Product Marketing at Atrenta. I knew SpyGlass had good things to unveil about its RTL-level test methodology at ITC this year, so this was an opportune time for an interesting conversation with the right expert, who wrote the SpyGlass whitepaper “Analysis of Random Resistive Faults and ATPG Effectiveness at RTL”.

So let’s find out about the trends, challenges, issues and solutions in test automation from this conversation with Mr. Vittal:

Q: How’s the experience from ITC? What’s the top news from there?

We had a very good show at ITC this year, with over 100 of the conference attendees checking out our latest advances in RTL testability solutions and product demonstrations at our exhibitor booth. We also had several dedicated meetings with existing and potential customers from leading semiconductor companies discussing how SpyGlass RTL solutions would address their key challenges.

[Customers watching SpyGlass product demonstrations]

Samsung’s Executive VP, Kwang-Hyun Kim gave the keynote address on “Challenges in Mobile Devices: Process, Design and Manufacturing”. He said that the quality of test for mobile devices is of utmost importance due to the large number (typically millions) of parts shipped. Any degradation in quality can badly impact the rejection ratio which can affect the bottom line as well as the reputation of the particular company.

One of the best paper awards was also related to RTL validation –

ITC 2012 Best Student Paper Award: Design Validation of RTL Circuits Using Evolutionary Swarm Intelligence by M. Li, K. Gent and M. Hsiao, Virginia Tech

Q: What is the industry trend? Is it converging towards RTL level test?

The industry trend for deep submicron designs is to ship parts with the highest test quality, because new manufacturing defects surface with smaller geometry nodes. These defects need additional tests and the biggest concerns are the quality and cost of testing to meet the time-to-market requirements. Every semiconductor vendor performs at-speed tests at 45nm and below. The stuck-at test coverage goal has also increased from ~97% to over 99% in the last couple of years. The other concern has to do with design size and the large quantity of parts being shipped in the mobile and handheld space and the danger of having rejects for missing manufacturing defects due to the lack of high quality tests.

The only way to get very high test quality is to address testability at an early stage in the design cycle, preferably at RTL. The cost of test can be reduced by reducing the test data volume and test time. This problem can also be addressed by adhering to good design for test practices at the RTL stage.
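For readers less familiar with the coverage numbers being quoted, here is a toy stuck-at fault simulation. It is a sketch of the general idea only, not how SpyGlass or any ATPG tool works: the three-input circuit, the net names and the test patterns are all invented for the example. Coverage is simply the fraction of single stuck-at faults for which at least one pattern produces an output different from the fault-free circuit.

```python
NETS = ["a", "b", "c", "n1", "y"]  # nets of the toy circuit y = (a AND b) OR c

# Every net stuck-at-0 and stuck-at-1: 10 single faults in total.
ALL_FAULTS = [(net, value) for net in NETS for value in (0, 1)]


def simulate(a, b, c, fault=None):
    """Evaluate the circuit, optionally forcing one net to a fixed value."""
    def forced(net, value):
        if fault is not None and fault[0] == net:
            return fault[1]
        return value

    a, b, c = forced("a", a), forced("b", b), forced("c", c)
    n1 = forced("n1", a & b)
    return forced("y", n1 | c)


def fault_coverage(patterns):
    """Fraction of faults detected by at least one input pattern."""
    detected = {
        fault
        for fault in ALL_FAULTS
        for p in patterns
        if simulate(*p, fault=fault) != simulate(*p)
    }
    return len(detected) / len(ALL_FAULTS)


two_patterns = [(1, 1, 0), (0, 1, 0)]
four_patterns = two_patterns + [(1, 0, 0), (0, 0, 1)]

print(fault_coverage(two_patterns))   # 0.8
print(fault_coverage(four_patterns))  # 1.0
```

Even in this tiny circuit the last two faults need patterns chosen specifically for them, which hints at why pushing coverage from 97% to over 99% on a real design is disproportionately expensive.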

Q: So, there must be great interest in SpyGlass test products which work at RTL level?

Yes, there is great interest in Atrenta’s solution for addressing testability at the RTL stage. We have a majority of the large semiconductor companies adopting our RTL testability solution. They say that by adopting SpyGlass testability at RTL, they are able to significantly shorten design development time and improve test coverage and quality.

Q: What’s the benefit of using SpyGlass? How does it save testing time? What kind of fault coverage does it provide compared to ATPG?

Fault coverage estimation at RTL is very close to that of ATPG, typically within 1%.

SpyGlass DFT has a unique ability to predict ATPG (Automatic Test Pattern Generation) test coverage and pinpoint testability issues as the RTL description is developed, when the design impact is greatest and the cost of modifications is lowest. That eliminates the need for test engineers to design test clocks and set/reset logic for scan insertion at the gate level, which is expensive and time consuming. This significantly shortens development time, reduces cost and improves overall quality.

The test clocks in traditional stuck-at testing are designed to run on test equipment at frequencies lower than the system speed. At-speed testing requires test clocks to be generated at the system speed, and therefore is often shared with functional clocks from a Phase Locked Loop (PLL) clock source. This additional test clocking circuitry affects functional clock skew, and thus the timing closure of the design. At-speed tests often result in lower than required fault coverage even with full-scan and high (>99%) stuck-at coverage. Identifying reasons for low at-speed coverage at the ATPG stage is too late to make changes to the design. The SpyGlass DFT DSM product addresses these challenges with advanced timing closure analysis and RTL testability improvements.

SpyGlass MBIST has the unique ability to insert memory built-in self test (BIST) logic at RTL with any ASIC vendor’s qualified library and validate the new connections.

Q: What’s new in these products which caught attention at ITC?

We introduced a new capability at ITC for analyzing the ATPG effectiveness early at the RTL stage, especially for random-resistive or hard-to-test faults.

ATPG tools have been traditionally efficient in generating patterns for stuck-at faults. The impact on fault coverage, tool runtime and pattern count for stuck-at faults is typically within reasonable limits.

However, the impact of “hard-to-test” faults in transition or at-speed testing is quite large in terms of pattern count, runtime or test coverage. This problem can now be analyzed with the SpyGlass DFT DSM product to allow RTL designers to make early tradeoffs and changes to the design to improve ATPG effectiveness, which in turn improves test quality and overall economics. Details can be seen in our whitepaper on Random Resistive Faults.

Q: Any customer experience to share with these products?

Our customers have saved at least 3 weeks of every netlist handoff by using SpyGlass DFT at the RTL stage. The runtime of SpyGlass at RTL is at least 10 times faster than running ATPG at the netlist level. Our customers have claimed about a 25x overall productivity improvement in using SpyGlass at RTL vs. other traditional methods.

This conversation with Mr. Vittal focused largely on the testing aspects of semiconductor design. Afterwards, I took a look at the new whitepaper on Random Resistive Faults and ATPG Effectiveness at RTL. It’s worth reading to learn about effective methods to improve fault coverage.

Mentor Teaches Us About the Higgs Boson

Once a year Mentor has a customer appreciation event in Silicon Valley with a guest speaker on some aspect of science. This is Silicon Valley, after all, so we all have to be geeks. This year it was Dr Sean Carroll from Caltech on The Particle at the End of the Universe, the Hunt for The Higgs Boson and What’s Next.

Wally Rhines asked who Higgs was but Dr Carroll didn’t give much detail. Higgs is actually an emeritus professor of physics at Edinburgh University in Scotland. Since the physics department, like computer science, was in the James Clerk Maxwell Building while I was doing my PhD and then working for the university, I presume we had offices in the same building (it’s large). As an aside, Edinburgh is famous for naming its buildings after people that it snubbed. James Clerk Maxwell, he of the equations of electromagnetism, was turned down for a lectureship. Biology is in the Darwin building. Charles Darwin wasn’t much interested in the courses in medicine he took at Edinburgh and either failed and was kicked out or moved on to London of his own volition, depending on which version of the story you like. Anyway, he never graduated.

So what about particles? In the early 20th century, everything was simple: we had protons and neutrons forming the nucleus of atoms and electrons whizzing around the outside like planets. There were all those quantum mechanical things about where the orbits had to be to stop the electrons just spiraling into the nucleus. And how the nucleus stayed together was unclear.

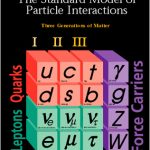

Around the time I was an undergraduate, the existence of the strong nuclear force was postulated (well, something had to stop the nucleus flying apart), along with the realization that particles were actually made of three quarks (“three quarks for Muster Mark”, James Joyce in Finnegans Wake). The strong nuclear force was transmitted by gluons (that glued the nucleus together). Luckily, in my quantum mechanics courses we only had to worry about Bohr’s simple model of the atom. The weak nuclear force had actually been proposed by Fermi as another way to keep the nucleus together, and it was a force that only operated over tiny distances (unlike, say, electromagnetism and gravity, which operate over infinite distances albeit vanishingly weakly as distances get large).

Gradually the standard model was filled in (and it turned out that Group Theory had some practical applications outside of pure math). Various quarks were discovered. The gauge bosons that carry the weak nuclear force were found. But there was an empty box in the standard model, the Higgs boson. Rather like Mendeleev predicting certain elements nobody had ever seen because there was a hole in the periodic table, the standard model had a hole. The Higgs boson is required to explain why some particles have mass and why they don’t move at the speed of light all the time.

You probably know that the Higgs boson was discovered (technically it is extremely likely that it was detected but these things are probabilistic) at the Large Hadron Collider at CERN, which runs underneath parts of Switzerland and France and cost $9B to build. There was also a US collider that was started in Texas that would have been 3 times as powerful, but in the wonderful way of Congress, it was canceled…but only after $2B had been spent for nothing.

EDAC Export Seminar: Don’t Know This Stuff…Go Directly to Jail…Do Not Pass Go

I am not making this up: All exports from the United States of EDA software and services are controlled under the Export Administration Regulations, administered by the U.S. Department of Commerce’s Bureau of Industry and Security (BIS). You need to understand these regulations. Failure to comply can result in severe penalties including imprisonment. Many smaller companies do not have the resources to track these complex and ever changing sets of export regulations, but ignorance of the laws is not an excuse. Non-compliance can be costly.

Tomorrow evening, Wednesday 18th September you can learn from one of the experts. Cadence actually has an employee responsible for all this. Larry Disenhof, Cadence’s Group Director, Export Compliance and Government Relations and the chairman of the EDAC Export Committee is an expert in export regulations and will share his knowledge of the current state of US export regulations. Learn the basics as well as best practices. But there is homework you should do before tomorrow: watch the export overview presentation here so you already know the basics.

The seminar will be held at EDAC, 3081 Zanker Road, San Jose. There is a reception at 6pm and the seminar from 7-8.30pm.

If you work for an EDAC member company it is free. If you don’t, then you can’t go. However, you do need to register here.

BTW I assume you already know that one of the benefits of being a member in EDAC in addition to seminars such as this one is that you get a 10% discount off the cost of your DAC booth floorspace. That can make the EDAC membership close to free.

Talking of EDAC, don’t forget the 50th Anniversary of EDA on October 16th at the Computer History Museum (101 and Shoreline, just near the Googleplex). Join previous Kaufman Award recipients like Bob Brayton and Randy Bryant. And the founders of EDAC, Rick Carlson and Dave Millman. Previous CEOs such as Jack Harding, Penny Herscher, Bernie Aronson, Rajeev Madhavan, and Sanjay Srivastava. Current EDAC board members: Aart de Geus, Lip-Bu Tan, Wally Rhines, Simon Segars, John Kibarian, Kathryn Kranen, Ravi Subramanian, Dean Drako, Ed Cheng, and Raul Camposano. Investors who have focused on EDA, like Jim Hogan and John Sanguinetti. Not to mention Dan Nenni and me (SemiWiki is one of the sponsors of the event). To register go here.