As AI models grow exponentially, the infrastructure supporting them is struggling under the pressure. At DAC, one company stood out with a solution that doesn’t just monitor chips; it lets them adapt in real time to these new workload requirements.

Unlike traditional telemetry or post-silicon debug tools, proteanTecs embeds intelligent agents directly into the chip, enabling real-time, workload-aware insights that drive adaptive optimization. Let’s examine how proteanTecs unlocks AI hardware scaling with runtime monitoring.

What’s the Problem?

proteanTecs recently published a very useful white paper on the topic of how to scale AI hardware. The first paragraph of that piece is the perfect problem statement. It is appropriately ominous.

The shift to GenAI has outpaced the infrastructure it runs on. What were once rare exceptions are now daily operations: high model complexity, non-stop inference demand, and intolerable cost structures. The numbers are no longer abstract. They’re a warning.

Here are a few statistics that should get your attention:

- Training a model like GPT-4 (Generative Pre-trained Transformer) reportedly consumed 25,000 GPUs over nearly 100 days, with costs reaching $100 million. GPT-5 is expected to break the $1 billion mark.

- Training GPT-4 drew an estimated 50 GWh, enough to power over 23,000 U.S. homes for a year. Even with all that investment, reliability is fragile. A 16,384-GPU run experienced hardware failures every three hours, posing a threat to the integrity of weeks-long workloads.

- Inference isn’t easier. ChatGPT now serves more than one billion queries daily, with operational costs nearing $700K per day.

The innovation delivered by advanced GenAI applications can change the planet, if it doesn’t destroy it (or bankrupt it) first.

What Can Be Done?

During my travels at DAC, I was fortunate to spend some time talking about all this with Uzi Baruch, chief strategy officer at proteanTecs. Uzi has over twenty years of software and semiconductor development and business leadership experience, managing R&D and product teams and high-scale projects at leading global high-technology companies. He provided a focused discussion of a practical and scalable approach to taming these difficult problems.

Uzi began with a simple observation. The typical method to optimize a chip design is to characterize it across all operating conditions and workloads and then develop design margins to keep power and performance in the desired range. This approach can work well for chips that operate in a well-characterized, predictable envelope. The issue is that AI applications, and generative AI applications in particular, are not predictable.

Once deployed, the workload profile can vary immensely based on the scenarios encountered. That dramatically changes power and performance profiles while creating big swings in parameters such as latency and data throughput. Getting it all right a priori is like reliably predicting the future, a much-sought-after skill that has eluded the finest minds in history.

He went on to point out that the problem isn’t just for the inference itself. The training process faces similar challenges. In this case, wild swings in performance and power demands can cause failures in the process and wasteful energy consumption. If not found, these issues manifest as unreliable, inefficient operation in the field.

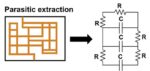

Uzi went on to discuss the unique approach proteanTecs has taken to address these very real and growing problems. He described the use of technology that delivers workload-aware real-time monitoring on chip. Thanks to very small, highly efficient on-chip agents, parametric measurements – in-situ and in functional mode – are possible. The system detects timing issues, operational and environmental effects, aging and application stress. Among the suite of Agents are the Margin Agents that monitor timing margins of millions of real paths for more informed decisions. And all of this is tied to the actual instructions being executed by the running workloads.

The proteanTecs solution monitors the actual conditions the chip is experiencing from the current workload profile, analyzes it and reacts to it to optimize the reliability, power and performance profile. All in real time. No more predicting the future but rather monitoring and reacting to the present workload.
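To make the monitor, analyze and react loop concrete, here is a minimal Python sketch of a margin-driven voltage controller. It is not proteanTecs code or its API. The class name, thresholds, step sizes and sample margin readings are all hypothetical, chosen only to illustrate the general idea of lowering voltage when measured timing margin is ample and raising it quickly when margin gets thin.

```python
from dataclasses import dataclass


@dataclass
class VoltageController:
    """Hypothetical margin-driven voltage controller (illustrative values only)."""
    voltage_mv: int = 800          # current supply voltage
    v_min_mv: int = 650            # lower clamp
    v_max_mv: int = 850            # upper clamp
    step_mv: int = 5               # adjustment granularity
    low_margin_ps: float = 20.0    # below this, back off (the "safety net")
    high_margin_ps: float = 40.0   # above this, there is headroom to save power

    def update(self, worst_margin_ps: float) -> int:
        """React to the worst timing margin reported for the current workload."""
        if worst_margin_ps < self.low_margin_ps:
            # Margin is thin: raise voltage by two steps to recover quickly.
            self.voltage_mv = min(self.v_max_mv, self.voltage_mv + 2 * self.step_mv)
        elif worst_margin_ps > self.high_margin_ps:
            # Margin is ample: lower voltage by one step to save power.
            self.voltage_mv = max(self.v_min_mv, self.voltage_mv - self.step_mv)
        # Otherwise the margin is inside the target band: hold voltage.
        return self.voltage_mv


def run(controller: VoltageController, margin_samples: list[float]) -> list[int]:
    """Feed a sequence of margin readings through the controller."""
    return [controller.update(m) for m in margin_samples]


# Made-up margin readings (picoseconds) as the workload gets heavier, then eases.
trace = run(VoltageController(), [55.0, 50.0, 45.0, 30.0, 15.0, 35.0])
print(trace)  # [795, 790, 785, 785, 795, 795]
```

The asymmetric step (down by one, up by two) is a design choice in this sketch, not something stated by proteanTecs: it trades a little power for a faster recovery when a workload change eats into the margin.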

A reasonable question here: what is the overhead of such a system? I asked Uzi, and he explained that area overhead is negligible, as the monitors are very small and can typically be added in the white space of the chip. The gate count overhead is about 1 to 1.5 percent, but the power reduction can be 8 to 14 percent. The math definitely works.
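As a rough back-of-the-envelope check of that math, the sketch below combines the quoted ranges under two pessimistic assumptions of my own, not from proteanTecs: that the quoted power reduction does not already account for the monitors' own consumption, and that the added gates draw power in proportion to their share of the gate count.

```python
def net_power_saving(gate_overhead: float, power_reduction: float) -> float:
    """Net saving if added gates cost power in proportion to gate count (assumption)."""
    relative_power = (1.0 + gate_overhead) * (1.0 - power_reduction)
    return 1.0 - relative_power


# Least favorable pairing: highest overhead with the smallest reduction.
worst = net_power_saving(gate_overhead=0.015, power_reduction=0.08)
# Most favorable pairing: lowest overhead with the largest reduction.
best = net_power_saving(gate_overhead=0.01, power_reduction=0.14)

print(f"net saving: {worst:.1%} to {best:.1%}")  # net saving: 6.6% to 13.1%
```

Even under these assumptions, the net saving stays well above the overhead across the quoted ranges.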

I came away from my discussion with Uzi believing that I had seen the future of AI, and it was brighter than I expected.

At the proteanTecs Booth

While visiting the proteanTecs booth at DAC, I had the opportunity to attend a presentation by Noam Brousard, VP of Solutions Engineering at proteanTecs. Noam has been with the company for over 7 years and has more than 25 years of systems engineering experience at companies such as Intel and ECI Telecom.

Noam provided a broad overview of the challenges presented by AI and the unique capabilities proteanTecs offers to address those challenges. Here are a couple of highlights.

He discussed the progression from generative AI to artificial general intelligence to something called artificial superintelligence. These categories compare AI performance to that of humans. He provided a chart, shown below, that illustrates the accelerating performance of AI across many activities. When the curve crosses zero, AI outperforms humans. Noam pointed out that there will be many more such events in the coming months and years. AI is poised to do a lot more, if we can deliver these capabilities in a cost- and power-efficient way.

Helping to address this problem is the main focus of proteanTecs. Noam went on to provide a very useful overview of how proteanTecs combines its on-chip agents with embedded software to deliver complete solutions to many challenging chip operational issues. The figure below summarizes what he discussed. As you can see, proteanTecs solutions cover a lot of ground, including dynamic voltage scaling with a safety net, performance and health monitoring, adaptive frequency scaling, and continuous performance monitoring. It’s important to point out that these applications don’t assist with design-margin strategy; rather, they monitor and react to real-time chip behavior.

About the White Paper

There is now a very informative white paper available from proteanTecs on the challenges of AI and substantial details about how the company is addressing those challenges. If you work with AI, this is a must-read item. Here are the topics covered:

- The Unforgiving Reality of Scaling Cloud AI

- Mastering the GenAI Arms Race: Why Node Upgrades Aren’t Enough

- Critical Optimization Factors for GenAI Chipmakers

- Maximizing Performance, Power, and Reliability Gains with Workload-Aware Monitoring On-Chip

- proteanTecs Real-Time Monitoring for Scalable GenAI Chips

- proteanTecs AVS Pro™ – Dominating PPW Through Safer Voltage Scaling

- proteanTecs RTHM™ – Flagging Cluster Risks Before Failure

- proteanTecs AFS Pro™ – Capturing Frequency Headroom for Higher FLOPS

- System-Wide Workload and Operational Monitoring

- Conclusion

To Learn More

You can get your copy of the must-read white paper here: Scaling GenAI Training and Inference Chips with Runtime Monitoring. The company also issued a press release recently that summarizes its activities in this important area here. And if all this gets your attention, you can request a demo here. And that’s how proteanTecs unlocks AI hardware growth with something called runtime monitoring.
