According to a recent whitepaper by Samtec, Channel Operating Margin (COM) didn’t start as an algorithm; it started as a truce. In the late 2000s and early 2010s, interconnect designers and SerDes architects were speaking past each other. The former optimized insertion loss, return loss, and crosstalk against frequency-domain masks; the latter wrestled with real receivers, equalization limits, and power budgets. As data rates jumped from 10 Gb/s per lane to 25 Gb/s, and soon after to 50 Gb/s and beyond, the old “mask” paradigm broke. Guard-banding everything to make frequency masks work would have over-constrained channel designs while still telling transceiver designers little about what their receivers would face. The industry needed a shared language that tied physical channel features to receiver behavior. That shared language became COM.

Two technical insights catalyzed the shift. First, insertion loss alone was not predictive once vias, connectors, and packages crept into electrically long territory. Ripples in the insertion-loss curve, codified as insertion loss deviation (ILD), were the visible fingerprints of reflections that eroded eye openings. Second, crosstalk budgeting matured from ad hoc limits to constructs like integrated crosstalk noise (ICN) and insertion-loss-to-crosstalk ratio (ICR), recognizing that noise must be weighed against the channel’s fundamental attenuation. These realizations coincided with the industry’s pivot from NRZ at 25 Gb/s to PAM4 at 50 Gb/s per lane, which raised both the complexity and the appetite for a more realistic, end-to-end figure of merit.
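To make the ILD idea concrete, here is a minimal sketch that treats ILD as the difference between an insertion-loss curve and a smooth fit to it. The basis of constant, √f, f, and f² terms and the unweighted least-squares fit are assumptions chosen for illustration, and the loss curve and ripple are synthetic, not measured data.

```python
import numpy as np

def insertion_loss_deviation(f_ghz, il_db):
    """Return (ILD, fitted loss): insertion loss minus a smooth least-squares fit.

    Assumption: the smooth fit uses constant, sqrt(f), f and f^2 terms and is
    unweighted. A standards-grade fit may weight or constrain it differently.
    """
    basis = np.column_stack(
        [np.ones_like(f_ghz), np.sqrt(f_ghz), f_ghz, f_ghz ** 2]
    )
    coeffs, *_ = np.linalg.lstsq(basis, il_db, rcond=None)
    fitted = basis @ coeffs
    return il_db - fitted, fitted

# Synthetic channel (hypothetical numbers): smooth skin-effect/dielectric loss
# plus a 0.5 dB ripple that mimics a reflection between two discontinuities.
f_ghz = np.linspace(0.05, 20.0, 400)
smooth_db = 2.0 * np.sqrt(f_ghz) + 0.8 * f_ghz
ripple_db = 0.5 * np.sin(2 * np.pi * f_ghz / 1.5)

ild_rippled, _ = insertion_loss_deviation(f_ghz, smooth_db + ripple_db)
ild_smooth, _ = insertion_loss_deviation(f_ghz, smooth_db)

print(f"peak ILD with ripple:    {np.max(np.abs(ild_rippled)):.3f} dB")
print(f"peak ILD without ripple: {np.max(np.abs(ild_smooth)):.2e} dB")
```

The smooth channel fits almost perfectly, so its ILD is essentially zero, while the rippled channel leaves the reflection signature behind in the residual.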

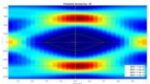

Enter the time domain. The breakthrough was to treat the channel’s pulse response as the “Rosetta Stone” between interconnect physics and SerDes design. Because a random data waveform is just a symbol sequence convolved with the pulse response, you can sample that pulse at unit intervals to quantify intersymbol interference (ISI), then superimpose sampled crosstalk responses as noise. That reframed compliance from static frequency limits to a statistical signal-quality calculation anchored in what receivers actually see. Early debates over assuming Gaussian noise led to a pragmatic conclusion: copper channel noise is not perfectly IID Gaussian; using “real” distributions derived from pulse-response sampling avoids chronic over-design.
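The sampling step can be sketched in a few lines. This is an illustration under stated assumptions rather than the COM procedure itself: the channel is a toy first-order low-pass, the sampling phase is simply aligned to the pulse peak, and the symbols are NRZ (±1). The names `sample_cursors`, `peak_isi`, and `rms_isi` are hypothetical.

```python
import numpy as np

def sample_cursors(pulse, samples_per_ui):
    """Sample a pulse response once per UI, aligned so one sample hits the peak."""
    peak = int(np.argmax(np.abs(pulse)))
    phase = peak % samples_per_ui            # sampling offset within each UI
    cursors = pulse[phase::samples_per_ui]   # one sample per unit interval
    return cursors, peak // samples_per_ui, phase

# Toy channel (assumption): first-order low-pass with a 0.6 UI time constant.
samples_per_ui = 32
t_ui = np.arange(20 * samples_per_ui) / samples_per_ui
impulse = np.exp(-t_ui / 0.6)
impulse /= impulse.sum()                                # unit DC gain
pulse = np.convolve(np.ones(samples_per_ui), impulse)   # response to one 1-UI symbol

cursors, main_idx, phase = sample_cursors(pulse, samples_per_ui)
main = cursors[main_idx]
isi = np.delete(cursors, main_idx)
peak_isi = np.sum(np.abs(isi))        # worst-case ISI over all symbol patterns
rms_isi = np.sqrt(np.sum(isi ** 2))   # RMS ISI for equiprobable +/-1 symbols

# Cross-check: a random NRZ waveform is the symbol sequence convolved with the pulse.
rng = np.random.default_rng(0)
symbols = rng.choice([-1.0, 1.0], size=200)
impulses = np.zeros(len(symbols) * samples_per_ui)
impulses[::samples_per_ui] = symbols
waveform = np.convolve(impulses, pulse)
sampled = waveform[phase::samples_per_ui][main_idx:main_idx + len(symbols)]

print(f"main cursor {main:.3f}, peak ISI {peak_isi:.3f}, RMS ISI {rms_isi:.3f}")
```

Each sampled value equals the transmitted symbol times the main cursor plus a pattern-dependent ISI term, and that term never exceeds `peak_isi`. Crosstalk would enter the same way, as sampled aggressor pulse responses added to the noise side of the ledger.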

COM formalized this flow. Practically, you feed measured or simulated S-parameters into a filter chain (including transmitter/receiver shaping), generate a pulse response via an inverse discrete Fourier transform (IDFT), and compute the ISI and crosstalk contributions as noise terms relative to the main cursor. Equalization (CTLE/FFE/DFE) and bandwidth limits are captured explicitly. Crucially, minimum transceiver capabilities are not left to inference; they’re parameterized in tables maintained inside IEEE 802.3 projects. The result is a single operating-margin number that reflects both the channel’s impairments and a realistic, baseline SerDes. MATLAB example code and configuration spreadsheets, iterated alongside each project, made the method transparent, debuggable, and rapidly adoptable across companies.
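The flow above can be sketched end to end in a heavily simplified form. COM is reported as the ratio of signal amplitude to noise-plus-interference amplitude at a target detector error ratio, expressed in dB; everything else below is an assumption made for illustration. The channel is a hypothetical two-pole model standing in for S-parameters, the receiver filter is a fourth-order Butterworth at 0.75 times the symbol rate, sampling is at the pulse peak, and the noise level `sigma` and error ratio `der` are arbitrary. There is no equalization, crosstalk, jitter, or package model, so the numbers are not comparable to the reference MATLAB code.

```python
import numpy as np

def butterworth4(f, f3db):
    """Fourth-order Butterworth low-pass response (assumed receiver filter)."""
    s = 1j * f / f3db
    poles = np.exp(1j * np.pi * (2 * np.arange(4) + 5) / 8)  # left-half-plane poles
    return 1.0 / np.prod(s[:, None] - poles[None, :], axis=1)

def toy_channel(f, f_pole):
    """Hypothetical channel: two cascaded first-order poles, not measured data."""
    return 1.0 / (1.0 + 1j * f / f_pole) ** 2

def pulse_response(H, samples_per_ui):
    """Inverse DFT to an impulse response, then apply a 1-UI rectangular symbol."""
    n = 2 * (len(H) - 1)
    impulse = np.fft.irfft(H, n=n)
    return np.convolve(impulse, np.ones(samples_per_ui))[:n]

def amplitude_at_der(isi, sigma, der, bins=4001):
    """Amplitude that ISI plus Gaussian noise exceeds with probability `der`."""
    span = np.sum(np.abs(isi)) + 8 * sigma
    x = np.linspace(-span, span, bins)
    step = x[1] - x[0]
    pdf = np.exp(-0.5 * (x / sigma) ** 2)
    pdf /= pdf.sum()
    for h in isi:
        # Each NRZ cursor adds +h or -h with equal probability.
        shift = int(round(abs(h) / step))
        kernel = np.zeros(2 * shift + 1)
        kernel[0] += 0.5
        kernel[-1] += 0.5
        pdf = np.convolve(pdf, kernel, mode="same")
    tail = np.cumsum(pdf[::-1])[::-1]   # P(noise >= x)
    return x[np.argmax(tail <= der)]

def com_db(H, samples_per_ui, sigma=0.01, der=1e-4):
    """Simplified operating margin: 20*log10(signal / noise amplitude at DER)."""
    pulse = pulse_response(H, samples_per_ui)
    peak = int(np.argmax(pulse))
    cursors = pulse[peak % samples_per_ui::samples_per_ui]
    main_idx = peak // samples_per_ui
    a_signal = cursors[main_idx]
    a_noise = amplitude_at_der(np.delete(cursors, main_idx), sigma, der)
    return 20.0 * np.log10(a_signal / a_noise)

fb = 25.78125e9   # symbol rate in baud (assumed 25G-class NRZ lane)
spu = 32          # samples per unit interval
f = np.fft.rfftfreq(64 * spu, d=1.0 / (fb * spu))
rx = butterworth4(f, 0.75 * fb)

com_low_loss = com_db(toy_channel(f, 1.0 * fb) * rx, spu)
com_high_loss = com_db(toy_channel(f, 0.1 * fb) * rx, spu)
print(f"low-loss toy channel:  {com_low_loss:.1f} dB")
print(f"high-loss toy channel: {com_high_loss:.1f} dB")
```

Building the noise distribution by convolving per-cursor distributions, rather than assuming a Gaussian with the same RMS, mirrors the article's point about using distributions derived from pulse-response sampling. A fuller model would search equalizer settings before sampling and fold sampled crosstalk responses into the same distribution.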

Process also mattered. Publishing representative channel models into IEEE working groups was an industry first. Instead of “ouch tests” where SerDes vendors reacted to whatever channels interconnect teams produced, standards bodies curated public S-parameter libraries that mirrored real backplanes and cables at 25, 50, and 100+ Gb/s per lane. That transparency let COM evolve collaboratively, through tuning assumptions, refining parameter sets, and aligning equalization budgets, with evidence that mapped to shipping hardware. Over time, COM was adopted and extended across many Ethernet projects (802.3bj, bm, by, bs, cd, ck, df, and the ongoing dj effort), and it influenced parallel work in OIF and InfiniBand.

Why has COM endured? Three reasons. It aligns incentives by giving interconnect and SerDes designers a single scoreboard. It scales: the same pulse-response/statistical framework accommodates NRZ and PAM-N, evolving to higher baud rates with updated parameter tables and annexes (e.g., Annexes 93A and 178A). And it’s verifiable: open example code and published channels shrink the gap between compliance and system bring-up. Looking ahead, the pressures are familiar: denser packages, heavier loss at higher Nyquist frequencies, more aggressive equalization, and tighter power budgets. COM’s core idea, evaluating channels in the space where receivers actually operate, remains the right abstraction. It turns negotiation into engineering, replacing guesswork with a metric both sides can build to.

See the full Samtec whitepaper here.
