Almost every week I read about a slowing world economy, yet in EDA we have some bright spots to talk about, like Tanner EDA finishing its 24th year with an 8% increase in revenue. More details are in the press release from today.

I spoke with Greg Lebsack, President of Tanner EDA, on Monday to ask how they are growing. Greg has been with the company for 2 1/2 years now and came from a software business background. During the past 12 months they’ve been able to serve a broad list of design customers, across all regions, with no single account dominating the growth. Our previous meeting was at DAC three months ago, where I got an update on their tools and process design kits.

Annual Highlights

Highlights for the year ending in May 2011 are:

- 139 new customers

- HiPer Silicon suite of analog IC design tools increasingly being used for sensors, imagers, medical, power management and analog IP

- Version 16 demonstrated at DAC (read my blog from DAC for more details)

- New analog PDKs added for Dongbu HiTek at 180nm and for TowerJazz at 180nm (power management)

- Integration between HiPer Silicon and Berkeley DA tools (Analog Fast SPICE)

Why the Growth?

I see several factors driving Tanner EDA's growth: a standardized IC database, SPICE integration, analog PDKs and a market-driven approach.

Standardized IC Database

While many of their users run exclusively on Tanner EDA’s analog design suite (HiPer Silicon), their tools can co-exist in a Cadence flow because version 16 (previewed at DAC) uses the OpenAccess database. This is an important point because you want to save time by using a common database instead of importing and exporting, which may lose valuable design intent. Gone are the days of proprietary IC design databases that locked EDA users into a single vendor. Instead, the trend is towards standards-based design data where multiple EDA tools can be combined into a flow that works.

SPICE Integration

Analog Fast SPICE is a term coined by Berkeley Design Automation years ago as they created a new category of SPICE circuit simulators that fit between SPICE and Fast SPICE tools. By working together with Tanner EDA we get a flow that uses the HiPer Silicon tools shown above with a fast and accurate SPICE simulator called AFS Circuit Simulator (see the SPICE wiki page for comparisons). Common customers often drive formal integration plans like this one. I see Tanner EDA users opting for the AFS Circuit Simulator on post-layout simulations where they can experience the benefits of higher capacity.

Analog PDKs

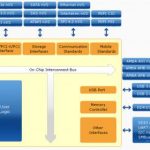

Unlike digital PDKs grabbing the headlines at 28nm the analog world has PDKs that are economical at 180nm as shown in the following technology roadmap for Dongbu HiTek, an analog foundry located in South Korea:

Another standardization trend adopted by Tanner EDA is the Interoperable PDK movement, known as iPDK. Instead of using proprietary models in their PDKs (like Cadence with SKILL), this group has standardized in order to reduce development costs. In January 2011 I blogged about how Tanner EDA and TowerJazz are using an iPDK at the 180nm node.

Market Driven Approach

I’ve worked at companies driven by engineering, sales and marketing. I’d say that Tanner EDA is now more market-driven, meaning that they are focused on serving a handful of well-defined markets like analog, AMS and MEMS. In the early years I saw Tanner EDA as being primarily engineering-driven, which is a good place to start out.

Summary

Only a handful of companies survive for 24 years in the EDA industry, and Tanner happens to be in that distinguished group. Because they are focused and executing well, we see them in growth mode even in a down economy.