From our book “Mobile Unleashed”, this is a detailed history of Samsung’s semiconductor business:

Conglomerates are the antithesis of focus, and Samsung is the quintessential chaebol. From humble beginnings in 1938 as a food exporter, Samsung endured the turmoil and aftermath of two major wars while diversifying and expanding. Its early businesses included sugar refining, construction, textiles, insurance, retail, and other lines mostly under the Cheil and Samsung names.

Today, Samsung is a global leader in semiconductors, solid-state drives, mobile devices, computers, TVs, Blu-ray players, audio components, major home appliances, and more. Hardly an overnight success in technology, Samsung went years before discovering the virtues of quality, design, and innovation. The road from follower to leader was long and rocky.

Considering All Aspects

The first Korean consumer electronics firm was not Samsung, but another well-known name with non-electronic roots. GoldStar, the forerunner of LG, was formed in 1958 as a new line of business for the Lucky Chemical Industrial Corporation. They built a tube-based radio in 1959, a portable transistor radio in 1960, and a black and white TV in 1966 – all Korean firsts.

On the heels of success in other Asian nations, foreign electronics companies sought to tap the productive, low-cost Korean labor pool. With help from the US, the Korea Institute of Science and Technology (KIST) was chartered in May 1965. The government pushed foreign direct investment via joint ventures. Transistor assembly and test facilities sprang up, starting with Komi in 1965, followed by Fairchild and Signetics in 1966, and Motorola in 1967.

Electronics professor Dr. Kim Wan-Hee from Columbia University visited Korea in August 1967 to provide advice on accelerating domestic capability. His initial observation was an overall lack of clean room technology, indicating a dire need for investment on a much broader scale. Dr. Kim began urging Korean firms, among them Samsung, to join the electronics push.

Bidding to industrialize the mostly agricultural South Korea, the government designated electronics as one of six strategic export industries. They passed the Electronics Promotion Act, which took effect in January 1969 with subsidies and export stimulation measures intended to draw in more industry participants.

The time was finally right. Samsung founder and Chairman Lee Byung-Chull gathered his leadership team at the end of December 1968 to decide on a new field of business to enter, and a name. Samsung Electronics Corporation started on January 13, 1969. “Electronics is the most suitable industry for [South Korea’s] economic development stage considering all aspects including technology, labor force, added value, domestic demand, and export prospects,” said Lee.

Even before formally incorporating, Samsung had signed a joint venture agreement with Sanyo Electric in November 1968. A second joint venture deal was completed with NEC in September 1969. Many of the 137 new Samsung Electronics employees, based at a new manufacturing facility in Suwon, traveled to Japan to learn the art of TV and vacuum tube production.

By November of 1970, Samsung Electronics produced its first vacuum tubes and 12” black and white TVs, based on the designs of their Japanese partners. Samsung TVs evolved for the next few years, including a 19” transistor-based black and white model in 1973. (At the time, Korean domestic TV broadcasts were in black and white; color TVs were outlawed. Japanese TV imports were blocked – except those made through the joint ventures.) Lines of white goods, including refrigerators, air conditioners, and washing machines, debuted in 1974.

From the start, Samsung’s strategy in consumer electronics was vertical integration – and a new capability was about to fall into their hands.

Kang Ki-Dong earned a Ph.D. at The Ohio State University in 1962 and went to work for Motorola in Phoenix, Arizona, at one of the largest discrete transistor plants in the world. As a Korean engineer who also spoke Japanese, he gave many plant tours to visiting engineers, building a network. When the time came to open a Motorola facility in Korea, Kang was sent ahead to perform an initial assessment for land and contacts, including interviewing prospective engineering staff.

After returning to the US and working for another firm, Kang encountered two old friends. The first was Kim Chu-Han, a prominent radio network operator Kang had introduced to ham radio years earlier. The second was a classmate, Harry Cho, an operations manager in semiconductors. Kim had access to financing, Cho could sell, and Kang knew fab technology. Together, the three formulated an idea for a new company: Integrated Circuit International, Incorporated, or ICII.

ICII designed a digital watch chip and made its first 5 micron CMOS large-scale integration (LSI) parts on a small 3” line in Sunnyvale, California during 1973. Demand was substantial, many times greater than what ICII could produce, and customers held back volume production orders. Kim’s firm was willing to finance expansion, but would only do so if the capital expenditure remained inside Korea.

Kang decided to pivot. He would continue ICII chip design operations in the US, but send the ICII fabrication line to Puchon and expand it there. The new joint venture was Hankook Semiconductor. However, the global oil crisis in 1974 and a litany of import red tape made the relocation much more expensive than planned. The fab finally came up and produced chips, but operating funds dwindled dangerously low within a few months.

There were only so many places to go for major technology financing in Korea. The economic climate was worsening, with oil prices continuing to skyrocket and the Japanese pulling back. Samsung had money. Lee Byung-Chull and his son, Lee Kun-Hee, were convinced they had to enter the semiconductor business, not just buy chips. They tried persuading their management teams that advanced chips such as those Hankook was building would be the future. Management balked.

Against such sage advice, on December 6, 1974, the Lees funded a stake in Hankook Semiconductor – using money from their own pockets. By the end of 1977, the operation was fully merged, becoming Samsung Semiconductor.

Developing DRAMs

Lee and his son understood one important concept that Kang and their own management team had missed: when it comes to chips, fab cap ex comes first, and if timed correctly, profits follow. Without sufficient fab capacity ready at the moment demand materializes, even the best chip designs cannot succeed. That lesson would repeat itself many times over as Samsung grew.

Meanwhile, happily producing appliances, watches, radios, and TVs, Samsung focused on efficiency. Its assembly plants became more automated, supplying goods to both Korean consumers and export trading partners. An indicator of progress: Samsung Electronics America opened in New Jersey in July 1978.

Research on semiconductors in Korea was booming. In 1976, the government-backed Korea Institute of Electronics Technology (KIET) opened a research center in Kumi. Among many activities, KIET set up a joint venture with VLSI Technology and built a VLSI wafer fab capable of producing 16K DRAMs by 1979.

The government tried to draw the chaebols – among them Daewoo, GoldStar, Hyundai, and Samsung – and their talent to Kumi, seeking to create a hub of VLSI fab expertise. Samsung was still focused on LSI technology feeding its own business with linear and digital components, and was fighting allocation battles in procuring those parts from foreign sources. To protect its supply chain, Samsung Electronics integrated Samsung Semiconductor on January 1, 1980.

KIET stopped short of 64K DRAMs for two reasons. The first was the 1981 Long-Term Semiconductor Industry Promotion Plan. Developed by the Korean government with KIET cooperation, it specifically targeted fabrication of memory chips for export. The second was that telecommunications assets were being privatized, providing immediate income for the chaebols to invest in semiconductors.

Daewoo, GoldStar, and Hyundai were all in on VLSI fab investments, but Samsung had extra incentive. In March 1983, they began producing a PC, the SPC-1000. Vertical integration goals made producing their own DRAM attractive. Samsung went to work. They had to improve processes from 5 to 2.5 micron, increase wafer size to 130mm, and gain key VLSI insight. A yearlong feasibility study began at their new Suwon semiconductor R&D center in January 1982.

With some VLSI knowledge in hand, in early 1983 Samsung set up an “outpost” in Santa Clara, California. Its primary objectives were competitive research and finding a licensor for DRAM technology; it would also serve as a recruiting and training center to add semiconductor talent from the US.

Finding companies to talk to was the easy part. Hitachi, Motorola, NEC, Texas Instruments, and Toshiba all rebuffed Samsung’s licensing request. (So much for relying on joint venture partners such as NEC.) The search came down to Micron Technology, which agreed to license its 64K DRAM design in June 1983 after an assembly pilot. Also licensed was a high-speed MOS process from Zytrex.

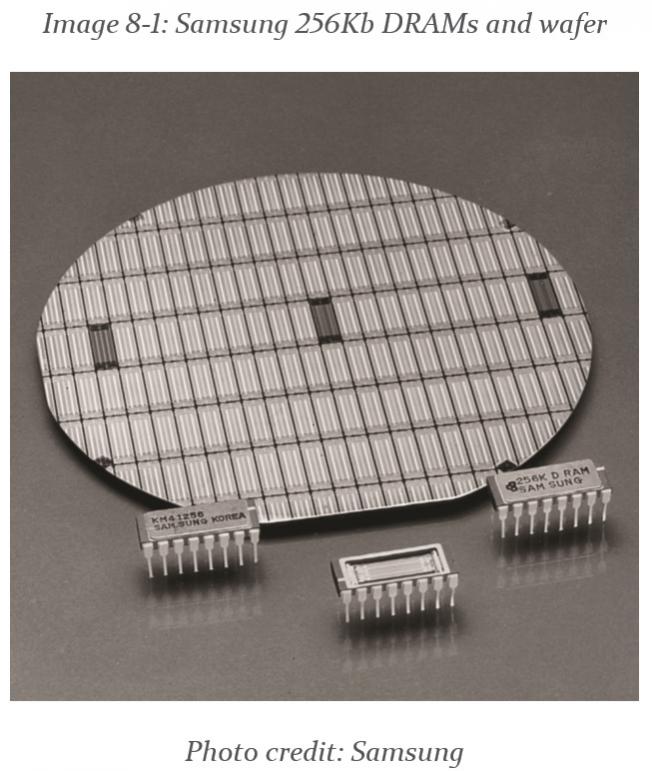

Samsung went from zero to a 64Kb DRAM in six months, sampling fabbed parts in November 1983. They then fabbed a Micron 256Kb DRAM design by October 1984. To break free from licensing fees, the Samsung Santa Clara research team designed a 256Kb DRAM through reverse engineering, sampling an all-new part in July 1985. Volume 256Kb DRAM production started in a new facility in Giheung on a 2 micron process.

These were the first steps along a path of generational breakthroughs that made Samsung the largest producer of memory chips in the world by 1993 – ten years after sampling their first DRAM part. This set the stage for other advances in VLSI chip fabrication that would be critical for the next consumer device initiative.

Mountains in the Way

As in most other markets, the mobile craze in Korea started with car phones. Korea Mobile Telecommunications Services (KMTS, later to be known as SK Telecom) launched its 0G radio telephone network in April 1984, grabbing 2,658 subscribers by the end of the year.

Samsung began dabbling in mobile wireless R&D in 1983 with some forty engineers. Without much to go on except the KMTS 0G network, they spent considerable effort reverse engineering a Toshiba car phone. The resulting design was the Samsung SC-100 car phone produced in 1986. It was rife with quality issues, and the R&D team was chopped to just ten members, left wondering what to do next. That was probably a blessing, because it inspired team leader Lee Ki-Tae to buy ten Motorola cellular phones for benchmarking.

KMTS was upgrading their network to 1G cellular, with an AMPS infrastructure rollout that would be ready to launch by July 1988 – just in time for the Seoul 1988 Summer Olympic Games. The schedule gave Samsung plenty of time to dissect the Motorola designs and learn about AMPS before having their next phone attempt ready.

Chairman Lee Byung-Chull would not see the Olympic festivities. Lee Kun-Hee assumed control of the firm on December 1, 1987, barely two weeks after his father’s passing. At first, it was business as usual – running a large corporation took precedence. The infant cell phone division went mostly unnoticed.

The Samsung SH-100 handheld 1G phone debuted in 1988. It sold fewer than 2000 units, mostly to VIPs, and it too suffered in quality. This cycle repeated several times over the next few years: new Samsung phone, similar lack of quality, and similarly disappointing sales measuring in the few thousands.

Samsung had run head on into the dark art of radio frequency engineering.

Major manufacturing and design issues early on allowed consumer doubt and competition to creep in. Motorola, then the gold standard for quality and mobile phone design, took over half the Korean mobile phone market while Samsung scrapped for mere percentage points in share on their home turf.

When it comes to RF technology, reverse engineering can only tell a team so much. Reproducing a receiver, such as a radio or TV, is an easier but still challenging task. Creating a sophisticated transmit and receive device like an analog 1G AMPS phone, especially with miniaturization techniques applied, is a different matter. Manufacturing quality concerns likely stemmed from a combination of componentry, layout, assembly, and tuning methods.

However, the biggest problem Samsung faced was a serious design flaw. Legend has it that while on a hike in the mountains, a Samsung employee saw another hiker placing a call on a Motorola phone. Sure enough, the Samsung phone the employee was carrying would not connect from that same spot. Whether the anecdote is accurate or slightly embellished, the scenario described held an important clue: two-thirds of Korea’s topography is mountainous.

R&D engineers had failed to account for an RF effect called multipath, where a signal reflects off terrain and buildings, and several versions arrive at the receiver at slightly different times in varying strength. Multipath was the root cause of many Samsung call quality issues. Solving the problem meant redesigning handset antennas, improving the physical connection between the antenna and circuit board, and enhancing signal discrimination.
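Multipath can be illustrated with a simplified narrowband model. The sketch below is an illustration only, not Samsung's engineering analysis: it assumes a single unmodulated carrier (870 MHz is a hypothetical value chosen from the AMPS downlink band), models each path only by its length and a relative gain, and sums the arriving copies as complex phasors. The function name and all numbers are made up for the example. When a reflected copy travels half a wavelength farther than the direct signal, the two largely cancel; when it travels a full wavelength farther, they reinforce.

```python
import cmath
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def received_amplitude(freq_hz, path_lengths_m, gains):
    """Amplitude of the sum of several copies of one carrier (narrowband model).

    Each path contributes gain * exp(-j * 2*pi * f * delay), where
    delay = path length / c. Only the relative phases of the copies
    affect the magnitude of the sum.
    """
    total = 0j
    for length, gain in zip(path_lengths_m, gains):
        delay_s = length / SPEED_OF_LIGHT
        total += gain * cmath.exp(-2j * math.pi * freq_hz * delay_s)
    return abs(total)


if __name__ == "__main__":
    freq = 870e6  # hypothetical carrier in the AMPS downlink band
    wavelength = SPEED_OF_LIGHT / freq  # roughly 0.345 m
    direct = 1000.0  # illustrative direct path length in metres

    # Direct path alone
    print(round(received_amplitude(freq, [direct], [1.0]), 3))  # → 1.0
    # Reflection at 80% strength, half a wavelength longer: destructive
    print(round(received_amplitude(freq, [direct, direct + wavelength / 2], [1.0, 0.8]), 3))  # → 0.2
    # Same reflection, one full wavelength longer: constructive
    print(round(received_amplitude(freq, [direct, direct + wavelength], [1.0, 0.8]), 3))  # → 1.8
```

Because a wavelength at these frequencies is only about 34 cm, moving a handset a short distance can shift the received level between these extremes. Real terrain produces many reflections at once, and their mix changes as the user moves, so the signal fades up and down rather than settling at one value.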

New Management, Fired Up

The struggles of the cell phone operation and growing concern with Samsung Electronics consumer goods businesses had come to Lee Kun-Hee’s attention. While visiting Los Angeles in early 1993, Lee noted the sad state of Samsung products tucked away on back shelves at an electronics retailer. Unsatisfied with a reputation as a “knockoff” supplier, Lee began asking questions.

The strategy of low cost, mass production, and reverse engineering that had served Samsung well in the 1970s and 1980s was limiting Samsung’s competitiveness on a global scale in the 1990s. Developing Asian nations were entering the fray as even lower cost producers of electronics. Lee was carefully observing the Japanese response to the situation, and watching consumer electronics shift from analog to digital technology.

Sensing the urgency, Lee went outside for help. One of the people he called on was advisor Tamio Fukuda, who went on to become a professor at the Kyoto Institute of Technology. From a set of inquiries developed by Lee and his senior staff, Fukuda prepared a comprehensive response – now known as the Fukuda Report. On June 4, 1993, Fukuda presented his thoughts.

When asked what design is, Fukuda wrote: “[Design] is not simply creating a form or color of the product, but rather forming or tapping cultural activities to create a new kind of user lifestyle by increasing added value, starting from the study of the convenience of the product.”

The report affirmed what Lee had already decided to do. Days later at a larger gathering of Samsung executives in Frankfurt, Lee rolled out his New Management initiative. Dislodging employees from ingrained practices would not be easy. Lee issued workers the famous challenge: “Change everything except your wife and children.” He then detailed a sweeping set of reforms, including a mandate that all workers report two hours early each day.

Edicts to the cell phone division got very specific. “Produce mobile phones comparable to Motorola’s by 1994 or Samsung will disengage itself from the mobile phone business.” The days of reverse engineering were over. Samsung engineers had a head start on the signal quality problems, and furiously explored every other facet of design from weight to strength.

In November 1993, they presented their product: the SH-700. It was a candy-bar phone, light at 100g, with an all-new mountain-tested antenna. Lee was given a model to try – and immediately threw it on the ground and stepped on it. He then picked it up, made a call, and to everyone’s relief it worked. Designers had placed special pillars into the plastic case and circuit board to withstand typical abuse such as being sat on, or stepped on.

There was more to design than just engineering. A new marketing campaign debuted: “Strong in Korea’s unique topography,” a reference to the phone actually making calls in the mountains. The SH-700 initially sold 6,000 units a month, and by April 1994 was selling 16,000 units a month. The follow-on SH-770 Anycall was introduced in October 1994, enhancing the brand. By mid-1995, Samsung displaced Motorola as the cell phone market share leader in Korea.

What most consumers didn’t see, but Samsung employees felt, was the real quality story. Individual phone screening at the factory reduced problems before shipment, but a massive bone pile of dead phones developed. Lee sent some of the first SH-770s as holiday gifts, getting back reports of some of them not working. Embarrassed by defective phones escaping to customers, he investigated further – and discovered the bone pile.

In March 1995, Lee visited the Gumi facility where the SH-770 was manufactured. Two thousand employees were invited to a rally in the courtyard, complete with “Quality First” headbands for all. Under a “Quality is My Pride” banner was the bone pile with phones and fax machines from the plant – some say numbering 150,000 units. A handful of workers smashed the defective devices with hammers, threw them into a bonfire, and bulldozed the ashes. Many of those who saw the spectacle wept openly. It was a lesson never forgotten.

Finding Digital Footing

Design teams across Samsung got a message too: get digital capability into products as fast as possible. The push was on three technologies now taken for granted in mobile devices: DRAM, flash, and CDMA.

Samsung had caught up with the pack in DRAM. With massive fab investments of $500M or more for five consecutive years to get running on 200mm wafers, Samsung vaulted over Toshiba to become the global DRAM market share leader in 1993. Heavy R&D was lining up for the next major step, a 256Mb DRAM that would put them ahead of the Japanese technology giants for the first time.

Toshiba had developed a new technology – NAND flash memory – but was losing ground quickly to Intel, which was outproducing it on a NOR flash alternative (with different application characteristics). To close the capacity gap, Toshiba licensed its NAND flash design to Samsung in December 1992. Again, it was about a ten-year cycle from licensing to leadership – Samsung shipped its first NAND flash parts in 1994, and by the end of 2002 was the global market share leader at 54% in NAND flash.

Digitizing cell phones meant another large investment in new technology. Where Europe forged ahead with its GSM vision, and US carriers waffled between D-AMPS, GSM, and CDMA for 2G, Korea would make a bold move.

Dr. William C. Y. Lee, an integral part of AMPS development at Bell Labs, was deeply involved with a young company developing CDMA technology: Qualcomm. As chief scientist at Pacific Telesis, Lee led several CDMA network trial installations. It was through Lee that Qualcomm was introduced to the Korean government, where the Ministry of Communications (MoC) was seeking a way to help propel Korea onto the world telecom scene.

After rounds of complex negotiation, Qualcomm and Korea’s Electronics and Telecommunications Research Institute (ETRI) reached a joint technology development agreement for CDMA infrastructure in May 1991.

On the surface, the CDMA choice seemed simple. Among its many technical advantages was better subscriber capacity achieved with fewer cell towers compared to TDMA systems like D-AMPS or GSM, meaning lower infrastructure rollout costs. However, the technology was unproven in a large-scale deployment, and Qualcomm owned it, requiring royalties.

The economic side effects were more compelling. Selecting CDMA would effectively lock out both foreign infrastructure suppliers and handset vendors, buying Korean manufacturers time to develop a unique solution with Qualcomm. If CDMA were adopted elsewhere, Korean firms could profit in exports. Four Korean electronics manufacturers – Hyundai, LG, Maxon Electronics, and Samsung – were recruited for the effort, targeting a 1996 launch.

It was a huge win for Qualcomm, which would initially provide the chipsets for CDMA phones and infrastructure throughout Korea. In a unique arrangement, Korean manufacturers paid a percentage of their handset selling price as a royalty, and Qualcomm helped fund further joint development efforts with ETRI. SK Telecom IS-95A CDMA service was ready in Seoul in January 1996. Just over a year later, one million subscribers would be using CDMA across Korea.

Among the first CDMA phones in Korea was the Samsung SCH-100, a 175g candy bar handset released in March 1996 with an early Qualcomm MSM chipset. (The beginnings of the Qualcomm MSM family were not ARM-based; Qualcomm didn’t have an ARM license until 1998. More ahead in Chapter 9.) Exports of a modified SCH-1011 phone to Sprint in the US started in June 1997.

CDMA did gain acceptance in many regions. Samsung quickly garnered 55 percent of the global CDMA handset market by the end of 1997. They were beginning an envelopment strategy in mobile, working on many phone models at once for the home market and for various export markets. Some of the phones appearing in 1998: the SCH-800 CDMA flip phone with SMS messaging, the SPH-4100 PCS phone setting a new lightweight mark of 98g, and the SGH-600 GSM phone that helped European exports.

An Unprecedented ARM License

The pace was also picking up in ASIC design. In May 1994, Samsung paid a substantial sum for an ARM6 and ARM7 license – accompanied by consulting efforts from ARM. One of the first products to receive an ARM-based chip was in a new category: the Samsung DVD-860. Released in November 1996, the DVD player contained four Samsung-developed ASICs, beating major firms including Toshiba, Matsushita, and Pioneer to market.

At Hot Chips 1996, Samsung presented what was likely the basis of one of those ASICs: the MSP-1 Multimedia Signal Processor. It combined an ARM7 core with a proprietary 256-bit vector co-processor, used for real-time MPEG video decoding, and 10K of free gates for customization. The MSP-1 was fabbed in 0.5 micron CMOS, came in either a 128-pin package or an extended 256-pin package with a frame buffer memory bus, and consumed 4W.

Extending its ASIC efforts into phones, Samsung licensed the ARM7TDMI core in September 1996. By late 1998, Samsung had a CDMA chipset ready for internal use, appearing first in the Samsung SCH-810 CDMA phone in early 1999 paired with a Conexant Topaz chipset for the CDMA baseband. In February 1999, Samsung took an ARM9TDMI core and ARM920T processor license, preparing parts on a new 0.25 micron process running at over 150 MHz.

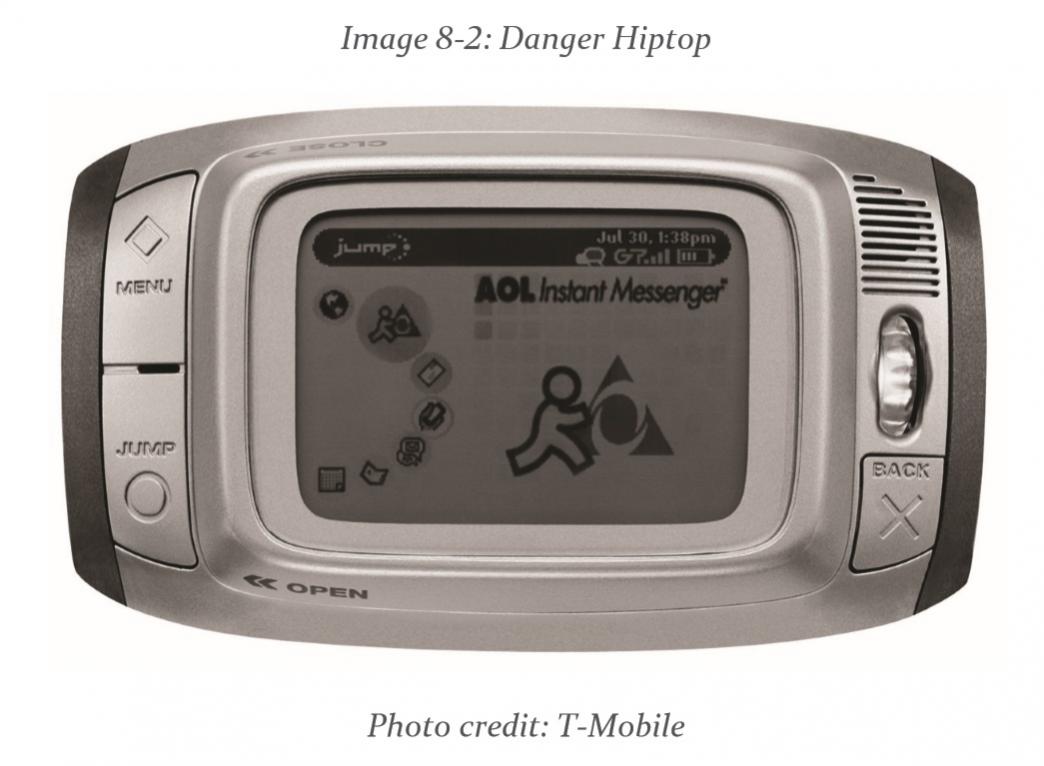

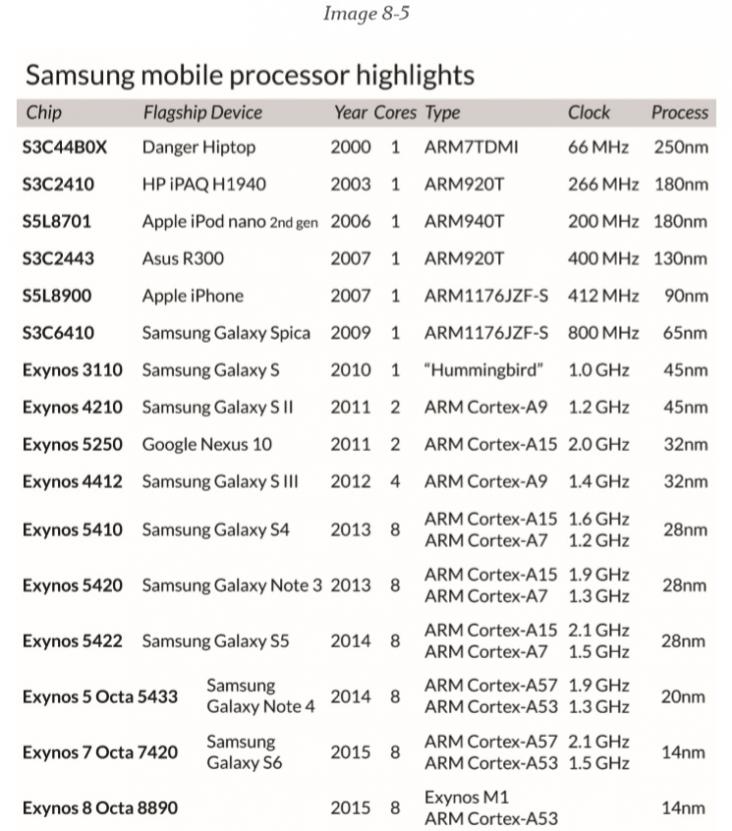

The first big merchant SoC hit for Samsung came in 2000 with the S3C44B0X, a 66 MHz ARM7TDMI-based part designed into the Danger Hiptop – created by Andy Rubin. That chance encounter between Samsung and Rubin would loom large several years later and prove to be a turning point for the entire mobile industry. The S3C44B0X was fabbed in 0.25 micron, in a 160-pin package.

In July 2002, ARM and Samsung announced a comprehensive long-term licensing agreement. It gave Samsung full access to all current and future ARM IP for the unspecified duration of the agreement – what one industry observer termed the “all-you-can-eat” license. The unprecedented agreement moved Samsung from just another ARM customer into a partner developing the future direction of ARM roadmaps.

At the Microprocessor Forum in October 2002, Samsung showcased just how much impact they could have. The stock ARM1020E core shipped from ARM was designed for a 0.13 micron process running at 325 MHz. Using techniques borrowed from the Alpha microprocessor, which Samsung had taken over fabbing late in that chip’s life, Samsung reengineered the ARM1020E into the “Halla” core. Halla was still on 0.13 micron, but ran at up to 1.2 GHz, becoming the first ARM-based design to break the 1 GHz barrier.

Samsung had assembled a stunning array of semiconductor technology for mobile devices: DRAM and flash, ARM processor cores and ASICs, and miniaturized LCD panel capability derived from larger efforts for TVs and monitors – all from its own fab facilities. In what was becoming Samsung’s modus operandi, they applied their portfolio and expertise in an attempt to envelop two emerging mobile application segments: music players, and the smartphone.

For a Few Flash Chips More

Commodity semiconductors are a tricky business. Insufficient capacity means competitors win sockets with better pricing and shorter lead times. Too much capacity leads to a drag on costs as expensive equipment sits idle, or worse yet layoffs occur and facilities close. Once major new fab capacity comes on line, demand has to be stimulated to fill it. Many times, long-term deals are cut with customers to lock in forecasts and pricing.

The vertical integration strategy Samsung deployed was initially a response to being on the tail end of the supply chain. If parts were scarce, production lines for consumer goods could halt. Paying too much for parts hurt competitiveness. Samsung’s own semiconductor fabs ensured its electronics factories kept running.

As Samsung’s DRAM offerings improved, exports boomed. Profits were plowed back into fab capacity and R&D, even in economic downturns, to increase market share and outpace competitive technology advances. Flash memory was an extension of the success, another way to utilize wafer starts.

Lee Kun-Hee was operating in a very different competitive environment from what his father experienced. His passion for quality, branding, and design stemmed from understanding that success in electronics would mean opening new markets and then creating new and exciting application segments. Competing in both semiconductor technology and finished consumer goods meant competing with Samsung’s own customers – or suppliers – in many cases.

With the DVD player off and running, Samsung was again looking for a new consumer application segment for digital technology. 1998 brought the flash-based MP3 player, with the SaeHan MPMan and the Diamond Multimedia Rio PMP300 hitting the market. The Rio PMP300 came with 32 MB of internal flash and a more aggressive $200 price tag, and sold well – too well. It drew the wrath of the RIAA as the mobile device, along with Napster, that was enabling copyright infringement on a mass scale.

Samsung smelled flash sales and dove in anyway. At CES 1999, they introduced the Yepp brand of flash-based MP3 player. There was an ominous footnote in the press release indicating they were seeking “official approval from the RIAA,” with agreement to participate in the SDMI initiative. Yepp would become a sprawling MP3 product line with many models and variants. After 2003, the Yepp brand was retired outside Korea, but new models continued under Samsung branding until 2013.

A few months after the Yepp introduction came another category breaker in August 1999. The Samsung SPH-M2100 was a PCS phone with an integrated MP3 player and 16 or 32MB of flash – the first phone with an MP3 player. (The Samsung Uproar, or SPH-M100 introduced later in November 2000, usually gets that credit; it was exported to Sprint and got far more visibility.)

Curiously, Samsung went with a non-ARM architecture for early MP3 players. Most players had an 8-bit MCU for the user interface, a DSP for the MP3 decoding, flash to store the MP3 files, and a USB chip for connecting to a PC. In August 2001, Samsung announced a new chipset called the CalmRISC Portable Audio Device (C-PAD) for MP3 player OEMs and for use in its own Yepp lines. The single-chip S3FB42F combined a CalmRISC 8-bit MCU with a 24-bit DSP.

On October 23, 2001, Apple changed almost everything about music players. Given the timing, the Samsung S3FB42F was probably not one of the nine MP3 chips Apple evaluated for the iPod. Once word got out about the PortalPlayer PP5002 being in the iPod, the S3FB42F and 8-bit chips like it were old news.

New companies flowed into the MP3 player market, including iriver, formed by ex-Samsung personnel. A big barrier fell when SDMI went on indefinite hiatus, killed by a combination of concerns ranging from sound quality to a controversial cracking of its encryption mechanism. Even Samsung eventually turned to PortalPlayer; the PP5020 appeared inside the YH820 at CES 2005.

Coincidentally, January 2005 was the same time Apple decided to get into flash-based players with the iPod Shuffle. That threw more demand for NAND flash into a tight market – and there was a lot more demand coming shortly, if someone could assure Apple of supply.

It sounds odd to characterize a $20B market as “tight”, but indeed flash producers were having trouble keeping up in 2005. After the 2001 dotcom downturn, the overall flash market – NAND plus NOR – doubled in three years. Like other DRAM producers, Samsung was shifting capacity toward flash to capture higher selling prices and margins while filling demand. They moved so much to the flash side, flash average selling prices (ASPs) fell under DRAM ASPs for the first time, and it was looking like 2006 might be an overcapacity year.

The wildcard was Apple. Samsung controlled nearly a third of the flash market, and could boost its share and expand further. Apple was preparing to launch the iPod nano in September 2005, with up to 4GB of flash in each unit. One analyst estimated the nano introduction, by itself, could increase the worldwide flash market some 22%. To win the Apple business, Samsung pledged 40% of its NAND flash fab capacity at a 30% discount. Apple covered its bases, saying they had supply agreements with Hynix, Intel, Micron, Samsung, and Toshiba for five years of NAND flash. The bulk of their supply came from Samsung.

When elephants make deals, it is the grass that suffers. Flash was finally cheap, if one could get it. Apple got all it wanted, but hundreds of smaller MP3 flash-based player manufacturers worldwide suddenly found themselves with enormous lead times for flash – or no allocation at all. Many of these firms simply disappeared, unable to ship product to meet increasing demand.

Capacity would catch up, but it would take a few years. Samsung immediately kicked off expansion at its Hwaseong complex for more DRAM and flash capacity in a seven-year, $33B cap ex program. Another expansion was coming soon, deep in the heart of Texas, and it would lead to even bigger deals.

Smartphone Sampler

Meanwhile, Samsung started pumping out smartphones everywhere, in a myriad of styles and configurations, hot on the trail of competitors. Nokia was firmly behind Symbian, for better or worse. Research in Motion was on its own operating system platform, and Apple would develop their own as well.

At the other end of the spectrum, Motorola at one point had 20 different phone operating systems in various stages of development. Similarly, Samsung spent the period from 2001 to mid-2009 wandering between several operating system options. A quick look at some of their more significant efforts during this period shows just how broad their smartphone – or “mobile intelligent terminal” as they called it in the beginning – portfolio became.

The darling of the early smartphone era was Palm OS, and for a while, Sprint was very enamored with it. The Samsung SPH-i300 released in late 2001 ran Palm OS 3.5 on a 33 MHz Motorola DragonBall 68328 processor. In 2003, the SPH-i500 introduced a sleeker clamshell design running Palm OS 4.1 on a 66 MHz DragonBall 68328. The updated SPH-i550 had Palm OS 5.2, featuring a 200 MHz Motorola DragonBall MX1 and its ARM920T core. After delays, it was finally ready in early 2005, but Samsung was suddenly jilted right before shipping. Sprint cancelled the phone, going with the Palm Treo 650 instead. With Palm about to lose all momentum, Samsung shifted resources and the Palm OS phase was over.

Next up was Microsoft Windows Mobile. In November 2003, Samsung and Verizon teamed up on the SCH-i600. It used almost the same clamshell exterior as its Palm-based counterpart, the SPH-i500. Inside was a 200 MHz Intel XScale PXA255 supporting Windows Mobile 2003. June 2005 brought the all-new SCH-i730, with a slider physical keyboard design and a much faster Intel XScale PXA272 running at 520 MHz.

The Motorola Q was announced a month later with Windows Mobile 5.0. The nearly yearlong FCC delays in shipping the Q gave Samsung an opportunity to catch up. The controversial new phone introduced in late 2006 was the Samsung SGH-i607, better known as the BlackJack, which drew a swift legal response from Research In Motion for camping near the BlackBerry name. Both the Q and the BlackJack featured a thumb-board layout eerily similar to that of RIM phones. Samsung’s processor choice was the TI OMAP1710, with a 220 MHz ARM926EJ-S core. Alongside it was the Qualcomm MSM6275 baseband chip with another ARM926EJ-S core.

Windows Mobile 6.1 moved to an all-touchscreen metaphor for its user interface, typified by the Samsung i900 Omnia in June 2008. Most of its variants had the Marvell PXA312 with an XScale core at 624 MHz. By this time, users were comparing every smartphone against the Apple iPhone. To create a distinct look and feel, Samsung introduced the TouchWiz UI on the i900 Omnia, but its integration with apps was questionable in many cases. Samsung has continued efforts into Windows Phone 7 and beyond under the Omnia brand.

Then, there was Symbian. Samsung tested the waters with the SGH-D700, a Symbian flip phone sporting an unusual camcorder-style rotating screen and lens, demonstrated at a special event in September 2003 but never launched. The first Symbian phone released by Samsung was the SGH-D710 in May 2004. It was small, measuring 51x101x24mm and weighing 110g, and its 192 MHz TI OMAP5910 with an ARM925 core ran Symbian OS 7.0s. That chipset served for several years, through the Samsung SGH-Z600 flip phone with Symbian OS 8.1 in March 2006.

For Symbian OS 9.2, Samsung went to the TI OMAP2430, with an ARM1136 core at 330 MHz. The slider SGH-i520 was the first Samsung release in April 2007, followed by the SGH-i550 that borrowed the BlackJack look in May 2008, and the GT-i8510 large-screen slider in October 2008. The last of the Samsung Symbian phones was the GT-i8910, with two variants running Symbian OS 9.4 released in mid-2009. These ran on a 600 MHz TI OMAP3430 and its Cortex-A8 processor core.

During this period, Samsung had seven phones that sold over 10 million units each. None ran on these three operating systems – instead, it was a homebrew J2ME platform doing most of the selling.

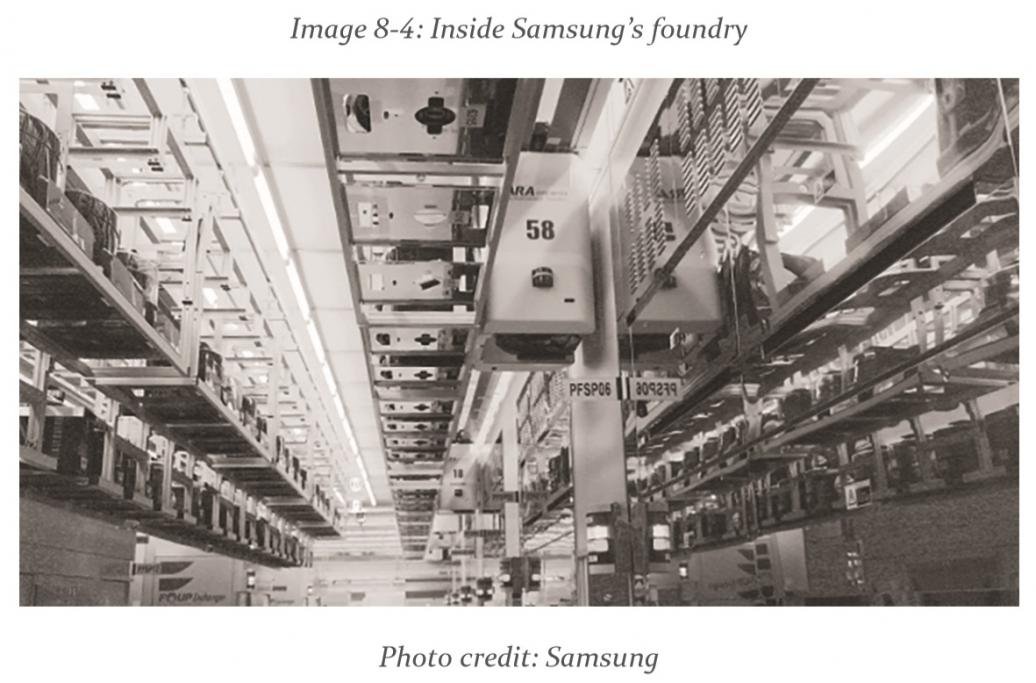

Your Loss is Our Foundry

Perhaps Samsung-branded smartphones hadn’t done all that well early on, but Samsung was definitely a lynchpin in launching the smartphone revolution. Apple’s immediate smash hit with the iPhone was a direct result of a buildup of fab capability at Samsung over nearly ten years. Prudent investment in multiple areas was about to pay off in a big way. The first fab Samsung established outside of Korea was in Austin, Texas. With an opening investment of $1.3B, the 200mm Fab 1 began sampling memory chips in 1997, moving to full-scale production in 1998. Part of the fab cost was offset by an equity investment from Intel, widely believed to be 10%, in return for guaranteed allocation of memory products.

Logic products were also growing. After licensing ARM9 cores in 1999, Samsung scored big wins in 2003. A Samsung chip was powering pocket PCs including the HP iPAQ H1940, the Everex E500, and later the Acer n30, all running Microsoft Windows Mobile. Inside was the S3C2410, a 266 MHz ARM920T processor fabbed on 0.18 micron, in a 272-pin BGA. The S3C2410 even showed up in the HP 49g+ graphing calculator, underclocked to save power. Another important part in this family was the S3C2443 in 2007, running at 533 MHz on 0.13 micron in a 400-pin package, which found design wins in portable navigation devices like the Asus R300 and the LG LN800.

Pushing its process further, Samsung opened its 300mm S1 line in Giheung in mid-2005, establishing production of DRAM on a 90nm process by the end of the year. To develop the next 65nm process node, estimated to cost $5B or more, Samsung allied with Chartered Semiconductor and IBM in the Common Platform initiative. IBM piloted a 65nm process at its Fishkill, New York, facility, and Samsung was preparing to roll it out at Giheung in early 2006.

With advanced fab capacity in the works, Samsung quietly began socializing its foundry capability – supporting fabless semiconductor firms with fabrication services. In November 2005, they announced their first major foundry customer: Qualcomm. In March 2006, they added industry veteran Ana Molnar Hunter (fresh off experience at Chartered) to run the foundry business. In April 2006, Samsung announced plans for Fab A2 at its Austin complex, adding a 300mm line in a new larger building.

Samsung VP Jon Kang then walked into the SEMI Strategic Business Conference in Napa, California, on April 26, 2006 and spouted, “I knew PortalPlayer would take a dive.” His bravado stemmed from a new SoC, the Samsung S5L8701, slated for future Apple iPod designs. The new chip carried an ARM940T processor and a DSP core on a conservative 0.18 micron process. Perhaps most importantly, it integrated a flash controller as well as LCD and USB controllers, reducing power consumption and cost.

That was where PortalPlayer lost. True, Samsung had created a higher-performance, low-power ARM9 chip for Apple. Using its fab might, Samsung then bundled everything together – SoC, DRAM, and flash – and applied forward pricing plus allocation commitments. PortalPlayer was late to update its chip, leaving it vulnerable. Realistically, it never stood a chance at Apple in the face of a combined supply chain and system design onslaught.

An erroneous analyst report in late 2006 had NVIDIA with its new PortalPlayer acquisition winning back iPod designs and perhaps the rumored Apple phone. That never happened. Apple moved beyond simply buying SoCs into specifying RTL designs, with the Samsung foundry doing the rest. Starting the sequence, the 90nm S5L8900 with its ARM11 core shipped in the original iPhone in June 2007 and the iPod Touch in September 2007.

What appeared as a sudden disruptive change in Samsung’s favor was in fact the result of nearly a decade of planning and a wide-ranging chain of events. Samsung was about to stretch the limits of its complex ecosystem even further.

On Second Thought, Android Works

The late 2004 meeting between Andy Rubin and Samsung executives in Seoul was no random event. Rubin had been a Samsung customer with some success at Danger. Samsung was working with the three most prominent smartphone operating systems of the day, so they were a logical place to seek venture capital for another operating system, what would become Android. At that point, Samsung had no idea that most of their existing operating system choices would turn sour.

Rubin threw everything he had at the room, and got silence back. From Samsung’s perspective, it was all risk: How many people do you have working on this operating system? Six. How many phones have shipped with it? None. How many OEMs are committed as customers? None. Thanks for coming.

Things started to change while Samsung executed its original three-pronged software strategy. Google bought Android. Palm OS sank. Research In Motion didn’t share its code with anyone, for any price. Nokia wore its Symbian albatross. Microsoft Windows Mobile had significant traction until Apple showed up with the iPhone and iOS.

Open source teaming arrangements, especially in contrast to Apple’s closed environment, became more and more interesting – and far less risky. In January 2007, the LiMo Foundation set up an effort for Linux-based mobile devices, with members including Motorola, NEC, NTT DoCoMo, Panasonic, and Samsung. In November 2007, the Open Handset Alliance unveiled its plans for Android phones, backed by Google, HTC, LG, Motorola, Qualcomm, and Samsung.

Motorola hired Sanjay Jha away from Qualcomm in August 2008, giving him the co-CEO title and oversight of the mobile device operation. Jha promptly ended the nonsense of far too many mobile operating systems. His strategy for mainstream Motorola smartphones was Android, with a bit of Windows Mobile and the proprietary J2ME P2K mixed into the portfolio.

By 2009, Samsung had also set its sights on Android and Windows Mobile, along with LiMo and the homegrown ‘bada’ operating system – later merged into Tizen.

The Samsung Galaxy GT-i7500 launched in June 2009. It measured 115x56x11.9mm and weighed 114g, with a 3.2” OLED touchscreen at 320×480 pixels. Running Android 1.5 was the Qualcomm MSM7200A, a 528 MHz part in 65nm. This single, highly integrated chip combined an ARM1136J-S processor and a Qualcomm DSP core for applications with an ARM926 core and another, smaller Qualcomm DSP core running the baseband.

That was just the beginning of what Dan Rowinski of ReadWrite called the Samsung “spray and pray” strategy. The approach launched many smartphone models under the Galaxy and Omnia umbrellas and other sub-brands, tailored to different carrier requirements (sometimes swapping SoCs or baseband chips), in every market across the globe where Samsung could qualify. Some sold well, some didn’t, but new models were always on the way shortly. This was key to enveloping Apple, which essentially launched one hugely successful phone per year until the iPhone 5s and iPhone 5c pair, followed by the iPhone 6 and iPhone 6 Plus duo.

Quickly heading for their own chips, Samsung launched many models on its S3C6410 SoC starting in 2009. This 65nm, 424-pin part ran at 800 MHz with an ARM1176JZF-S core and Samsung’s own FIMG-3DSE GPU core. In the Samsung GT-i5700 Galaxy Spica with Android 1.5 that shipped in November 2009, the S3C6410 was alongside the Qualcomm MSM6246 HSDPA baseband chip. The S3C6410 was also in the Samsung GT-i8000 Omnia II with Windows Mobile 6.1 that shipped in August 2009, with a slightly larger 3.7”, 480×800 pixel touchscreen display.

Exynos Takes Over the Galaxy

The flagship Samsung Galaxy S (GT-i9000) began shipping in June 2010 on Android 2.1, and within seven months became the first smartphone in the Samsung 10-million-seller club. Inside was the S5PC110 chip, rebranded as Exynos 3110 and later known as the Exynos 3 Single.

At a block diagram level, the overall similarity of the Exynos 3 Single to the Apple A4 (shipped in the iPhone 4 beginning the same month) is hard to overlook. Inside was the same 1 GHz Intrinsity Hummingbird core and a PowerVR SGX540 GPU at 200 MHz. It was fabbed in Samsung 45nm LP, and came in a 598-pin BGA. Matching Apple’s tablet move earlier in the year, Samsung announced the 7” Galaxy Tab (GT-P1000) in September 2010 using the same chip.

With the next step, Samsung beat Apple to the line. The Exynos 4 Dual debuted as the Exynos 4210 on February 15, 2011, fabbed in a Samsung 45nm LP process. The dual 1.2 GHz ARM Cortex-A9 cores gave a 20% processing edge. At that moment, the ARM Mali-400 MP4 GPU at 266 MHz put Samsung briefly ahead in smartphone graphics.

By May 2011, the Samsung Galaxy S II (GT-i9100) was ready to ship using the Exynos 4210, running Android 2.3. Its claim to fame was its thinness, checking in at only 8.49mm. The Exynos 4210 also powered the first of the Samsung “phablets”, the larger Galaxy Note (GT-N7000) measuring 147x83x9.65mm, and the Galaxy Tab 7.0 (GT-P7560), both launched in October 2011.

Also in October 2011, the Exynos 4212 moved to a new 32nm HKMG process – brought up and running at Austin Fab A2 – reducing power and raising the clock speed to 1.5 GHz.

First sampled in November 2011 was the Exynos 5 Dual, with two new ARM Cortex-A15 cores at 2.0 GHz, also on 32nm HKMG. The Exynos 5250 doubled memory bandwidth to 12.8 GB/sec and used the ARM Mali-T604 MP4 GPU clocked at 533 MHz. It would appear in the Samsung XE303C12 Chromebook and the Google Nexus 10 tablet.

Another new part showed up in 2011: a Samsung LTE baseband chip. First spotted in the Samsung Droid Charge for Verizon, the CMC220 provided the 4G Cat 3 connectivity. An enhanced CMC221 also appeared in the Galaxy Nexus.

Following in April 2012 was the Exynos 4 Quad, a 1.4 GHz Cortex-A9 quad core again in 32nm HKMG. To help with power consumption, the Exynos 4412 used power gating and per-core frequency and voltage scaling on all four cores. The same Mali-400 MP4 GPU from the dual version was clocked at 400 MHz. This processor and its slightly faster “Prime” version appeared in several versions of the Galaxy S III (GT-i9300) with Android 4.0 as well as the Galaxy Note II (GT-N7100). One of the more interesting attempts with this part was the EK-GC100 Galaxy Camera.

2013 marked the move to 28nm. Samsung chose the ARM big.LITTLE path, previewing the Exynos 5 Octa at CES 2013. The Exynos 5410 had four Cortex-A15 cores at 1.6 GHz paired with four Cortex-A7 cores at 1.2 GHz. In an unusual move, it used the Imagination PowerVR SGX544MP3 GPU running at up to 533 MHz in some cases, but often throttled back to 480 MHz to prevent overheating.

The Exynos 5410 powered the Galaxy S4 (GT-i9500), billed as a “life companion”, which shipped with Android 4.2 in April 2013. Its most prominent feature was an even bigger 5” Super AMOLED display at 441ppi. In its first month, the Galaxy S4 shipped over 10 million units, becoming Samsung’s fastest-selling phone. Most of that success came on a different S4 configuration in the US with a 1.9 GHz Qualcomm Snapdragon 600 inside. (More ahead in Chapter 9.)

Reverting to an ARM Mali-T628 MP6 GPU for the next iteration, Samsung enhanced the big.LITTLE configuration in the Exynos 5420. Core speeds were upped to 1.9 GHz for the Cortex-A15s and 1.3 GHz for the Cortex-A7s. They repaired a serious CCI-400 cache coherency bug, and added support for global task scheduling (GTS) to improve performance and power consumption. The beneficiaries of the new chip were the Galaxy Note 3 and the Galaxy Tab S.

At Mobile World Congress 2014, Samsung rolled out the Exynos 5422. Still in 28nm with a 113mm² die, it bumped clock speeds up to 2.1 GHz for the Cortex-A15s and 1.5 GHz for the Cortex-A7s, with the same Mali-T628 MP6 GPU. Added was HMP, or heterogeneous multi-processing, enabling all eight cores to be active concurrently for the first time. With a 16 MP rear camera and computational photography features such as high dynamic range (HDR), the Galaxy S5 again came in both Exynos 5422 and Qualcomm Snapdragon 801 variants.

A brief stop in 20nm was next for the Galaxy Note 4 and the Galaxy Tab S2 in September 2014. Caught between naming schemes, the Exynos 5433 was originally part of the Exynos 5 Octa family. However, its new 64-bit configuration of four Cortex-A57 cores at 1.9 GHz plus four Cortex-A53 cores at 1.3 GHz, along with a Mali-T760 MP6 GPU at 700 MHz, earned it Exynos 7 Octa branding.

Avoiding the Zero-Sum Game

Vertical integration and design innovation have brought Samsung mobile devices on par with any in the world, with just under 21% market share at the close of 2014. Moving from dubious quality and threatened capitulation in 1994 to leadership in mobile devices 20 years later is an amazing story. Despite legal challenges from Apple over who may have had the idea for a smartphone feature first, Samsung’s record shows how design innovation and heavy investment in fab technology recreated the brand and reshaped the mobile industry.

Continued expansion in Texas has kept Samsung fabs at the cutting edge, and kept much of the mobile industry running. The latest 14nm FinFET process in Austin is home to the Apple A9 and the Samsung Exynos 7420, the real Exynos 7 Octa. It may also become home to the Qualcomm Snapdragon 820.

That would be an interesting twist given the drama surrounding the launch of the Samsung Galaxy S6 on March 1, 2015. Most observers expected a version using the Qualcomm Snapdragon 810. Weeks earlier, Qualcomm had reported the loss of “a major customer” in its quarterly earnings call.

Numerous reports surfaced that the Snapdragon 810 was experiencing overheating issues, which Qualcomm denied but never quite disproved. The Galaxy S6 debuted with the Exynos 7420, a tiny 78mm² die with four 2.1 GHz Cortex-A57s and four 1.5 GHz Cortex-A53s, plus a Mali-T760 MP8 at 772 MHz. Found with the Exynos 7420 in the S6 was the Samsung “Shannon” Exynos Modem 333 for 4G LTE baseband, along with Samsung-designed RF and PMIC parts.

More than likely, Samsung has just decided it is ready to move to its own parts. The baseband program has been several years in the making, tracing back to 2011 when their LTE basebands were first spotted. In addition to Shannon stand-alone baseband chips, ModAP is the brand integrating baseband controllers with application processors as part of a custom ASIC business.

Two lessons should be obvious. Samsung will use their own chips, if they have them, to improve availability and margins. They will use somebody else’s chips if that is the most expedient way to qualify and release a device in a particular market – until they can design and build equivalent parts. Qualcomm likely has some content in some Galaxy S6 versions somewhere, but if the Exynos 7420 ships in the US versions of the S6, that would be a significant change.

Questions continue to circulate as to whether Samsung has enough capacity to service all three sources of demand – Apple, Qualcomm, and themselves. It’s a legitimate concern. Samsung has cross-fab flexibility with the Giheung complex and a partnership with GLOBALFOUNDRIES, which helps. An early S6 teardown indicated Exynos 7420 parts came from Albany, pointing to GF as an alternate foundry for some of the first chips produced.

Speaking of alternate foundries, Samsung and TSMC are pitted against each other in the Apple iPhone 6s, each supplying a version of the A9 chip in an undisclosed split. In what was labeled “Batterygate”, viral reports surfaced of a significant difference in power consumption between phones using the two parts, anywhere from 10 to 33% in favor of TSMC. Some social media posts suggested that any phone with a Samsung part was defective and should be returned immediately.

Test conditions for those reports were suspect at best. Apple later issued statements saying it was observing only a 2 or 3% difference. TSMC proponents jumped on the advantages of their 16nm process, particularly its reduced leakage power. Samsung supporters pointed out that the 14nm part, with its smaller die size, is likely cheaper for Apple and that dual-sourcing means higher volumes and fewer shortages.

The fact is that both A9 parts started from the same Apple high-level design, but they differ in more than just process. Libraries vary between Samsung and TSMC. Dynamic voltage and frequency scaling (DVFS) makes it difficult to say precisely what a complex processor is doing. Power and clock domain differences likely exist. In other words, the two parts are the “same”, but different.

Most consumers don’t care what chip is in a phone, right? The uproar seems to have died down, in spite of third-party apps that check which chip is inside an iPhone 6s. Just as Apple solved “Sensorgate” with an iOS update, any major differences between Samsung and TSMC A9 chips may ultimately disappear with an iOS 9 update soon. What Apple does for the iPhone 7, and whether dual-sourcing continues, is worth watching.

The smartphone market is changing. IDC reports annual growth has slowed to around 11%. Slowing growth in smartphones makes competition between Apple, Qualcomm, and Samsung closer to a zero-sum game – when one wins, the other two lose, at least from a high-end application processor view.

Samsung is responding by trying to differentiate its application processors. With both Apple and Qualcomm already having ARMv8-A custom cores, Samsung has chimed in with its own core design codenamed “Mongoose” and recently introduced as Exynos M1.

The Exynos 8 Octa 8890 is the first high-end Samsung part to combine an application processor cluster with a baseband modem on a single chip. The application processor side pairs four Exynos M1 cores with four ARM Cortex-A53 cores in big.LITTLE, fabbed in 14nm FinFET.

Where the Exynos M1 core lands against Qualcomm’s “Kryo” core in performance will be interesting. Initial benchmark results put the Exynos 8 Octa 8890 ahead of the Snapdragon 820 – however, judging Qualcomm on pre-release silicon may be premature. We will know a lot more when the Samsung Galaxy S7 and competing phones with the Qualcomm Snapdragon 820 release in 2016.

A bigger question is how Samsung, and others, continue to innovate in smartphones beyond just more advanced SoCs. There are also other areas of growth, such as smartwatches and the IoT, where Samsung is determined to play. There are me-too features, such as Samsung Pay, and new ideas like wireless charging and curved displays. (More ahead in Chapter 10.)

How this unfolds, with Samsung both supplier and competitor in an era of consolidation for the mobile and semiconductor industries, depends on how Samsung adapts its strategy. Innovations in RF, battery, and display technology will be highly sought after. Software capability is already taking on much more importance. As Chinese firms improve their SoC capability, the foundry business may undergo dramatic changes – and the center of influence may shift again.

History says Samsung invests in semiconductor fab technology and capacity during down cycles preparing for the next upturn. Heavy investments in 3D V-NAND flash, the SoC foundry business, and advanced processes such as 10nm FinFET and beyond are likely to accelerate, and competition with TSMC and other foundries will intensify as fab expenses climb.

