Memory Hierarchy and the Memory Wall

Computer programs spend most of their time moving data around. Along the way they perform computations on that data, but the bulk of execution time and energy is spent on data movement. In computer jargon, we say that such applications are memory bound: memory is the main factor limiting their performance. Many popular applications are memory bound, including Artificial Intelligence, Machine Learning and Scientific Computing.

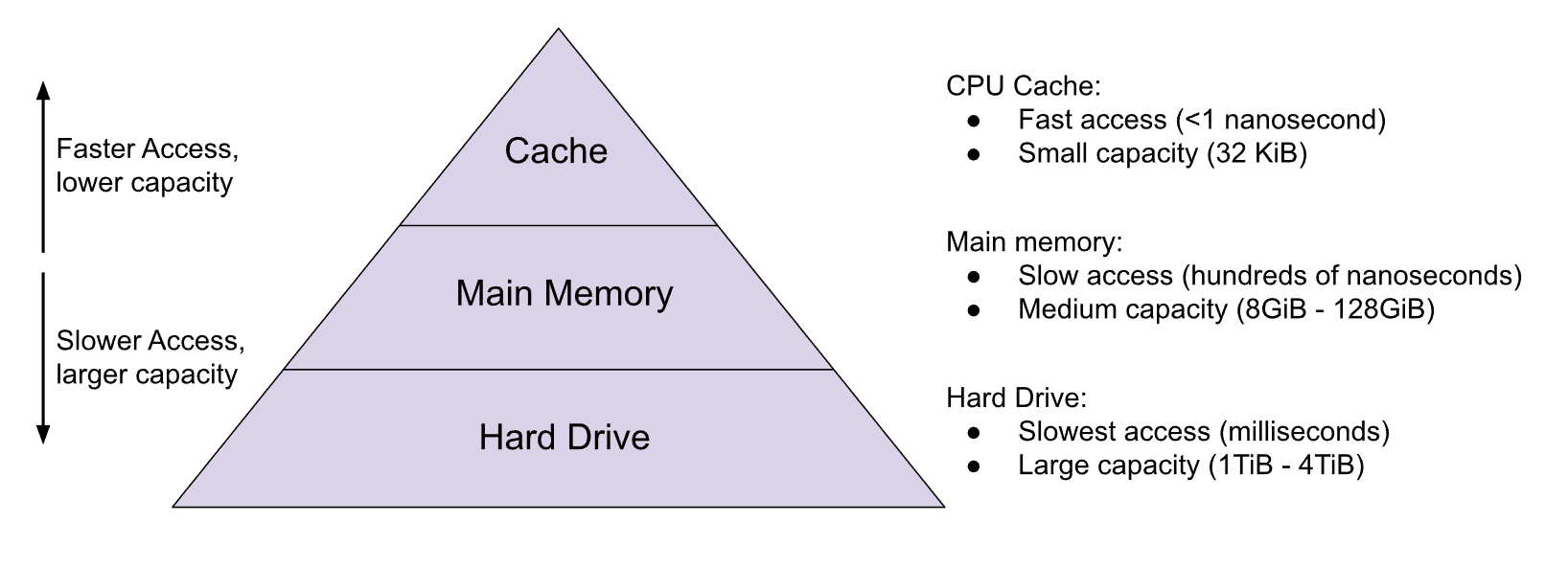

By memory we mean any physical system able to store and retrieve data. In a digital computer, memories are built out of electrical parts, such as transistors or capacitors. Ideally, programmers would like the memory to be fast and large, i.e. they demand quick access to a huge amount of data. Unfortunately, these are conflicting goals. For physical reasons, larger memories are slower and, hence, we cannot provide a single memory device that is both fast and large. The solution that computer architects found to this problem is the memory hierarchy, illustrated in the next figure.

The memory hierarchy is based on the principle of locality. Data accessed recently are very likely to be accessed again in the near future (temporal locality), and data stored close to recently accessed data are likely to be accessed soon (spatial locality). Modern processors exploit locality by keeping recently accessed data in a small, fast cache. Memory requests that find their data in the cache are served at the fastest speed; these accesses are called cache hits. If the data is not in the cache, the request must go to the next level of the memory hierarchy (the Main Memory), which greatly increases the latency of serving it; these accesses are called cache misses. By combining different memory devices in a hierarchy, the system gives the impression of a memory that is as fast as the fastest level (Cache) and as large as the largest level (Hard Drive).
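To make hits and misses concrete, here is a minimal Python sketch of a toy cache. The class name, the fully associative organization, LRU replacement and 64-byte lines are all assumptions chosen for illustration, not a description of any real processor's cache. Walking twice over a 4 KiB array shows both kinds of locality: within each line, 15 of every 16 accesses hit (spatial locality), and the whole second pass hits because the array fits in the cache (temporal locality).

```python
from collections import OrderedDict


class LRUCache:
    """Toy fully associative cache with LRU replacement (illustrative only)."""

    def __init__(self, num_lines: int, line_size: int = 64) -> None:
        self.num_lines = num_lines    # capacity, in cache lines
        self.line_size = line_size    # bytes per line (assumed 64)
        self.lines = OrderedDict()    # line tag -> None, ordered from LRU to MRU
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Look up a byte address; return True on a hit, False on a miss."""
        tag = address // self.line_size
        if tag in self.lines:
            self.lines.move_to_end(tag)       # now the most recently used line
            self.hits += 1
            return True
        self.misses += 1                      # miss: data comes from the next level
        if len(self.lines) == self.num_lines:
            self.lines.popitem(last=False)    # evict the least recently used line
        self.lines[tag] = None
        return False


# Two passes over a 4 KiB array of 1024 four-byte elements.
cache = LRUCache(num_lines=64)                # 64 lines x 64 B = 4 KiB
for _ in range(2):
    for i in range(1024):
        cache.access(i * 4)
print(cache.hits, cache.misses)               # 1984 64
```

Shrinking the toy cache to 32 lines makes the second pass miss on every line as well, because LRU evicts each line just before it is reused; that is the kind of access pattern that makes an application memory bound.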

Cache misses are one of the key factors limiting the performance of memory bound applications. Over the last decades, processor speed has increased at a much faster pace than memory speed, creating the problem known as the memory wall [6]. Because of this disparity, serving a cache miss may take tens or even hundreds of CPU cycles, and the gap keeps widening.
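A standard back-of-the-envelope way to see why miss latency dominates is the average memory access time (AMAT), assuming the latency of every miss is fully exposed to the processor. The numbers below are assumed purely for illustration: a 4-cycle hit time $t_{\mathrm{hit}}$, a 5% miss rate $m$ and a 200-cycle miss penalty $t_{\mathrm{miss}}$.

```latex
\mathrm{AMAT} = t_{\mathrm{hit}} + m \cdot t_{\mathrm{miss}}
             = 4 + 0.05 \times 200
             = 14 \ \text{cycles}
```

Even with 95% of accesses hitting, misses account for 10 of the 14 cycles, and doubling the miss penalty to 400 cycles raises the AMAT to $4 + 0.05 \times 400 = 24$ cycles without any change in the hit rate.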

In a classical cache, whenever a cache miss occurs, the processor will stall until the miss is serviced by the memory. This type of cache is called a blocking cache, as the processor execution is blocked until the cache miss is resolved, i.e. the cache cannot continue processing requests in the presence of a cache miss. In order to improve performance, more sophisticated caches have been developed.

Non-Blocking Caches

When a cache miss occurs, there may be subsequent (younger) requests whose data are available in the cache. If the cache could serve those hits while the miss is being serviced, the processor could keep doing useful work instead of sitting idle. This is the idea behind non-blocking caches [1][2], also known as lockup-free caches: they let the processor continue executing even in the presence of a cache miss.

Modern processors use non-blocking caches that can track only a relatively small number of outstanding cache misses, typically around 16 to 20. Once that limit is reached, the processor stalls and waits for some of the misses to be serviced before it can issue new ones. Although this is a significant improvement over blocking caches, it can still result in long idle times for memory intensive applications.
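The effect of a limit on outstanding misses can be seen with a small timing model. The Python sketch below is a simplification under explicit assumptions, and its function and parameter names are hypothetical: the program does a fixed amount of hit-only work before each miss, all misses are independent of each other, and every miss takes the same latency. `max_outstanding=0` models a blocking cache; a positive value models a non-blocking cache that can track that many misses in flight.

```python
import heapq


def run_time(num_misses: int, work_cycles: int, miss_latency: int,
             max_outstanding: int) -> int:
    """Total cycles for a toy program that alternates useful work with misses.

    Illustrative model only: `work_cycles` cycles of hit-only work precede
    each miss, misses are independent, and every miss takes `miss_latency`
    cycles. max_outstanding == 0 models a blocking cache.
    """
    if max_outstanding == 0:
        # Blocking cache: the core stalls for the full latency on every miss.
        return num_misses * (work_cycles + miss_latency)

    in_flight = []  # min-heap of completion times of issued misses
    now = 0
    for _ in range(num_misses):
        now += work_cycles                      # keep working on cache hits
        if len(in_flight) == max_outstanding:
            # Every miss slot is taken: wait for the earliest miss to return
            # (no stall if it has already completed).
            now = max(now, heapq.heappop(in_flight))
        heapq.heappush(in_flight, now + miss_latency)
    # The program ends once the last outstanding miss has been serviced.
    return max([now, *in_flight])


for slots in (0, 16, 20):
    print(slots, run_time(num_misses=1000, work_cycles=10,
                          miss_latency=200, max_outstanding=slots))
# 0 210000
# 16 12680
# 20 10200
```

With these assumed numbers, 16 slots already cut the run time by more than an order of magnitude compared with a blocking cache, but the core still stalls: 16 misses per 200 cycles is one miss every 12.5 cycles, while the program wants to issue one every 10. Only with 200 / 10 = 20 slots does the core stop stalling in steady state.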

Gazzillion Misses

Our Gazzillion Misses™ technology takes the idea of non-blocking caches to the extreme by supporting up to 128 outstanding cache misses per core. With so many misses in flight, our Avispado and Atrevido cores can avoid sitting idle while main memory returns the data. Furthermore, we can tailor the aggressiveness of Gazzillion Misses to each customer's design targets, providing an efficient area-performance trade-off for each memory system.

There are multiple reasons why Gazzillion Misses results in significant performance improvements:

Solving the Memory Wall

Serving a cache miss is expensive. Main memories are located off chip, on dedicated memory devices based on DDR [3] or HBM [4] technology, so just the round trip to memory takes a non-negligible amount of time. This is especially concerning with the advent of CXL.mem [5], which places main memory even further away from the CPU. In addition, accessing the memory chip itself takes a significant amount of time. Because of the memory wall, an access to main memory costs a large number of CPU cycles, so a CPU quickly becomes idle if it stops processing requests after a few cache misses. Gazzillion Misses has been designed to solve this issue, greatly improving the ability of Avispado and Atrevido cores to tolerate main memory latency.
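How many outstanding misses are needed to hide a given latency follows from Little's law: the average number of misses in flight equals the rate at which they are issued times how long each one takes. The sketch below assumes independent misses issued at a steady rate; the function name and all numbers are hypothetical, chosen only to show the arithmetic, not measurements of any particular memory system.

```python
import math


def misses_to_hide_latency(miss_latency_cycles: float,
                           cycles_between_misses: float) -> int:
    """Little's law: misses in flight = issue rate x latency.

    Returns how many miss slots a core needs so that it never stalls on
    memory, assuming one independent miss every `cycles_between_misses`.
    """
    return math.ceil(miss_latency_cycles / cycles_between_misses)


# Hypothetical core issuing one miss every 4 cycles, first with memory
# 200 cycles away, then with memory twice as far away.
print(misses_to_hide_latency(200, 4))   # 50
print(misses_to_hide_latency(400, 4))   # 100
```

Both results are well beyond the 16 to 20 misses of a conventional non-blocking cache, and the required count grows linearly with latency, which is why moving memory further away from the CPU raises the number of misses a core must keep in flight.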

Effectively Using Memory Bandwidth

Main memory technologies provide high bandwidth, but they need a large number of outstanding requests to use it fully. Main memory is split into multiple channels, ranks and banks, and only many parallel accesses can keep all of them busy. Gazzillion Misses is able to generate a large number of parallel accesses from a small number of cores, effectively exploiting main memory bandwidth.
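The same law, written in bytes, gives the bandwidth-delay product: to keep a memory system busy, the number of cache-line requests in flight must be at least bandwidth × latency / line size. The sketch assumes 64-byte lines, and the example figures are illustrative assumptions: 51.2 GB/s is the peak transfer rate of a 64-bit DDR5-6400 interface (6400 MT/s × 8 bytes), and 100 ns is an assumed loaded latency. The function name is hypothetical.

```python
def lines_in_flight(bandwidth_gb_s: float, latency_ns: float,
                    line_bytes: int = 64) -> float:
    """Bandwidth-delay product expressed in cache lines.

    1 GB/s (10**9 bytes per second) is exactly 1 byte per nanosecond, so
    bandwidth_gb_s * latency_ns is the number of bytes that must be in flight.
    """
    return bandwidth_gb_s * latency_ns / line_bytes


one_channel = lines_in_flight(51.2, 100)        # about 80 lines
four_channels = lines_in_flight(4 * 51.2, 100)  # about 320 lines
print(round(one_channel), round(four_channels))  # 80 320
```

Under these assumptions, a single core limited to 16 to 20 outstanding misses cannot keep even one such channel fully busy, and a multi-channel system multiplies the requirement.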

A Perfect Fit for Vectorized Applications

Vectorized codes put high pressure on the memory system. Scatter/gather operations, such as indexed vector load/store instructions, can generate a large number of cache misses from just a few vector instructions. Hence, tolerating a large number of misses is key to delivering high performance in vectorized applications. A paradigmatic example is sparse (i.e. pruned) Deep Neural Networks [7], which are well known for irregular memory access patterns that result in a large number of cache misses. Gazzillion Misses is a perfect solution for such applications.
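Whether a single indexed load causes one miss or many depends on how many distinct cache lines its elements fall in. The hypothetical helper below counts them for one gather, assuming 4-byte elements, 64-byte lines and a 16-element vector (for example a 512-bit register holding 32-bit values); it is an illustration, not a model of any specific vector unit.

```python
from typing import Iterable


def lines_touched(base: int, indices: Iterable[int], elem_bytes: int = 4,
                  line_bytes: int = 64) -> int:
    """Number of distinct cache lines read by a gather of base[indices]."""
    return len({(base + i * elem_bytes) // line_bytes for i in indices})


unit_stride = list(range(16))                 # a contiguous vector load
sparse = [i * 1000 for i in range(16)]        # e.g. widely scattered nonzeros
print(lines_touched(0, unit_stride))          # 1
print(lines_touched(0, sparse))               # 16
```

The unit-stride load fits in one line and causes at most one miss, whereas the scattered load touches 16 lines and, if none of them is cached, generates 16 misses from a single instruction, so a couple of such instructions can exhaust a 16 to 20 entry miss budget.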

What does this have to do with Leonardo Da Vinci?

To better illustrate Gazzillion Misses, we would like to borrow an analogy from the classical textbook “Computer Organization and Design” [8]. Suppose you want to write an essay about Leonardo Da Vinci and, for some reason, you do not want to use the Internet, Wikipedia or just tell ChatGPT to write the essay for you. You want to do your research the old-fashioned way, by going to a library, either because you feel nostalgic or because you enjoy the touch and smell of books. You arrive at the library and pull out some books about Leonardo Da Vinci, then you sit at a desk with your selected books. The desk is your cache: it gives you quick access to a few books. It cannot store all the books in the library, but since you are focusing on Da Vinci, there is a good chance that you will find the information that you need in the books in front of you. This capability to store several books on the desk close at hand saves you a lot of time, as you do not have to constantly go back and forth to the shelves to return a book and take another one. This is similar to having a cache inside the processor that contains a subset of the data.

After spending some time reading the books in front of you and writing your essay, you decide to include a few words on Da Vinci’s study of the human body. However, none of the books on your desk mention Da Vinci’s contributions to our understanding of human anatomy. In other words, the data you are looking for is not on your desk: you just had a cache miss. Now you have to go back to the shelves and look for a book that contains the information you want. During this time you are not making any progress on your essay; you are just wandering around. This is what we call idle time: you stop working on your essay until you locate the book you need.

You can be more efficient by leveraging the idea of non-blocking caches. Let’s assume that you have a friend who can locate the book and bring it to you while you continue working on your essay. Of course, you cannot write about anatomy because you do not have the required book, but you have other books on your desk that describe Da Vinci’s paintings and inventions, so you can keep writing. This way you avoid stopping your work on a cache miss, reducing idle time. However, if your friend takes a long time to locate the book, or you soon need several other books as well, at some point you will again be idle, waiting for your friend to come back.

This is where Gazzillion Misses comes in handy. Our Gazzillion technology gives you 128 friends who run up and down the library, looking for the books you will need for your essay and making sure that, whenever you require a given book, it is already on your desk.

To sum up, our Gazzillion Misses technology has been designed to effectively tolerate main memory latency and to maximize memory bandwidth usage. Due to its unprecedented number of simultaneous cache misses, our Avispado and Atrevido cores are the fastest RISC-V processors for moving data around.

Further information at www.semidynamics.com

References

[1] Kroft, D. (1981). Lockup-free instruction fetch/prefetch cache organization. Proceedings of the 8th International Symposium on Computer Architecture, May 1981, pp. 81-87.

[2] Belayneh, S., & Kaeli, D. R. (1996). A discussion on non-blocking/lockup-free caches. ACM SIGARCH Computer Architecture News, 24(3), 18-25.

[3] DDR Memory: https://en.wikipedia.org/wiki/DDR_SDRAM

[4] High Bandwidth Memory: https://en.wikipedia.org/wiki/High_Bandwidth_Memory

[5] Compute Express Link: https://en.wikipedia.org/wiki/Compute_Express_Link

[6] Memory Wall: https://en.wikipedia.org/wiki/Random-access_memory#Memory_wall

[7] Han, S., Mao, H., & Dally, W. J. (2015). Deep compression: Compressing deep neural networks with pruning, trained quantization and Huffman coding. arXiv preprint arXiv:1510.00149.

[8] Patterson, D. A., & Hennessy, J. L. (2013). Computer Organization and Design: The Hardware/Software Interface. Morgan Kaufmann Series in Computer Architecture and Design. Morgan Kaufmann Publishers.

Gazzillion Misses is a trademark of Semidynamics
